The Preface

One of my earlier failed experiments on Depiction Offset involved a superimposed grid like this.

So the reason behind why this didn't work in the original attempts nearly a year ago is actually pretty straightforward in the end.

I didn't know why the dims did what they did, so I just set what people suggested.

I didn't know what alpha did at all, so I just guessed at some values and then ran a bunch of trainings trying to make it work.

I was basically trying to monkey typewriter the thing. This can yield some valid results when bulk training enough data, as shown with many large scale AI trainings with billions of parameters, and many other modern AI experiments; but I don't have grand scale hardware, nor do I have grand scale data. I was trying to shove a little tiny bit of data into a Cruiseliner engine and call it gas.

It goes in the square hole yes, but not all shapes simply FIT in the square hole. There are... many other shapes.

This the preface to the grid concept in SDXL-Simulacrum

My first experiments were on depiction offset, and then multiple grid trainings that worked okay, and then finally the full SDXL-Simulacrum that has superior grid control of many elements on many positions in many ways.

It does NOT have enough data, so I devised a way to improve it with what it already has; the grid imprint.

I'm under the fairly unproven impression, that all CLIP-based models are intelligent to a degree beyond what people understand, and that my CLIP_L and CLIP_G models are trained with timestep derivations; which provides them additional accessor points, details, and data than the average CLIP_L and CLIP_G based models.

This isn't an additional piece to that, but it definitely showcases the power of training a CLIP-BASED model on SDXL.

The Hypothesis

My hypothesis is simple; imprinting a 2d shape overlay on a 3d structure based on a dimensional strength and high degree alpha depth, will allow a substantially stronger introduction of screen control when attempting to generate controlled depicted images in not only a grid format, but also in many many other formats simultaneously.

The Experiment

I took the same grid data as before, and didn't even bother altering the captions. I figured that the captions would work with minimal tweaking, so I handed that off to the CIVIT tagger.

Not too many images, and one that wasn't even tagged. I captioned it.

256x256, 1024x1024, and so on. All specifically grid formatted.

Many of the specific icons were formatted with random shapes using a simple workflow in comfyui, which was essentially noise random; don't look too far into this one for any special math. It's literally just noise random with random shapes on seed 420. Those are all labeled to reduce or increase chaotic outcome.

Dim 2

Alpha 128

Why alpha 128 you probably wonder, why did I even bother?

Well, the idea here is simple; we only really need a couple dimensions for this grid;

The fixation of what you want the grid to do

The outcome from the intention of the grid

So, in this case all these accessors will enable entirely different untested subsystems, and it's very interesting to see them in action due to the noise introduction and shapes post training.

Identification of grids, involves many detailed elements; you can't simply say OH LINES. Yeah... lines work sure, but if you have nothing within the lines, you just get lines.

If you don't teach the lines with enough depth, it won't even have an effect. Basically trash can lora.

HOWEVER, in this case the idea was to teach CLIP CHANGES specific to this orientation. Teaching the CLIPS how to peek for stuff, and then the depths they reach within the lora.

The outcome.

I determined that; based on all the various important elements built into SDXL-Simulacrum, that the trained CLIP_L and CLIP_G were more than expert enough at this concept to superimpose something powerful and train it onto the clips.

The outcome is definitely something potent, and the plain English captions are powerful in this sense; providing not only grids but grafting grids onto the plain English sections of the model in carefully organized fashions. Enabling them when colors are expected, shapes are expected, and so on.

Simply adding them where colors are expected, is enough to fully enhance any prompt where any of the trained colors are used; including grey, black, and so on.

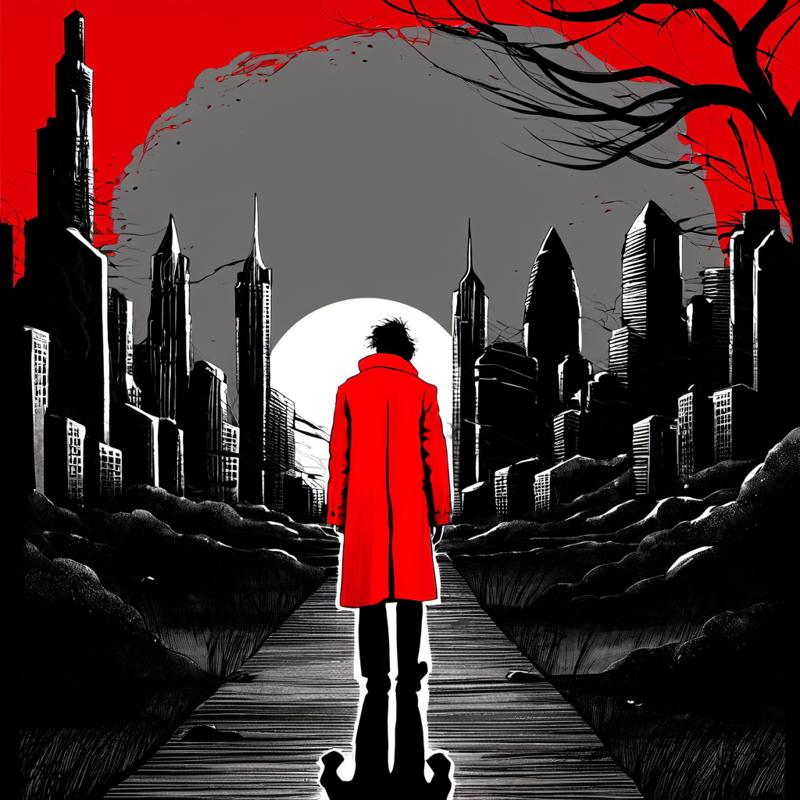

a beautiful cybernetic future full of dystopian and dark tall buildings in the style of scary stories, dark monochrome thick black lined greyscale, messy and distorted, tendrils,

a lone man wanders, he is the only off-colored part of the image with his red coat,

<lora:Grid_Overlay_grid-000001:1>Without the grid;

-

Grid On

The artistic power of SDXL-Simulacrum isn't to be understated; I chose some of the best art in the world to improve SDXL's already best art in the world dataset.

As you can see, the art style of everything colored was flattened quite a bit, shading a bit removed, and so on. A lot of detail removed, and this is a byproduct of the GRID lora.

Lets break it down now; How many dimensions did we change.... 2.

Alright, red coat is clearly the fixation of that, and it gave us a human outline below that. Simple.

Lets run it again, at half strength.

Gorgeous. It adapted more of the "scary stories" vibe; while still applying the necessary style cross contamination, and superimposing new elements; all because I loaded the lora.

The clips understand a good amount of English. CLIP_L and CLIP_G both do, just not as much as T5. T5 is a master of English vs the other two; so training things like this in the T5 requires T5 attention masking.

Lets do a few more to test the outcomes for solidity.

0

0.5

1

Lets run this last one specifically with the grid tag, rather than not using it.

a beautiful cybernetic future full of dystopian and dark tall buildings in the style of scary stories, dark monochrome thick black lined greyscale, messy and distorted, tendrils,

a lone man wanders, he is the only off-colored part of the image with his red coat,

a grid depiction of a landscape with a lone person,

<lora:Grid_Overlay_grid-000001:1>1

0.5

0

We now have evidence of the hypothesis, the outcome has shifted; providing additional control to the artistic outcome of the model.

More experiments in the future. Consult my various articles for generation params, tag guides, and more.