Could a model that was originally trained on SD 1.4, like NAI or Anything V3, or a checkpoint mix that relies heavily on SD 1.4-trained models, benefit from a rebase to SD 1.5?

First things first, what the actual fuck do I mean by "model merge rebase"?

Let's say that Anything V3 was originally trained from the weights of the full EMA version of Stable Diffusion 1.4, or from any model that itself used SD1.4 as a base. Since SD1.4 and SD1.5 share the same architecture, and the latter is an updated version capable of reproducing more concepts, we would get an updated Anything V3 if we could take everything that was learned during training and transfer it to SD1.5. Translating all this into an equation:

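$$\text{rebased model} = \text{new base} + \alpha \cdot (\text{trained model} - \text{old base})$$

In the case of Anything V3:

$$\text{Anything V3}_{\text{rebased}} = \text{SD1.5} + \alpha \cdot (\text{Anything V3} - \text{SD1.4})$$

α = how close to the trained model we want our updated model to be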

That's where the merging process comes in handy. Using SuperMerger or sd-meh, we can merge all 3 models with the "Add difference" method: extract the trained weights from Anything V3 by removing all the base knowledge from SD1.4, then merge the resulting weights into SD1.5.
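To make the idea concrete, here is a minimal sketch of an "Add difference" rebase, computing new base + α × (trained − old base) key by key. It is not the code SuperMerger or sd-meh actually run: the function name `add_difference`, the layer name and the toy numbers are all made up for illustration, and real checkpoints hold tensors rather than plain Python lists.

```python
def add_difference(new_base, trained, old_base, alpha=1.0):
    """Return new_base + alpha * (trained - old_base), key by key.

    Each "checkpoint" is a dict mapping a layer name to a flat list of floats
    (an assumption for this sketch; real checkpoints store tensors).
    """
    merged = {}
    for key, base_w in new_base.items():
        if key in trained and key in old_base:
            merged[key] = [
                b + alpha * (t - o)
                for b, t, o in zip(base_w, trained[key], old_base[key])
            ]
        else:
            # Keys missing from either other model are copied from the new base.
            merged[key] = list(base_w)
    return merged


# Toy stand-ins for the three models (hypothetical layer name and values).
sd14 = {"layer.weight": [1.0, 2.0, 3.0]}
anything_v3 = {"layer.weight": [1.5, 1.0, 3.0]}  # SD1.4 plus what training changed
sd15 = {"layer.weight": [1.25, 2.5, 2.0]}

rebased = add_difference(sd15, anything_v3, sd14, alpha=1.0)
print(rebased)  # {'layer.weight': [1.75, 1.5, 2.0]}
```

With alpha = 0 the result is just SD1.5, and with alpha = 1 the full training difference is applied on top of it.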

Is there any benefit in doing all this?

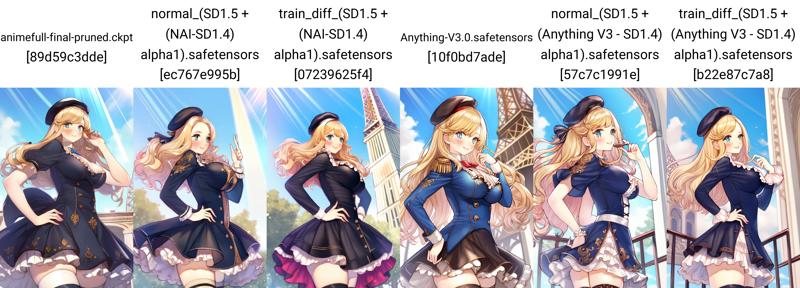

I think the benefits aren't that clear, but while testing with NAI, Anything V3, Based64 and Counterfeit V3, the images from the rebased models seemed to have more complex backgrounds and more detailed textures, and the models seemed to grasp some concepts better. At the same time, hands seemed to get worse, shadows and depth looked less pronounced, and in some merges the images got more realistic even at alpha = 1.

For instance:

Positive:

masterpiece, best quality, highest quality, highly detailed, beautiful, intricate details, absurdres,

1girl, solo, blonde hair, long hair, hair intakes, blue eyes, smiling, blush, large breasts,

frilled dress, thighhighs, beret,

looking up, hand on hip, hand up, arm up,

BREAK

outdoors, eiffel tower, day, light rays,

BREAK

soft focus,

Negative:

(low quality, worst quality:1.4),

badhandv4, [(ng_deepnegative_v1_75t :0.9) :0.1],

blurry, out of focus,

multiple views, inset,

disembodied limb, extra digits, fewer digits,

Steps: 26, Sampler: Euler a, CFG scale: 8, Seed: -1, Size: 512x768, Denoising strength: 0.25, Clip skip: 2, ENSD: 31337, Token merging ratio hr: 0.5, RNG: CPU, Hires upscale: 2, Hires steps: 10, Hires upscaler: 4x-AnimeSharp, Version: v1.3.1

More grids using wildcards: #1, #2, #3, #4

What about lora compatibility, is it screwed?

Compatibility for inference doesn't seem to change much, but some merges performed worse when paired with some loras. Here are some examples using 2 of my homebrew style loras:

Lora 1

Lora 2

More grids using wildcards: #1, #2, #3, #4, #5, #6, #7, #8

Does it improve compatibility with loras trained on SD1.5 or on photorealistic subjects?

I'm still testing, but some loras that don't seem to work at all when paired with anime models seem to reproduce the likeness of the photorealistic subject better on the rebased models, although image quality drops a little or the images start to look fried.

Belle Delphine by DiffusedIdentity:

Katherine Winnick by dogu_cat:

Conclusions:

There isn't much reason to run the full model merge rebase procedure yet, aside from situations where you want the new model to work with concepts that SD1.5 handles better, or you want a little more photorealism in your images (by changing alpha or using block weight merging).

A better scenario would be a new model trained from scratch on anime pictures using SD1.5 as a base, but with the community shifting its focus to SDXL, there will hardly be any new anime-trained checkpoints.
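As a rough illustration of what "block weight merging" means here, the sketch below uses a different alpha depending on which part of the UNet a key belongs to. The grouping into just BASE / IN / MID / OUT is a simplification I'm assuming for the example (real tools split the UNet into finer-grained blocks), and `block_of`, `rebase_by_block` and the toy weights are hypothetical names and values.

```python
def block_of(key):
    """Map a checkpoint key to a coarse block name (simplified grouping)."""
    if "input_blocks" in key:
        return "IN"
    if "middle_block" in key:
        return "MID"
    if "output_blocks" in key:
        return "OUT"
    return "BASE"  # everything outside the UNet blocks, e.g. the text encoder


def rebase_by_block(new_base, trained, old_base, block_alphas):
    """Add difference, with alpha looked up per block instead of globally."""
    merged = {}
    for key, base_w in new_base.items():
        alpha = block_alphas[block_of(key)]
        merged[key] = [
            b + alpha * (t - o)
            for b, t, o in zip(base_w, trained[key], old_base[key])
        ]
    return merged


# Toy weights; the key names only mimic the SD1.x UNet naming scheme.
k_in = "model.diffusion_model.input_blocks.0.weight"
k_out = "model.diffusion_model.output_blocks.0.weight"
old_base = {k_in: [0.0, 0.0], k_out: [0.0, 0.0]}
trained = {k_in: [1.0, 2.0], k_out: [1.0, 2.0]}
new_base = {k_in: [0.5, 0.5], k_out: [0.5, 0.5]}

# Keep the full trained difference in the input blocks, half of it in the output blocks.
alphas = {"BASE": 1.0, "IN": 1.0, "MID": 1.0, "OUT": 0.5}
merged = rebase_by_block(new_base, trained, old_base, alphas)
print(merged[k_in])   # [1.5, 2.5]
print(merged[k_out])  # [1.0, 1.5]
```

Lowering alpha only for some blocks is one way to lean certain parts of the model closer to SD1.5 while keeping the rest close to the trained model.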

Further work:

There is still a lot to be tested regarding the resulting models' compatibility with loras.

There are clearly improvements to be made in the merging process, such as merging the models using sd-meh and rebasing the weights. While we are at it, it would be cool to test the effects of weights_clip and of other merging methods like similarity_add_difference, weighted_subtraction and multiply_difference.
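For reference, my understanding of weight clipping is that it clamps every merged weight to the range spanned by the corresponding weights of two input models, so the merge can't overshoot them. The sketch below shows that idea only; it is an assumption about the behaviour, not sd-meh's actual code, and `clip_to_parents` and the toy values are made up.

```python
def clip_to_parents(merged, model_a, model_b):
    """Clamp each merged weight into [min(a, b), max(a, b)] elementwise.

    Assumed behaviour of a weights-clipping step, written with plain lists of
    floats instead of tensors.
    """
    clipped = {}
    for key, weights in merged.items():
        if key in model_a and key in model_b:
            clipped[key] = [
                min(max(x, min(a, b)), max(a, b))
                for x, a, b in zip(weights, model_a[key], model_b[key])
            ]
        else:
            # Nothing to clip against, keep the merged values as they are.
            clipped[key] = list(weights)
    return clipped


# Toy example: the first two merged values overshoot the range of the inputs.
model_a = {"w": [0.0, 0.0, 0.0]}
model_b = {"w": [1.0, 1.0, 1.0]}
overshoot = {"w": [2.0, -1.0, 0.5]}

print(clip_to_parents(overshoot, model_a, model_b))  # {'w': [1.0, 0.0, 0.5]}
```

Since add difference can push weights outside the range of the inputs, this would be a natural thing to compare against the unclipped rebase.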

With a stable anime base model that performs as well as NAI or Anything V3, we could also test how well those rebased models perform at training new loras.

This theory doesn't only apply to rebasing onto the Stable Diffusion foundation models; it should also be useful for extracting features from models like AbyssFuta and MonsterCock (muh models can't draw good futanaris ugu) and transferring them onto a better foundation for further merging.

Part of the block weight merging and the search for the best parameters can be automated with bayesian merger, given the right prompts for triggering all the characteristics to be evaluated (composition, anatomy, photorealism, lora compatibility, fidelity to the original model, etc.).