I'm onto a chain of research that might lead to something fruitful with this. There is a series of papers based on interpolation leading up to the formation of Hunyuan, and the Hunyuan papers are fairly well cited, so the chain should yield some fruit.

I'll keep at it. There are multiple intermingled processes here.

It SEEMS MANY Flux loras have little to no impact on Hunyuan, while many others, mine included, have a massive impact, sometimes entirely altering the core of the model.

It might have something to do with the CLIP_L and sentencepiece, but I haven't had good results with other CLIP_L trained loras either, so for now I don't have much new information, other than that I'm following the trail.

Alright I released the Schnell I was testing with. Have at it.

So I'm taking it easy right now, not doing any heavy research... but I felt like firing up Hunyuan and playing with it a bit.

I got to reading the Hunyuan block information, and thought to myself: oh this is... this is nearly identical to Flux, except the dual blocks are purposed for something else, and there is a series of offshoot sub-systems that seem to perform new sorts of behaviors. I assume the reuse of the dual blocks is based on some sort of interpolation overlapping conceptual attention compartmentalizers, but I really couldn't get a good answer to that by reading the documentation.

SO I went and read the model itself, and sure enough they used some of the same exact Flux model code that I myself have experimented on... on multiple occasions. Not only that, but it's tagged SD3 and/or BlackForestLabs in multiple places.

So I got that itch in the back of my neck, right: what happens if I just... y'know, load my Simulacrum Schnell lora? It IS heavily based on timestep training, and does actually reflect outcome based on a fair amount of CFG.

Now I HAVE to release the newest one.

It kinda bugged me for a minute until I got it all loaded up and tried it.

OMEGA_24_CLIP_L

LLAMA whatever... then I shoved my Schnell Lora into the square hole...

Well it didn't QUITE work at first, but it also... didn't not work. It worked in multiple ways that were unexpected.

"OKAY this can't possibly be real, this can't be working like this." I said out loud to myself, and then y'know I just kinda cranked up the lora to 2x on the unet. Totally burned the next video, but the shape... stayed. Like the human form stayed.

I read a little bit more on the subject right, trying to figure out which blocks did what, and I couldn't find a definitive answer... I'm pretty sure they don't have a damn clue. One person or a couple people somewhere knew exactly how to put this together, and then a bunch of others collaborated and made it happen. That's what this model reeks of.

So I tried loading up JUST THE single blocks, using the same hunyuan lora loader I was using before.

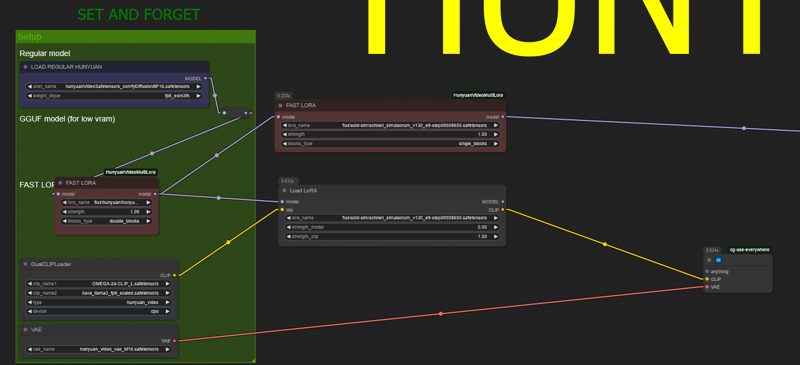

SO I ended up loading them like this.

Well... that was WAY more cohesive. Leaving the double blocks alone is apparently a big factor in how well the interpolation holds up. Messing with them too much kinda messes up the model.
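For anyone who wants to try the single-blocks-only idea outside of a node graph, here is a minimal sketch of the filtering step. It assumes the LoRA is a plain dict of key to tensor and that block membership can be read off the key name. The markers `single_blocks` and `double_blocks` and the example keys below are assumptions for illustration, not something confirmed against any particular loader, so check the keys in your own file first.

```python
def split_lora_by_block(state_dict,
                        single_marker="single_blocks",
                        double_marker="double_blocks"):
    """Split a LoRA state dict into (single, double, other) by key name.

    The marker strings are assumptions; different trainers and loaders
    name their blocks differently, so pass your own markers if needed.
    """
    single, double, other = {}, {}, {}
    for key, tensor in state_dict.items():
        if single_marker in key:
            single[key] = tensor
        elif double_marker in key:
            double[key] = tensor
        else:
            other[key] = tensor
    return single, double, other


# Hypothetical keys with placeholder values standing in for real tensors.
example_lora = {
    "lora_unet_single_blocks_0_linear1.lora_down.weight": "A",
    "lora_unet_single_blocks_0_linear1.lora_up.weight": "B",
    "lora_unet_double_blocks_0_img_attn_qkv.lora_down.weight": "C",
    "lora_te1_text_model_encoder_layers_0_mlp_fc1.lora_down.weight": "D",
}

single_only, double_only, leftovers = split_lora_by_block(example_lora)
print(sorted(single_only))
```

Only `single_only` would then be handed to whatever applies the LoRA. With real files, the dict could come from `safetensors.torch.load_file` and the filtered result could be written back out with `safetensors.torch.save_file`.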

So I loaded ONLY the single blocks, and I shoved maybe 5 loras in there, seeing what sort of shenanigans I could get up to... Suffice it to say, the Flux loras work, especially mine.
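As a rough picture of what stacking several loras on one layer does, here is a small sketch using plain Python lists as matrices. It assumes the common convention where each lora adds `strength * (alpha / rank) * (up @ down)` to the base weight and the deltas from different loras simply sum. The function names and the toy numbers are made up for illustration, and real loaders may scale or merge differently.

```python
def lora_delta(down, up, alpha):
    """Compute (alpha / rank) * (up @ down) for list-of-lists matrices.

    down has shape (rank x in_features), up has shape (out_features x rank).
    """
    rank = len(down)
    scale = alpha / rank
    in_features = len(down[0])
    return [
        [scale * sum(up_row[k] * down[k][j] for k in range(rank))
         for j in range(in_features)]
        for up_row in up
    ]


def stack_loras(weight, loras):
    """Add every lora delta, scaled by its own strength, onto a copy of weight.

    loras is a list of (down, up, alpha, strength) tuples.
    """
    merged = [row[:] for row in weight]
    for down, up, alpha, strength in loras:
        delta = lora_delta(down, up, alpha)
        for i, row in enumerate(delta):
            for j, value in enumerate(row):
                merged[i][j] += strength * value
    return merged


# Toy 2x2 layer with two rank-1 loras; the second is applied at 2x strength.
base = [[1.0, 0.0], [0.0, 1.0]]
lora_a = ([[1.0, 2.0]], [[0.5], [1.0]], 1.0, 1.0)
lora_b = ([[1.0, 0.0]], [[1.0], [0.0]], 1.0, 2.0)

merged = stack_loras(base, [lora_a, lora_b])
print(merged)
```

Because the deltas are additive, five loras at full strength can push a layer a long way from its base weights, which is one plausible reason restricting them to a subset of blocks keeps things more stable.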

Double blocks seem to house a large amount of human anatomy and structural responses to interpolation, while the single blocks seem to house more of the direct situation-to-situation interpolations. The single blocks also seem to house a great deal of information about color, shape, depiction, lighting, and so on.

Eh I'll need to make a full mockup... I just don't have the time today.