Hunyuan has released its official image-to-video model.

It can also be trained for image-to-video LoRAs.

Workflow Pack: https://civitai.com/models/1013919 (v4 onwards)

You will need:

Checkpoint:

(Native BF16)

V1

https://huggingface.co/Comfy-Org/HunyuanVideo_repackaged/resolve/main/split_files/diffusion_models/hunyuan_video_image_to_video_720p_bf16.safetensors

V2

https://huggingface.co/Comfy-Org/HunyuanVideo_repackaged/resolve/main/split_files/diffusion_models/hunyuan_video_v2_replace_image_to_video_720p_bf16.safetensors

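Place inside ComfyUI\models\diffusion_models\ (these files are diffusion models only, not full checkpoints, so they are loaded with the Load Diffusion Model node).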

(GGUF UNET)

https://huggingface.co/city96/HunyuanVideo-I2V-gguf/tree/main

Recommended (system dependent, file sizes shown):

Q8_0 = 14GB

Q5_K_M = 9.43GB

Q4_K_M = 7.86GB

Place inside ComfyUI\models\unet\
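If you are unsure which quant to grab, here is a rough Python sketch that picks the largest of the three files listed above that fits your GPU. The sizes come straight from the list above. The `pick_quant` helper and the 4 GB `headroom_gb` default are my own illustrative assumptions, and file size is only a rough proxy for real VRAM use (resolution, frame count, and the other models all add to it), so treat the result as a starting point.

```python
# File sizes in GB as listed in this article.
# Disk size is only a rough proxy for actual VRAM use.
QUANT_SIZES_GB = {"Q8_0": 14.0, "Q5_K_M": 9.43, "Q4_K_M": 7.86}


def pick_quant(vram_gb, headroom_gb=4.0):
    """Return the largest listed quant whose file fits in the VRAM budget.

    headroom_gb is an assumed allowance for latents, VAE and other overhead;
    it is a guess, not a measured value. Returns None if nothing fits.
    """
    budget = vram_gb - headroom_gb
    fitting = {name: size for name, size in QUANT_SIZES_GB.items() if size <= budget}
    if not fitting:
        return None
    # Larger file = less aggressive quantisation, so prefer the biggest that fits.
    return max(fitting, key=fitting.get)


if __name__ == "__main__":
    for vram in (24, 16, 12, 8):
        print(f"{vram} GB VRAM -> {pick_quant(vram)}")
```

If it returns None, none of the three listed files fit inside the assumed budget, and you would need to look at smaller quants in the repository or rely on offloading.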

Clip Vision Model:

https://huggingface.co/Comfy-Org/HunyuanVideo_repackaged/resolve/main/split_files/clip_vision/llava_llama3_vision.safetensors

Place inside ComfyUI\models\clip_vision\
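Before loading a GGUF workflow, it can help to confirm the files landed in the right folders. The sketch below is a minimal check under a few assumptions: `check_gguf_setup` is a hypothetical helper name, it accepts any `.gguf` file in `models/unet` because the exact GGUF filename depends on the quant you chose, and it does not look at the text encoder or VAE.

```python
from pathlib import Path


def check_gguf_setup(comfy_root):
    """Return a list of problems found; an empty list means both files were found.

    Only checks the GGUF UNET folder and the CLIP vision model from this article.
    """
    models = Path(comfy_root) / "models"
    problems = []

    # Any .gguf file counts, since the filename varies with the chosen quant.
    unet_dir = models / "unet"
    if not (unet_dir.is_dir() and any(unet_dir.glob("*.gguf"))):
        problems.append("no .gguf file found in models/unet")

    # The CLIP vision filename is taken from the download link above.
    clip_vision = models / "clip_vision" / "llava_llama3_vision.safetensors"
    if not clip_vision.is_file():
        problems.append("missing models/clip_vision/llava_llama3_vision.safetensors")

    return problems


if __name__ == "__main__":
    # "ComfyUI" is a placeholder; point this at your own install folder.
    issues = check_gguf_setup("ComfyUI")
    print("\n".join(issues) if issues else "GGUF UNET and CLIP vision model found")
```

An empty result only means the files exist where the loader nodes are expected to look. It says nothing about whether the downloads are complete or uncorrupted.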

The text encoder and VAE are unchanged; refer to my earlier article on Hunyuan Video (text to video).

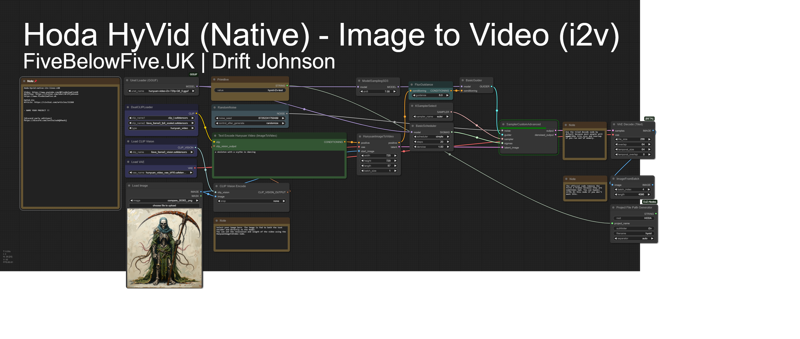

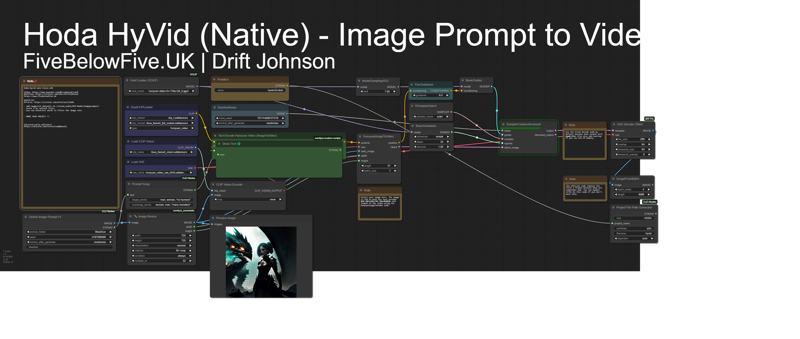

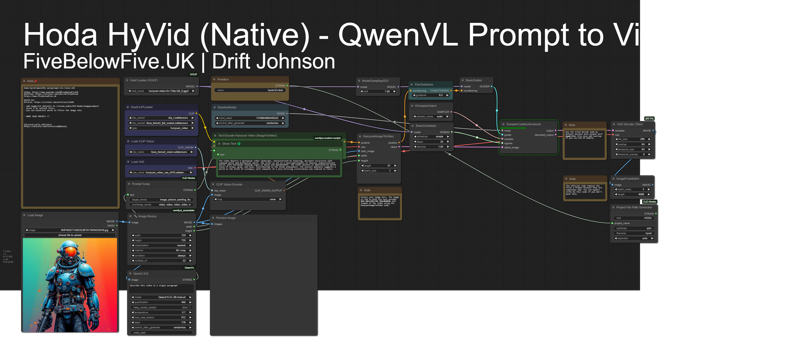

WORKFLOWS: (GGUF)