The captions used for these 600 were primarily randomly generated using the earlier form of the caption randomizer, and then fed into the T5-Flan instruct for summarization.

I tested 5 systems:

Deepseek 8b - terrible, worst captions by far.

Legitimately uncertain why people think this thing is smart. It basically produced lobotomized garbage.

LLAMA 3b? - really not bad, but it liked to include THE EXPLANATION alongside the caption. Very annoying, so its outputs can't be used as-is.

It had no idea what the rule of 3 is.

T5-base - okay responses, not very good though.

The prompt used was:

Compress and keep visual clues and object positions:

T5 Instruct - the only one that was fast enough to work and produce fair summarizations.

Compress and keep visual clues and object positions:

GPT4o for generating captions was good, but slower than the T5 due to token limits. Either that, or shell out money for the API, which costs more than I'm willing to pay due to the high complexity.
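A minimal sketch of how the T5 summarization pass could be wired up with the prompt above. The checkpoint name `google/flan-t5-base`, the helper names, and the `max_new_tokens` value are assumptions for illustration, not the exact setup used for these 600.

```python
PROMPT_PREFIX = "Compress and keep visual clues and object positions: "


def build_prompt(raw_caption: str) -> str:
    """Collapse stray whitespace and prepend the instruction prefix."""
    return PROMPT_PREFIX + " ".join(raw_caption.split())


def summarize(captions, model_name="google/flan-t5-base", max_new_tokens=64):
    """Summarize a batch of raw captions with a seq2seq T5 checkpoint.

    model_name is an assumed checkpoint; swap in whichever T5 variant you use.
    """
    # Imported lazily so build_prompt works without transformers installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)

    prompts = [build_prompt(c) for c in captions]
    inputs = tokenizer(prompts, return_tensors="pt", padding=True, truncation=True)
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.batch_decode(outputs, skip_special_tokens=True)
```

Calling `summarize(["a man in a red coat standing to the left of a parked car at night"])` returns a list with one compressed caption; the first call downloads the checkpoint.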

For vision and identification post-generation for the chaotic Flux 1S potential poisons:

GPT 4o - the best by far, also the most expensive. I only used it as proof of concept, as it would cost nearly $1000 to caption the 100k images I want, even low quality captions.
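A back-of-the-envelope check on that figure: roughly $1000 for 100k images works out to about a cent per caption. The sketch below only does that division. Both inputs are the rough numbers quoted above, not measured API pricing, and the 600-image line is an extrapolation at the same rate.

```python
def cost_per_image(total_cost_usd: float, image_count: int) -> float:
    """Average captioning cost per image."""
    return total_cost_usd / image_count


def projected_cost(per_image_usd: float, image_count: int) -> float:
    """Cost of captioning image_count images at a flat per-image rate."""
    return per_image_usd * image_count


# Rough figures from the text: about $1000 for 100k images.
per_image = cost_per_image(1000, 100_000)
print(f"${per_image:.2f} per image")
print(f"${projected_cost(per_image, 600):.2f} for a 600-image batch at the same rate")
```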

SigLIP is our go-to, and it looks like attaching it to the big cheese itself, JoyCaption, is the way to go, so the curated datasets will be run through SigLIP and checked using a series of accuracy tests based on the outcomes and the image detections themselves.

For these 600 I went with the T5 because, other than GPT 4o, it was the only one that didn't require additional curation.

On my huggingface you'll see the preliminary 10m captions generated using the random-gen noise captions.

https://huggingface.co/datasets/AbstractPhil/random-captions-10m

I've prepared 1b BETTER and more carefully curated captions, but they're not in the correct format, so I'll need to reformat the 10m dataset and then reformat the 1b dataset to make sure they're compliant with the csv standards put forth by huggingface.
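A minimal sketch of the kind of reformatting pass this implies, using only the standard `csv` module so that captions containing commas or quotes stay in a single column. The one-column layout and the `text` header are assumptions; the actual column names for the dataset aren't stated here.

```python
import csv
import io


def captions_to_csv(captions, column="text"):
    """Serialize captions to single-column CSV text with a header row.

    The column name "text" is an assumed header, not a confirmed schema.
    """
    buffer = io.StringIO(newline="")
    writer = csv.writer(buffer, quoting=csv.QUOTE_MINIMAL, lineterminator="\n")
    writer.writerow([column])
    for caption in captions:
        # Collapse embedded newlines and repeated spaces, skip empty rows.
        cleaned = " ".join(caption.split())
        if cleaned:
            writer.writerow([cleaned])
    return buffer.getvalue()


def csv_to_captions(csv_text, column="text"):
    """Parse the CSV text back into a list of captions (round-trip check)."""
    reader = csv.DictReader(io.StringIO(csv_text, newline=""))
    return [row[column] for row in reader]
```

Writing the returned string to disk with `open(path, "w", newline="", encoding="utf-8")` avoids doubled line endings on Windows. For the 10m and 1b sets it would make sense to stream in chunks rather than build one giant string.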

Well, this was a good idea, but too noisy. There are many chaotic elements involved that cannot be captioned, so the Flux 1D 50 step images that resulted - the first 600 - weren't the best. However, the outcomes were still quite... interesting.

I'd do more than 600, but the damn things took a long time to generate.

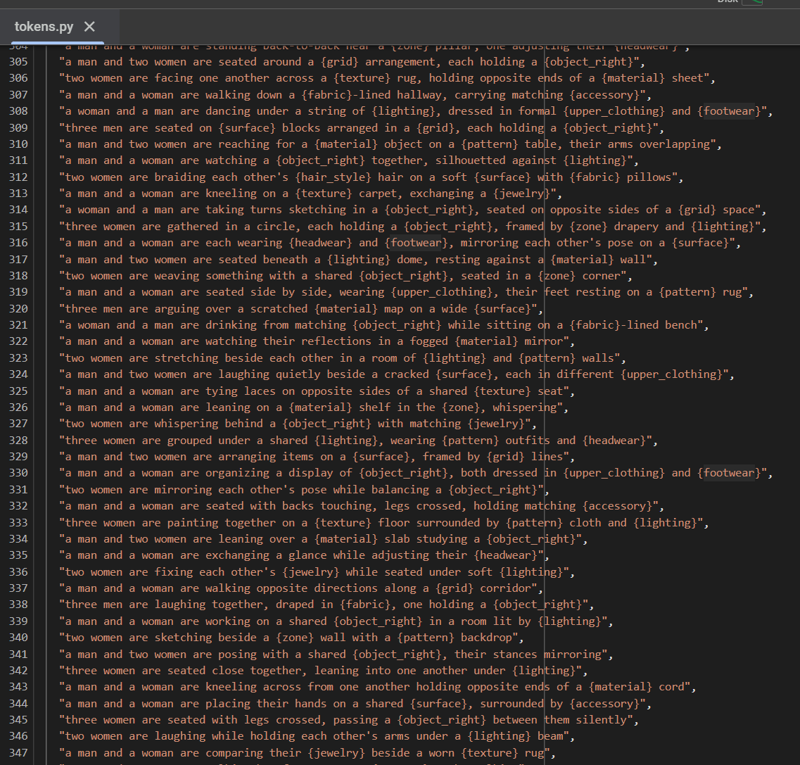

The next batch is "Flux 1S 25k", which is using a more robust object association generation set, not summarized by the t5, but instead using full caption depiction templates for cohesion.

There are a couple thousand of these types of templates.

In conjunction, there is a massive series of lists for clothing, objects, items, themes, and more.

It's essentially the one-stop-shop of tons of helper caption gens.

It WILL create bias, primarily towards humans for now, but it's going to be resolved over time when I produce more caption template types and automate accordingly.
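A toy sketch of the template-plus-lists idea described above: pick a caption template, then fill each slot from its matching word list. The templates, slot names, and list contents here are invented placeholders, far smaller than the real sets.

```python
import random
import string

# Hypothetical templates and word lists, for illustration only.
TEMPLATES = [
    "a {subject} wearing a {clothing}, holding a {item} {relation} a {object}",
    "a {object} {relation} a {subject} in a {theme} scene",
]
WORD_LISTS = {
    "subject": ["woman", "man", "child"],
    "clothing": ["red coat", "denim jacket"],
    "item": ["umbrella", "book"],
    "object": ["wooden table", "parked car"],
    "relation": ["to the left of", "behind", "in front of"],
    "theme": ["rainy street", "sunlit kitchen"],
}


def fill_template(template, word_lists, rng):
    """Fill every {slot} in the template with a random entry from its list."""
    parsed = string.Formatter().parse(template)
    # Keep slots in template order so a seeded rng gives repeatable output.
    slots = list(dict.fromkeys(name for _, name, _, _ in parsed if name))
    return template.format(**{name: rng.choice(word_lists[name]) for name in slots})


def generate_captions(count, seed=0):
    """Generate `count` captions, reproducible for a given seed."""
    rng = random.Random(seed)
    return [fill_template(rng.choice(TEMPLATES), WORD_LISTS, rng) for _ in range(count)]
```

In this toy version every template has a human `subject` slot, which shows where the bias mentioned above comes from. Adding templates without one is the obvious lever for reducing it.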

Pony

Not a bad response honestly. It's about what I would expect, considering pony itself has pretty simple backgrounds.

Object association up by a degree, people control down by a degree, fidelity suffers as any core quick-cook will do, and a large degree of damage is to be expected.

Pony... did learn how to object associate, somewhat. There just weren't enough images. 600 images isn't enough to teach Pony the fundamentals of object and people association with the environment. It requires a much larger finetune to un-roast the backgrounds and surfaces.

Illustrious

I did 2 variations of this one:

HIGH_DIMS - 64

NORMAL_DIMS - 32

High dims had some interesting responses. It seems to have learned a great deal of bad behavior because the cook didn't run long enough. Akin to a crash course in a task without the real quality details involved. Like... giving it a CERT in object association, but not a legitimate degree.

Normal dims is considerably better, but really lacks the robust response needed to give truly deterministic output.
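For a rough sense of scale, assuming "dims" here means the rank of a LoRA-style low-rank adapter (the text above doesn't say so explicitly), the trainable parameter count per adapted linear layer grows linearly with the rank. The 1280-wide layer below is an arbitrary example size, not a value taken from the actual training config.

```python
def low_rank_adapter_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters a rank-`rank` adapter adds to one linear layer.

    The adapter is two matrices: (d_in x rank) and (rank x d_out).
    """
    return rank * (d_in + d_out)


# Assumed example layer width; real layers vary across the network.
for label, rank in (("NORMAL_DIMS", 32), ("HIGH_DIMS", 64)):
    count = low_rank_adapter_params(1280, 1280, rank)
    print(f"{label} (rank {rank}): {count} params per 1280x1280 layer")
```

Under that assumption, the 64 run has twice the capacity to fit per layer from the same 600 images and the same number of steps, which fits with it picking up more bad behavior in a short cook.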

SimV4

Standard SimV4 SDXL epred train - with a bit of a twist. I didn't train high alpha.

The outcome suffers rapidly if you don't train SimV4 with a fairly high alpha. The model was trained ON alpha as a catalyst, making the model associate indirectly (or maybe directly?) with alpha when learning.

In any case, the model only lost fidelity and quality rather than actually improving. However, it did reintroduce some broken object associations as well as introducing some Flux styling to the model.

Conclusion:

Not enough concepts.

Requires additional full-course baselines, like the variations used for the finetune, run with COCO as a catalyst as well.

600 Flux1D images did not give the required power. Next is 10,000 Flux1S images, specifically formatted with object association and human interactions in mind.