Details on how the big diffusion model finetunes are trained are scarce, so just like with version 1 and version 2 of my model bigASP, I'm sharing all the details here to help the community. However, unlike those versions, this version is an experimental side project. And a tumultuous one at that. I’ve kept this article long, even if that may make it somewhat boring, so that I can dump as much of the hard-earned knowledge as possible for others to sift through. I hope it helps someone out there.

To start, the rough outline: Both v1 and v2 were large scale SDXL finetunes. They used millions of images, and were trained for 30m and 40m samples respectively. A little less than a week’s worth of 8xH100s. I shared both models publicly, for free, and did my best to document the process of training them and share their training code.

Two months ago I was finishing up the latest release of my other project, JoyCaption, which meant it was time to begin preparing for the next version of bigASP. I was very excited to get back to the old girl, but there was a mountain of work ahead for v3. It was going to be my first time breaking into the more modern architectures like Flux. Unable to contain my excitement for training, I figured, why not have something easy training in the background? Slap something together using the old, well-trodden v2 code and give SDXL one last hurrah.

TL;DR

If you just want the quick recap of everything, here it is. Otherwise, continue on to “A Farewell to SDXL.”

I took SDXL and slapped on the Flow Matching objective from Flux.

The dataset was more than doubled to 13M images

Frozen text encoders

Trained nearly 4x longer (150m samples) than the last version, in the ballpark of PonyXL training

Trained for about 6 days on a rented four node cluster (Nebius) for a total of 32 H100 SXM5 GPUs; 300 samples/s training speed

4096 batch size, 1e-4 lr, 0.1 weight decay, fp32 params, bf16 amp

Training code and config: Github

Training run: Wandb

Model: HuggingFace

Total cost including wasted compute on mistakes: $16k

A Farewell to SDXL

The goal for this experiment was to keep things simple but try a few tweaks, so that I could stand up the run quickly and let it spin, hands off. The tweaks were targeted to help me test and learn things for v3:

more data

add anime data

train longer

flow matching

I had already started to grow my dataset in preparation for v3, so more data was easy. Adding anime data was a two-fold experiment: can the more diverse anime data help expand the concepts the model can use for photoreal gens, and can I train a unified model that performs well in both photoreal and non-photoreal? Both v1 and v2 are primarily meant for photoreal generation, so their datasets had always focused on, well, photos. A big problem with strictly photo based datasets is that the range of concepts that photos cover is far more limited than art in general. And for me, diffusion models are about art and expression, photoreal or otherwise. To help bring more flexibility to the photoreal domain, I figured adding anime data might allow the model to generalize the concepts from that half over to the photoreal half. As for building a unified model, by that I mean that the open model community has generally been split between models that can do photorealistic generations and models that can do everything else. While big, commercial gorillas like GPT 4o are universal, it wasn’t really known if that was something hobbyists could strive for.

Besides more data, I really wanted to try just training the model for longer. As we know, training compute is king, and both v1 and v2 had smaller training budgets than the giants in the community like PonyXL. I wanted to see just how much of an impact compute would make, so the training was increased from 40m to 150m samples. That brings it into the range of PonyXL and Illustrious.

Finally, flow matching. I’ll dig into flow matching more in a moment, but for now the important bit is that it is the more modern way of formulating diffusion, used by revolutionary models like Flux. It improves the quality of the model’s generations, as well as simplifying and greatly improving the noise schedule.

Now it should be noted, unsurprisingly, that SDXL was not trained to flow match. Yet I had already run small scale experiments that showed it could be finetuned with the flow matching objective and successfully adapt to it. In other words, I said “fuck it” and threw it into the pile of tweaks.

So, the stage was set for v2.5. All it was going to take was a few code tweaks in the training script and re-running the data prep on the new dataset. I didn’t expect the tweaks to take more than a day, and the dataset stuff could run in the background. Once ready, the training run was estimated to take 22 days on a rented 8xH100 SXM5 machine.

A Word on Diffusion

Flow matching is the technique used by modern models like Flux. If you read up on flow matching you’ll run into a wall of explanations that will be generally incomprehensible even to the people that wrote the papers. Yet it is nothing more than two simple tweaks to the training recipe.

If you already understand what diffusion is, you can skip ahead to “A Word on Noise Schedules”. But if you want a quick, math-lite overview of diffusion to lay the groundwork for explaining Flow Matching, then continue forward!

Starting from the top: All diffusion models train on noisy samples, which are built by mixing the original image with noise. The mixing varies between pure image and pure noise. During training we show the model images at different noise levels, and ask it to predict something that will help denoise the image. During inference this allows us to start with a pure noise image and slowly step it toward a real image by progressively denoising it using the model’s predictions.

That gives us a few pieces that we need to define for a diffusion model:

the mixing formula

what specifically we want the model to predict

The mixing formula is anything like:

def add_noise(image, noise, a, b):

    return a * image + b * noise

Basically any function that takes some amount of the image and mixes it with some amount of the noise. In practice we don’t like having both a and b, so the function is usually of the form add_noise(image, noise, t) where t is a number between 0 and 1. The function can then convert t to some value for a and b using a formula. Usually it’s defined such that at t=1 the function returns “pure noise” and at t=0 the function returns image. Between those two extremes it’s up to the function to decide what exact mixture it wants to define. The simplest is a linear mixing:

def add_noise(image, noise, t):

    return (1 - t) * image + t * noise

That linearly blends between noise and the image. But there are a variety of different formulas used here. I’ll leave it at linear so as not to complicate things.

With the mixing formula in hand, what about the model predictions? All diffusion models are called like: pred = model(noisy_image, t) where noisy_image is the output of add_noise. The prediction of the model should be anything we can use to “undo” add_noise. i.e. convert from noisy_image to image. Your intuition might be to have it predict image, and indeed that is a valid option. Another option is to predict noise, which is also valid since we can just subtract it from noisy_image to get image. (In both cases, with some scaling of variables by t and such).

Since predicting noise and predicting image are equivalent, let’s go with the simpler option. And in that case, let’s look at the inner training loop:

t = random(0, 1)

original_noise = generate_random_noise()

noisy_image = add_noise(image, original_noise, t)

predicted_image = model(noisy_image, t)

loss = (image - predicted_image)**2

So the model is, indeed, being pushed to predict image. If the model were perfect, then generating an image becomes just:

original_noise = generate_random_noise()

predicted_image = model(original_noise, 1)

image = predicted_image

And now the model can generate images from thin air! In practice things are not perfect, most notably the model’s predictions are not perfect. To compensate for that we can use various algorithms that allow us to “step” from pure noise to pure image, which generally makes the process more robust to imperfect predictions.

A Word on Noise Schedules

Before SD1 and SDXL there was a rather difficult road for diffusion models to travel. It’s a long story, but the short of it is that SDXL ended up with a wacky noise schedule. Instead of being a linear schedule and mixing, it ended up with some complicated formulas to derive the schedule from two hyperparameters. In its simplest form, it’s trying to have a schedule based in signal-to-noise space rather than a direct linear mixing of noise and image. At the time that seemed to work better. So here we are.

The consequence is that, mostly as an oversight, SDXL’s noise schedule is completely broken. Since it was defined by Signal-to-Noise Ratio you had to carefully calibrate it based on the signal present in the images. And the amount of signal present depends on the resolution of the images. So if you, for example, calibrated the parameters for 256x256 images but then train the model on 1024x1024 images… yeah… that’s SDXL.

Practically speaking what this means is that when t=1 SDXL’s noise schedule and mixing don’t actually return pure noise. Instead they still return some image. And that’s bad. During generation we always start with pure noise, meaning the model is being fed an input it has never seen before. That makes the model’s predictions significantly less accurate. And that inaccuracy can compound on top of itself. During generation we need the model to make useful predictions every single step. If any step “fails”, the image will veer off into a set of “wrong” images and then likely stay there unless, by another accident, the model veers back to a correct image. Additionally, the more the model veers off into the wrong image space, the more it gets inputs it has never seen before. Because, of course, we only train these models on correct images.
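To put a rough number on that leftover signal, here is a minimal sketch that rebuilds the schedule. It assumes the commonly published SDXL scheduler settings (1000 training steps and a "scaled linear" beta schedule from 0.00085 to 0.012); those values come from the public scheduler config, not from this article, so treat them as assumptions.

```python
import math

# Assumed values from the public SDXL scheduler config (not from this article).
NUM_TRAIN_STEPS = 1000
BETA_START = 0.00085
BETA_END = 0.012


def sdxl_alphas_cumprod(num_steps=NUM_TRAIN_STEPS, beta_start=BETA_START, beta_end=BETA_END):
    """Cumulative signal retention for a 'scaled linear' beta schedule."""
    lo, hi = math.sqrt(beta_start), math.sqrt(beta_end)
    alphas_cumprod = []
    running = 1.0
    for i in range(num_steps):
        # Betas are linear in sqrt-space, then squared.
        beta = (lo + (hi - lo) * i / (num_steps - 1)) ** 2
        running *= 1.0 - beta
        alphas_cumprod.append(running)
    return alphas_cumprod


alpha_bar_last = sdxl_alphas_cumprod()[-1]
signal_scale = math.sqrt(alpha_bar_last)        # multiplier on the image
noise_scale = math.sqrt(1.0 - alpha_bar_last)   # multiplier on the noise
print(f"image weight at the last step: {signal_scale:.4f}")
print(f"noise weight at the last step: {noise_scale:.4f}")
```

Under those assumptions the image weight at the final training step comes out small (around 0.07) but not zero, which is the "still return some image" problem described above.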

Now, the denoising process can be viewed as building up the image from low to high frequency information. I won’t dive into an explanation on that one, this article is long enough already! But since SDXL’s early steps are broken, that results in the low frequencies of its generations being either completely wrong, or just correct by accident. That manifests as the overall “structure” of an image being broken. The shapes of objects being wrong, the placement of objects being wrong, etc. Deformed bodies, extra limbs, melting cars, duplicated people, and “little buddies” (small versions of the main character you asked for floating around in the background).

That also means the lowest frequency, the overall average color of an image, is wrong in SDXL generations. It’s always 0 (which is gray, since the image is between -1 and 1). That’s why SDXL gens can never really be dark or bright; they always have to “balance” a night scene with something bright so the image’s overall average is still 0.

In summary: SDXL’s noise schedule is broken, can’t be fixed, and results in a high occurrence of deformed gens as well as preventing users from making real night scenes or real day scenes.

A Word on Flow Matching

Phew. Finally, flow matching. As I said before, people like to complicate Flow Matching when it’s really just two small tweaks. First, the noise schedule is linear. t is always between 0 and 1, and the mixing is just (1 - t) * image + t * noise. Simple, and easy. That one tweak immediately fixes all of the problems I mentioned in the section above about noise schedules.

Second, the prediction target is changed to noise - image. The way to think about this is, instead of predicting noise or image directly, we just ask the model to tell us how to get from noise to the image. It’s a direction, rather than a point.

Again, people waffle on about why they think this is better. And we come up with fancy ideas about what it’s doing, like creating a mapping between noise space and image space. Or that we’re trying to make a field of “flows” between noise and image. But these are all hypotheses, not theories.

I should also mention that what I’m describing here is “rectified flow matching”, with the term “flow matching” being more general for any method that builds flows from one space to another. This variant is rectified because it builds straight lines from noise to image. And as we know, neural networks love linear things, so it’s no surprise this works better for them.

In practice, what we do know is that the rectified flow matching formulation of diffusion empirically works better. Better in the sense that, for the same compute budget, flow based models achieve better (lower) FID than what came before. It’s as simple as that.

Additionally it’s easy to see that since the path from noise to image is intended to be straight, flow matching models are more amenable to methods that try and reduce the number of steps. As opposed to non-rectified models where the path is much harder to predict.
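To make the "straight path" idea concrete, here is a minimal sketch of Euler sampling under the linear mixing and the noise - image target described above. The `oracle_velocity` function is a hypothetical stand-in for a perfect model on a single sample; a real model only predicts an average direction, so it still needs multiple steps in practice.

```python
import random


def euler_sample(velocity_fn, noise, num_steps):
    """Integrate from t=1 (pure noise) to t=0 (image) with plain Euler steps."""
    x = list(noise)
    for i in range(num_steps):
        t = 1.0 - i / num_steps
        t_next = 1.0 - (i + 1) / num_steps
        v = velocity_fn(x, t)  # model predicts noise - image
        # dx/dt = noise - image, so stepping t downward moves x toward the image.
        x = [xi + (t_next - t) * vi for xi, vi in zip(x, v)]
    return x


# Hypothetical "perfect model": it knows the image and noise for this one sample.
random.seed(0)
image = [0.5, -0.25, 0.0, 1.0]
noise = [random.gauss(0.0, 1.0) for _ in image]


def oracle_velocity(x, t):
    return [n - im for n, im in zip(noise, image)]


one_step = euler_sample(oracle_velocity, noise, num_steps=1)
many_steps = euler_sample(oracle_velocity, noise, num_steps=50)
```

With a constant velocity along a straight line, one step and fifty steps land on the same image, which is the intuition behind why rectified models tolerate step reduction well.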

Another interesting thing about flow matching is that it alleviates a rather strange problem with the old training objective. SDXL was trained to predict noise. So if you follow the math:

t = 1

original_noise = generate_random_noise()

noisy_image = (1 - 1) * image + 1 * original_noise

noise_pred = model(noisy_image, 1)

image = (noisy_image - t * noise_pred) / (1 - t)

# Simplify

original_noise = generate_random_noise()

noisy_image = original_noise

noise_pred = model(noisy_image, 1)

image = (noisy_image - t * noise_pred) / (1 - t)

# Simplify

original_noise = generate_random_noise()

noise_pred = model(original_noise, 1)

image = (original_noise - 1 * noise_pred) / (1 - 1)

# Simplify

original_noise = generate_random_noise()

noise_pred = model(original_noise, 1)

image = (original_noise - noise_pred) / 0

# Simplify

image = 0 / 0

Ooops. Whereas with flow matching, the model is predicting noise - image so it just boils down to:

image = original_noise - noise_pred

# Since we know noise_pred should be equal to noise - image we get

image = original_noise - (original_noise - image)

# Simplify

image = image

Much better.

As another practical benefit of the flow matching objective, we can look at the difficulty curve of the objective. Suppose the model is asked to predict noise. As t approaches 1, the input is more and more like noise, so the model’s job is very easy. As t approaches 0, the model’s job becomes harder and harder since less and less noise is present in the input. So the difficulty curve is imbalanced. If you invert and have the model predict image you just flip the difficulty curve. With flow matching, the job is equally difficult on both sides since the objective requires predicting the difference between noise and image.

Back to the Experiment

Going back to v2.5, the experiment is to take v2’s formula, train longer, add more data, add anime, and slap SDXL with a shovel and graft on flow matching.

Simple, right?

Well, at the same time I was preparing for v2.5 I learned about a new GPU host, sfcompute, that supposedly offered renting out H100s for $1/hr. I went ahead and tried them out for running the captioning of v2.5’s dataset and despite my hesitations … everything seemed to be working. Since H100s are usually $3/hr at my usual vendor (Lambda Labs), this would have slashed the cost of running v2.5’s training from $10k to $3.3k. Great! Only problem is, sfcompute only has 1.5TB of storage on their machines, and v2.5’s dataset was 3TB.

v2’s training code was not set up for streaming the dataset; it expected it to be ready and available on disk. And streaming datasets are no simple things. But with $7k dangling in front of me I couldn’t not try and get it to work. And so began a slow, two month descent into madness.

The Nightmare Begins

I started out by finding MosaicML’s streaming library, which purported to make streaming from cloud storage easy. I also found their blog posts on using their composer library to train SDXL efficiently on a multi-node setup. I’d never done multi-node setups before (where you use multiple computers, each with their own GPUs, to train a single model), only single node, multi-GPU. The former is much more complex and error prone, but … if they already have a library, and a training recipe, that also uses streaming … I might as well!

As is the case with all new libraries, it took quite a while to wrap my head around using it properly. Everyone has their own conventions, and those conventions become more and more apparent the higher level the library is. Which meant I had to learn how MosaicML’s team likes to train models and adapt my methodologies over to that.

Problem number 1: Once a training script had finally been constructed it was time to pack the dataset into the format the streaming library needed. After doing that I fired off a quick test run locally only to run into the first problem. Since my data has images at different resolutions, they need to be bucketed and sampled so that every minibatch contains only samples from one bucket. Otherwise the tensors are different sizes and can’t be stacked. The streaming library does support this use case, but only by ensuring that the samples in a batch all come from the same “stream”. No problem, I’ll just split my dataset up into one stream per bucket.
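The bucketing constraint itself is simple to express. Below is a minimal sketch of a bucket-aware batch builder; it is not the MosaicML streaming API and not the author's implementation, and the function name and toy resolutions are hypothetical.

```python
import random
from collections import defaultdict


def bucketed_batches(sample_buckets, batch_size, seed=0):
    """Return batches of sample indices where every index shares one bucket.

    sample_buckets: list where sample_buckets[i] is the bucket key of sample i,
    for example its (width, height) after resizing.
    """
    rng = random.Random(seed)
    by_bucket = defaultdict(list)
    for index, bucket in enumerate(sample_buckets):
        by_bucket[bucket].append(index)

    batches = []
    for indices in by_bucket.values():
        rng.shuffle(indices)
        # Drop the ragged tail so every batch is full (a simplification).
        for start in range(0, len(indices) - batch_size + 1, batch_size):
            batches.append(indices[start:start + batch_size])

    rng.shuffle(batches)  # mix buckets across the epoch
    return batches


# Toy data: three hypothetical resolution buckets.
buckets = [(1024, 1024)] * 10 + [(832, 1216)] * 7 + [(1216, 832)] * 5
batches = bucketed_batches(buckets, batch_size=4)
```

Every batch can be stacked into one tensor because its members share a resolution; the cost is that leftover samples in each bucket are skipped for that epoch.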

That worked, albeit it did require splitting into over 100 “streams”. To me it’s all just a blob of folders, so I didn’t really care. I tweaked the training script and fired everything off again. Error.

Problem number 2: MosaicML’s libraries are all set up to handle batches, so it was trying to find 2048 samples (my batch size) all in the same bucket. That’s fine for the training set, but the test set itself is only 2048 samples in total! So it could never get a full batch for testing and just errored out. Sigh. Okay, fine. I adjusted the training script and threw hacks at it. Now it tricked the libraries into thinking the batch size was the device mini batch size (16 in my case), and then I accumulated a full device batch (2048 / n_gpus) before handing it off to the trainer. That worked! We are good to go! I uploaded the dataset to Cloudflare’s R2, the cheapest reliable cloud storage I could find, and fired up a rented machine. Error.
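The accumulation arithmetic behind that hack is worth spelling out. This is a small sketch with a hypothetical helper; the batch sizes and the mini batch of 16 come from the article, while the GPU counts are just example configurations.

```python
def accumulation_steps(global_batch, n_gpus, device_mini_batch):
    """Micro-batches each GPU must accumulate before one optimizer step."""
    per_gpu, remainder = divmod(global_batch, n_gpus)
    if remainder:
        raise ValueError("global batch must divide evenly across GPUs")
    steps, remainder = divmod(per_gpu, device_mini_batch)
    if remainder:
        raise ValueError("per-GPU batch must be a multiple of the mini batch")
    return steps


# Batch size and mini batch size from the text; the GPU counts are examples.
print(accumulation_steps(2048, n_gpus=8, device_mini_batch=16))   # 16
print(accumulation_steps(4096, n_gpus=32, device_mini_batch=16))  # 8
```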

Problem number 3: The training script began throwing NCCL errors. NCCL is the communication and synchronization framework that PyTorch uses behind the scenes to handle coordinating multi-GPU training. This was not good. NCCL and multi-GPU is complex and nearly impenetrable. And the only errors I was getting were that things were timing out. WTF?

After probably a week of debugging and tinkering I came to the conclusion that either the streaming library was bugging on my setup, or it couldn’t handle having 100+ streams (timing out waiting for them all to initialize). So I had to ditch the streaming library and write my own.

Which is exactly what I did. Two weeks? Three weeks later? I don’t remember, but after an exhausting amount of work I had built my own implementation of a streaming dataset in Rust that could easily handle 100+ streams, along with better handling my specific use case. I plugged the new library in, fixed bugs, etc and let it rip on a rented machine. Success! Kind of.

Problem number 4: MosaicML’s streaming library stored the dataset in chunks. Without thinking about it, I figured that made sense. Better to have 1000 files per stream than 100,000 individually encoded samples per stream. So I built my library to work off the same structure. Problem is, when you’re shuffling data you don’t access the data sequentially. Which means you’re pulling from a completely different set of data chunks every batch. Which means, effectively, you need to grab one chunk per sample. If each chunk contains 32 samples, you’re basically multiplying your bandwidth by 32x for no reason. D’oh! The streaming library does have ways of ameliorating this using custom shuffling algorithms that try to utilize samples within chunks more. But all it does is decrease the multiplier. Unless you’re comfortable shuffling at the data chunk level, which will cause your batches to always group the same set of 32 samples together during training.
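A quick simulation shows how bad the amplification gets with a fully shuffled dataset. This is a sketch under simplifying assumptions: equally sized samples, whole-chunk downloads, and no caching between batches. The function name is hypothetical.

```python
import random


def read_amplification(num_samples, chunk_size, batch_size, seed=0):
    """Bytes-fetched multiplier for one uniformly shuffled batch.

    Assumes equally sized samples and that a whole chunk must be downloaded
    to read any sample inside it (no caching between batches).
    """
    rng = random.Random(seed)
    batch = rng.sample(range(num_samples), batch_size)
    chunks_touched = {index // chunk_size for index in batch}
    samples_downloaded = len(chunks_touched) * chunk_size
    return samples_downloaded / batch_size


# 13M samples and a 2048 batch are from the article; 32 per chunk is its example.
amplification = read_amplification(13_000_000, chunk_size=32, batch_size=2048)
print(f"downloaded {amplification:.1f}x more data than the batch needs")
```

Because almost no two samples in a random batch share a chunk at this dataset size, the multiplier sits just under the chunk size.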

That meant I had to spend more engineering time tearing my library apart and rebuilding it without chunking. Once that was done I rented a machine, fired off the script, and … Success! Kind of. Again.

Problem number 5: Now the script wasn’t wasting bandwidth, but it did have to fetch 2048 individual files from R2 per batch. To no one’s surprise neither the network nor R2 enjoyed that. Even with tons of buffering, tons of concurrent requests, etc, I couldn’t get sfcompute and R2’s networks doing many, small transfers like that fast enough. So the training became I/O bound, leaving the GPUs starved of work. I gave up on streaming.

With streaming out of the picture, I couldn’t use sfcompute. Two months of work, down the drain. In theory I could tie together multiple filesystems across multiple nodes on sfcompute to get the necessary storage, but that was yet more engineering and risk. So, with much regret, I abandoned the siren call of cost savings and went back to other providers.

Now, normally I like to use Lambda Labs. Price has consistently been the lowest, and I’ve rarely run into issues. When I have, their support has always refunded me. So they’re my fam. But one thing they don’t do is allow you to rent node clusters on demand. You can only rent clusters in chunks of 1 week. So my choice was either stick with one node, which would take 22 days of training, or rent a 4 node cluster for 1 week and waste money. With some searching for other providers I came across Nebius, which seemed new but reputable enough. And in fact, their setup turned out to be quite nice. Pricing was comparable to Lambda, but with stuff like customizable VM configurations, on demand clusters, managed kubernetes, shared storage disks, etc. Basically perfect for my application. One thing they don’t offer is a way to say “I want a four node cluster, please, thx” and have it either spin that up or not depending on resource availability. Instead, you have to tediously spin up each node one at a time. If any node fails to come up because their resources are exhausted, well, you’re SOL and either have to tear everything down (eating the cost), or adjust your plans to running on a smaller cluster. Quite annoying.

In the end I preloaded a shared disk with the dataset and spun up a 4 node cluster, 32 GPUs total, each an H100 SXM5. It did take me some additional debugging and code fixes to get multi-node training dialed in (which I did on a two node testing cluster), but everything eventually worked and the training was off to the races!

The Nightmare Continues

Picture this. A four node cluster, held together with duct tape and old porno magazines. Burning through $120 per hour. Any mistake in the training scripts, dataset, a GPU exploding, was going to HURT. I was already terrified of dumping this much into an experiment.

So there I am, watching the training slowly chug along and BOOM, the loss explodes. Money on fire! HURRY! FIX IT NOW!

The panic and stress were unreal. I had to figure out what was going wrong, fix it, deploy the new config and scripts, and restart training, burning everything done so far.

Second attempt … explodes again.

Third attempt … explodes.

DAYS had gone by with the GPUs spinning into the void.

In a desperate attempt to stabilize training and salvage everything I upped the batch size to 4096 and froze the text encoders. I’ll talk more about the text encoders later, but from looking at the gradient graphs it looked like they were spiking first so freezing them seemed like a good option. Increasing the batch size would do two things. One, it would smooth the loss. If there was some singular data sample or something triggering things, this would diminish its contribution and hopefully keep things on the rails. Two, it would decrease the effective learning rate. By keeping learning rate fixed, but doubling batch size, the effective learning rate goes down. Lower learning rates tend to be more stable, though maybe less optimal. At this point I didn’t care, and just plugged in the config and flung it across the internet.

One day. Two days. Three days. There was never a point that I thought “okay, it’s stable, it’s going to finish.” As far as I’m concerned, even though the training is done now and the model exported and deployed, the loss might still find me in my sleep and climb under the sheets to have its way with me. Who knows.

In summary, against my desires, I had to add two more experiments to v2.5: freezing both text encoders and upping the batch size from 2048 to 4096. I also burned through an extra $6k from all the fuck ups. Neat!

The Training

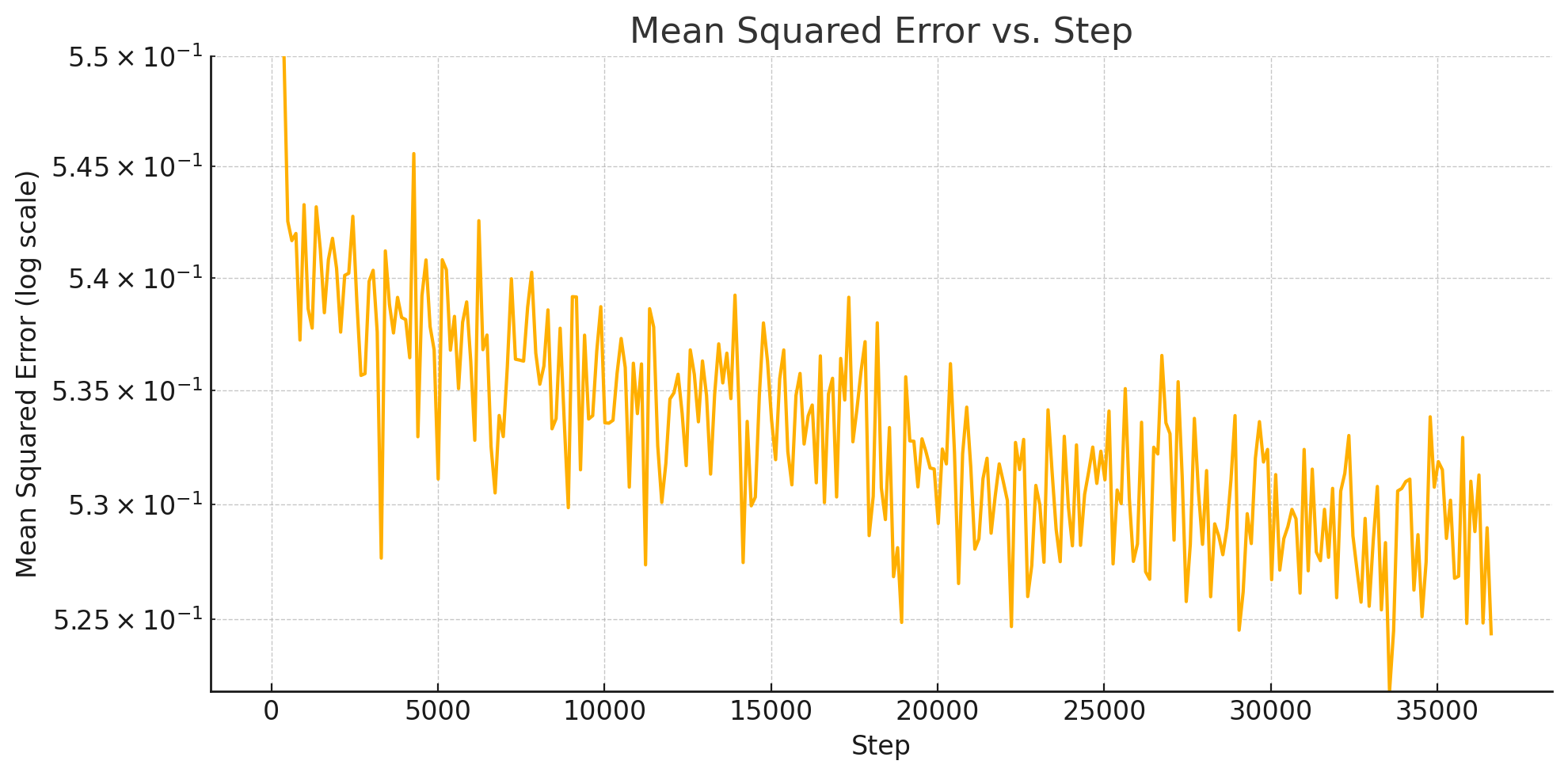

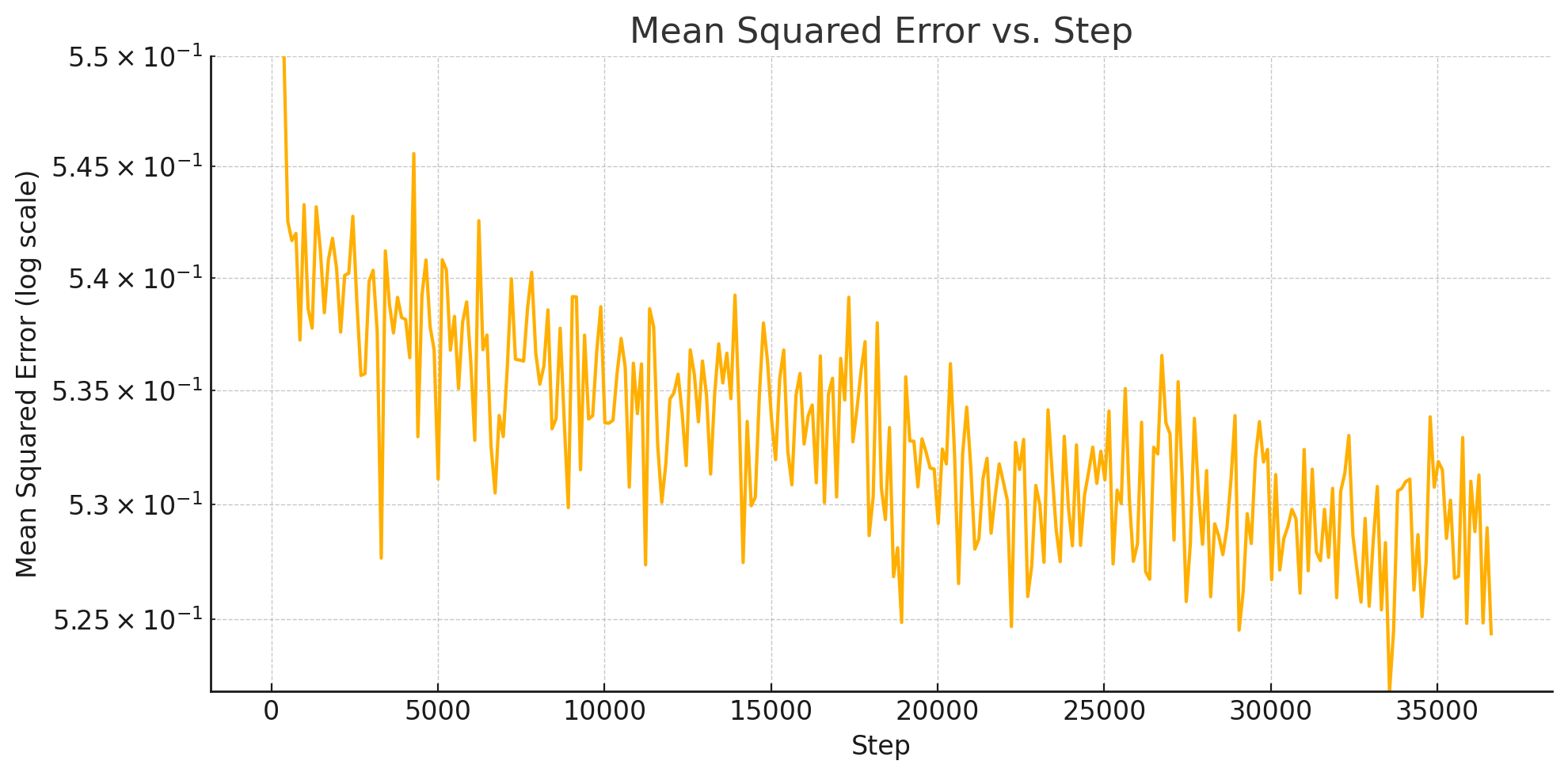

Above is the test loss. As with all diffusion models, the changes in loss over training are extremely small so they’re hard to measure except by zooming into a tight range and having lots and lots of steps. In this case I set the max y axis value to .55 so you can see the important part of the chart clearly. Test loss starts much higher than that in the early steps.

With 32x H100 SXM5 GPUs training progressed at 300 samples/s, which is 9.4 samples/s/gpu. This is only slightly slower than the single node case which achieves 9.6 samples/s/gpu. So the cost of doing multinode in this case is minimal, thankfully. However, doing a single GPU run gets to nearly 11 samples/s, so the overhead of distributing the training at all is significant. I have tried a few tweaks to bring the numbers up, but I think that’s roughly just the cost of synchronization.
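The throughput figures above can be cross-checked with a little arithmetic. All inputs below are numbers quoted in this article; only the variable names are made up.

```python
TOTAL_SAMPLES = 150_000_000   # training duration from the article
CLUSTER_RATE = 300.0          # samples/s on 32 GPUs
SINGLE_NODE_PER_GPU = 9.6     # samples/s/gpu on one 8-GPU node
SINGLE_GPU_RATE = 11.0        # samples/s on a lone GPU ("nearly 11")

SECONDS_PER_DAY = 86_400

per_gpu_cluster = CLUSTER_RATE / 32
cluster_days = TOTAL_SAMPLES / CLUSTER_RATE / SECONDS_PER_DAY
single_node_days = TOTAL_SAMPLES / (SINGLE_NODE_PER_GPU * 8) / SECONDS_PER_DAY
scaling_efficiency = per_gpu_cluster / SINGLE_GPU_RATE

print(f"{per_gpu_cluster:.1f} samples/s/gpu on the cluster")
print(f"{cluster_days:.1f} days on 32 GPUs, {single_node_days:.1f} days on 8")
print(f"{scaling_efficiency:.0%} of single-GPU throughput per GPU")
```

That lines up with the "about 6 days" on the cluster and the 22 day single node estimate mentioned earlier.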

Training Configuration:

AdamW optimizer

float32 parameters, bf16 amp

Adam Beta1 = 0.9

Adam Beta2 = 0.999

Adam EPS = 1e-8

LR = 0.0001

Linear warmup for 1,000,000 samples

Cosine annealing down to 0.0 after warmup.

Total training duration = 150,000,000 samples

Device batch size = 16 samples

Batch size = 4096

Gradient Norm Clipping = 1.0

Unet completely unfrozen

Both text encoders frozen

Gradient checkpointing (memory usage is explosively high without it)

PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True

No torch.compile (I could never get it to work here)
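The learning rate schedule in the list above can be sketched as a function of samples seen. This is an illustration only: it assumes the cosine decay spans exactly the samples remaining after warmup, and the function name is hypothetical rather than taken from the training script.

```python
import math

PEAK_LR = 1e-4
WARMUP_SAMPLES = 1_000_000
TOTAL_SAMPLES = 150_000_000


def lr_at(samples_seen):
    """Learning rate after a given number of training samples."""
    if samples_seen < WARMUP_SAMPLES:
        return PEAK_LR * samples_seen / WARMUP_SAMPLES
    # Cosine decay from the peak down to 0.0 over the remaining samples.
    progress = (samples_seen - WARMUP_SAMPLES) / (TOTAL_SAMPLES - WARMUP_SAMPLES)
    progress = min(progress, 1.0)
    return 0.5 * PEAK_LR * (1.0 + math.cos(math.pi * progress))
```

Expressing the schedule in samples rather than optimizer steps keeps it unchanged when the batch size changes, for example from 2048 to 4096.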

The exact training script and training configuration file can be found on the Github repo. They are incredibly messy, which I hope is understandable given the nightmare I went through for this run. But they are recorded as-is for posterity.

All image latents were pre-encoded. Text encodings are computed on the fly. Gzip compression is used to compress the latents losslessly, providing a nice space savings (about 30%). I tried other compression algorithms, but nothing really beat good ole gzip here.
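A lossless gzip round trip for a latent might look like the sketch below. It assumes float32 storage and uses random values as a stand-in latent; the article does not state the on-disk dtype or layout, and random noise will not show the roughly 30% savings that real latents do.

```python
import gzip
import random
from array import array


def pack_latent(values):
    """Serialize float32 values and gzip them; lossless for float32 data."""
    raw = array("f", values).tobytes()
    return gzip.compress(raw, compresslevel=6)


def unpack_latent(blob):
    restored = array("f")
    restored.frombytes(gzip.decompress(blob))
    return restored


# Stand-in latent: 4 channels x 128 x 128, the latent shape for a 1024x1024 SDXL image.
random.seed(0)
latent = array("f", (random.gauss(0.0, 1.0) for _ in range(4 * 128 * 128)))
blob = pack_latent(latent)
assert unpack_latent(blob) == latent
```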

FSDP1 is used in the SHARD_GRAD_OP mode to split training across GPUs and nodes. I was limited to a max device batch size of 16 for other reasons, so trying to reduce memory usage further wasn’t helpful. Per-GPU memory usage peaked at about 31GB. MosaicML’s Composer library handled launching the run, but it doesn’t do anything much different than torchrun.

The prompts for the images during training are constructed on the fly. 80% of the time it is the caption from the dataset; 20% of the time it is the tag string from the dataset (if one is available). Quality strings like “high quality” (calculated using my custom aesthetic model) are added to the tag string on the fly 90% of the time. For captions, the quality keywords were already included during caption generation (with similar 10% dropping of the quality keywords). Most captions are written by JoyCaption Beta One operating in different modes to increase the diversity of captioning methodologies seen. Some images in the dataset had preexisting alt-text that was used verbatim. When a tag string is used the tags are shuffled into a random order. Designated “important” tags (like ‘watermark’) are always included, but the rest are randomly dropped to reach a randomly chosen tag count.

The final prompt is dropped 5% of the time to facilitate UCG. When the final prompt is dropped there is a 50% chance it is dropped by setting it to an empty string, and a 50% chance that it is set to just the quality string. This was done because most people don’t use blank negative prompts these days, so I figured giving the model some training on just the quality strings could help CFG work better.
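The prompt construction described in the last two paragraphs can be sketched roughly as follows. The probabilities come from the text; everything else (the function name, where the quality string is placed, how the tag count is chosen, the fallback when no tag string exists) is an assumption made for illustration, not the actual training code.

```python
import random

IMPORTANT_TAGS = {"watermark"}  # example from the article; the full list is unknown


def build_prompt(caption, tags, quality, rng):
    """Pick a training prompt for one sample. `tags` may be None."""
    if tags is not None and rng.random() < 0.20:
        keep = [t for t in tags if t in IMPORTANT_TAGS]
        optional = [t for t in tags if t not in IMPORTANT_TAGS]
        rng.shuffle(optional)
        # Random tag count; the real distribution is not specified in the article.
        keep += optional[: rng.randint(0, len(optional))]
        rng.shuffle(keep)
        if rng.random() < 0.90:
            keep.append(quality)  # placement at the end is an assumption
        prompt = ", ".join(keep)
    else:
        prompt = caption  # quality keywords were baked in at caption time

    if rng.random() < 0.05:  # drop for unconditional guidance training
        prompt = "" if rng.random() < 0.5 else quality
    return prompt


rng = random.Random(0)
example = build_prompt("a photo of a cat, high quality",
                       ["cat", "watermark", "outdoors"], "high quality", rng)
```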

After tokenization the prompt tokens get split into chunks of 75 tokens. Each chunk is prepended with the BOS token and appended with the EOS token (resulting in 77 tokens per chunk). Each chunk is run through the text encoder(s). The embedded chunks are then concat’d back together. This is the NovelAI CLIP prompt extension method. A maximum of 3 chunks is allowed (anything beyond that is dropped).
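The chunking step can be sketched on plain token id lists. The BOS and EOS ids below are the standard CLIP tokenizer values; padding short chunks with EOS is an assumption here (padding conventions differ between the two SDXL text encoders), and the encoder call plus concatenation are left out.

```python
BOS, EOS = 49406, 49407   # CLIP tokenizer special token ids
CHUNK_BODY = 75
MAX_CHUNKS = 3


def chunk_tokens(token_ids, pad_id=EOS):
    """Split raw prompt token ids (no BOS/EOS) into 77-token encoder inputs."""
    token_ids = token_ids[: CHUNK_BODY * MAX_CHUNKS]  # overflow is dropped
    chunks = []
    # max(..., 1) makes an empty prompt still produce one padded chunk.
    for start in range(0, max(len(token_ids), 1), CHUNK_BODY):
        body = token_ids[start:start + CHUNK_BODY]
        padding = [pad_id] * (CHUNK_BODY - len(body))
        chunks.append([BOS] + body + [EOS] + padding)
    return chunks


chunks = chunk_tokens(list(range(100)))  # 100 fake token ids -> 2 chunks
```

Each returned chunk would then be encoded separately and the resulting embeddings concatenated along the sequence dimension.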

In addition to grouping images into resolution buckets for aspect ratio bucketing, I also group images based on their caption’s chunk length. If this were not done, then almost every batch would have at least one image in it with a long prompt, resulting in every batch seen during training containing 3 chunks worth of tokens, most of which end up as padding. By bucketing by chunk length, the model will see a greater diversity of chunk lengths and less padding, better aligning it with inference time.

Training progresses as usual with SDXL except for the objective. Since this is Flow Matching now, a random timestep is picked using (roughly):

t = random.normal(mean=0, std=1)

t = sigmoid(t)

t = shift * t / (1 + (shift - 1) * t)

This is the Shifted Logit Normal distribution, as suggested in the SD3 paper. The Logit Normal distribution basically weights training on the middle timesteps a lot more than the first and last timesteps. This was found to be empirically better in the SD3 paper. In addition they document the Shifted variant, which was also found to be empirically better than just Logit Normal. In SD3 they use shift=3. The shift parameter shifts the weights away from the middle and towards the noisier end of the spectrum.

Now, I say “roughly” above because I was still new to flow matching when I wrote v2.5’s code so its scheduling is quite messy and uses a bunch of HF’s library functions.

As the Flux Kontext paper points out, the shift parameter is actually equivalent to shifting the mean of the Logit Normal distribution. So in reality you can just do:

t = random.normal(mean=log(shift), std=1)

t = sigmoid(t)
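The equivalence is easy to check numerically. The sketch below draws the same normal samples, applies the explicit shift written in terms of t, and compares against folding log(shift) into the mean; adding log(shift) to a standard normal draw is the same as sampling with mean=log(shift). Function names are hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sample_t_shifted(z, shift):
    """Logit-normal draw followed by the explicit shift formula."""
    t = sigmoid(z)
    return shift * t / (1.0 + (shift - 1.0) * t)


def sample_t_mean_shifted(z, shift):
    """Same draw, with the shift folded into the mean of the normal."""
    return sigmoid(z + math.log(shift))


rng = random.Random(0)
draws = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
max_gap = max(abs(sample_t_shifted(z, 3.0) - sample_t_mean_shifted(z, 3.0)) for z in draws)
mean_t = sum(sample_t_shifted(z, 3.0) for z in draws) / len(draws)
print(f"largest difference: {max_gap:.2e}, mean t at shift=3: {mean_t:.3f}")
```

The two forms agree to floating point precision, and the mean of t moves above 0.5, toward the noisier end.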

Finally, the loss is just

target = noise - latents

loss = mse(target, model_output)

No loss weighting is applied.
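Putting the pieces together, one training example under this objective can be sketched in plain Python. Lists of floats stand in for latent tensors and there is no real model here; the helper names are hypothetical.

```python
import random


def flow_matching_example(latents, t, rng):
    """Build one (model_input, target) pair for the rectified flow objective."""
    noise = [rng.gauss(0.0, 1.0) for _ in latents]
    noisy = [(1.0 - t) * x + t * n for x, n in zip(latents, noise)]
    target = [n - x for x, n in zip(latents, noise)]
    return noisy, target


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


rng = random.Random(0)
latents = [0.3, -1.2, 0.8, 0.0]
noisy, target = flow_matching_example(latents, t=0.7, rng=rng)

# Stand-in for model(noisy, t): a perfect prediction gives zero loss.
perfect_output = list(target)
loss = mse(target, perfect_output)

# Sanity check: the target recovers the clean latents from the noisy input.
recovered = [x_t - 0.7 * v for x_t, v in zip(noisy, target)]
```

The last line shows why the target is useful at any t: subtracting t times the predicted direction from the noisy input gives back the clean latents.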

That should be about it for v2.5’s training. Again, the script and config are in the repo. I trained v2.5 with shift set to 3. Though during inference I found shift=6 to work better.

The Text Encoder Tradeoff

Keeping the text encoders frozen versus unfrozen is an interesting trade off, at least in my experience. All of the foundational models like Flux keep their text encoders frozen, so it’s never a bad choice. The likely benefits are:

The text encoders will retain all of the knowledge they learned on their humongous datasets, potentially helping with any gaps in the diffusion model’s training.

The text encoders will retain their robust text processing, which they acquired by being trained on utter garbage alt-text. The boon of this is that it will make the resulting diffusion model’s prompt understanding very robust.

The text encoders have already linearized and orthogonalized their embeddings. In other words, we would expect their embeddings to contain lots of well separated feature vectors, and any prompt gets digested into some linear combination of these features. Neural networks love using this kind of input. Additionally, by keeping this property, the resulting diffusion model might generalize better to unseen ideas.

The likely downside of keeping the encoders frozen is prompt adherence. Since the encoders were trained on garbage, they tend to come out of their training with limited understanding of complex prompts. This will be especially true of multi-character prompts, which require cross referencing subjects throughout the prompt.

What about unfreezing the text encoders? An immediate likely benefit is improved prompt adherence. The diffusion model is able to dig in and elicit the much deeper knowledge that the encoders have buried inside of them, and it can extract more diverse information by fully utilizing all 77 tokens of output the encoders have (in contrast to their native training, which pools the 77 tokens down to 1).

Another side benefit of unfreezing the text encoders is that I believe the diffusion models offload a large chunk of compute onto them. What I’ve noticed so far in training runs on frozen vs unfrozen encoders is that the unfrozen runs start off with a huge boost in learning. The frozen runs are much slower, at least initially. People training LoRAs will also tell you the same thing: unfreezing TE1 gives a huge boost.

The downside? The likely loss of all the benefits of keeping the encoder frozen. Concepts not present in the diffuser’s training will be slowly forgotten, and you lose out on any potential generalization the text encoder’s embeddings may have provided. How significant is that? I’m not sure, and the experiments to know for sure would be very expensive. That’s just my intuition so far from what I’ve seen in my training runs and results.

In a perfect world, the diffuser’s training dataset would be as wide ranging and nuanced as the text encoder’s dataset, which might alleviate the disadvantages.

For v2.5, I’m considering doing a second finetune on top of it for a short time with the text encoders lightly unfrozen. Either with a low learning rate, or only unfreezing a few layers. I believe that might strike a nice balance. It’s also possible, in theory, to add an alignment loss that keeps the encoder’s embedding vectors aligned with the original embeddings, which I might try.
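A minimal sketch of what such an alignment loss could look like, under the assumption that it penalizes the cosine distance between the finetuned encoder's per-token embeddings and those of a frozen copy. This is only one possible form; the function names and the `weight` default are hypothetical, and plain lists stand in for tensors.

```python
import math
from typing import List, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity; 0 when the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / max(norm, 1e-12)


def alignment_loss(
    tuned_tokens: List[Sequence[float]],
    frozen_tokens: List[Sequence[float]],
) -> float:
    """Mean cosine distance over token positions (e.g. the 77 output tokens)."""
    dists = [cosine_distance(a, b) for a, b in zip(tuned_tokens, frozen_tokens)]
    return sum(dists) / len(dists)


def total_loss(diffusion_loss: float, align: float, weight: float = 0.1) -> float:
    """Diffusion loss plus a weighted pull back towards the original embeddings."""
    return diffusion_loss + weight * align
```

A larger `weight` keeps the encoder closer to its original behavior, while a smaller one lets it drift further in exchange for prompt adherence.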

For v3, I’m considering trying a pretraining approach, where I finetune the text encoders by themselves using their original contrastive loss, but on a dataset that is a mix of their original dataset and generated captions. That should pretrain the text encoders to benefit from the better captions while retaining their robustness to garbage captions. Then the text encoder is kept frozen during diffusion training. Best of both worlds? We’ll see.

Inference

Since v2.5 is a Frankenstein model, I was worried about getting it working for generation. Luckily, ComfyUI can be easily coaxed into working with the model. The architecture of v2.5 is the same as any other SDXL model, so Comfy has no problem loading it. Then, to get Comfy to understand its outputs as Flow Matching you just have to use the ModelSamplingSD3 node. That node, conveniently, does exactly that: tells Comfy “this model is flow matching” and nothing else. Nice!

That node also allows adjusting the shift parameter, which works in inference as well. Similar to during training, it causes the sampler to spend more time on the higher noise parts of the schedule.

Now the tricky part is getting v2.5 to produce reasonable results. As far as I’m aware, other flow matching models like Flux work across a wide range of samplers and schedules available in Comfy. But v2.5? Not so much. In fact, I’ve only found it to work well with the Euler sampler. Everything else produces garbage or bad results. I haven’t dug into why that may be. Perhaps those other samplers are ignoring the SD3 node and treating the model like SDXL? I dunno. But Euler does work.

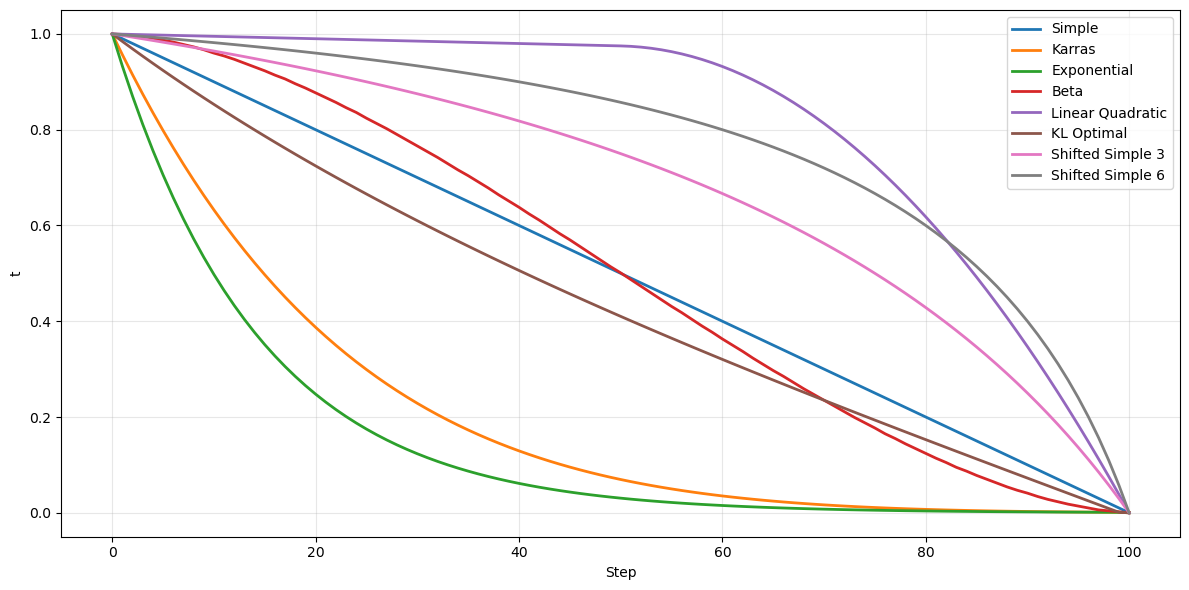

For schedules the model is similarly limited. The Normal schedule works, but it’s important to use the “shift” parameter from the ModelSamplingSD3 node to bend the schedule towards earlier steps. Shift values between 3 and 6 work best, in my experience so far.

In practice, the shift parameter is causing the sampler to spend more time on the structure of the image. A previous section in this article talks about the importance of this and what “image structure” means. But basically, if the image structure gets messed up you’ll see bad composition, deformed bodies, melting objects, duplicates, etc. It seems v2.5 can produce good structure, but it needs more time there than usual. Increasing shift gives it that chance.

The downside is that the noise schedule is always a tradeoff. Spend more time in the high noise regime and you lose time to spend in the low noise regime where details are worked on. You’ll notice at high shift values the images start to smooth out and lose detail.
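To make the tradeoff concrete, here is a small sketch that applies the shift to a uniform schedule running from 1 down to 0 and counts how many steps land in the noisier half. It assumes the SD3-style formula sigma' = shift * sigma / (1 + (shift - 1) * sigma) and a plain linear base schedule; Comfy's actual Normal schedule and step handling may differ in detail.

```python
from typing import List


def shifted_sigmas(steps: int, shift: float) -> List[float]:
    """Noise levels from 1.0 (pure noise) down to 0.0, after applying shift."""
    base = [1.0 - i / steps for i in range(steps + 1)]
    return [shift * s / (1.0 + (shift - 1.0) * s) for s in base]


def steps_in_high_noise(steps: int, shift: float) -> int:
    """How many sampling steps start at a noise level above 0.5."""
    return sum(1 for s in shifted_sigmas(steps, shift)[:-1] if s > 0.5)


if __name__ == "__main__":
    for shift in (1.0, 3.0, 6.0):
        count = steps_in_high_noise(20, shift)
        print(f"shift={shift}: {count} of 20 steps start above 0.5")
```

The total step count is fixed, so every extra step spent above 0.5 is a step taken away from the low noise end where details get refined.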

Thankfully the Beta schedule also seems to work. You can see the shifted normal schedules, beta, and other schedules plotted here:

Beta is not as aggressive as Normal+Shift in the high noise regime, so structure won’t be quite as good, but it also switches to spending time on details in the latter half so you get details back in return!

Finally there’s one more technique that pushes quality even further. PAG! Perturbed Attention Guidance is a funky little guy. Basically, it runs the model twice, once like normal, and once with the model fucked up. It then adds a secondary CFG which pushes predictions away from not only your negative prompt but also the predictions made by the fucked up model.

In practice, it’s a “make the model magically better” node. For the most part. By using PAG (between ModelSamplingSD3 and KSampler) the model gets yet another boost in quality. Note, importantly, that since PAG is performing its own CFG, you typically want to tone down the normal CFG value. Without PAG, I find CFG can be between 3 and 6. With PAG, it works best between 2 and 5, tending towards 3. Another downside of PAG is that it can sometimes overcook images. Everything is a tradeoff.
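The way the two guidance terms stack can be sketched as below. This follows the commonly used formulation where PAG adds a second term on top of regular CFG; the function and argument names are illustrative, the default scales are placeholders rather than recommendations, and plain lists stand in for tensors.

```python
from typing import List

Vector = List[float]


def guided_prediction(
    cond: Vector,
    uncond: Vector,
    perturbed: Vector,
    cfg_scale: float = 3.0,
    pag_scale: float = 3.0,
) -> Vector:
    """Combine classifier-free guidance with perturbed attention guidance.

    cond:      prediction for the positive prompt
    uncond:    prediction for the negative (or empty) prompt
    perturbed: prediction for the positive prompt from the deliberately
               damaged model
    """
    out = []
    for c, u, p in zip(cond, uncond, perturbed):
        # Regular CFG: push away from the negative prompt.
        cfg = u + cfg_scale * (c - u)
        # PAG term: additionally push away from the damaged model's output.
        out.append(cfg + pag_scale * (c - p))
    return out
```

Both terms push in roughly the "better image" direction, which is why the regular CFG value usually needs to come down once PAG is added.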

With all of these tweaks combined, I’ve been able to get v2.5 closer to models like PonyXL in terms of reliability and quality. With the added benefit of Flow Matching giving us great dynamic range!

What Worked and What Didn’t

More data and more training is more gooder. Hard to argue against that.

Did adding anime help? Overall I think yes, in the sense that it does seem to have allowed increased flexibility and creative expression on the photoreal side. Though there are issues with the model outputting non-photoreal style when prompted for a photo, which is to be expected. I suspect the lack of text encoder training is making this worse. So hopefully I can improve this in a revision, and refine my process for v3.

Did it create a unified model that excels at both photoreal and anime? Fuck no. In fact, v2.5’s anime generation prowess is about as good as chucking a crayon in a paper bag and shaking it around a bit. I’m not entirely sure why it’s struggling so much on that side, which means I have my work cut out for me in future iterations.

Did Flow Matching help? It’s hard to say for sure whether Flow Matching helped, or more training, or both. At the very least, Flow Matching did absolutely improve the dynamic range of the model’s outputs.

Did freezing the text encoders do anything? In my testing so far I’d say it’s following what I expected as outlined above. More robust, at the very least. But it also gets confused easily. For example, prompting for “beads of sweat” just results in the model drawing glass beads.

Negative Prompting and the Chamber of Quality

Many moons ago Max Woolf wrote a blog post about an experiment where they used SDXL to generate busted/deformed/garbage images, and then trained a LoRA on those busted images. The LoRA could then be used to prompt for busted images in the negative prompt, driving the model towards better outputs. And in many respects the experiment was a success.

This is interesting to me for three reasons. First, though many people prompt for things like “deformed, wrong limbs, bad hands” and the like, that data doesn’t really exist in the training sets. If it does, it would be a vanishingly small quantity. Yet we do want to drive the model away from those types of gens. Since we now have a magic image machine … might as well use it to make that data, right?

Second, in some ways this mirrors Reinforcement Learning, which has been only naively applied to diffusion so far. (I argue that it needs to be heavily applied, but … that’s for another day). The idea is to have the model itself generate outputs to explore the space, then train it on its successes and failures so it learns paths it can safely follow.

Third, this is similar to PAG. PAG damages the model so it generates “bad” outputs that it can then use to move away from. In essence it’s an automated version of Max Woolf’s experiment.

That leads me to the obvious question: can I repeat this for v2.5 to improve its output further? I believe yes. A big reason why, besides the existing proof of concept from Max Woolf, is my experience playing with v2.5’s negative prompt.

I noticed a marked improvement in the model’s outputs when prompting for “low quality” versus leaving the negative prompt blank. Not just in the aesthetic quality of the outputs but, more importantly, in the structural quality of the outputs. Clearly “low quality” is doing more than just driving the model towards a pretty picture.

So I suspect that if I fill the model’s dataset with a nice chunk of garbage images, and label them all low quality or similar, then it might drive this effect even further.

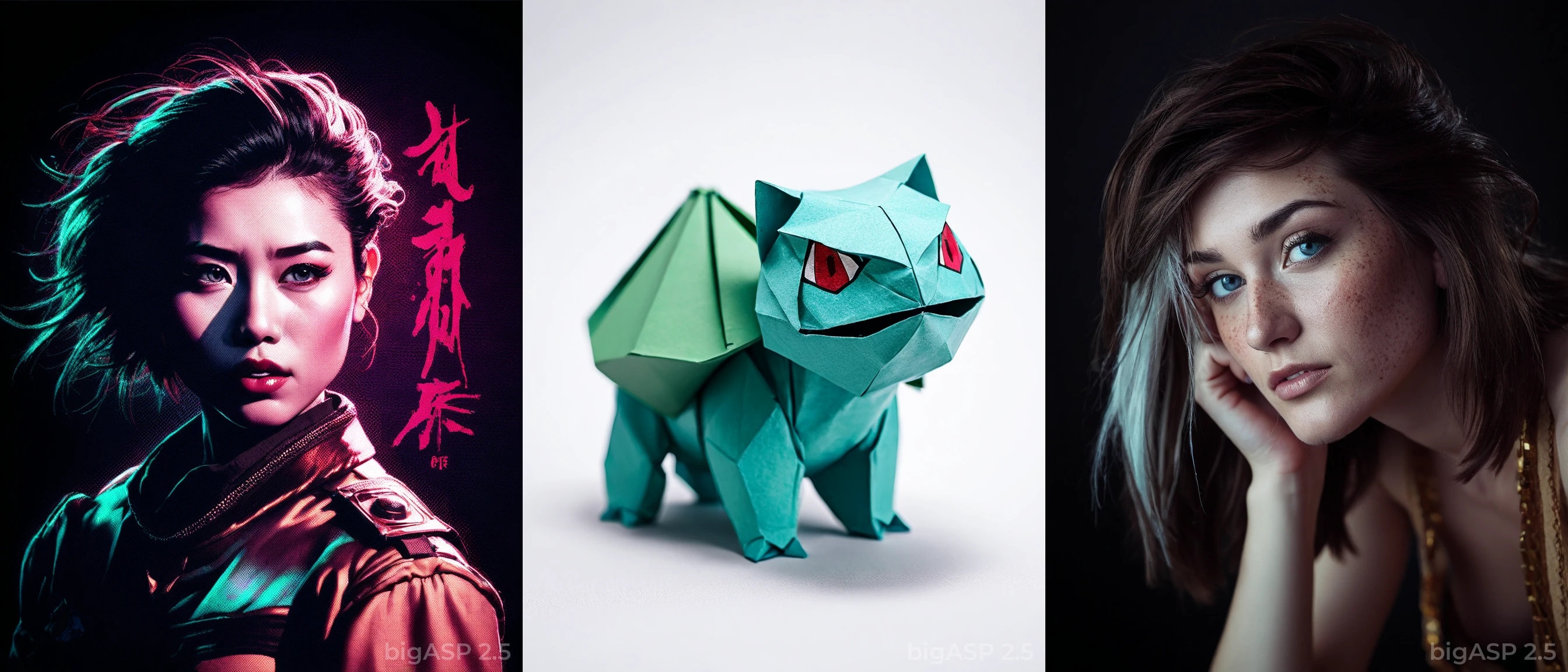

Sample Generations

Conclusion

I have only one last thing to write here.

Be good to each other, and build cool shit.