The Quest

How many times have you looked at one of the example images for a new model to see how they got it to make that image, only to discover that the prompt has almost nothing to do with the image? The prompt will be something straight out of Shakespeare, but the image is effectively what you'd get with "1girl, sexy".

How many times have you typed in a complex prompt only to have the model ignore most of what you put in there? In my experience, sometimes this is because my prompt just isn't that good. But other times, the model just doesn't understand the prompt or can't handle that many details.

I've seen several model comparison articles, but I've never found one that answers the questions I have:

Which models really adhere to your prompt?

How many details do they get right?

Do they actually look good when following a prompt?

How consistent are these answers for a model as we explore many prompts?

And I'm not talking about simple portraits of hot chicks. I want to know if I can specify detailed activities, clothing, lighting, and character details. Can the model handle regional prompting? Can it handle weird stuff?

Another thing these comparison articles never do is offer a conclusion. Usually they just post a bunch of samples and leave it to you to sort through on your own.

My goal is to discover a handful of models that will offer me strong prompt adherence while looking nice and cover a range of styles. All the other models? They are all great at what they do, but they are not for me.

The Plan

My plan is to explore a large number of Illustrious models and see how well they handle a variety of prompts. I'll be using SwarmUI, my tool of choice for image and video generation.

Along the way, we'll explore various prompting techniques (including autosegment detailing and regional prompting). Maybe you'll learn some danbooru tag prompting techniques. I know I'll learn some new things along the way.

I'll be testing over 170 Illustrious checkpoints. I've downloaded a few popular lines, including multiple versions of some models so I can explore how a model behaves across versions. I've got models that cover the style spectrum from illustrated to semi-real to realistic.

The Methodology

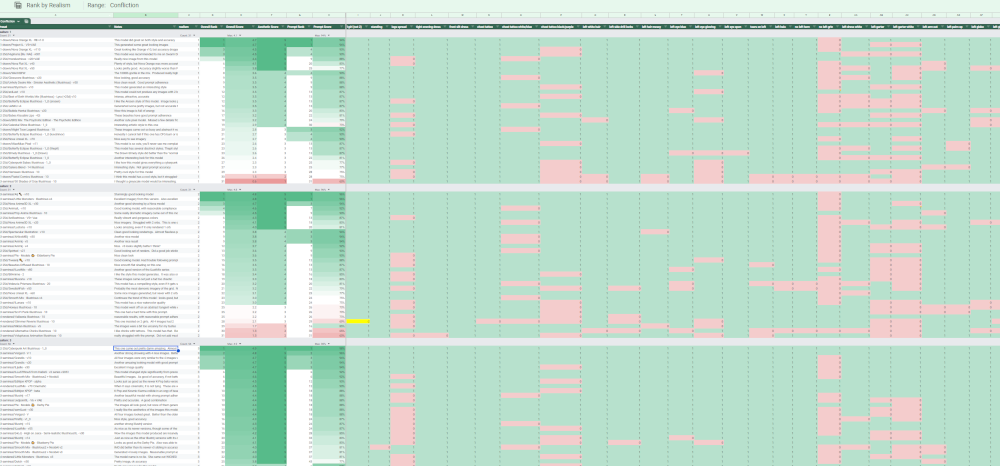

We'll need a spreadsheet. A very big spreadsheet.

Models & Presets

I've spent the past couple of months curating a large collection of models that look great on their model cards. For each model, I've devoted time to learning what settings the model author recommends (or what other users recommend if the author does not provide this information). For each of the 170+ models I've downloaded, I've created a SwarmUI preset where I've set:

CFG Scale

Steps

Sampler

Scheduler

Positive Prompt quality tags

Negative Prompt quality tags

A typical preset looks like this:

In the prompt section, you'll see text like this:

```
<setvar[q]:masterpiece, best quality, amazing quality, very aesthetic, high resolution, ultra-detailed, absurdres, newest, scenery, 3D, rendered, depth of field, volumetric lighting><break>
{value}
```

This is using some SwarmUI-specific syntax to do some things for me:

`<setvar[q]:...>` sets my quality tags for this model, and also stores them in a variable named `q`, which I'll be able to use in my image prompt to also set the quality tags on the detailer without needing to actually type them in again.

`{value}` is a placeholder in the preset for where the actual image prompt will go.

So basically I've set all of my presets to put the quality tags at the front of the prompt, then the image prompt goes after.
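To make the two pieces of preset syntax concrete, here is a toy model of what the template does to a prompt. It is a hypothetical sketch, not SwarmUI's implementation: it only mimics `<setvar[name]:text>` emitting its text while remembering it, and `{value}` being replaced by the image prompt. Recalling the variable later with a `<var:q>` tag is an assumption about the syntax; check the SwarmUI prompt syntax docs for the exact form. The quality tags are also shortened for readability.

```python
import re

# Toy model of the preset template (NOT SwarmUI's code). It mimics two behaviors:
#   1. <setvar[name]:text> emits "text" in place and remembers it under "name".
#   2. {value} is replaced with the user's image prompt.
# Recalling a stored variable as <var:name> is an assumed syntax.
PRESET = "<setvar[q]:masterpiece, best quality, very aesthetic><break>{value}"

SETVAR = re.compile(r"<setvar\[(\w+)\]:([^>]*)>")
GETVAR = re.compile(r"<var:(\w+)>")


def expand(preset: str, image_prompt: str) -> str:
    variables: dict[str, str] = {}

    def store(match: re.Match) -> str:
        variables[match.group(1)] = match.group(2)
        return match.group(2)  # setvar also emits its text where it appears

    text = SETVAR.sub(store, preset)                # quality tags go at the front
    text = text.replace("{value}", image_prompt)    # image prompt goes after
    # Any <var:q> in the image prompt reuses the stored quality tags.
    return GETVAR.sub(lambda m: variables.get(m.group(1), ""), text)


print(expand(PRESET, "1girl, blue eyes, <var:q>"))
```

The quality tags show up twice in the output: once from the preset itself and once where the image prompt recalled `q`, which is the "type them once, reuse them on the detailer" effect described above.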

In short, I've tailored the settings to each model to give it the best chance to perform well.

Prompt Scoring

For each new prompt I develop, I need to score each of the models against it. This is how I am doing it.

Image Generation

Once I've developed a new prompt to test, I run a Grid in SwarmUI that runs that prompt against the 170+ presets I've created. Since we all know that every model can flub a prompt on the first try, I generate 4 images for each preset. I use the following 4 seeds for all the generations: 42, 1337, 2147483647, 3735928559. Generating a grid of 700ish images takes my computer about 10 hours.
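The grid size and runtime follow from simple arithmetic. This sketch enumerates one job per (preset, seed) pair; the preset names are hypothetical placeholders, 170 is a lower bound on the real preset count, and the per-image time is derived from the 10-hour figure rather than measured.

```python
from itertools import product

# Seeds used for every preset in the grid.
SEEDS = [42, 1337, 2147483647, 3735928559]

# Hypothetical preset names; the real grid uses the 170+ SwarmUI presets.
presets = [f"preset_{i:03d}" for i in range(170)]

# One generation job per (preset, seed) pair.
jobs = list(product(presets, SEEDS))

images = len(jobs)                      # 170 presets x 4 seeds = 680 images
seconds_per_image = 10 * 3600 / images  # about 53 s each if the grid takes 10 hours
print(images, round(seconds_per_image))
```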

I then painstakingly go through each preset and choose the "best" of the 4 images produced. My criteria for "best" are roughly:

Which of the four images best embodies what I had in mind when I wrote the prompt?

Of the images that embody my prompt, which looks the most pleasing?

If multiple look good, which seems to get the most details right?

Scoring

As part of developing my prompt, I also create a spreadsheet with columns that define the details I expect to see in the image. For example, if I am prompting for 1 girl with blond hair and blue eyes, then I'll put 3 columns in the spreadsheet: 1girl, blond hair, blue eyes. As I add more details to the prompt, I'll add more columns to track each of those details.

Prompt Score: Now I can go through each model and grade the selected image against all of the details. For each detail I'll put a 1 (image satisfies that detail) or 0 (image got that detail wrong). Once I've gone through all of the columns for an image, I can calculate the Prompt Score for the image as the percentage of details it scored a 1 on.
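The Prompt Score is just the share of detail columns marked 1. A minimal sketch, assuming one image's row is held as a dictionary of detail name to 0/1 (the spreadsheet itself may compute it differently):

```python
def prompt_score(details: dict[str, int]) -> float:
    """Fraction of tracked details the selected image got right.

    Each value is 1 (image satisfies the detail) or 0 (image got it wrong).
    """
    if not details:
        raise ValueError("need at least one detail column")
    if any(v not in (0, 1) for v in details.values()):
        raise ValueError("detail scores must be 0 or 1")
    return sum(details.values()) / len(details)


# The blond-hair example, for an image that got the eye color wrong.
row = {"1girl": 1, "blond hair": 1, "blue eyes": 0}
print(f"{prompt_score(row):.0%}")  # 67%
```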

Realism: For each image I'll also assign a realism score from 1 to 5. My realism scale is roughly:

1. Drawn. The image looks drawn by hand or sketched.

2. 2.5D. The image looks like a mix of drawn and rendered. Usually the person's legs and arms look rendered, but things like the eyes or hair look drawn.

3. Semi-real. The image looks mostly rendered, but obviously not real. Usually this is because the people have anime eyes or shiny skin.

4. Rendered. The image looks somewhat real. The facial features look normal instead of anime-style. The skin is too perfect to be real.

5. Realistic. The image could pass for a photo. Sure, it may have errors with reflections or shadows, but the people look like real people. The skin has details and does not look plastic.

The realism score does not directly affect the model score, but it lets me put the models in buckets and rank them against other models that produce a similar realism level. Also, as I develop more prompts, I'll start scoring the consistency of the realism score (does the model consistently deliver the same style?).

Aesthetics Score: Each image also gets assigned an Aesthetics score. This is purely my subjective opinion on how good it looks. My scoring guide is roughly:

1. Looks like crap. The people have deformities. There is no detail. The image just looks ugly.

2. Ugly image, but no deformities. The image just lacks any creativity or coolness. But at least no one has 3 arms.

3. Average. The image looks OK. I wouldn't use it as a desktop wallpaper, but it does look decent.

4. Above average. The image looks pretty good. The model showed some creativity and added details to enhance the quality of the image.

5. Very good. The image looks great. I'd be proud to show it to people.

Overall Score: The final scoring step is to calculate an Overall Score. This is simply the Aesthetics Score multiplied by the Prompt Score. My reasoning here is that models that follow prompts exactly but produce ugly images aren't very useful. I'm willing to sacrifice some accuracy for image quality. My goal is to find some models that hit the sweet spot of good accuracy and good visual aesthetics.
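Multiplying the two scores is what lets a good-looking image outrank a perfectly accurate but ugly one. This sketch assumes the five-level aesthetics guide maps to 1 through 5 and that the Prompt Score is stored as a 0 to 1 fraction; if the spreadsheet keeps it as a percentage, every Overall Score is simply 100 times larger and the ranking is unchanged.

```python
def overall_score(aesthetics: int, prompt_score: float) -> float:
    """Overall Score = Aesthetics Score x Prompt Score.

    Assumes aesthetics is on a 1-5 scale and prompt_score is a 0-1 fraction.
    """
    if not 1 <= aesthetics <= 5:
        raise ValueError("aesthetics must be between 1 and 5")
    if not 0.0 <= prompt_score <= 1.0:
        raise ValueError("prompt_score must be a fraction between 0 and 1")
    return aesthetics * prompt_score


# An accurate but ugly image vs. a pretty image that misses a quarter of the details.
print(overall_score(2, 1.0))   # 2.0
print(overall_score(4, 0.75))  # 3.0
```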

The last thing I do is group the results by Realism and calculate both the Prompt Score Ranking and the Overall Score Ranking within each group.

At this point you can just open the spreadsheet for the prompt, scroll to the realism group you are interested in and check the rankings. The models in each group are sorted by overall rank. So it is pretty easy to find the models that generated good results.
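The grouping and ranking step can be sketched in a few lines. The model names and scores below are made up for illustration, and the `Result` record is a hypothetical stand-in for a spreadsheet row. Each realism group is sorted by Overall Score, and each model also carries its Prompt Score rank within the same group.

```python
from collections import defaultdict
from typing import NamedTuple


class Result(NamedTuple):
    model: str
    realism: int         # 1 (drawn) to 5 (realistic)
    prompt_score: float  # fraction of details correct
    overall: float       # aesthetics x prompt score


def rank_within_realism(results: list[Result]) -> dict[int, list[tuple[str, int, int]]]:
    """Return {realism: [(model, prompt_rank, overall_rank), ...]} sorted by overall rank."""
    groups: dict[int, list[Result]] = defaultdict(list)
    for r in results:
        groups[r.realism].append(r)

    table = {}
    for realism, members in sorted(groups.items()):
        by_prompt = sorted(members, key=lambda r: r.prompt_score, reverse=True)
        prompt_rank = {r.model: i for i, r in enumerate(by_prompt, start=1)}
        by_overall = sorted(members, key=lambda r: r.overall, reverse=True)
        table[realism] = [
            (r.model, prompt_rank[r.model], overall_rank)
            for overall_rank, r in enumerate(by_overall, start=1)
        ]
    return table


# Made-up rows: model_a follows the prompt better, model_b looks better.
rows = [
    Result("model_a", 1, 0.90, 3.6),
    Result("model_b", 1, 0.80, 4.0),
    Result("model_c", 5, 0.70, 2.8),
]
print(rank_within_realism(rows))
```

One design note: Python's sort is stable, so tied scores get consecutive ranks in input order, whereas a spreadsheet `RANK` function gives tied rows the same rank.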

Publishing Results

I'll publish the results of each new prompt as a new article with a link back to this article for explanation. Each article will also have a link to the Google spreadsheet so you can examine the data in detail on your own.

Published Results

Scoring Over Time

One of the things I am most interested in is model consistency. As we explore different prompts, does the model consistently follow prompts? Does it consistently produce pretty images? How consistent is its realism level? If a model is unreliable because you never know what you will get, I want to know that. It'll be less useful to me if I cannot depend upon it.

Once I develop the 2nd prompt, I'll add some columns to the main model sheet to score model consistency. The main model sheet will then be the place to find a model's overall score, averaged across all the prompts. You won't see these columns at first, but give it time and they will show up.
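The consistency columns aren't defined yet, so the following is only one plausible way to summarize a model across prompts, not the method the main sheet will use. It assumes the averaged Overall Score, the population standard deviation of that score as a "how predictable is it" measure, and the share of prompts where the model landed on its most common realism level.

```python
from statistics import mean, multimode, pstdev


def consistency(overall_scores: list[float], realism_scores: list[int]) -> dict[str, float]:
    """One possible per-model summary across prompts (assumed measures)."""
    typical_realism = multimode(realism_scores)[0]
    return {
        # Average Overall Score across all prompts tested so far.
        "mean_overall": mean(overall_scores),
        # Spread of the Overall Score; lower means more predictable results.
        "overall_spread": pstdev(overall_scores),
        # Share of prompts where the model hit its most common realism level.
        "realism_consistency": realism_scores.count(typical_realism) / len(realism_scores),
    }


print(consistency([3.0, 3.5, 2.5], [4, 4, 3]))
```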

Frequently Asked Questions

I thought I'd answer some questions you may not have asked.

Why don't you test model XYZ? It is awesome!

I'm already testing 170+ models. That's about 1.2TB worth of models.

I'm out of disk space and just don't have room for any more.

Also, it takes me over a week to process the results of a single prompt. I'm evaluating the results by hand. I don't trust a vision model to look for the things I want to look for. That new model XYZ you want to add? That will cost me another 5-10 minutes per prompt to process.

Once I get the 2nd prompt evaluated, I'll start culling models that aren't doing well. This should make room for adding in other models to take their place.

Model XYZ is awesome, but you gave it a poor score. You suck!

If I gave it a poor score, then it just means it doesn't do what I want it to do. The fact that I downloaded it and tested it means I really liked something about it and invested the time to explore it. Poor Score does not mean Poor Model. It really just means my goals aren't the same as the model creator's goals. I'll try to suck less going forward.

When do we get to see naughty pics?

They are coming. I've got plenty of dirty prompts I want to try. Don't worry.

Your Realism Scale makes no sense

Yeah, I see these terms bandied about, but I never see definitions. They seem somewhat subjective. I've done my best to explain how I see things, so hopefully you can translate them into your own mental model.

Lolz you called that image realistic. Catch a clue - it's not!

Yeah, well, I was a young lad when Pong came out. I think this was the same year I had my first erection. Coincidence?

Look at that sexy game controller.

Coming from that era, I am a lot more forgiving about what I consider "realistic".