I've read dozens of 'just use the low model only' takes, but after experimenting with diffusion-pipe (which has supported training both models since yesterday), I've come to the conclusion that doing so leads to a massive loss in performance and accuracy.

For the experiment, I ran my splits dataset and built the following LoRAs:

* splits_high_e20 (LoRA for min_t = 0.875 and max_t = 1): use with Wan's High model

* splits_low_e20 (LoRA for min_t = 0 and max_t = 0.875): use with Wan's Low model

* splits_complete_e20 (LoRA for min_t = 0 and max_t = 1): the 'normal' LoRA, which you can also use with Wan's Low model and/or with Wan2.1
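To make the timestep split concrete, here is a minimal Python sketch of how a normalized timestep maps onto the two ranges above. Only the 0.875 boundary comes from the training ranges in this post; the function names are hypothetical, the linear schedule is purely illustrative, and which side owns exactly t = 0.875 is my assumption.

```python
# Boundary taken from the LoRA training ranges listed above.
# Assumption: t == 0.875 is assigned to the high range.
BOUNDARY_T = 0.875


def expert_for_timestep(t: float) -> str:
    """Return 'high' or 'low' for a normalized timestep t in [0, 1]."""
    if not 0.0 <= t <= 1.0:
        raise ValueError(f"timestep must be in [0, 1], got {t}")
    return "high" if t >= BOUNDARY_T else "low"


def linear_timesteps(steps: int) -> list[float]:
    """Illustrative linear schedule from t = 1.0 down towards 0 (not a real sampler)."""
    return [1.0 - i / steps for i in range(steps)]


def split_steps(timesteps: list[float]) -> dict[str, int]:
    """Count how many steps fall into the high range and the low range."""
    counts = {"high": 0, "low": 0}
    for t in timesteps:
        counts[expert_for_timestep(t)] += 1
    return counts


if __name__ == "__main__":
    print(split_steps(linear_timesteps(8)))
```

Real samplers usually use shifted, non-linear schedules, so the actual share of steps in each range will differ from this toy example.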

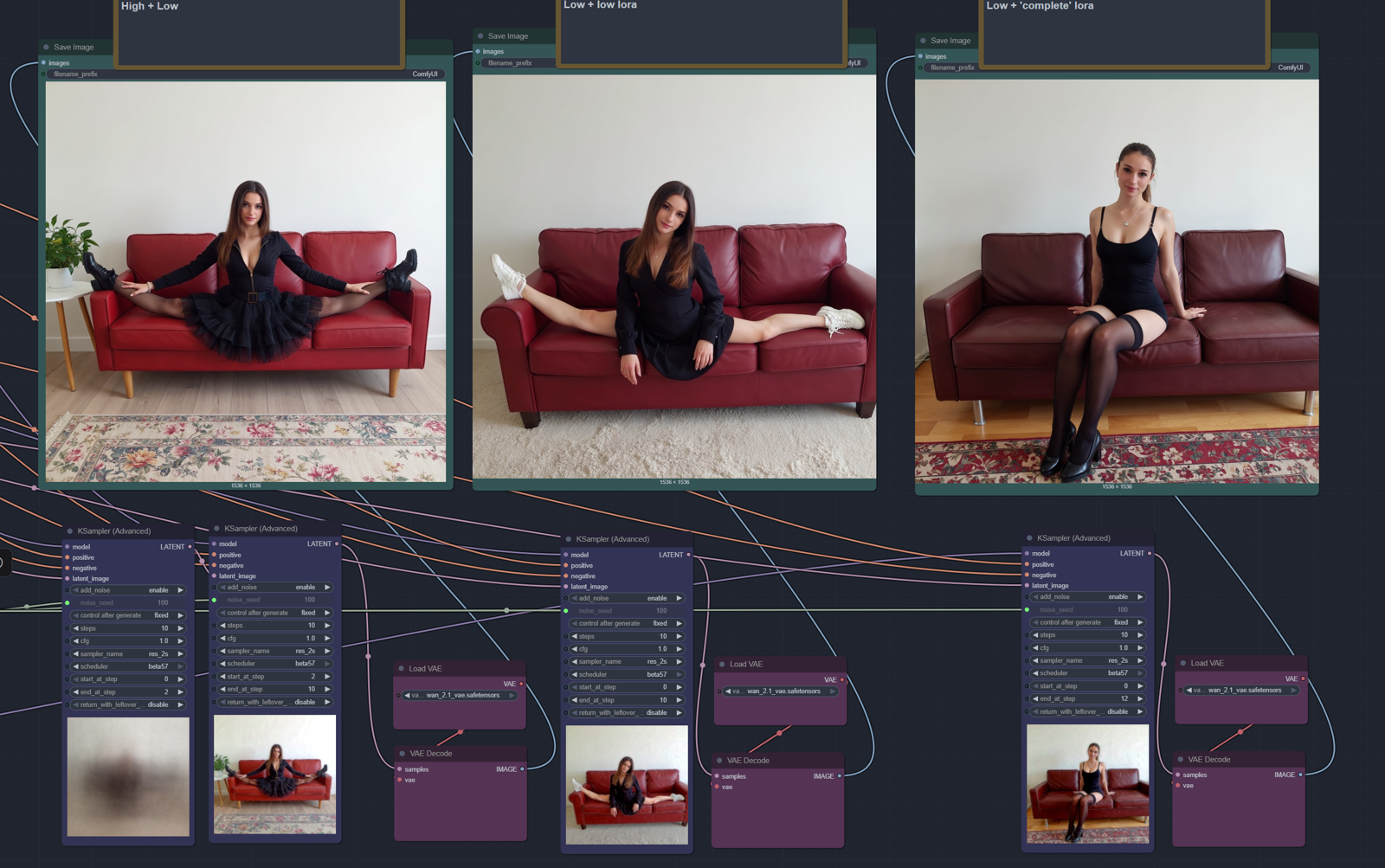

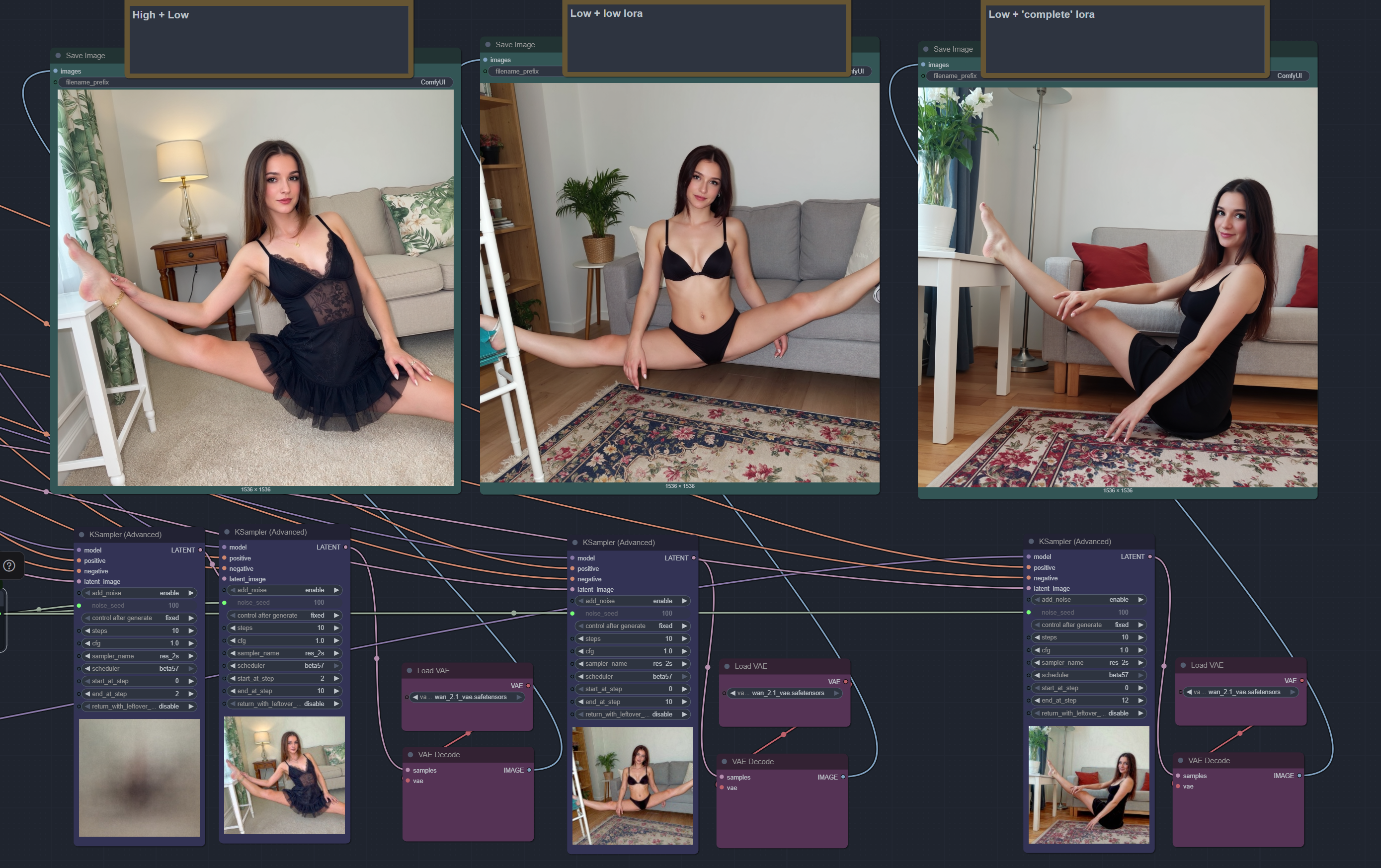

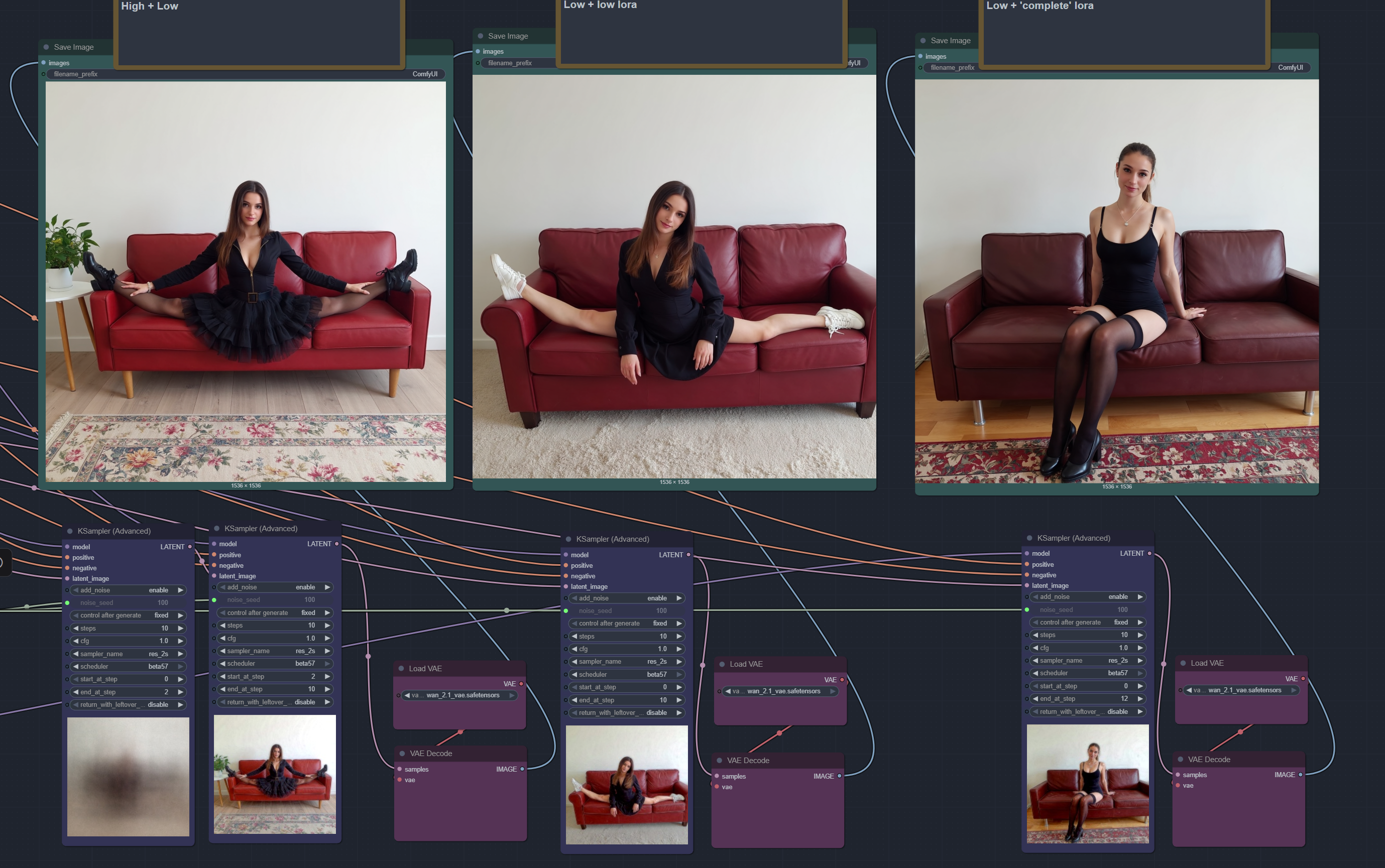

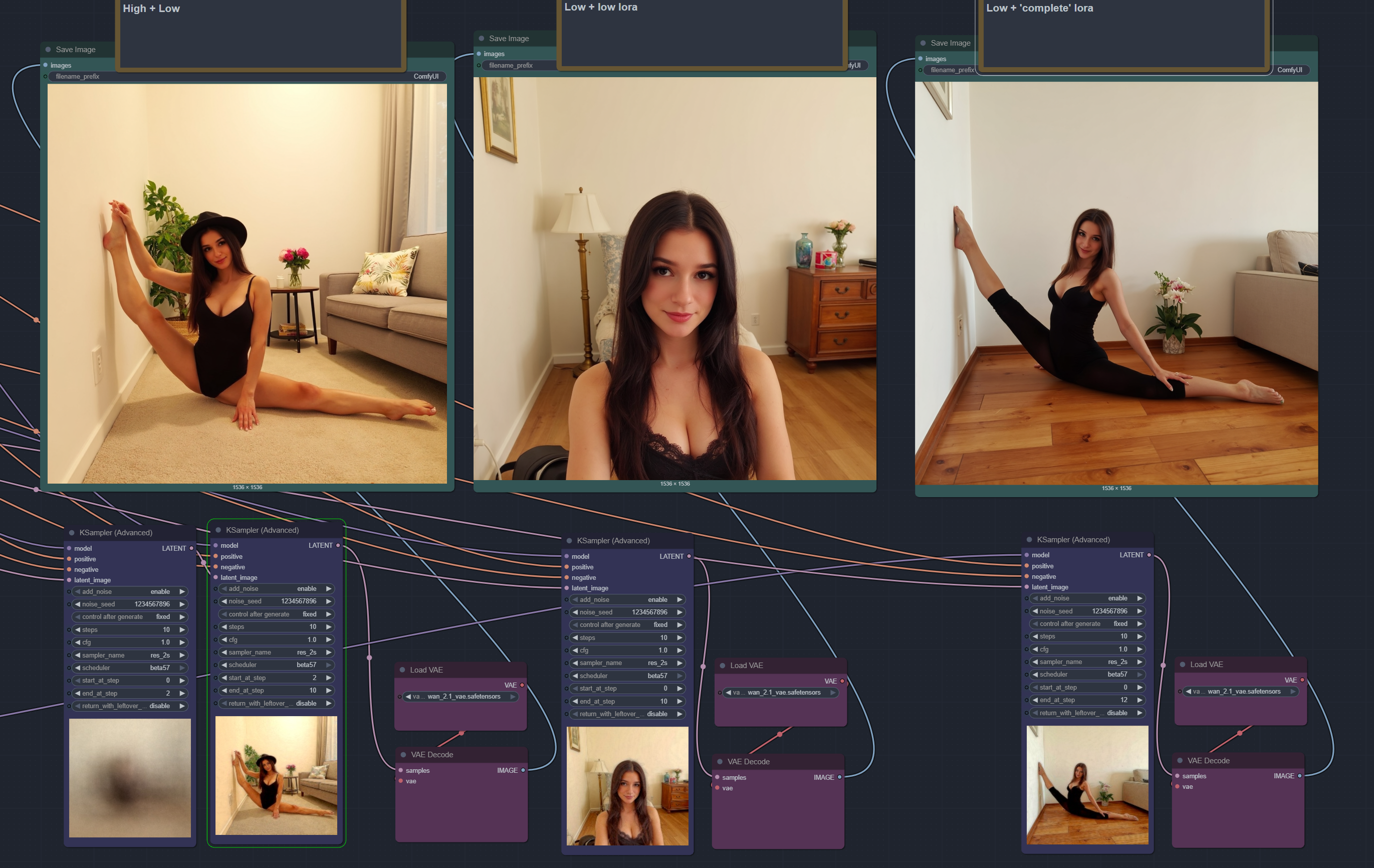

These are the results:

* First image: high + low

* Second image: low + splits_low_e20

* Third image: low + splits_complete_e20

As you can see, the first image (the high + low combo) is

a) always accurate, and

b) still the best, even when the others do follow the LoRA.

With high + low, you literally get an accuracy close to 100%. I generated over 100 images and not a single one was bad, while the other two combinations often mess up the anatomy or fail to produce a splits pose at all.

And that "fail to produce" stuff drove me nuts with the low-only workflows, because I could never tell why my LoRA didn’t work. You’ve probably noticed it yourself: in your low-only runs, it sometimes feels like the LoRA isn’t even active. This is the reason.

Please try it out yourself!

Workflow:

[https://pastebin.com/q5EZFfpi](https://pastebin.com/q5EZFfpi)

All three LoRAs:

https://civitai.com/models/1827208

Cheers,

Pyro