⚡ TL;DR: My Workflow at a Glance

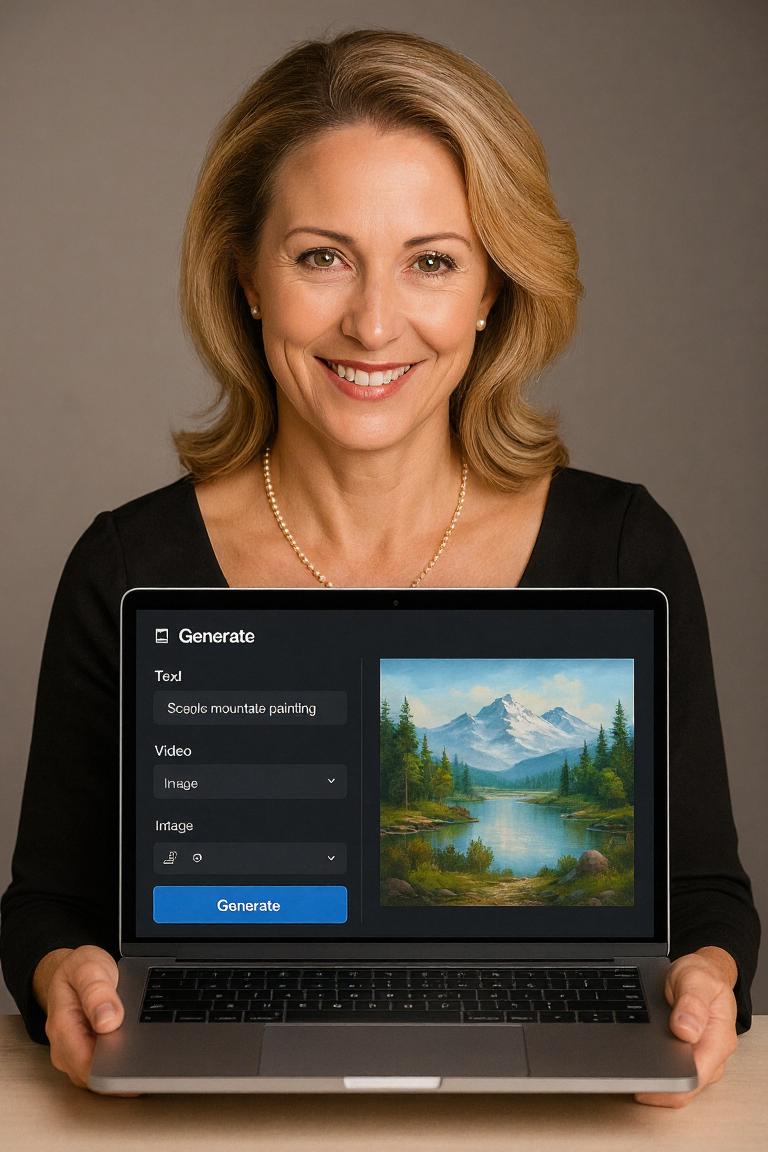

📝 I use ChatGPT with custom instructions to build structured, cinematic prompts.

🧠 I generate base images using tools like Sora, Recraft, Imagen, and Grok.

🎛 I refine images using image-to-image passes in TensorArt with FLUX Dev fp32 or FLUX.1 Krea Dev.

💠 I sometimes start with SDXL models via CivitAI, then polish in TensorArt.

🎨 I use LoRAs and denoise strengths creatively to adjust pose, styling, or realism.

Hey everyone — I often get DMs asking:

"What model are you using?"

"Which tools are in your pipeline?"

"How do you get that level of detail or style?"

Rather than replying each time, I’ve put together this overview of my AI image creation workflow. It breaks down the tools I use, how I chain them together, and the stylistic strategies I follow — all in one place.

🧰 My Tool Set

📝 Prompt Generation Support

I use ChatGPT with a custom instruction setup to help structure and generate my prompts.

My custom instructions steer it toward a cinematic, literal prompt format that focuses on realism, character coding, and worldbuilding.

I treat this like having a "visual director's assistant" — it helps me consistently output high-quality prompts that translate well across different generators.

Inspired by techniques from "Creating Photorealistic Images With AI: Using Stable Diffusion", I rely on a consistent prompt formula to reduce trial and error and ensure stylistic continuity across workflows.
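To make the idea of a "consistent prompt formula" concrete, here is a minimal Python sketch of a fixed-slot prompt template. The slot names (`subject`, `setting`, `lighting`, `camera`, `suffixes`) and the example text are illustrative assumptions, not the actual custom instructions or the formula from the book.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptSpec:
    """Hypothetical prompt formula; every field name here is an assumption."""
    subject: str
    setting: str
    lighting: str
    camera: str
    suffixes: List[str] = field(default_factory=list)

    def build(self) -> str:
        # A fixed slot order keeps prompts structurally consistent across generators.
        parts = [self.subject, self.setting, self.lighting, self.camera, *self.suffixes]
        # Skip empty slots so the prompt never contains stray commas.
        return ", ".join(p.strip() for p in parts if p and p.strip())


spec = PromptSpec(
    subject="a weathered ship captain in a wool coat",
    setting="standing on a rain-soaked harbor pier at dusk",
    lighting="soft overcast light with warm lantern glow",
    camera="35mm lens, shallow depth of field",
    suffixes=["photorealistic", "cinematic still"],
)
print(spec.build())
```

Because every prompt fills the same slots in the same order, changing one slot (for example the lighting) makes it easier to see what that change did to the image.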

🧠 Prompt-Based Generators

Sora

Recraft

Google Imagen

Grok

Other closed-source tools

🎛 Post-Processing & Refinement

TensorArt (image-to-image passes using FLUX Dev fp32 or FLUX.1 Krea Dev)

CivitAI Generator (for SDXL-based model generations)

🎨 Modifiers

LoRAs (for styling, detail fixes, or expressive tweaks)

Prompt suffixes (for stylistic or implied character traits)

⚙️ My Core Workflows

Rather than doing the same thing every time, I switch between three main pipelines depending on the goal. Think of these like cinematic production styles — each suited to different needs.

🧪 Closed-Source ➡️ Flux Finisher

🔹 When I use this: when I want convenience, speed, or a strong base composition from closed-source tools like ChatGPT or Imagen.

🛠️ How it flows:

Generate a base image using a web-based tool (ChatGPT, Sora, etc.)

Upload the result to TensorArt

Apply image-to-image using FLUX Dev fp32 or FLUX.1 Krea Dev

🔧 Denoise strength: typically 0.1–0.4

🎯 Purpose: add detail, realistic lighting, and refined texture
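For a rough intuition about why a low denoise strength only polishes the image, here is a small Python sketch. It assumes the backend skips the early part of the sampling schedule and runs roughly `steps * strength` denoising steps, which is how many open-source image-to-image implementations behave. How TensorArt maps its slider internally is not confirmed here, and `effective_steps` is a hypothetical helper name.

```python
def effective_steps(total_steps: int, strength: float) -> int:
    """Approximate number of denoising steps an image-to-image pass actually runs.

    Assumption: the backend skips the first (1 - strength) share of the
    schedule, so only about total_steps * strength steps modify the image.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    return min(int(total_steps * strength), total_steps)


# Compare the polish range (0.1 to 0.4) with a heavier 0.5 pass at 30 steps.
for strength in (0.1, 0.4, 0.5):
    print(f"strength {strength}: about {effective_steps(30, strength)} of 30 steps")
```

Under that assumption, a 0.1 to 0.4 pass touches only the last few steps of the schedule, which is why composition tends to survive while texture and lighting get refined.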

🌀 Midpoint Modifier Workflow

🔹 When I use this: when I want to alter the image’s tone, styling, or silhouette before refining.

🛠️ How it flows:

Start with a web-based image (ChatGPT, etc.)

Pass it through CivitAI Generator with ~0.5 denoise

Optionally add LoRAs for style, structure, or enhancement

Upload to TensorArt

Run image-to-image with FLUX Dev fp32 or FLUX.1 Krea Dev

Use 0.1–0.4 denoise to polish surface realism

💠 CivitAI ➡️ TensorArt Finalizer

🔹 When I use this: when I want to work entirely within SDXL or need full control over structure and stylization.

🛠️ How it flows:

Generate a base image using CivitAI Generator with an SDXL model

Optionally add LoRAs for specific styling or character coding

Export the image

Upload to TensorArt

Run image-to-image with Flux at 0.1–0.4 denoise for refinement

🔍 Pro Tip: SDXL images are often strong on their own, but a final Flux pass adds depth, tone, and cinematic realism.
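The three workflows above can also be written down as plain data, which makes it easy to compare them side by side. This is only a sketch: the `Stage` class, the workflow keys, and the `pick_workflow` decision rule are hypothetical names and simplifications, and nothing here calls a real TensorArt or CivitAI API.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Stage:
    tool: str
    action: str
    # (min, max) denoise for image-to-image stages; None for a fresh generation.
    denoise: Optional[Tuple[float, float]] = None


FLUX_POLISH = Stage("TensorArt", "FLUX image-to-image polish", (0.1, 0.4))

WORKFLOWS: Dict[str, List[Stage]] = {
    "closed_source_flux_finisher": [
        Stage("web-based generator", "generate base image"),
        FLUX_POLISH,
    ],
    "midpoint_modifier": [
        Stage("web-based generator", "generate base image"),
        Stage("CivitAI Generator", "restyle, optional LoRAs", (0.5, 0.5)),
        FLUX_POLISH,
    ],
    "civitai_tensorart_finalizer": [
        Stage("CivitAI Generator", "generate SDXL base image, optional LoRAs"),
        FLUX_POLISH,
    ],
}


def pick_workflow(needs_restyle: bool, sdxl_only: bool) -> str:
    """Simplified decision rule (an assumption, not a fixed policy)."""
    if sdxl_only:
        return "civitai_tensorart_finalizer"
    if needs_restyle:
        return "midpoint_modifier"
    return "closed_source_flux_finisher"


name = pick_workflow(needs_restyle=True, sdxl_only=False)
for number, stage in enumerate(WORKFLOWS[name], start=1):
    print(number, stage.tool, "|", stage.action, "|", stage.denoise)
```

Laid out this way, the shared pattern is easy to spot: every workflow ends with the same low-denoise FLUX pass, and only the stages before it change.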

📌 Final Thoughts

This is the core process behind most of my AI images. Over time, I've refined it to balance creative control with platform safety — blending cinematic aesthetics, technical precision, and smart prompt structuring.

Whether I’m:

generating from scratch using web-based tools,

remixing structure and tone in CivitAI,

or applying final polish in TensorArt with Flux,

the key is layered control — knowing which part of the process to adjust.

If you find this workflow useful, feel free to bookmark or share this guide. Hope it helps!

🔎 Note on Compatibility

One detail worth mentioning — as of now, CivitAI doesn't seem to recognize TensorArt as an external generator. I suspect this is because TensorArt uses a ComfyUI backend, which may not align with how CivitAI tracks or validates generation sources. That said, this is a bit outside my technical wheelhouse — so if you know more about this, I’d love to hear your thoughts!

💬 Questions? Drop them in the comments or DM me.

🔗 Links & Resources

Here are some helpful tools, guides, or references I use or recommend: