Gemma CLIP

Every metric says this training run should have failed. It used the Gemma vision encoder, projected down to the CLIP vision size.

So that is a vision model that has had its dimension reduced by more than half and its training image size reduced from 896x896 down to 224x224.
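To give a rough sense of how much spatial information is lost in that resize, here is a small sketch that counts the patches a ViT-style encoder would see at each resolution. The patch size of 14 is an assumption made for illustration and is not stated in this post. The 16x ratio holds for any patch size that divides both resolutions evenly.

```python
def patch_grid(image_size: int, patch_size: int) -> tuple[int, int]:
    """Return (patches per side, total patches) for a square ViT input."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    per_side = image_size // patch_size
    return per_side, per_side * per_side


PATCH_SIZE = 14  # assumption for illustration; not stated in the post

native_side, native_total = patch_grid(896, PATCH_SIZE)
small_side, small_total = patch_grid(224, PATCH_SIZE)

print(f"896x896: {native_side}x{native_side} = {native_total} patches")
print(f"224x224: {small_side}x{small_side} = {small_total} patches")
print(f"reduction: {native_total // small_total}x fewer patches")
```

Under that assumption the encoder goes from a 64x64 grid to a 16x16 grid, so it sees one sixteenth of the patches it was originally trained on.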

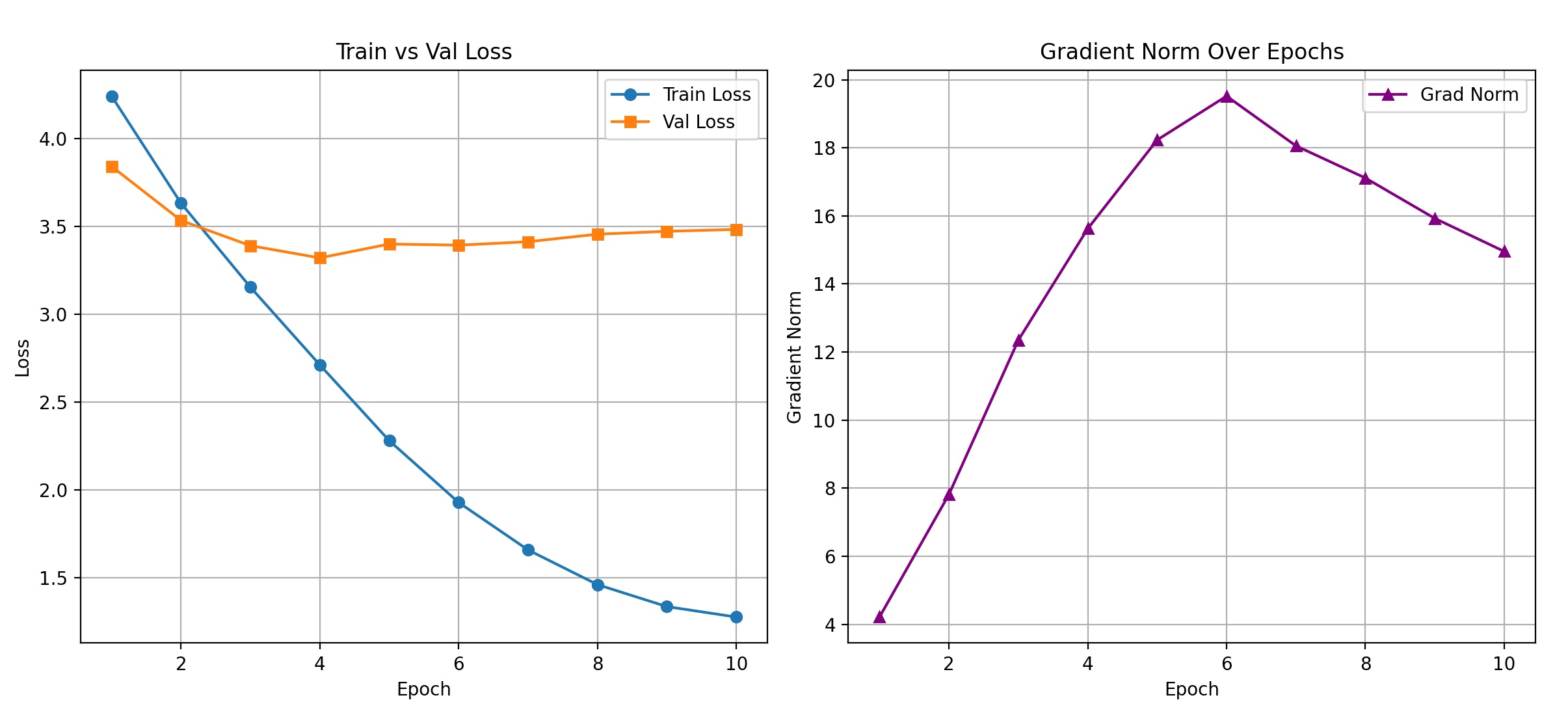

The latents created by the model should have been so far out of alignment that it could not possibly train. The graph shows a model that is very unhappy.

And yet the CLIP model retains text. In fact, it learned concepts that I definitely did not have training images of.

Dog on Fire

Cat underwater

Many other complex prompts also tested better with Gemma CLIP.

Still, I sat on this for a few days and questioned whether to release it at all. It appears to work even better than my prior work with distillation, but only when the prompt is very short.

This model does not do as well with long prompts as my distilled CLIP, which I am happy about, as that was the whole point of the distilled training.

With a low token count, Gemma CLIP excels, but with long prompts the distilled CLIP far outperforms it.