As mentioned in my embedding training guide, the two settings on which different training methods seem to diverge the most are gradient accumulation and number of training steps. These concepts are connected.

Definitions

There are (at least) two ways to define "training step": "number of (batches of) images shown" or "number of weight updates performed". Stable Diffusion uses the former definition (according to the wiki).

Gradient accumulation involves accumulating gradients from multiple steps (or "mini-batches") before performing a weight update. This means that increasing gradient accumulation reduces the effective number of training steps (in the sense of number of weight updates performed).

An epoch is when Stable Diffusion has seen the entire dataset once (irrespective of how many weight updates it performs, i.e. how much it actually "learns" per dataset pass).

Full-cycle training happens when Stable Diffusion updates the embedding each time it has seen each image in the dataset exactly once, i.e. when the number of epochs equals the number of weight updates.

number of weight updates (rounded down) = training steps / gradient accumulation

number of epochs (rounded down) = number of training steps / (number of images / batch size)

If you want number of weight updates to equal number of epochs (full-cycle learning), you get:

number of images = batch size x gradient accumulation

ChatGPT

To get some more clarity, I've asked ChatGPT about this subject. Take this with a grain of salt, but it matches what I have read earlier. Some excerpts:

Gradient accumulation is a technique used in training deep learning models, especially in scenarios where memory limitations prevent the model from processing a large batch of data in one go. Instead of updating the model's weights after each individual batch, gradient accumulation involves accumulating gradients over multiple mini-batches before performing a weight update. This can be particularly useful when working with large models or datasets.

A dataset of 24 images is generally considered very small in the context of deep learning and neural network training.

While gradient accumulation can help manage memory limitations, it might require more computational resources and time to achieve the same level of convergence as a smaller accumulation factor. This is due to the reduced frequency of weight updates.

[A] small batch size can result in noisy gradient estimates, which might impact training quality.

In general, smaller batch sizes tend to provide more noisy gradient estimates but can help the model generalize better. However, they might also slow down the convergence process.

The benefit of full-cycle training is more pronounced with small datasets.

When training on the full dataset in one cycle, adjusting the learning rate schedule becomes important to ensure that the learning rate decreases appropriately during training.

Key Points

The key takeaway points for me are:

1) We use very small datasets.

2) Gradient accumulation should be set as low as possible, but high enough to allow processing a high enough batch size.

3) Full-cycle training is beneficial for small datasets, therefore batch size times gradient accumulation should equal number of images.

4) A progressively decreasing learning rate is important for full-cycle training.

Four Embeddings Compared

I've taken one of my most recent embeddings, which was trained on 26 images, batch size 13, gradient step 2, and 3000 training steps. I've run the training again, using the exact same data and settings, with the exception of batch size at 3 and gradient accumulation at 17. Below, I will compare results for these two embeddings, as well as for each of them at training step 150.

This means four embeddings are considered here:

1) BS:13, GA:2, ST:3000 (epochs: 1500, weight updates: 1500) (gaST, for "low ga, high STep")

2) BS:13, GA:2, ST:150 (weight updates: 75, epochs: 75) (gast, for "low ga, low step")

3) BS:3, GA:17, ST:3000 (epochs: 346, weight updates: 176) (GAST, for "high GA, high STep")

4) BS:3:, GA:17, ST:150 (epochs: 17, weight updates: 8) (GAst, for "high GA, low step")

For each comparison, all parameters (including the seed) are identical between the two images, with the exception of the embedding used.

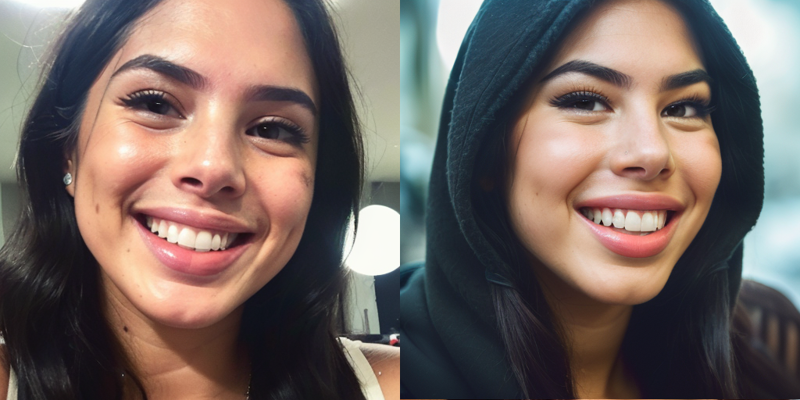

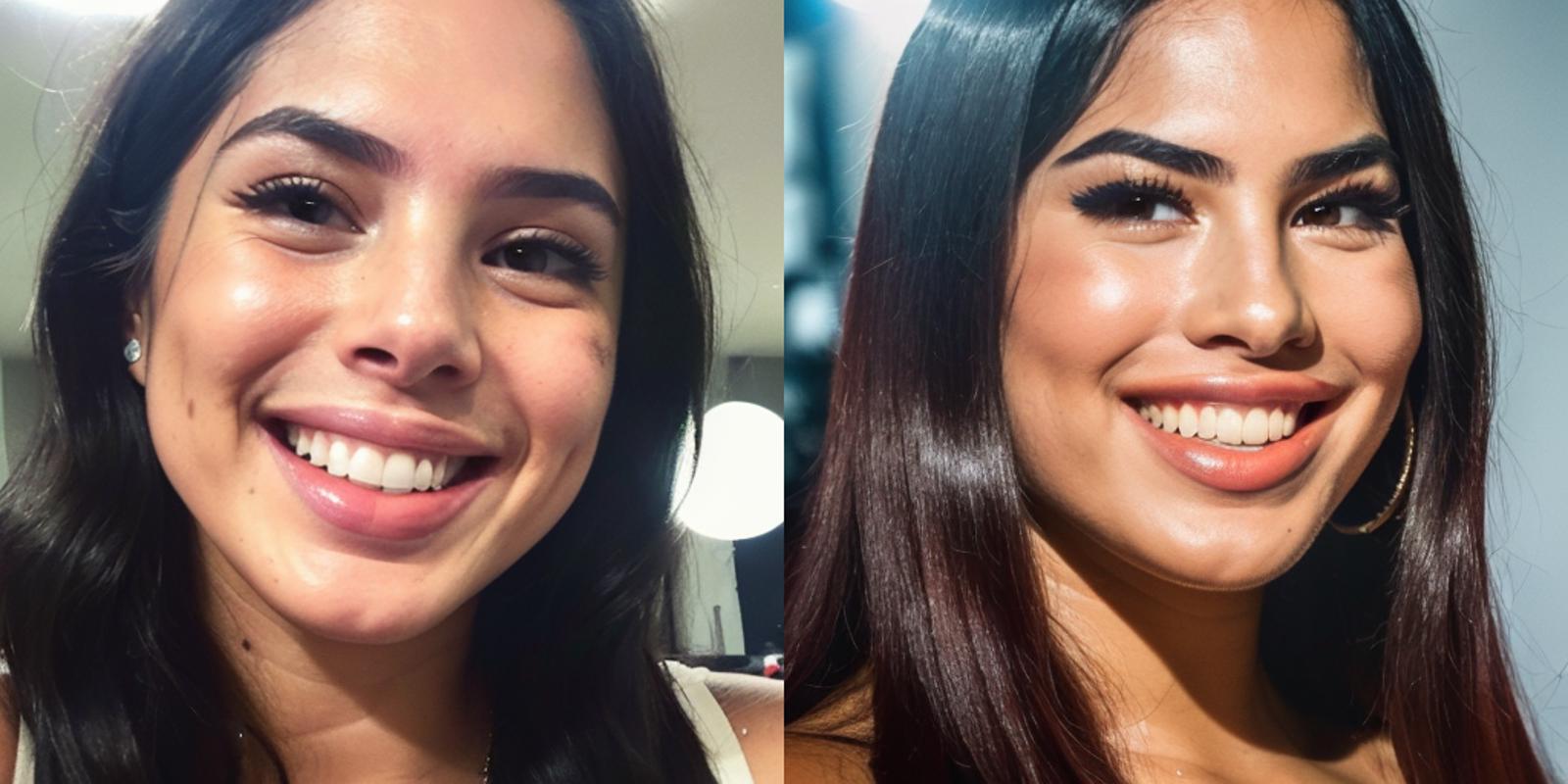

gaST vs GAst

gaST vs GAST

gast vs GAst

gaST vs gast