Intro

When I first read the Stable Diffusion paper it was intimidating: a sophisticated technology developed by geniuses with strong hardware that somehow enables us to generate images. Geez, it probably uses quantum inside. A few months later ControlNet was published, and again the internal workings were over my head, but it enabled us to guide Stable Diffusion in specific directions. Black magic! For some time I thought the eight original control nets were highly sophisticated programs, something only AI experts could develop. However, the original paper clearly states:

"The ControlNet learns task-specific conditions in an end-to-end way, and the learning is robust even when the training dataset is small (< 50k). Moreover, training a ControlNet is as fast as fine-tuning a diffusion model, and the model can be trained on a personal devices."

I'm a software developer without any deep knowledge of AI, and recently I tried to train my own control net. How hard can it be, right? It turned out to be surprisingly easy once you work around a few setup pitfalls, and I'm super thrilled how a few hours of training on 50k images with consumer hardware turn into a new functionality for Stable Diffusion. With this setup guide I want to encourage more people to try it out and maybe come up with their own ideas for a control net.

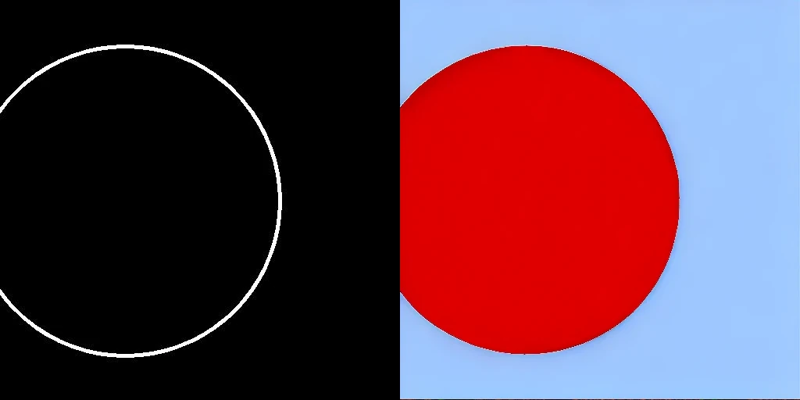

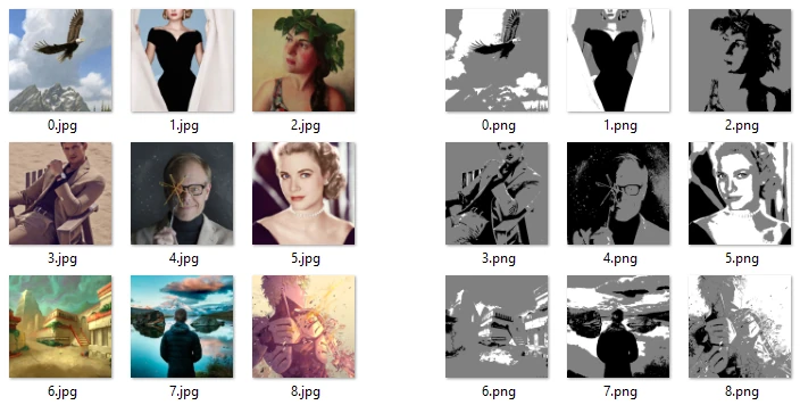

In this tutorial we are going to train a control net on white-gray-black images, with the idea of guiding Stable Diffusion towards light and dark areas to generate those squint illusion images or stylized QR codes. To do this we set up a few things, download an image dataset, convert these images to white-gray-black and let the GPU train on 50k images for over 6h. We use SD 1.5 with fp16 because it is faster, but the basic setup should apply to SD 2.x and SDXL as well. Please note that I just started out too and I have no idea what I'm doing, but it works™... so please don't ask me any detailed questions about control nets!

Remap images to white-gray-black and train the control net on them so afterwards we can reverse the process and guide Stable Diffusion generation with grayscale images

Before I started training my first control net I had a few questions in mind:

Are there any tutorials on training available? => Not many, but see References below. We are going to follow the HuggingFace ControlNet Training tutorial.

How long does it take to train a control net? => About 6h on an RTX 3060 for something usable.

Where do you get a training dataset? => One is provided and will download automatically.

How many images do you need for a working control net? => At least 25k for a proof of concept, more than 50k for something usable, and 10x-100x that for something great (Canny edge was trained on 3M images).

Can I verify the setup and training is working as soon as possible? => Yes, after about 15min, when the first checkpoint is saved.

If it takes too long, can you pause and resume it? => Yes, see QA below.

Are there any performance optimizations available to speed up training? => Yes, this is important and will be covered.

Will it work in ComfyUI or Automatic1111, and how can you test it? => A simple inference script is provided; ComfyUI: yes; Automatic1111: requires conversion.

You should also be aware that the machine learning community relies heavily on the Python ecosystem, and most tutorials expect you to write your own Python scripts with the libraries provided. This is important to know if you come from a user background and are only used to Automatic1111 or ComfyUI. That said, you should be able to set up control net training with basic commandline and programming skills. I think the ecosystem needs to mature a bit more before we get a one-click control net training UI. But I didn't have much experience either and was able to do it. You - can - too!

You can find my very first control net model here: ControlNet Mysee - Light and Dark!

Requirements

For this tutorial you need:

basic commandline and programming skills

Windows 10 (or Linux*)

Nvidia GPU with at least 12GB VRAM(!!!) (8GB is only possible on Linux right now -> DeepSpeed)

~100GB of free disk space

~30-80GB of internet downloads

~5h of your time (spread over 1-3 days)

~5-15h of compute time (using an RTX 3060)

Good mood

*) If you have Linux, by all means, use Linux! You will be happier, as most quirks in this article are not needed and you get more options for performance optimization (Triton, TorchDynamo etc.).

Prerequisites

First you need to download and install a few things. Later you are going to download a special sub-models-separated version of Stable Diffusion 1.5 (~5GB) and the laion2B-en aesthetics>=6.5 dataset (>20GB). If you have a slow internet connection you might want to start these steps as early as possible (they are marked with MEGADOWNLOAD).

1. Download the LAION parquet file (120MB) - it's a long list of URLs, captions and other metadata for images, but not the actual images (you can find it manually via: https://laion.ai/blog/laion-aesthetics -> LAION-Aesthetics V2 -> "6.5 or higher" huggingface -> https://huggingface.co/datasets/ChristophSchuhmann/improved_aesthetics_6.5plus -> Files and versions -> data/)

2. Install CUDA (3GB) -> Windows 10 -> exe (local) -> Download - it's Nvidia's GPU computing toolkit, which provides the GPU acceleration used for model training (manual MEGADOWNLOAD)

3. Install Miniconda (80MB) -> Miniconda3 Windows 64-bit - it's a minimal package manager for Python which allows us to install additional packages in virtual environments

4. Install Git (60MB) - it's the most popular software versioning tool but you only need it to download code repositories

5. Install ImageMagick CLI (40MB) -> ImageMagick-7.1.1-15-Q16-HDRI-x64-dll.exe - it's a popular commandline tool for converting images and applying filters

6. Optional - Install ParquetViewer (10MB) -> ParquetViewer.exe - it's a viewer for parquet files which are common for image datasets in the machine learning community; and .NET Runtime (60MB) -> .NET Desktop Runtime 7.0.10 - it's the runtime required to run ParquetViewer

Setup

TODO: After training some ControlNets on my own, here are some points I would do differently now, but I don't have time to update this guide:

Use virtualenv instead of conda

Use Python scripts instead of Windows Batch scripts

Use an effective batch size of at least 32

Use a simpler ControlNet for introduction like canny edge
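About the effective batch size in the list above: judging by the training log further below ("Total train batch size (w. parallel, distributed & accumulation)"), it is the per-device batch size times the gradient accumulation steps times the number of processes. A small sketch, assuming exactly that relationship, to pick --gradient_accumulation_steps for a target such as 32 (the helper names are hypothetical):

```python
import math


def effective_batch_size(train_batch_size: int, gradient_accumulation_steps: int,
                         num_processes: int = 1) -> int:
    """Number of images that contribute to one optimizer step (assumed relationship)."""
    return train_batch_size * gradient_accumulation_steps * num_processes


def accumulation_steps_for(target: int, train_batch_size: int, num_processes: int = 1) -> int:
    """Smallest --gradient_accumulation_steps that reaches at least `target`."""
    per_step = train_batch_size * num_processes
    return math.ceil(target / per_step)


if __name__ == "__main__":
    # Settings of the "First launch" command below: batch 1, accumulation 4, one GPU.
    print(effective_batch_size(1, 4))       # 4
    # Reaching an effective batch size of 32 with --train_batch_size=1 on one GPU:
    print(accumulation_steps_for(32, 1))    # 32
```

With --train_batch_size=1 on one GPU that means --gradient_accumulation_steps=32. The number of optimization steps per 50k-image epoch then drops from 12500 to 1563, so the step counts quoted later in this article aren't directly comparable.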

We are going to set up the control net training script from HuggingFace first. HuggingFace has become somewhat of a household name in the Stable Diffusion community for providing online storage space for models. What most people don't know is that they also develop software libraries for machine learning. One of their projects is called "Diffusers", a library for working with diffusion models, maintained by their "Diffusers team". Conveniently, they also provide the example image set from the original ControlNet, which is intended to guide Stable Diffusion to draw "colored circles".

If you happen to stumble over an error which is not handled in the step-by-step guide you may refer to the "Errors and solutions" or "Questions and answers" section below!

Guiding Stable Diffusion to draw colored circles is what the community needs most right now!

Diffusers train_controlnet.py

Start the Anaconda shell by typing miniconda into the Windows Start menu. First we create a conda environment for our Python packages so they don't pollute our system (you only have to do this once) (for example console output, see: 00 conda update.txt, 01 conda create.txt and 02 conda install pip.txt):

conda update -n base -c defaults conda

conda create -n controlnet

conda install pip

Then you have to activate the environment you just created (you have to do this every time you restart the Anaconda shell) (for the example console output, see conda activate.txt):

conda activate controlnet

Following the HuggingFace ControlNet Training tutorial, we set up diffusers, which you need for control net training, and the accelerate config, which you need for performance optimization (you only have to do this once) (MEGADOWNLOAD) (for example console output, see: 03 git clone diffusers.txt, 04 pip install diffusers.txt, 05 pip install controlnet.txt and 06 accelerate config.txt, respectively):

git clone https://github.com/huggingface/diffusers

cd diffusers

pip install -e .

cd examples/controlnet

pip install -r requirements.txt

accelerate config

Answer all the questions (the defaults should be fine).

The PyTorch install will take a while and only display ... (more hidden). Just wait a couple of minutes; it will sort itself out.

If you restart the Anaconda shell at any time, you have to change the working directory to the control net example again with cd diffusers\examples\controlnet and activate the environment with conda activate controlnet!

Test launch

Next we are going to start the training to make it download some more models and libraries. Copy-paste the following command (this is just for downloading and testing) (MEGADOWNLOAD, requires Miniconda and the diffusers repo):

accelerate launch train_controlnet.py ^

--pretrained_model_name_or_path="runwayml/stable-diffusion-v1-5" ^

--output_dir="control-ini/" ^

--dataset_name="fusing/fill50k"

Press [Enter]!

Hint: The ^ at the end of each line is the line continuation character of the Windows Command Prompt (PowerShell uses a backtick instead). If the multiline command doesn't work, you can of course also write everything in one line:

accelerate launch train_controlnet.py --pretrained_model_name_or_path="runwayml/stable-diffusion-v1-5" --output_dir="control-ini/" --dataset_name="fusing/fill50k"

Output (07 train_controlnet control-ini.txt):

09/01/2023 11:44:19 - INFO - __main__ - Distributed environment: DistributedType.NO

Num processes: 1

Process index: 0

Local process index: 0

Device: cpu

Mixed precision type: no

Downloading (…)okenizer_config.json: 100%|█████████████████████████████████████████████████| 806/806 [00:00<?, ?B/s]

C:\Users\user\miniconda3\Lib\site-packages\huggingface_hub\file_download.py:133: UserWarning: `huggingface_hub` cache-system uses symlinks by default to efficiently store duplicated files but your machine does not support them in C:\Users\user\.cache\huggingface\hub. Caching files will still work but in a degraded version that might require more space on your disk. This warning can be disabled by setting the `HF_HUB_DISABLE_SYMLINKS_WARNING` environment variable. For more details, see https://huggingface.co/docs/huggingface_hub/how-to-cache#limitations.

To support symlinks on Windows, you either need to activate Developer Mode or to run Python as an administrator. In order to see activate developer mode, see this article: https://docs.microsoft.com/en-us/windows/apps/get-started/enable-your-device-for-development

warnings.warn(message)

Downloading (…)tokenizer/vocab.json: 100%|█████████████████████████████████████| 1.06M/1.06M [00:00<00:00, 2.33MB/s]

Downloading (…)tokenizer/merges.txt: 100%|███████████████████████████████████████| 525k/525k [00:00<00:00, 1.58MB/s]

Downloading (…)cial_tokens_map.json: 100%|█████████████████████████████████████████████████| 472/472 [00:00<?, ?B/s]

Downloading (…)_encoder/config.json: 100%|█████████████████████████████████████████████████| 617/617 [00:00<?, ?B/s]

You are using a model of type clip_text_model to instantiate a model of type . This is not supported for all configurations of models and can yield errors.

Downloading (…)cheduler_config.json: 100%|█████████████████████████████████████████████████| 308/308 [00:00<?, ?B/s]

{'clip_sample_range', 'sample_max_value', 'prediction_type', 'dynamic_thresholding_ratio', 'thresholding', 'timestep_spacing', 'variance_type'} was not found in config. Values will be initialized to default values.

Downloading model.safetensors: 100%|█████████████████████████████████████████████| 492M/492M [00:37<00:00, 13.2MB/s]

Downloading (…)main/vae/config.json: 100%|█████████████████████████████████████████████████| 547/547 [00:00<?, ?B/s]

Downloading (…)ch_model.safetensors: 100%|███████████████████████████████████████| 335M/335M [00:25<00:00, 13.1MB/s]

{'force_upcast', 'scaling_factor'} was not found in config. Values will be initialized to default values.

Downloading (…)ain/unet/config.json: 100%|█████████████████████████████████████████████████| 743/743 [00:00<?, ?B/s]

Downloading (…)ch_model.safetensors: 100%|█████████████████████████████████████| 3.44G/3.44G [05:00<00:00, 11.4MB/s]

{'encoder_hid_dim', 'projection_class_embeddings_input_dim', 'cross_attention_norm', 'time_embedding_act_fn', 'conv_in_kernel', 'resnet_skip_time_act', 'num_class_embeds', 'conv_out_kernel', 'class_embeddings_concat', 'mid_block_only_cross_attention', 'timestep_post_act', 'transformer_layers_per_block', 'addition_embed_type_num_heads', 'only_cross_attention', 'addition_time_embed_dim', 'resnet_time_scale_shift', 'mid_block_type', 'time_cond_proj_dim', 'time_embedding_type', 'attention_type', 'num_attention_heads', 'addition_embed_type', 'class_embed_type', 'time_embedding_dim', 'use_linear_projection', 'dual_cross_attention', 'resnet_out_scale_factor', 'encoder_hid_dim_type', 'upcast_attention'} was not found in config. Values will be initialized to default values.

09/01/2023 11:50:28 - INFO - __main__ - Initializing controlnet weights from unet

Downloading builder script: 100%|██████████████████████████████████████████████████████| 2.88k/2.88k [00:00<?, ?B/s]

Downloading data: 100%|████████████████████████████████████████████████████████| 7.00M/7.00M [00:00<00:00, 7.77MB/s]

Downloading data: 100%|██████████████████████████████████████████████████████████| 109M/109M [00:08<00:00, 12.6MB/s]

Downloading data: 100%|██████████████████████████████████████████████████████████| 123M/123M [00:09<00:00, 12.9MB/s]

Generating train split: 50000 examples [03:35, 231.67 examples/s]

09/01/2023 11:55:18 - INFO - __main__ - ***** Running training *****

09/01/2023 11:55:18 - INFO - __main__ - Num examples = 50000

09/01/2023 11:55:18 - INFO - __main__ - Num batches each epoch = 12500

09/01/2023 11:55:18 - INFO - __main__ - Num Epochs = 1

09/01/2023 11:55:18 - INFO - __main__ - Instantaneous batch size per device = 4

09/01/2023 11:55:18 - INFO - __main__ - Total train batch size (w. parallel, distributed & accumulation) = 4

09/01/2023 11:55:18 - INFO - __main__ - Gradient Accumulation steps = 1

09/01/2023 11:55:18 - INFO - __main__ - Total optimization steps = 12500

Steps: 0%| | 0/12500 [00:00<?, ?it/s]

Yeah, it's doing something! Press Control+C to stop it now! The following just happened:

Stable Diffusion 1.5 with sub-models separated was downloaded to %USERPROFILE%\.cache\huggingface\hub\models--runwayml--stable-diffusion-v1-5

The fusing/fill50k example training set was downloaded to %USERPROFILE%\.cache\huggingface\datasets\downloads\extracted

Your control net directory was created in %USERPROFILE%\diffusers\examples\controlnet\control-ini, but it is empty right now

Your body produced endorphins

Missing packages

You may have noticed a few things. Why does it say Device: cpu when you selected the GPU in accelerate config, and does it really take 566 hours (roughly 24 days, more than three weeks)? The reason is that the installed PyTorch package isn't compiled with CUDA, so the script falls back to the CPU, and you haven't specified additional optimizations in the arguments yet. As I wrote earlier, it took only 6h on my RTX 3060. Install PyTorch with CUDA by going to https://pytorch.org/get-started/locally -> Stable -> Windows -> Conda -> Python -> CUDA (latest) and copy-paste the command into your Anaconda shell (you only have to do this once) (for example console output, see: 08 conda install pytorch-cuda.txt):

conda install pytorch torchvision torchaudio pytorch-cuda=11.8 -c pytorch -c nvidia

You also need to install a few more things, otherwise you get strange error messages (you only have to do this once):

conda install chardet cchardet

pip install xformers bitsandbytes-windows

(I filed a bug report with HuggingFace.)

TODO: I got reports from users that xformers is not working for them. I still have to sort this out.
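Before launching again, you can check whether the newly installed PyTorch build actually sees your GPU, which is what the Device: cpu line in the log above is about. A minimal sketch (the file name check_cuda.py is just a suggestion); run it with python check_cuda.py inside the activated controlnet environment:

```python
"""Quick check whether the installed PyTorch build can use the GPU."""


def describe_torch_device() -> str:
    # Import lazily so the script still reports something if torch is missing.
    try:
        import torch
    except ImportError:
        return "torch not installed"
    if torch.cuda.is_available():
        name = torch.cuda.get_device_name(0)
        return f"cuda ({name}, torch {torch.__version__}, CUDA {torch.version.cuda})"
    # torch.version.cuda is None for CPU-only builds.
    return f"cpu (torch {torch.__version__}, CUDA build: {torch.version.cuda})"


if __name__ == "__main__":
    print(describe_torch_device())
```

If it reports cpu together with CUDA build: None, the CPU-only PyTorch build is still the one installed in this environment.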

First launch

Next we are going to start the training again, this time with the full command and all optimizations active. These are the recommended arguments for a GPU with 12GB VRAM (if you have a different setup, consult the HuggingFace ControlNet Training documentation and scroll all the way down). Start the optimized control net training:

accelerate launch train_controlnet.py ^

--pretrained_model_name_or_path="runwayml/stable-diffusion-v1-5" ^

--output_dir="control-ini/" ^

--dataset_name="fusing/fill50k" ^

--mixed_precision="fp16" ^

--resolution=512 ^

--learning_rate=1e-5 ^

--train_batch_size=1 ^

--gradient_accumulation_steps=4 ^

--gradient_checkpointing ^

--use_8bit_adam ^

--enable_xformers_memory_efficient_attention ^

--set_grads_to_none

Output (for example console output, see: 09 train_controlnet cuda.txt):

Num processes: 1

Process index: 0

Local process index: 0

Device: cuda

Mixed precision type: fp16

You are using a model of type clip_text_model to instantiate a model of type . This is not supported for all configurations of models and can yield errors.

{'sample_max_value', 'prediction_type', 'thresholding', 'clip_sample_range', 'timestep_spacing', 'dynamic_thresholding_ratio', 'variance_type'} was not found in config. Values will be initialized to default values.

{'scaling_factor', 'force_upcast'} was not found in config. Values will be initialized to default values.

{'only_cross_attention', 'addition_embed_type', 'timestep_post_act', 'num_attention_heads', 'time_cond_proj_dim', 'time_embedding_type', 'attention_type', 'mid_block_only_cross_attention', 'encoder_hid_dim', 'class_embed_type', 'conv_out_kernel', 'mid_block_type', 'upcast_attention', 'resnet_skip_time_act', 'time_embedding_act_fn', 'conv_in_kernel', 'addition_time_embed_dim', 'projection_class_embeddings_input_dim', 'dual_cross_attention', 'resnet_out_scale_factor', 'use_linear_projection', 'resnet_time_scale_shift', 'addition_embed_type_num_heads', 'class_embeddings_concat', 'cross_attention_norm', 'encoder_hid_dim_type', 'time_embedding_dim', 'transformer_layers_per_block', 'num_class_embeds'} was not found in config. Values will be initialized to default values.

09/01/2023 14:16:30 - INFO - __main__ - Initializing controlnet weights from unet

09/01/2023 14:16:33 - WARNING - xformers - A matching Triton is not available, some optimizations will not be enabled.

Error caught was: No module named 'triton'

===================================BUG REPORT===================================

Welcome to bitsandbytes. For bug reports, please submit your error trace to: https://github.com/TimDettmers/bitsandbytes/issues

================================================================================

binary_path: %USERPROFILE%\miniconda3\envs\controlnet\Lib\site-packages\bitsandbytes\cuda_setup\libbitsandbytes_cuda116.dll

CUDA SETUP: Loading binary %USERPROFILE%\miniconda3\envs\controlnet\Lib\site-packages\bitsandbytes\cuda_setup\libbitsandbytes_cuda116.dll...

09/01/2023 14:16:36 - INFO - __main__ - ***** Running training *****

09/01/2023 14:16:36 - INFO - __main__ - Num examples = 50000

09/01/2023 14:16:36 - INFO - __main__ - Num batches each epoch = 50000

09/01/2023 14:16:36 - INFO - __main__ - Num Epochs = 1

09/01/2023 14:16:36 - INFO - __main__ - Instantaneous batch size per device = 1

09/01/2023 14:16:36 - INFO - __main__ - Total train batch size (w. parallel, distributed & accumulation) = 4

09/01/2023 14:16:36 - INFO - __main__ - Gradient Accumulation steps = 4

09/01/2023 14:16:36 - INFO - __main__ - Total optimization steps = 12500

Steps: 0%| | 1/12500 [00:06<15:44:50, 4.54s/it, loss=0.0224, lr=1e-5]

Device: cuda, yeah! But 15h? The time estimate should drop to about 6h after a short warmup period. We also set --mixed_precision="fp16", which roughly halves the training time (and overrides the setting in accelerate config); in my experience it works just as well, with no side effects except slightly lower precision at inference. Let it compute for at least 500 steps (~15min) until it saves the first checkpoint (~2GB), so you can see what output to expect and check whether the training actually worked (if you seriously cannot wait for 500 steps, you can save checkpoints more often with the argument --checkpointing_steps=100). Run the full training if you want to try the full "color circles" example, or stop it with Control+C at any time.
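The numbers in these logs follow from simple arithmetic: 50000 examples / batch size 1 = 50000 batches, / 4 accumulation steps = 12500 optimization steps, and the remaining time is steps times seconds per step. A sketch that reproduces them (it mirrors the log values above and is not code taken from train_controlnet.py):

```python
import math


def optimization_steps(num_examples: int, train_batch_size: int,
                       gradient_accumulation_steps: int, num_epochs: int = 1) -> int:
    # Batches per epoch, then optimizer updates per epoch (both rounded up).
    batches_per_epoch = math.ceil(num_examples / train_batch_size)
    updates_per_epoch = math.ceil(batches_per_epoch / gradient_accumulation_steps)
    return updates_per_epoch * num_epochs


def eta_hours(steps: int, seconds_per_step: float) -> float:
    return steps * seconds_per_step / 3600


if __name__ == "__main__":
    steps = optimization_steps(50_000, train_batch_size=1, gradient_accumulation_steps=4)
    print(steps)                              # 12500, as in the log
    print(round(eta_hours(steps, 4.54), 1))   # 15.8 h at the first measured speed
    # Seconds per step needed for the ~6h observed after warmup:
    print(round(6 * 3600 / steps, 2))         # 1.73
```

At ~1.73s per step the first checkpoint at step 500 arrives after roughly 14-15 minutes, which matches the ~15min above.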

Output:

9/02/2023 10:23:08 - INFO - accelerate.accelerator - Saving current state to control-ini/checkpoint-500

Configuration saved in control-ini/checkpoint-500\controlnet\config.json

Model weights saved in control-ini/checkpoint-500\controlnet\diffusion_pytorch_model.safetensors

09/02/2023 10:23:55 - INFO - accelerate.checkpointing - Optimizer state saved in control-ini/checkpoint-500\optimizer.bin

09/02/2023 10:23:55 - INFO - accelerate.checkpointing - Scheduler state saved in control-ini/checkpoint-500\scheduler.bin

09/02/2023 10:23:55 - INFO - accelerate.checkpointing - Random states saved in control-ini/checkpoint-500\random_states_0.pkl

09/02/2023 10:23:55 - INFO - __main__ - Saved state to control-ini/checkpoint-500

Files:

control-ini\checkpoint-500\ (2.0GB)

control-ini\checkpoint-500\controlnet\config.json

control-ini\checkpoint-500\controlnet\diffusion_pytorch_model.safetensors (1.4GB)
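Checkpoints pile up as checkpoint-500, checkpoint-1000 and so on. If you want to grab the newest one for testing, note that a plain alphabetical sort puts checkpoint-1000 before checkpoint-500. A small stdlib sketch that picks the highest step (the function name is hypothetical; for resuming, train_controlnet.py has its own --resume_from_checkpoint argument, see python train_controlnet.py --help):

```python
import re
from pathlib import Path
from typing import Optional

CHECKPOINT_RE = re.compile(r"^checkpoint-(\d+)$")


def latest_checkpoint(output_dir: str) -> Optional[Path]:
    """Return the checkpoint-N folder with the highest N, or None if there is none."""
    root = Path(output_dir)
    if not root.is_dir():
        return None
    found = []
    for child in root.iterdir():
        match = CHECKPOINT_RE.match(child.name)
        if child.is_dir() and match:
            found.append((int(match.group(1)), child))
    if not found:
        return None
    # Compare step numbers as integers, not as strings.
    return max(found)[1]


if __name__ == "__main__":
    ckpt = latest_checkpoint("control-ini")
    if ckpt is None:
        print("No checkpoints yet")
    else:
        print(ckpt / "controlnet" / "diffusion_pytorch_model.safetensors")
```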

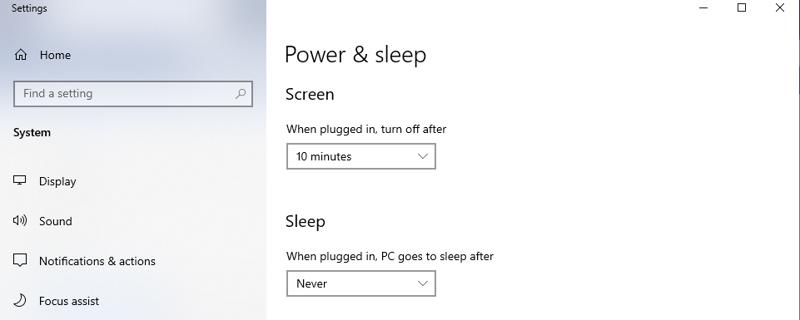

If you choose to train the full control net, you might want to turn off stand-by mode.

This concludes the setup.
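The TODO list at the start of Setup recommends Python scripts over Windows batch scripts. As a sketch of what that could look like (launch_training.py is a hypothetical file name), here is the First launch command as a Python argument list; subprocess takes care of quoting each argument, so there is no fiddling with ^ line continuations:

```python
import shutil
import subprocess
from pathlib import Path

# Arguments of the "First launch" command (12GB VRAM settings).
TRAIN_ARGS = {
    "pretrained_model_name_or_path": "runwayml/stable-diffusion-v1-5",
    "output_dir": "control-ini/",
    "dataset_name": "fusing/fill50k",
    "mixed_precision": "fp16",
    "resolution": 512,
    "learning_rate": "1e-5",
    "train_batch_size": 1,
    "gradient_accumulation_steps": 4,
}
# Flags that take no value.
TRAIN_FLAGS = [
    "gradient_checkpointing",
    "use_8bit_adam",
    "enable_xformers_memory_efficient_attention",
    "set_grads_to_none",
]


def build_command(args: dict, flags: list) -> list:
    """Turn the settings into an argument list for accelerate launch."""
    cmd = ["accelerate", "launch", "train_controlnet.py"]
    cmd += [f"--{key}={value}" for key, value in args.items()]
    cmd += [f"--{flag}" for flag in flags]
    return cmd


if __name__ == "__main__":
    command = build_command(TRAIN_ARGS, TRAIN_FLAGS)
    print(" ".join(command))
    # Only launch when run from diffusers/examples/controlnet with accelerate on PATH.
    if shutil.which("accelerate") and Path("train_controlnet.py").exists():
        result = subprocess.run(command)
        print("training exited with code", result.returncode)
    else:
        print("Run this from diffusers\\examples\\controlnet in the activated controlnet environment.")
```

Changing a setting then means editing one dictionary entry instead of a multiline batch command.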

Validation

Intro

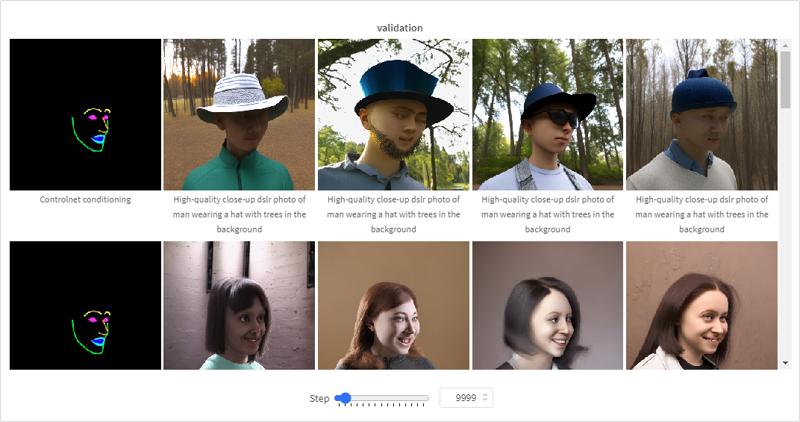

Validating that your setup works requires only the first checkpoint. Validating that your own control net idea works requires a lot more steps. We are now going to take a look at the "color circles" example to validate the training setup and discuss how many steps are required.

If you read the HuggingFace tutorial, you may have noticed that I removed the --validation_prompt and --validation_image arguments. Why? If you read the actual code of train_controlnet.py, it requires either TensorBoard or Weights & Biases to store the validation images, which is another setup step I wanted to avoid in this tutorial (see QA for how to set up TensorBoard). As of 2023-09-01 there is no option to just save the images to disk(?) (I filed a bug report with HuggingFace). Thus we have to run the inference manually.

If you have no Stable Diffusion UI, you can use the attached inference.py script. Open it in your text editor (it's not very complex), adjust the paths and run it with:

python inference.py

If you use ComfyUI, you can copy the diffusion_pytorch_model.safetensors of any checkpoint, e.g. control-ini-fp16\checkpoint-500\controlnet\diffusion_pytorch_model.safetensors, to the control net directory and try it out. It should work out of the box.

If you use Automatic1111, you need to convert the .safetensors files to .pt first (I'm sorry). See QA below!

(Note: results from inference.py and ComfyUI might differ as they use a slightly different inference architecture than Automatic1111)
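The attached inference.py isn't reproduced in this article. As an assumption of what an equivalent could look like, here is a minimal sketch based on the inference example in the HuggingFace ControlNet training documentation, using the settings from the comparison below (UniPC, 20 steps, CFG 7.5, seed 0). The conditioning image path and the output file names are placeholders:

```python
from pathlib import Path


def controlnet_dir(output_dir: str, step: int) -> Path:
    """Folder in which train_controlnet.py stores the ControlNet weights of a checkpoint."""
    return Path(output_dir) / f"checkpoint-{step}" / "controlnet"


def generate(controlnet_path: Path, conditioning_image: str, prompt: str,
             num_images: int = 1, out_prefix: str = "out") -> None:
    # Heavy imports live here so the module can be imported without torch/diffusers.
    import torch
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline, UniPCMultistepScheduler
    from diffusers.utils import load_image

    controlnet = ControlNetModel.from_pretrained(str(controlnet_path), torch_dtype=torch.float16)
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        "runwayml/stable-diffusion-v1-5", controlnet=controlnet, torch_dtype=torch.float16
    )
    pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)
    pipe.to("cuda")

    result = pipe(
        prompt,
        image=load_image(conditioning_image),
        num_inference_steps=20,
        guidance_scale=7.5,
        num_images_per_prompt=num_images,  # the comparison below used 9; lower it if VRAM runs out
        generator=torch.manual_seed(0),
    )
    for i, img in enumerate(result.images):
        img.save(f"{out_prefix}_{i:02d}.png")


if __name__ == "__main__":
    checkpoint = controlnet_dir("control-ini", 500)
    if checkpoint.is_dir():
        generate(checkpoint, "conditioning_image.png", "red circle with blue background")
    else:
        print(f"No ControlNet weights found at {checkpoint}")
```

The checkpoint's controlnet folder already contains config.json plus diffusion_pytorch_model.safetensors, the diffusers layout that ControlNetModel.from_pretrained expects.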

Color Circles example control net

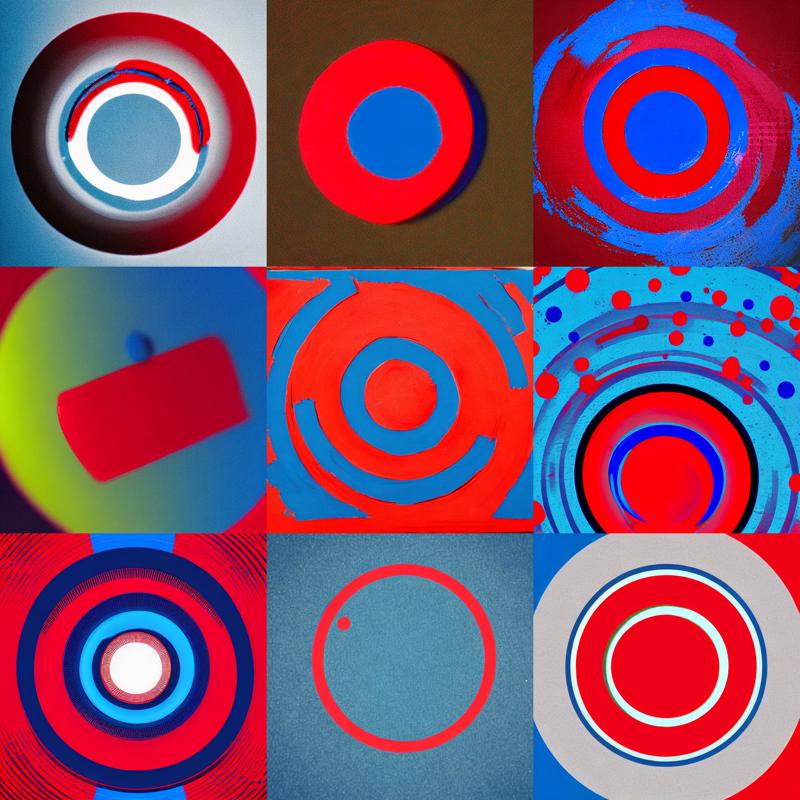

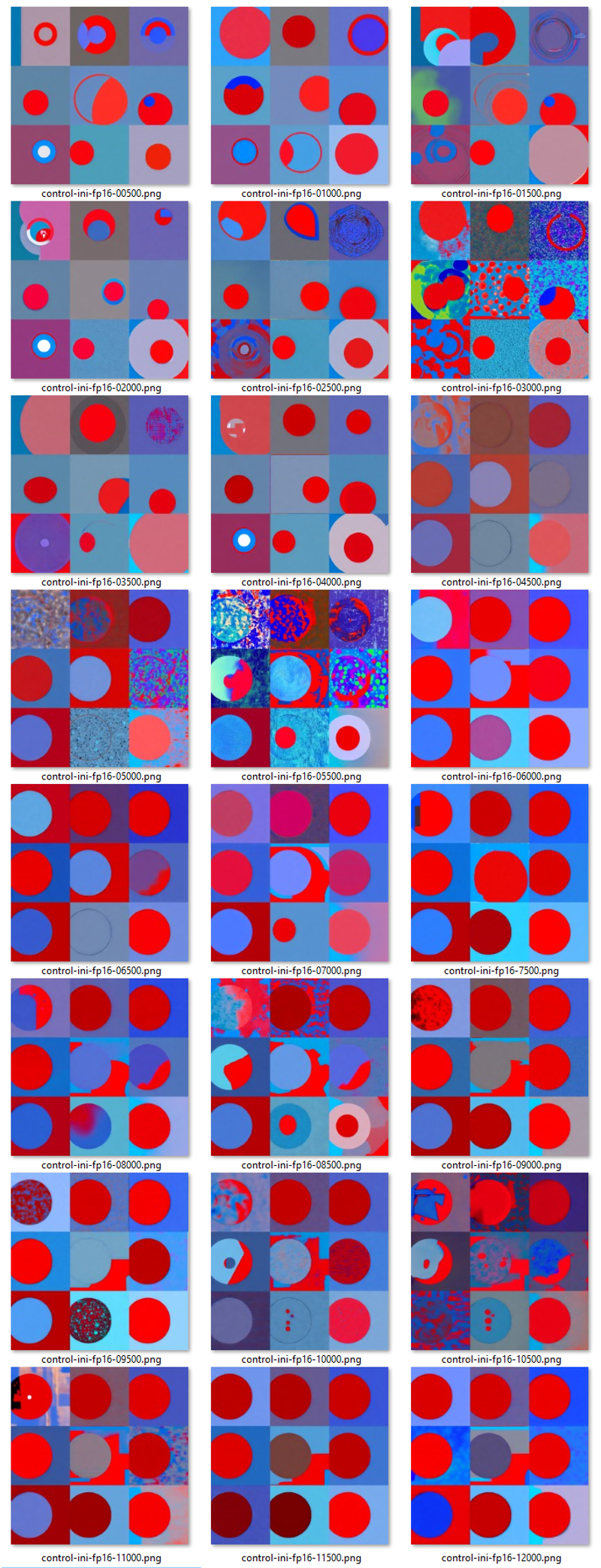

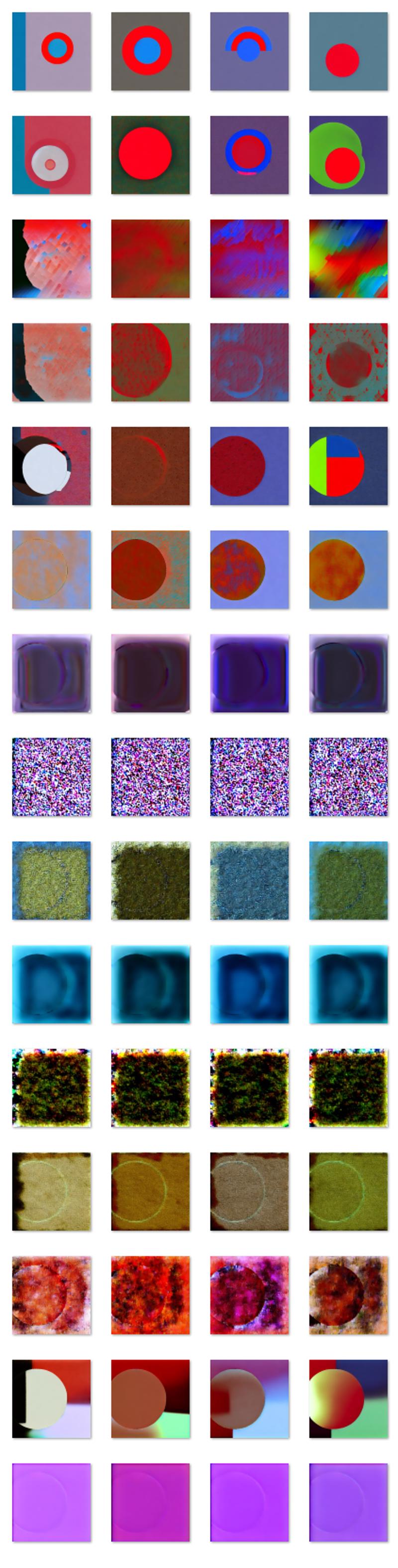

The following is based on my control-ini-fp16 color circles example control net. I used Automatic1111 with Prompt: red circle with blue background, Steps: 20, Sampler: UniPC, CFG scale: 7.5, Seed: 0, Model: v1-5-pruned-emaonly.safetensors, Batch size: 9 (to stay close to inference.py) to get the following results (you can find all images in the attached .zip):

Conditioning image:

red circle with blue background

Using NO control net:

Using control net control-ini precision=fp16 seed=0 steps=500-12000 epoch=1:

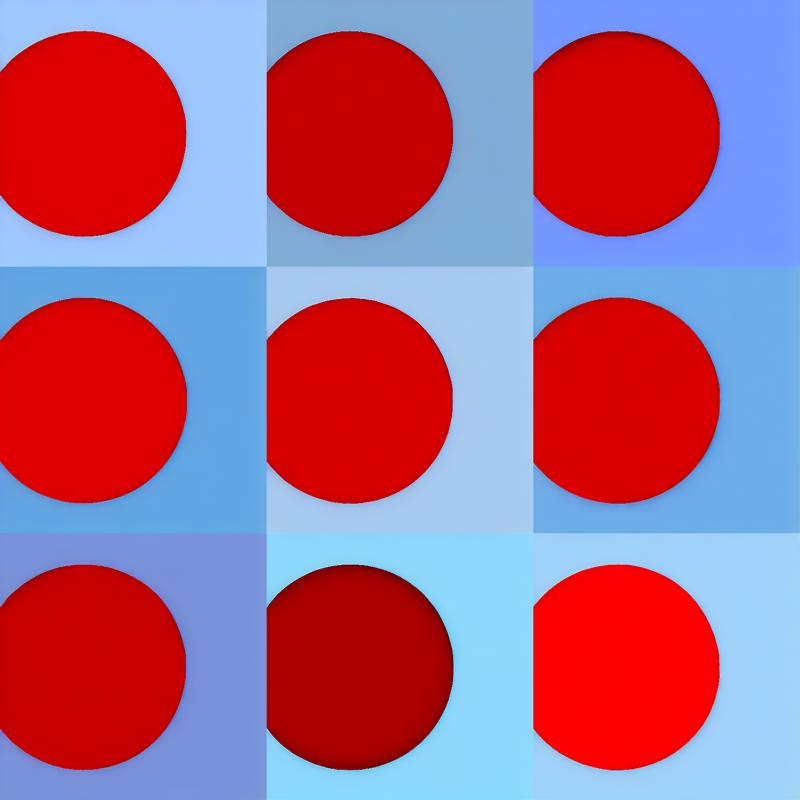

The final checkpoint (precision=fp16 steps=12500) in all its glory:

Notable steps:

00500: Even though it was only trained for 500 steps, the result is distinctly different from no control net at all. The results make sense and show no weird colors or artifacts; if they do, something is already wrong with your setup.

04500: After 4500 steps (x4 = 18000 images) Stable Diffusion already follows the position of the circle and the control net seems to work, but the colors are noisy and it still confuses foreground and background.

06000: Colors seem to become calmer.

08500: Colors seem to become noisy again(?).

12500: After 12500 steps (x4 = 50000 images) it got all colors right.

Does this mean every control net has "sudden convergence" at 4500 steps and requires exactly 12500 steps to be good? No, it highly depends on your concept and how fast the model is able to "grasp" it. These numbers are only rough estimates. The original ControlNet canny was trained on 3M images.

control-ini-fp32

And for comparison, the same final checkpoint with fp32 (you can find all images in the attached .zip). Subjectively, the fp32-12500 result looks worse than the fp16-12500 one, even though it was trained with higher precision. Why is that? This may just be a fluke of the particular seed we used for inference, or an unlucky state the fp32-12500 checkpoint happened to be in. It should get better with even more training steps, of course, but you should be careful about what you infer from early steps or unlucky generations. More precision is generally better, but it takes longer to compute.

Uncanny Faces control net

Another example from the Uncanny faces tutorial: "10k steps (batch_size=4 = 40k images) already seems to work... With just 1 epoch (so after the model "saw" 100K images), it already converged to following the poses and not overfit... At around 15K steps, it already started learning the poses. And it matured around 25K steps."

The Uncanny Faces Weights & Biases report provides a convenient overview with examples for different steps:

Failed training

I couldn't get bf16 to work even after 3 attempts. You can see the failed inferences in the attached images.zip. My GPU supports bf16. It may conflict with one of the arguments. What's also interesting is I got 3 different results even though I'm specifying a seed for training.

control-ini-bf16 slowly descending into oblivion...

Image dataset

Intro

Of course, training SD to fill circles is meaningless, but this is a successful beginning of your story.

We are now going to download an image dataset, convert these images to white-gray-black and let the GPU train on 50k images for over 6h. The idea was inspired by QR Code Monster ControlNet and all the "squint images" which are so hot right now (although I always wondered why they used stylized QR images for training when we can just convert regular images to light and dark areas using a simple filter and train on them).
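The conversion itself is done with ImageMagick in this tutorial. Purely to illustrate the idea of a simple filter that maps an image to dark, mid and light areas, here is a pure-Python sketch: it converts RGB to a gray value with the ITU-R 601 luma weights (the same weights Pillow documents for convert("L")) and snaps the result to three levels. The thresholds 85/170 and the mid-gray value 128 are my assumptions, not the values used for the actual training data:

```python
def luminance(r: int, g: int, b: int) -> int:
    """ITU-R 601 luma in integer arithmetic, result in 0..255."""
    return (299 * r + 587 * g + 114 * b) // 1000


def to_three_levels(value: int, dark: int = 85, light: int = 170) -> int:
    """Map a 0..255 gray value to black (0), gray (128) or white (255)."""
    if value < dark:
        return 0
    if value < light:
        return 128
    return 255


def remap_pixels(pixels):
    """Apply both steps to an iterable of (r, g, b) tuples."""
    return [to_three_levels(luminance(*p)) for p in pixels]


if __name__ == "__main__":
    print(remap_pixels([(0, 0, 0), (200, 30, 30), (120, 120, 120), (250, 250, 240)]))
    # [0, 0, 128, 255]
```

Note how the saturated red (200, 30, 30) ends up black: luma weights green most and red far less, so color alone does not make an area "light".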

Before I started training my first control net I had a few questions in mind:

Where do you get a big enough image dataset?

Where do you get a diverse enough image dataset?

Where do you get meaningful captions for those images?

How much disk space do you need?

And do I really have to register a HuggingFace account just to upload a test image set, only to download it again to the .cache every time I make an update?

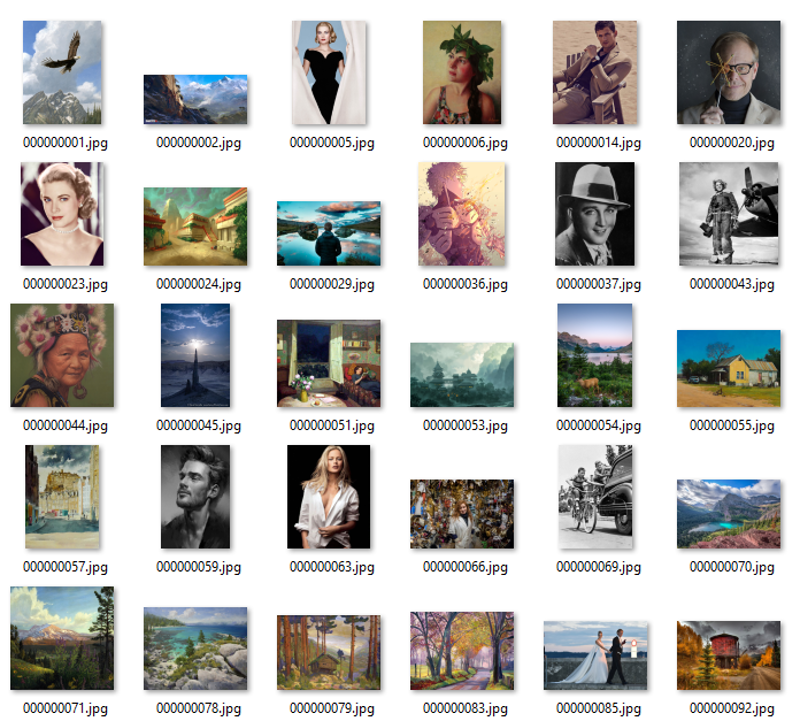

laion2b-en-aesthetics65

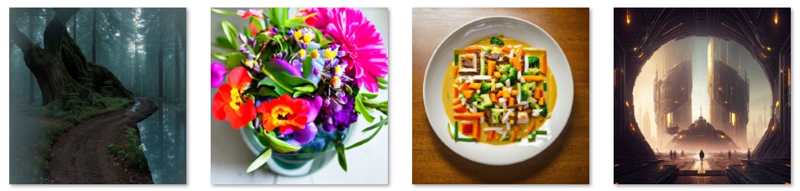

If you read the Stable Diffusion 1.5 model card, you'll see it was trained on the LAION dataset. LAION is an organization that provides different datasets for training machine learning models. One of their projects is the LAION-5B image dataset with the subsets laion2B-en (images with English captions only), laion2B-multi and laion1B-nolang (hence 2B+2B+1B=5B). The parquet files of laion2B-en alone are 6.2TB(!). That's only the URLs, not the images themselves! That's huuuge! That's also too much. Luckily the dataset was further split into smaller sets according to an estimated aesthetics score, resulting in the laion-aesthetics subsets. The biggest one, with aesthetics>=4.5, still has 1.2B entries and its parquet files are over 220GB in total. The smallest one, with aesthetics>=6.5, has 625K entries and its parquet file is about 120MB. That's enough, and it's something we can work with. Here is a taste of what you can expect from the image dataset:

Of course it also contains a few highly aesthetic pearls such as:

My mind expands into the universe by looking at the sheer beauty!

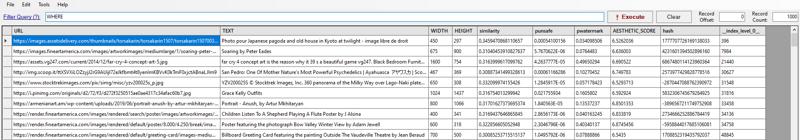

ParquetViewer

Remember the .parquet file you downloaded? It's the laion2B-en with aesthetics>=6.5 image dataset, basically a long list of URLs, captions and other metadata for images, but not the actual images. To view it you can use the ParquetViewer:

img2dataset

The ParquetViewer allows you to view the data in pages, filter for different criteria and export subsets (like "first 100 rows only"), also in different file formats (CSV etc.). However, you won't need these features, because you can filter and apply transformations with the img2dataset download tool by LAION. To install it, go back to the Anaconda shell and write:

pip install img2dataset

img2dataset is a CLI tool which can read parquet files and download a huge number of image files in parallel. You can also apply filter criteria (image size, aspect ratio etc.) and transformations (resize, crop, re-encode etc.). Make sure you read and understand all the commandline arguments on their GitHub page before running the tool!

Considering download sizes

You have to resolve the following dilemma first:

Stable Diffusion 1.5 was trained on 512x512 images, so ideally you should only use images of exactly 512x512. But there are not many images with exactly 512x512, so you have to up-scale smaller ones, down-scale larger ones, or automatically crop them, each of which introduces slight errors.

If you want to download all 625k images first without filtering out any entries you should expect ~165kB per image on average with ~15% skips (robots.txt, dead links, divine interventions etc.) which will be ~87GB of downloads and disk space!

If you filter out some entries (small images, weird aspect ratios, too large images etc.) you can get it down to ~110-180k images and ~21-63GB of downloads.

If you also apply image transformations immediately (resize, crop, re-encode), you can save a lot of disk space, with the downside that you'll lose the source images in case you want to use different sizes or croppings later. If you're thinking about training for SDXL, you can at least resize to 1024 instead.
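To get a rough feeling for these numbers before downloading anything, the filters and the size estimate can be approximated in a few lines. This is a sketch under assumptions: the filter logic is my reading of img2dataset's --min_image_size (shorter side) and --max_aspect_ratio (long side / short side) options, the WIDTH/HEIGHT column names are assumed (check them in ParquetViewer), and the parquet metadata may not match the size of the image that is actually downloaded:

```python
from pathlib import Path


def passes_filters(width: int, height: int, min_image_size: int = 512,
                   max_aspect_ratio: float = 2.0) -> bool:
    """Approximates img2dataset's --min_image_size / --max_aspect_ratio checks."""
    short_side, long_side = min(width, height), max(width, height)
    if short_side <= 0 or short_side < min_image_size:
        return False
    return long_side / short_side <= max_aspect_ratio


def estimate_download_gb(num_images: int, skip_rate: float = 0.15, avg_kb: float = 165) -> float:
    """Decimal gigabytes for the images that actually arrive (skips excluded)."""
    return num_images * (1 - skip_rate) * avg_kb / 1_000_000


def count_candidates(parquet_path: str) -> int:
    # Assumption: WIDTH and HEIGHT columns exist; needs `pip install pandas pyarrow`.
    import pandas as pd

    df = pd.read_parquet(parquet_path, columns=["WIDTH", "HEIGHT"]).dropna()
    return sum(passes_filters(int(w), int(h)) for w, h in zip(df["WIDTH"], df["HEIGHT"]))


if __name__ == "__main__":
    print(round(estimate_download_gb(625_000), 1))  # 87.7 GB without any filter
    parquet = Path("laion2b-en-aesthetics65.parquet")
    if parquet.exists():
        n = count_candidates(str(parquet))
        print(n, "rows pass the filters, ~", round(estimate_download_gb(n), 1), "GB")
```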

Considering image formats

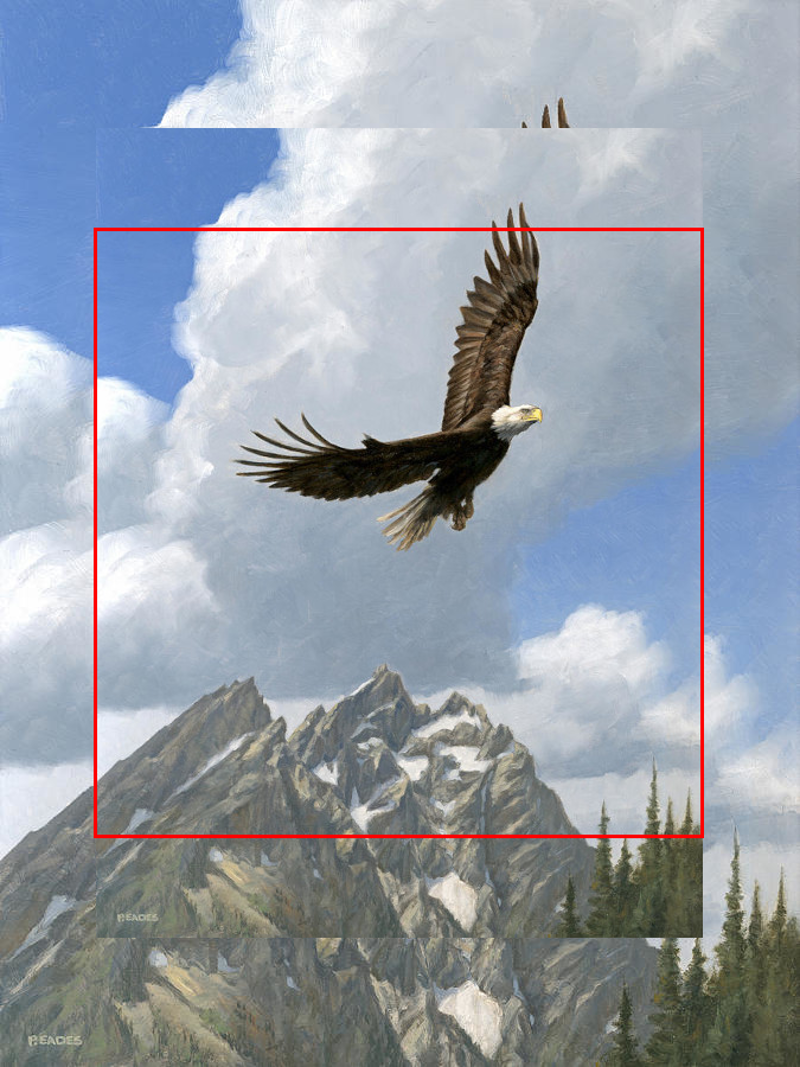

Let's say you crop every image to 512x512 (see the red rectangle in the examples)...

The first image you would get if you use no filters would be torsakarin150700324.jpg (which conveniently is also the 1st entry) and looks like this:

Notice how we introduce artifacts when up-scaling (exaggerated for dramatic effect!).

The first image you get when filtering with --min_image_size 512 would be soaring-peter-eades.jpg (which conveniently is also the 2nd entry) and looks like this:

Using --min_image_size 512 you only down-scale which is fine. The center-crop also covers a good enough part of the image.

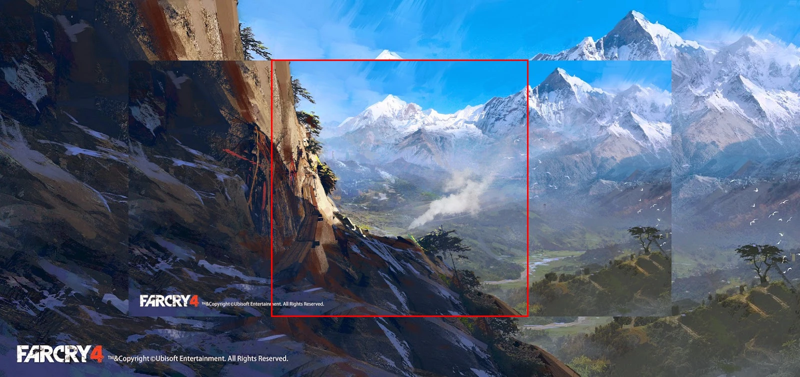

The first image you lose when filtering with --max_aspect_ratio 2 would be far-cry-4-concept-art-5.jpg (which conveniently is also the 3rd entry) and looks like this:

Notice how images with huge aspect ratios have a lot of their border area cropped which makes them a bit useless ("where is the Far Cry logo?").

That's why I chose to use --min_image_size 512 --max_aspect_ratio 2 --resize_mode="center_crop". This conveniently gives us a workable number of images, and conveniently it is also the setting Stable Diffusion 1.5 used for training. This is also the reason why so many image generations in SD come out cropped (SDXL paper: "Synthesized objects can be cropped, such as the cut-off head of the cat in the left examples for SD 1-5 and SD 2-1. An intuitive explanation for this behavior is the use of random cropping during training of the model"), see d272f3250515ae0ae4317c34afac60b7.jpg:

Notice how the head was cropped half-way. And please don't force resize without taking aspect ratio into account.
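To get a feel for how much of an image survives --resize_mode="center_crop", here is the geometry as img2dataset's README describes that mode: resize so that the shorter side equals image_size, then crop the center. The rounding below is my assumption and may differ slightly from the library; the function names are hypothetical:

```python
def center_crop_box(width: int, height: int, size: int = 512):
    """Resize so the shorter side equals `size`, then crop a centered size x size square.

    Returns (resized_width, resized_height, (left, top, right, bottom)).
    """
    short_side = min(width, height)
    new_w = round(width * size / short_side)
    new_h = round(height * size / short_side)
    left = (new_w - size) // 2
    top = (new_h - size) // 2
    return new_w, new_h, (left, top, left + size, top + size)


def kept_fraction(width: int, height: int) -> float:
    """Share of the original picture that survives the center crop."""
    return min(width, height) / max(width, height)


if __name__ == "__main__":
    print(center_crop_box(1024, 512))   # (1024, 512, (256, 0, 768, 512))
    print(kept_fraction(1024, 512))     # 0.5
    print(center_crop_box(768, 1024))   # (512, 683, (0, 85, 512, 597))
```

With --max_aspect_ratio 2, the worst case is therefore losing half of the picture, split evenly between both ends of the long side.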

Downloading images

Make sure to read and understand all img2dataset arguments before starting a huge download. Here are the most important ones:

--min_image_size 512: so you don't have to upscale for SD 1.5

--max_aspect_ratio 2: works both in landscape and portrait

--max_image_area: if you want to further reduce downloads

--image_size 512: to downscale larger images

--processes_count: set it to your number of cores (or lower if you still want to use your computer during the download)

Note: All files will be normalized to the extension .jpg (and potentially re-encoded) independent of the image's actual format (png, webp etc.). So there will only be .jpg files on your disk even if they are actually pngs (see --encode_format and --disable_all_reencoding). There will also be no .jpeg or other weird endings. Don't skip the integrity check as some images will be corrupt (most are actually html files).
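If you want a feeling for what the integrity check is about, here is a minimal stdlib-only sketch (my own, not one of the attached scripts) that sniffs the first bytes of every .jpg and flags files that are not images at all, such as HTML error pages. It assumes the img2dataset output layout with one subfolder per shard. It only checks file headers, so a truncated JPEG will still pass; for a full decode you would have to open every file with an image library such as Pillow.

```python
from pathlib import Path

# Leading bytes of image formats that can end up behind a ".jpg" name.
IMAGE_SIGNATURES = {
    b"\xff\xd8\xff": "jpeg",
    b"\x89PNG\r\n\x1a\n": "png",
    b"GIF87a": "gif",
    b"GIF89a": "gif",
}


def sniff_format(path):
    """Guess the real format of a file from its first bytes."""
    with open(path, "rb") as f:
        head = f.read(64)
    for magic, name in IMAGE_SIGNATURES.items():
        if head.startswith(magic):
            return name
    # WebP is a RIFF container with "WEBP" at byte offset 8.
    if head[:4] == b"RIFF" and head[8:12] == b"WEBP":
        return "webp"
    # Error pages usually start with "<!DOCTYPE html" or "<html".
    text = head.lstrip().lower()
    if text.startswith(b"<!doctype html") or text.startswith(b"<html"):
        return "html"
    return "unknown"


def find_suspicious_files(root):
    """Return (path, format) for every .jpg below root that is not an image."""
    suspicious = []
    for path in sorted(Path(root).rglob("*.jpg")):
        fmt = sniff_format(path)
        if fmt in ("html", "unknown"):
            suspicious.append((path, fmt))
    return suspicious
```

Something like find_suspicious_files("laion2B-en-aesthetics65") gives you a list to review before deleting anything. PNG or WebP files kept under a .jpg name (e.g. with --disable_all_reencoding) are deliberately not flagged.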

Move the .parquet file to diffusers\examples\controlnet, rename it to laion2b-en-aesthetics65.parquet and then start the download with (MEGADOWNLOAD requires Miniconda, img2dataset and the parquet file):

img2dataset ^

--url_list "./laion2b-en-aesthetics65.parquet" ^

--input_format "parquet" ^

--url_col "URL" ^

--caption_col "TEXT" ^

--output_format files ^

--output_folder laion2B-en-aesthetics65 ^

--processes_count 8 ^

--thread_count 24 ^

--min_image_size 512 ^

--max_aspect_ratio 2 ^

--image_size 512 ^

--resize_mode "center_crop"

Output:

Starting the downloading of this file

Sharding file number 1 of 1 called C:/Users/meisi/diffusers/examples/controlnet/laion2b-en-aesthetic65.parquet

0it [00:00, ?it/s]

File sharded in 64 shards

Downloading starting now, check your bandwidth speed (with bwm-ng)your cpu (with htop), and your disk usage (with iotop)!

(img2dataset won't show any progress status until it is finished, only warnings if they happen)

You should immediately see image files populating the folders; otherwise there is something wrong with your arguments. This should get you a file structure like this:

laion2B-en-aesthetics65\

00000\

000000001.jpg

000000001.json

000000001.txt

000000002.jpg

000000002.json

000000002.txt

...

00001\

000010000.jpg

000010000.json

000010000.txt

...

...

00063\

...

Finish:

total - success: 0.876 - failed to download: 0.124 - failed to resize: 0.000 - images per sec: 25 - count: 635561

In case of overeagerness

You started the download without thinking too much about the arguments and now have a mess of images?

If you skipped verification, you can use the attached img2dataset_0_verify.bat and img2dataset_0_verify2.bat. If you happened to download ALL images without any filtering, you can use the attached img2dataset_1_categorize.bat to split them apart afterwards (it splits into x0512, x0512_aspect, x0768... with the directory structure intact).

These scripts are sluggish and will take a few hours (I'm sorry but I wrote them when I was in Slowenia). Better make sure to read and understand the img2dataset arguments before downloading.

laion2b-en-aesthetics65 entries

635561 entries in total

211857 entries with min_image_size 512, of which 208140 have max_aspect_ratio 2 (useful for SD 1.5, use the SQL query WHERE WIDTH >= 512 AND HEIGHT >= 512 AND WIDTH / HEIGHT < 2 AND HEIGHT / WIDTH < 2)

83224 entries with min_image_size 768, of which 81719 have max_aspect_ratio 2 (useful for SD 2.x)

37310 entries with min_image_size 1024 of which all have max_aspect_ratio 2 (useful for SDXL)

Largest image size: 12833x5500 (__index_level_0__=14972420)
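To reproduce these counts yourself, here is the same filter as the SQL query, written as a small Python function. This is a sketch: it assumes you already have the WIDTH and HEIGHT columns of the .parquet file as pairs (for example from pandas.read_parquet, which needs pyarrow or fastparquet installed). The strict "< 2" mirrors the SQL query, so it may disagree with img2dataset's own --max_aspect_ratio check for images that are exactly 2:1.

```python
def passes_filters(width, height, min_side, max_aspect=2.0):
    """Python version of:
    WIDTH >= min_side AND HEIGHT >= min_side
    AND WIDTH / HEIGHT < max_aspect AND HEIGHT / WIDTH < max_aspect
    Missing sizes (NaN) are rejected because NaN comparisons are False.
    """
    if width < min_side or height < min_side:
        return False
    return width / height < max_aspect and height / width < max_aspect


def count_usable(sizes, min_sides=(512, 768, 1024), max_aspect=2.0):
    """Count the (width, height) pairs that pass the filter for each min side."""
    sizes = list(sizes)  # accept iterators such as zip(), we loop over it several times
    return {
        min_side: sum(1 for w, h in sizes if passes_filters(w, h, min_side, max_aspect))
        for min_side in min_sides
    }
```

With pandas this would be something like count_usable(zip(df["WIDTH"], df["HEIGHT"])) after df = pandas.read_parquet("laion2b-en-aesthetics65.parquet"). Like the list above, the 512 count includes everything from 512 upwards.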

Every entry comes with a caption; there is no entry without one. If a .txt file is empty, something went wrong (maybe an encoding error).

The hash column is useless and is not intended to verify the file (https://huggingface.co/datasets/ChristophSchuhmann/improved_aesthetics_6.5plus/discussions/3)

laion2b-en-aesthetics65 downloads

I downloaded the whole laion2b-en-aesthetics65 to collect some data.

Number of files:

| min_side | aspect < 2 | aspect > 2 | total |
|---|---|---|---|
| >= 1024 | 31152 | 603 | 31755 |
| 768 to 1023 | 38496 | 699 | 39195 |
| 512 to 767 | 110562 | 1898 | 112460 |
| < 512 | | | 369710 |
| corrupt | | | 3570 |
| SUM | | | 556690 (of 635561 entries) |

File sizes:

| min_side | aspect < 2 | aspect > 2 | total |
|---|---|---|---|
| >= 1024 | 29.95GiB | 1.02GiB | 30.96GiB |
| 768 to 1023 | 12.36GiB | 0.40GiB | 12.76GiB |
| 512 to 767 | 20.62GiB | 0.56GiB | 21.18GiB |
| < 512 | | | 22.30GiB |
| corrupt | | | 0.44GiB |
| SUM | | | 87.64GiB |

Sanitizing images

Please note that training a model requires clean, high-quality input data to get good results. The way this dataset is prepared now is okay, but not good. The captions are okay, the images are okay, the cropping is okay. But you should be aware that some captions include strange characters, and that there are duplicates, grayscale images, error images and other artifacts. After running fastdup I removed 30% of the images! Cleaning datasets deserves its own article, but this chapter is already too long and I wanted to keep it simple, so we'll leave it for now. You can find more info here and here.

Generating conditioning images

Now that you have some images, you need to generate the training dataset. Remember: we want to convert regular images to white-gray-black images to train the control net to guide Stable Diffusion to where we want light and dark areas. To do this we use ImageMagick to generate a 3-color palette and let it remap every image to the closest colors, which gives us the Mysee maps:

magick convert xc:black xc:gray50 xc:white +append palette.png

And then for the image:

magick convert lenna.png -dither None -remap palette.png lenna_mysee.png
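Under the hood, -remap with -dither None picks the closest palette color for every pixel. The following Python sketch shows that idea on a plain list of RGB tuples. It assumes a simple squared RGB distance and gray50 as #7F7F7F, so ImageMagick's own color-distance metric may give slightly different results right at the boundaries; it is meant to explain the mapping, not to replace the magick call.

```python
# The three palette colors; ImageMagick's gray50 is about 50% gray (#7F7F7F).
PALETTE = [(0, 0, 0), (127, 127, 127), (255, 255, 255)]


def nearest_color(pixel, palette=PALETTE):
    """Return the palette color with the smallest squared RGB distance."""
    r, g, b = pixel
    return min(
        palette,
        key=lambda c: (c[0] - r) ** 2 + (c[1] - g) ** 2 + (c[2] - b) ** 2,
    )


def remap(pixels, palette=PALETTE):
    """Map every pixel of a flat list of RGB tuples, without dithering."""
    return [nearest_color(p, palette) for p in pixels]
```

For example, a dark blue (10, 20, 30) becomes black, (100, 140, 120) becomes gray and (200, 200, 200) becomes white. For neutral grays the boundaries sit at a brightness of about 64 and 191.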

You can do this manually for every image, or use the attached img2dataset_3_convert.bat:

@echo off

REM Center-crop all images to 512x512 WebP in mydataset\images and remap them to the white-gray-black palette in mydataset\conditioning_images

setlocal enabledelayedexpansion

if not exist mydataset mkdir mydataset

if not exist mydataset\images mkdir mydataset\images

if not exist mydataset\conditioning_images mkdir mydataset\conditioning_images

set dir=laion2B-en-aesthetics65

set /a i=0

for /d %%d in (%dir%\*) do (

echo %%d

for %%f in (%%d\*.jpg) do (

set "filename=%%~nf"

magick convert %%d\!filename!.jpg -resize "512x512^^" -gravity center -crop 512x512+0+0 +repage mydataset\images\!i!.webp

magick convert mydataset\images\!i!.webp -dither None -remap palette.png mydataset\conditioning_images\!i!.webp

set /a i+=1

)

)

endlocal

echo Done! ... Please press the Any-key!

pause > NUL

This should give you the following file structure:

images\

0.webp

1.webp

2.webp

...

110000.webp

conditioning_images\

0.webp

1.webp

2.webp

...

110000.webp

train.jsonl

The result should look like this:

If you get high activity from Windows Defender you might consider turning Real-time protection off:

Bro, trust me! ;)
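If you are on Linux (or just prefer Python), the loop of the batch script can be sketched with subprocess. This is my own translation, not an attached script, and it assumes ImageMagick 7 (where plain magick replaces magick convert), the same palette.png and the img2dataset shard folders. Passing the arguments as a list avoids shell quoting, so the resize geometry needs a single ^ instead of the doubled ^^, which in the batch file is presumably cmd's escaping under delayed expansion. The files are sorted so the numbering is reproducible; train.jsonl has to use the same order later.

```python
import subprocess
from pathlib import Path


def build_commands(src_dir, out_dir="mydataset", palette="palette.png"):
    """Two ImageMagick calls per source image, numbered 0, 1, 2, ..."""
    src_dir, out_dir = Path(src_dir), Path(out_dir)
    commands = []
    # sorted() gives a reproducible order: shard folder first, then file name.
    for i, src in enumerate(sorted(src_dir.glob("*/*.jpg"))):
        image = out_dir / "images" / f"{i}.webp"
        conditioning = out_dir / "conditioning_images" / f"{i}.webp"
        # Resize so the shorter side is 512, then center-crop to 512x512.
        commands.append(["magick", str(src), "-resize", "512x512^",
                         "-gravity", "center", "-crop", "512x512+0+0",
                         "+repage", str(image)])
        # Remap the cropped image to the black/gray/white palette.
        commands.append(["magick", str(image), "-dither", "None",
                         "-remap", palette, str(conditioning)])
    return commands


def run_commands(commands, out_dir="mydataset"):
    """Create the output folders and run every command, stopping on errors."""
    for sub in ("images", "conditioning_images"):
        (Path(out_dir) / sub).mkdir(parents=True, exist_ok=True)
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

Usage would be run_commands(build_commands("laion2B-en-aesthetics65")). Building the command list first lets you inspect it before anything is written.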

train.jsonl

You need to prepare a train.jsonl which tells the training script where to find the images and what captions to use. Run the attached img2dataset_2_makejsonl.py with:

python img2dataset_2_makejsonl.py

It goes over all images in the laion2B-en-aesthetics65 directory, fetches the corresponding text from the .parquet file, gets rid of strange characters in the caption and writes an entry into train.jsonl. It looks like this:

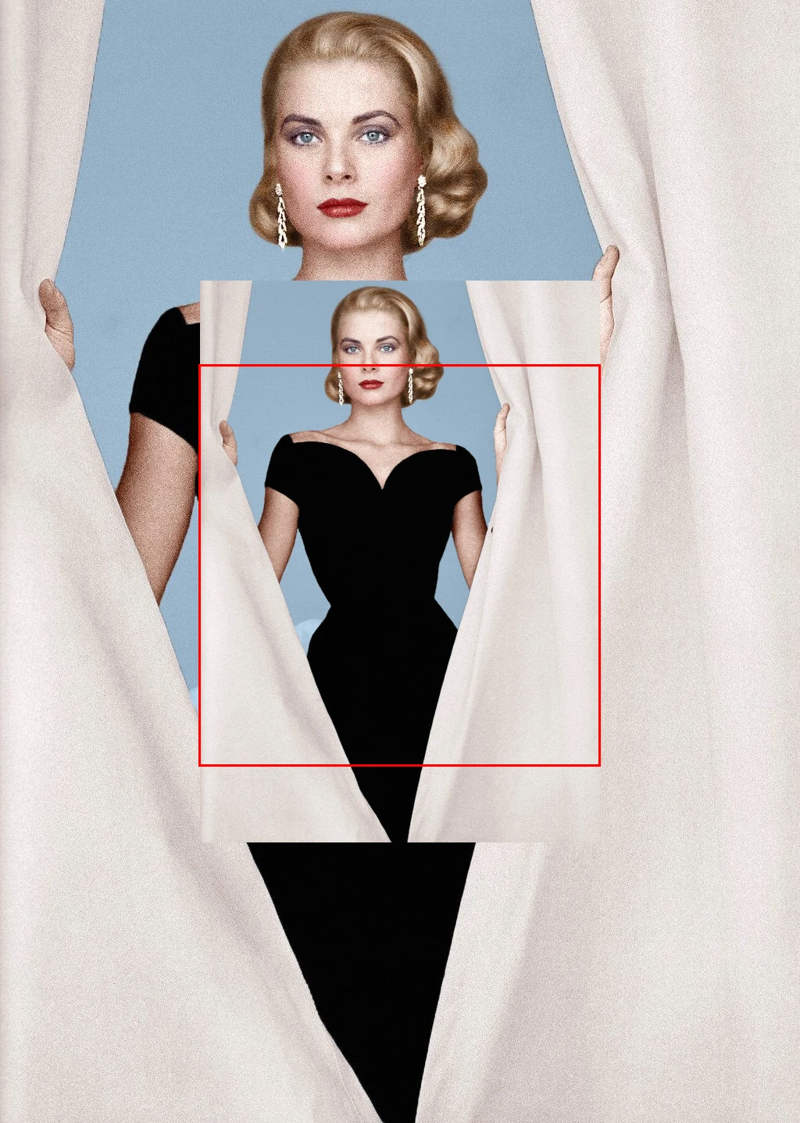

{"text": "Soaring by Peter Eades", "image": "0.webp", "conditioning_image": "0.webp"}

{"text": "Grace Kelly Outfits", "image": "1.webp", "conditioning_image": "1.webp"}

{"text": "Portrait - Anush, by Artur Mkhitaryan", "image": "2.webp", "conditioning_image": "2.webp"}

...

mydataset.py

There is one more thing you need to do before you can start training. HuggingFace's train_controlnet.py expects a dataset it can load at runtime, which you have to define manually with a Python loading script (I'm sorry!). You can use the attached COPY_TO_MYDATASET_DIR_mydataset.py: copy it to mydataset/ and rename it to mydataset.py. I adapted it from fill50k.py, removed all the download logic and pointed everything to local files. train_controlnet.py then loads this script, which is a dataset builder class with a few methods that define the split generators and yield the examples. (TODO is there seriously no easier way?)
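To make these two files a bit less magic, here is a rough sketch of what they do, with hypothetical function names (this is not the attached img2dataset_2_makejsonl.py or mydataset.py). write_jsonl writes one JSON object per line in the format shown above; clean_caption is only my guess at "getting rid of strange characters" (Unicode normalization, dropping control and zero-width characters, collapsing whitespace). Using json.dumps also escapes backslashes and quotes in captions. generate_examples mimics the shape of a fill50k-style _generate_examples; in a real loading script that method lives in a datasets.GeneratorBasedBuilder subclass next to _info and _split_generators, and the two image columns are declared as datasets.Image() features so the training script receives images rather than plain strings (presumably what goes wrong when you see 'str' object has no attribute 'convert').

```python
import json
import os
import re
import unicodedata


def clean_caption(text):
    """Normalize Unicode, drop control/zero-width characters, collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    # Keep whitespace for now (tabs, newlines), drop other "C*" category characters.
    text = "".join(ch for ch in text if ch.isspace() or not unicodedata.category(ch).startswith("C"))
    return re.sub(r"\s+", " ", text).strip()


def write_jsonl(captions, path):
    """captions[i] belongs to images/{i}.webp and conditioning_images/{i}.webp."""
    with open(path, "w", encoding="utf-8") as f:
        for i, caption in enumerate(captions):
            entry = {
                "text": clean_caption(caption),
                "image": f"{i}.webp",
                "conditioning_image": f"{i}.webp",
            }
            # json.dumps escapes backslashes and quotes, so every line stays valid JSON.
            f.write(json.dumps(entry, ensure_ascii=False) + "\n")


def generate_examples(jsonl_path, images_dir, conditioning_images_dir):
    """Yield (key, example) pairs: the caption plus path/bytes dicts for both images."""
    with open(jsonl_path, encoding="utf-8") as f:
        for line in f:
            row = json.loads(line)
            image_path = os.path.join(images_dir, row["image"])
            cond_path = os.path.join(conditioning_images_dir, row["conditioning_image"])
            with open(image_path, "rb") as img, open(cond_path, "rb") as cond:
                yield row["image"], {
                    "text": row["text"],
                    "image": {"path": image_path, "bytes": img.read()},
                    "conditioning_image": {"path": cond_path, "bytes": cond.read()},
                }
```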

The world is burning. We have too many cars already. We need to start...

TRAINING!

Now you can launch the actual training with:

accelerate launch train_controlnet.py ^

--pretrained_model_name_or_path="runwayml/stable-diffusion-v1-5" ^

--output_dir="control-mysee-fp16/" ^

--dataset_name="mydataset" ^

--mixed_precision="fp16" ^

--resolution=512 ^

--learning_rate=1e-5 ^

--train_batch_size=1 ^

--gradient_accumulation_steps=4 ^

--gradient_checkpointing ^

--use_8bit_adam ^

--enable_xformers_memory_efficient_attention ^

--set_grads_to_none

(note the --output_dir="control-mysee-fp16/" and --dataset_name="mydataset")

(Note that the batch size and gradient accumulation steps used here are not good for training quality and should be increased. Please refer to the QA section!)
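What "increase the batch size" means in numbers: the weights are only updated after train_batch_size × gradient_accumulation_steps images (times the number of GPUs), so the command above uses an effective batch size of 1 × 4 = 4, while ControlNet 1.1 was trained with 8 × 32 = 256. A small sketch of that arithmetic with my own helper names; the steps-per-epoch value is approximate because the script also rounds per dataloader batch.

```python
import math


def effective_batch_size(train_batch_size, gradient_accumulation_steps, num_processes=1):
    """Images that contribute to one optimizer step."""
    return train_batch_size * gradient_accumulation_steps * num_processes


def steps_per_epoch(num_images, train_batch_size, gradient_accumulation_steps, num_processes=1):
    """Approximate optimizer steps for one pass over the dataset."""
    batch = effective_batch_size(train_batch_size, gradient_accumulation_steps, num_processes)
    return math.ceil(num_images / batch)
```

With the command above and the ~110000 images in mydataset, steps_per_epoch(110000, 1, 4) is 27500 steps for a single pass over the data.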

Just wait for a few hours and you should get your first checkpoints:

control-mysee-fp16\

checkpoint-500\ (2.0GB)

controlnet\

config.json

diffusion_pytorch_model.safetensors (1.4GB)

...

checkpoint-1000\ (2.0GB)

checkpoint-1500\ (2.0GB)

...

So every 15 minutes this produces another 2.0GB, which fills up your disk pretty quickly; that's why you should have ~100GB of free disk space (after a few hours I ran out of disk space, and this is why my v0.1 model is the 17000-step checkpoint that it is :D). Copy the latest diffusion_pytorch_model.safetensors to the control net directory of your favorite Stable Diffusion UI, rename it to something readable like control-mysee-fp16-7000 and try it out.
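To estimate how much disk space a run needs, multiply the number of checkpoints by their size. A sketch using the numbers from the listing above (2.0GB per checkpoint, one every 500 steps); keep_last models keeping only the newest checkpoints, which recent versions of train_controlnet.py appear to support via --checkpoints_total_limit (check python train_controlnet.py --help for your version before relying on it).

```python
def checkpoint_disk_gb(total_steps, checkpointing_steps=500,
                       gb_per_checkpoint=2.0, keep_last=None):
    """Disk space used by the checkpoint-* folders after total_steps steps.

    keep_last=None keeps every checkpoint; an integer keeps only the newest ones.
    """
    checkpoints = total_steps // checkpointing_steps
    if keep_last is not None:
        checkpoints = min(checkpoints, keep_last)
    return checkpoints * gb_per_checkpoint
```

checkpoint_disk_gb(17000) gives 68.0, so a 17000-step run already needs about 68GB for checkpoints alone, while checkpoint_disk_gb(17000, keep_last=3) stays at 6.0.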

Have fun!

Afterthoughts

Here are some afterthoughts about the training dataset:

I wanted to train the control net to guide Stable Diffusion to associate white with light areas and black with dark areas. But does it actually work like this? I don't know. The control net works, however! So I guess my assumption was right.

Maybe a simple remapping to the closest color isn't a good idea. Maybe it works better with a bigger spread, i.e. semi-light and semi-dark areas should also be mapped to the neutral gray. Also, there is a perceptual difference between remapping RGB to grayscale and converting RGB to luminance.
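Both ideas are easy to try if you reduce every pixel to a single brightness value first and threshold it yourself, instead of letting -remap pick the nearest RGB color. A sketch; the thresholds 85 and 170 are my own placeholders (equal thirds of 0..255), and luma_601 applies the Rec. 601 weights to the gamma-encoded values, which is luma rather than true linear luminance.

```python
def average_gray(r, g, b):
    """Naive grayscale: all three channels count the same."""
    return (r + g + b) / 3


def luma_601(r, g, b):
    """Rec. 601 luma: green counts most and blue least, closer to perceived brightness."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def three_level(value, dark=85, light=170):
    """Map a 0..255 brightness to black (0), gray (127) or white (255).

    Everything in [dark, light) becomes gray, so a wider band sends more
    semi-light and semi-dark areas to the neutral gray.
    """
    if value < dark:
        return 0
    if value >= light:
        return 255
    return 127
```

Pure blue (0, 0, 255) averages to 85 and becomes gray, but its luma is only about 29, so it becomes black. And three_level(70) is black, while the wider band three_level(70, dark=50, light=210) makes it gray.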

How many steps do we need? Maybe the training is under-fitting or over-fitting? We should generate several test images with different checkpoints (TODO what's the fuss about wandb?).

Maybe we should randomly remove white and black areas to tell Stable Diffusion it can still produce light and dark areas wherever it wants (look at how they trained the inpainting models). Or maybe we should only use light and dark areas where there is something "interesting" going on (but what does "interesting" mean)?
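The "randomly remove areas" idea could look something like this when building the conditioning images: overwrite a random rectangle of each map with a "no preference" value. This is purely a hypothetical sketch; the conditioning map is assumed to be a list of rows of 0/127/255 values, and whether neutral gray is the right "don't care" value (it already means mid-tone in the Mysee maps) is exactly the open question.

```python
import random


def hide_random_box(cond, rng, fill=127, min_frac=0.1, max_frac=0.5):
    """Return a copy of a conditioning map (list of rows of 0/127/255 values)
    with one random rectangle overwritten by `fill`."""
    height, width = len(cond), len(cond[0])
    box_h = max(1, int(height * rng.uniform(min_frac, max_frac)))
    box_w = max(1, int(width * rng.uniform(min_frac, max_frac)))
    top = rng.randrange(height - box_h + 1)
    left = rng.randrange(width - box_w + 1)
    out = [row[:] for row in cond]  # never modify the caller's map
    for y in range(top, top + box_h):
        for x in range(left, left + box_w):
            out[y][x] = fill
    return out
```

Passing a seeded random.Random (e.g. hide_random_box(cond, random.Random(42))) keeps the generated dataset reproducible.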

Maybe we should use a more diverse image dataset, because aesthetics >= 6.5 also trains the control net on aesthetic images only?

(TODO use a bigger dataset but randomly select from the images)

And what about images like illustrations, screenshots, text, etc.?

The original ControlNet paper notes: "During the training, we randomly replace 50% text prompts with empty strings. This facilitates ControlNet's capability to recognize semantic contents from input condition maps, e.g., Canny edge maps or human scribbles, etc. This is mainly because when the prompt is not visible for the SD model, the encoder tends to learn more semantics from input control maps as a replacement for the prompt."
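If you want to try the empty-prompt trick, one way is to blank a fixed random share of the captions while writing train.jsonl. A minimal sketch with a hypothetical helper. Alternatively, the diffusers train_controlnet.py has a --proportion_empty_prompts argument that, as far as I can tell, does this on the fly during training, so a different random subset is blanked each time an example is loaded; check python train_controlnet.py --help to confirm your version has it. The sketch below fixes the choice once.

```python
import random


def drop_prompts(texts, proportion=0.5, seed=0):
    """Replace roughly `proportion` of the captions with empty strings."""
    rng = random.Random(seed)  # seeded, so the same captions are dropped every run
    return ["" if rng.random() < proportion else text for text in texts]
```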

Your own control net idea

The original control nets

I always thought the original control nets were something special and that we would stick with them for a long time.

...a few moments later...

we got ControlNet 1.1 and they came up with:

"Instruct Pix2Pix" control net - relate "a cute boy" to "make the boy cute"

"Inpaint" control net - remove random areas and relate prompts to "fill empty area"

"Tile" control net

...a few moments later...

we got ControlNet for SDXL and they came up with:

"Recolor" control net - convert photos to grayscale images and learn to reverse (so simple!)

"Sketch" control net - convert illustrations to grayscale images and learn to reverse (so simple!)

"Revision" control net

All of which were "just" a clever preparation of the right training dataset.

Take the canny edge map example: apparently they took an image dataset, applied the Canny edge filter to all images to produce the training dataset (just like we did with the remapping), and then trained the control net:

1. "look at this <canny edge map>, that's "a man with a beard", which actually looks like <real picture>"

2. "look at this <canny edge map>, that's "a man in a suit and tie", which actually looks like <real picture>"

3. "look at this <canny edge map>, that's "a cat with blue eyes in a room", which actually looks like <real picture>"

...

1500000. "look at this <canny edge map>, that's a "robot head with gears", which actually looks like <real picture>"

and then they reverse it:

"now that you got a good look at many canny edge maps, I will show you another <canny edge map>, that's "a man standing on top of a cliff", can you extrapolate what the <real picture> actually is?" and you get something close to what you want. Magic! Or basic machine learning.

Left-to-right: 1. sd_xl_base_1.0, 2. canny edge map, 3. ControlNet control-lora-canny-rank256, 4. open pose estimation, 5. ControlNet OpenPoseXL2, 6. depth map estimation, 7. ControlNet control-lora-depth-rank256

Prompt: swedish woman standing in front of a mirror, dark brown hair, white hat with purple feather, full body, photoshoot, warm light, magazine centerfold

Filter over images

We usually generate a canny edge map from an existing image to generate something similar. And we could have stopped there. Why do we need HED edge maps, which are basically the same concept? Well, with a few extra steps they synthesized "scribbles" from HED edge maps, and now we can draw scribbles from scratch to generate something similar. They also used different line filters to specialize for specific domains: Hough lines for architecture, anime line drawings for manga art. And by extension, why not specialize line drawings for other concepts, like objects or cars, or synthesize inputs with other filters, just to get a bit more precision for your use case?

...like Edge Drawing

Model over images

This "apply filter over images" workflow only works for a few concepts, of course. Some control nets required a bit more effort. Take "open pose" as an example: they used an external model that was trained to estimate poses. Like an image filter, but smart. Even though we could have used a structured data representation of poses, like "hand at x=1 y=2 z=3, foot at x=4 y=5 z=6, ...", which would be sparser, they projected it onto an RGB image. It's clear how the pose images in the training data relate to the generated images; after all, the control net just has to "connect the dots" and relate. They did something similar with the segmentations: "apply external model over images".

And we could have stopped there. Why do we need more pose control nets if they are all basically the same? Well, with a few extra steps we also get poses for hands and faces. Or we use different pose model types to specialize for specific domains:

...like Dense-poses

...or poses for quadruped animals

Higher-order concepts

Even more fascinating are the "depth map" and "normal map" control nets. They also used the "apply external model over images" approach ("Midas" in this case), but all of a sudden the control net relates 3-dimensional information to 2D images: "white is foreground-ish and black is background-ish, for whatever that means". That's mind-blowing!

Control net ideas

So here's an abstract recipe to come up with your own control net:

Can you find a 2D representation of <your concept> that expresses how you want to guide Stable Diffusion? (e.g. line drawings)

If not, can you somehow project <your concept> onto a 2D representation? (e.g. project pose data to an RGB image)

Can you find a way to automatically convert a lot of images to <your concept>? (e.g. apply filter over images)

If not, maybe you can find a big enough image dataset which already has <your concept> (e.g. anime drawings)

If not, maybe you can find an external model which can make good estimates of <your concept> (e.g. Midas for depth map estimation)

If not, maybe you can find an algorithm to synthesize <your concept> (e.g. uncanny faces from 3D models)

Can <your concept> be meaningfully reversed to a realistic image? (e.g. "relate line drawings to images", as opposed to "project DNA sequences to RGB images and relate to human photo"... which would be a bit far-fetched I think... but then again, who knows what our AI overlords are capable of... haha, oh my god we are doomed!)

Check image filter databases (e.g. GIMP, ImageMagick, ImageMagick examples)

Check through AI models for estimations (e.g. Midas, open pose)

Think out of the box (e.g. 3D movies to depth maps, computer games to X maps)

What else do we have?

Get creative!

More ideas

Error messages and solutions

Generally: try to read the output and the stack trace. It takes a while to get into it, but the messages are usually helpful!

.

Training setup

The following values were not passed to `accelerate launch` and had defaults used instead:

`--num_processes` was set to a value of `0`

`--num_machines` was set to a value of `1`

`--mixed_precision` was set to a value of `'no'`

`--dynamo_backend` was set to a value of `'no'`

To avoid this warning pass in values for each of the problematic parameters or run `accelerate config`.

Run accelerate config first.

.

usage: train_controlnet.py [-h] --pretrained_model_name_or_path PRETRAINED_MODEL_NAME_OR_PATH

...

train_controlnet.py: error: the following arguments are required: --pretrained_model_name_or_path

Traceback (most recent call last):

File "<frozen runpy>", line 198, in _run_module_as_main

File "<frozen runpy>", line 88, in _run_code

File "%USERPROFILE%\miniconda3\Scripts\accelerate.exe\__main__.py", line 7, in <module>

File "%USERPROFILE%\miniconda3\Lib\site-packages\accelerate\commands\accelerate_cli.py", line 45, in main

args.func(args)

File "%USERPROFILE%\miniconda3\Lib\site-packages\accelerate\commands\launch.py", line 986, in launch_command

simple_launcher(args)

File "%USERPROFILE%\miniconda3\Lib\site-packages\accelerate\commands\launch.py", line 628, in simple_launcher

raise subprocess.CalledProcessError(returncode=process.returncode, cmd=cmd)

subprocess.CalledProcessError: Command '['%USERPROFILE%\\miniconda3\\python.exe', 'train_controlnet.py', '\\']' returned non-zero exit status 2.

The command-line multi-line separators may be wrong. Replace the \ characters in your command line with ^! The multi-line separator \ is used in Linux shells, whereas the Windows shell uses ^. Maybe you also forgot to add a separator midway when copy-pasting command arguments.

.

UserWarning: `huggingface_hub` cache-system uses symlinks by default to efficiently store duplicated files but your machine does not support them in %USERPROFILE%\.cache\huggingface\hub. Caching files will still work but in a degraded version that might require more space on your disk.

You can ignore it. TODO

.

Error caught was: No module named 'triton'

The Python package triton is not installed. You can ignore it or see QA below.

.

ModuleNotFoundError: No module named 'chardet'

ModuleNotFoundError: No module named 'cchardet'

...

ImportError: cannot import name 'COMMON_SAFE_ASCII_CHARACTERS' from 'charset_normalizer.constant' (%USERPROFILE%\miniconda3\envs\controlnet\Lib\site-packages\charset_normalizer\constant.py)

Run conda install chardet cchardet.

.

ValueError: xformers is not available. Make sure it is installed correctly

Run pip install xformers. TODO provide conda workflow

.

PackagesNotFoundError: The following packages are not available from current channels:

- xformers

Use pip install xformers instead of conda. TODO provide conda workflow

.

ModuleNotFoundError: No module named 'bitsandbytes'

Run pip install bitsandbytes-windows. TODO provide conda workflow

.

{'sample_max_value', 'prediction_type', 'dynamic_thresholding_ratio', 'timestep_spacing', 'clip_sample_range', 'thresholding', 'variance_type'} was not found in config. Values will be initialized to default values.

{'force_upcast', 'scaling_factor'} was not found in config. Values will be initialized to default values.

{'addition_embed_type', 'time_embedding_dim', 'upcast_attention', 'mid_block_only_cross_attention', 'num_attention_heads', 'dual_cross_attention', 'conv_out_kernel', 'cross_attention_norm', 'projection_class_embeddings_input_dim', 'use_linear_projection', 'conv_in_kernel', 'time_embedding_act_fn', 'resnet_skip_time_act', 'transformer_layers_per_block', 'mid_block_type', 'encoder_hid_dim_type', 'resnet_time_scale_shift', 'resnet_out_scale_factor', 'class_embed_type', 'num_class_embeds', 'addition_embed_type_num_heads', 'attention_type', 'timestep_post_act', 'time_embedding_type', 'time_cond_proj_dim', 'class_embeddings_concat', 'addition_time_embed_dim', 'only_cross_attention', 'encoder_hid_dim'} was not found in config. Values will be initialized to default values.

TODO I don't know, but you can ignore it.

.

RuntimeError:

CUDA Setup failed despite GPU being available. Please run the following command to get more information:

python -m bitsandbytes

Inspect the output of the command and see if you can locate CUDA libraries. You might need to add them

to your LD_LIBRARY_PATH. If you suspect a bug, please take the information from python -m bitsandbytes

and open an issue at: https://github.com/TimDettmers/bitsandbytes/issues

You installed the bitsandbytes version for Linux. Use pip install bitsandbytes-windows instead (dumb, I know). TODO provide conda workflow

.

You are using a model of type clip_text_model to instantiate a model of type . This is not supported for all configurations of models and can yield errors.

TODO I don't know

.

assert "source" in options and options["source"] is not None

This happened to me on Linux when using TorchDynamo with --gradient_checkpointing. Disable either TorchDynamo or, if you have enough VRAM, --gradient_checkpointing. See https://github.com/pytorch/pytorch/issues/97077

.

Error: validation_image and validation_prompt calculate something but don't produce any output.

TODO didn't work for me. You can ignore it and generate images with inference.py or a Stable Diffusion UI yourself.

.

ValueError: Using fp8 precision requires transformer_engine to be installed.

You would need to install TransformerEngine, but it's only available on Linux right now. Change the precision back to something else.

.

CUDA SETUP: Loading binary %USERPROFILE%\miniconda3\envs\controlnet\Lib\site-packages\bitsandbytes\cuda_setup\libbitsandbytes_cuda116.dll...

Copy the mydataset.py to mydataset\

.

File "%USERPROFILE%\diffusers\examples\controlnet\train_controlnet.py", line 677, in <listcomp>

images = [image.convert("RGB") for image in examples[image_column]]

^^^^^^^^^^^^^

AttributeError: 'str' object has no attribute 'convert'

Something went wrong when generating the train splits with mydataset.py, probably due to incorrect paths. Did you copy the mydataset.py to mydataset/? If you fixed it, you may have to delete %USERPROFILE%\.cache\huggingface\datasets\mydataset and try again. TODO delete really necessary?

.

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 10.00 MiB (GPU 0; 12.00 GiB total capacity; 24.86 GiB already allocated; 0 bytes free; 25.45 GiB reserved in total by PyTorch)

You are out of VRAM. Make sure you applied all memory optimizations. Otherwise there is not much you can do except buy a GPU with more VRAM. Alternatively you can switch to Linux and use DeepSpeed. See HuggingFace - Methods and tools for efficient training on a single GPU for your options.

.

Unknown escape sequence

There are backslashes in your text columns, which are interpreted as escape sequences. Either remove them with a text replace or add another backslash to escape them. Note that removing them changes the meaning of the caption slightly.

.

Automatic1111

ERROR: ControlNet cannot find model config [C:\%USERPROFILE%\dev\stable-diffusion-webui\models\ControlNet\control-ini-fp16-500.yaml]

ERROR: ControlNet will use a WRONG config [C:\%USERPROFILE%\dev\stable-diffusion-webui\extensions\sd-webui-controlnet\models\cldm_v15.yaml] to load your model.

ERROR: The WRONG config may not match your model. The generated results can be bad.

ERROR: You are using a ControlNet model [control-ini-fp16-500] without correct YAML config file.

ERROR: The performance of this model may be worse than your expectation.

ERROR: If this model cannot get good results, the reason is that you do not have a YAML file for the model.

Solution: Please download YAML file, or ask your model provider to provide [C:\%USERPROFILE%\dev\stable-diffusion-webui\models\ControlNet\control-ini-fp16-500.yaml] for you to download.

Hint: You can take a look at [C:\%USERPROFILE%\dev\stable-diffusion-webui\extensions\sd-webui-controlnet\models] to find many existing YAML files.

You can ignore it, or copy cldm_v15.yaml and rename it after your control net to get rid of the message. The control net extension uses the default cldm_v15.yaml for every control net anyway. Also see the settings of the control net extension.

.

RuntimeError: Error(s) in loading state_dict for ControlNet:

Missing key(s) in state_dict: "time_embed.0.weight", ...

...

Unexpected key(s) in state_dict: "controlnet_cond_embedding.blocks.0.bias", ...

...

omegaconf.errors.ConfigAttributeError: Missing key params

full_key: model.params

object_type=dict

You need to convert the .safetensors files to .pth first. See QA below.

.

modules.devices.NansException: A tensor with all NaNs was produced in Unet. This could be either because there's not enough precision to represent the picture, or because your video card does not support half type. Try setting the "Upcast cross attention layer to float32" option in Settings > Stable Diffusion or using the --no-half commandline argument to fix this. Use --disable-nan-check commandline argument to disable this check.

TODO This happened when using a bf16 control net. I had to start with --no-half but still couldn't get it to work.

.

FileNotFoundError: [Errno 2] No usable temporary directory found in ['%USERPROFILE%\\AppData\\Local\\Temp', '%USERPROFILE%\\AppData\\Local\\Temp', '%USERPROFILE%\\AppData\\Local\\Temp', 'C:\\WINDOWS\\Temp', 'c:\\temp', 'c:\\tmp', '\\temp', '\\tmp', '%USERPROFILE%\\diffusers\\examples\\controlnet']

You are out of disk space.

.

inference.py

ValueError: Unable to locate the file ./control-ini-fp16/checkpoint-500/controlnet/config.json which is necessary to load this pretrained model. Make sure you have saved the model properly.

OSError: Unable to load weights from checkpoint file for './control-ini-fp16/checkpoint-500/controlnet/config.json' at './control-ini-fp16/checkpoint-500/controlnet/config.json'. If you tried to load a PyTorch model from a TF 2.0 checkpoint, please set from_tf=True.

UnicodeDecodeError: 'utf-8' codec can't decode byte 0xb8 in position 0: invalid start byte

OSError: It looks like the config file at './control-ini-fp16/checkpoint-500/controlnet/diffusion_pytorch_model.safetensors' is not a valid JSON file.

OSError: Error no file named config.json found in directory ./control-ini-fp16/checkpoint-500.

In the inference.py script you need to specify the path like controlnet_path="./control-ini-fp16/checkpoint-500/controlnet" (during training) or controlnet_path="./control-ini-fp16" when it's finished.

.

%USERPROFILE%\diffusers\src\diffusers\image_processor.py:88: RuntimeWarning: invalid value encountered in cast

images = (images * 255).round().astype("uint8")

You set the wrong torch_dtype for the provided control net. Try torch_dtype = torch.float16.

.

img2dataset

UnicodeEncodeError: 'charmap' codec can't encode characters in position 74-78: character maps to <undefined>

Sample 3 failed to download: 'charmap' codec can't encode characters in position 74-78: character maps to <undefined>

See https://github.com/rom1504/img2dataset/issues/347 and https://github.com/rom1504/img2dataset/issues/219 . You can use the attached img2dataset_2_makejsonl.py to create a correct train.jsonl afterwards.

.

Questions and answers

Basics

Q: What is the difference between fp16, bf16, fp32 and tf32?

A: See HuggingFace - Methods and tools for efficient training on a single GPU. Don't go crazy with high precision though, especially when you've just started out and are still working on your concept. fp32 is slow. Neural networks are very resilient to low precision (and even missing nodes). If your control net is not working as intended, it's unlikely to be due to low precision and more likely due to using the wrong concept. Throwing another 100k images at it won't solve it either. Use fp32 only for the release version.
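To get a feeling for what the lower-precision formats actually lose, you can round-trip single numbers through them with the standard library. struct's 'e' format is IEEE 754 half precision (fp16); bfloat16 has no struct format, so to_bf16 below is my own emulation that rounds the float32 bit pattern to its upper 16 bits. tf32 is not covered, and single numbers say nothing about training quality.

```python
import struct


def to_fp16(x):
    """Round-trip a float through IEEE 754 half precision (struct format 'e').

    Raises OverflowError for values too large for fp16 (roughly above 65504).
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_bf16(x):
    """Round a float to bfloat16 by keeping the upper 16 bits of its float32
    bit pattern, with round-to-nearest-even. NaN and overflow are not handled."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # round to nearest, ties to even
    bits &= 0xFFFF0000                   # drop the lower 16 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]
```

For example, to_fp16(1/3) returns 0.333251953125 and to_fp16(2049.0) returns 2048.0. to_bf16(1/3) is even coarser (0.333984375), but to_bf16(70000.0) still works (70144.0) where fp16 overflows: bf16 trades precision for range.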

.

Q: How do you convert a fp32 model to fp16 afterwards?

A: Use the script convert.py provided by https://github.com/Akegarasu/sd-model-converter

python convert.py -f control-ini-fp16\checkpoint-500\controlnet\diffusion_pytorch_model.safetensors -p fp16 -st

.

Q: Can a model trained with bf16 or tf32 be used on every GPU?

A: TODO maybe you have to convert it first to fp16 or fp32 => didn't work

TODO I don't know. train_controlnet.py says "bf16 requires PyTorch>=1.10. and an Nvidia Ampere GPU". Whether or not this only affects training or also inference I don't know.

TODO I couldn't get bf16 to work even after 3 attempts. You can see the failed inferences in the attached images.zip. My GPU supports bf16. It may conflict with one of the arguments. What's also interesting is I got 3 different results even though I'm specifying a seed for training.

.

Q: What is full/ema-only/no-ema?

A: TODO I don't know

.

Setup

Q: Why does it say Device: cpu?

A: You didn't install PyTorch with CUDA. See chapter "Setup" above.

.

Q: What is Torch Dynamo and how do I install it?

A: TorchDynamo, by PyTorch, is a Python-level JIT compiler designed to make unmodified PyTorch programs faster. But it is not available on Windows right now (Requirements: A Linux or macOS environment, see https://pytorch.org/docs/stable/dynamo/installation.html )

.

Q: What is DeepSpeed and how can I install it?

A: DeepSpeed, by Microsoft, is a deep learning optimization library that makes distributed training and inference easy, efficient, and effective. But it is not available for Windows right now. (Microsoft has a long history of neglecting the Windows platform, so it's unlikely they will ever support it in the future, see https://github.com/microsoft/DeepSpeed/issues/2427 )

.

Q: What is Triton and how can I install it?

A: Triton, by OpenAI, is a language and compiler for writing highly efficient custom Deep-Learning primitives. But it is not available for Windows right now (Supported Platforms: Linux, see https://github.com/openai/triton/issues/1640 )

.

Q: What is TransformerEngine and how can I install it?

A: TransformerEngine, by NVIDIA, is a library for accelerating Transformer models on NVIDIA GPUs, including using 8-bit floating point (FP8) precision on Hopper and Ada GPUs, to provide better performance with lower memory utilization in both training and inference. But it is only available for Linux right now (Pre-requisites: Linux x86_64)

.

Q: What can be expected from these performance optimizations?

A: I set up the whole thing on Linux to run a quick test on the control-ini-fp16 dataset (measured after 1min warmup):

Windows with --gradient_checkpointing: 315min

Linux with --gradient_checkpointing: 285min

Linux with TorchDynamo, but without --gradient_checkpointing: 280min

Apparently the biggest gain came from using Linux (which I presume is due to Triton). I tried different TorchDynamo backends: eager, aot_eager, inductor, onnxrt and ipex all had pretty much the same gains; nvfuser, aot_cudagraphs, ofi and fx2trt did not work.

.

Q: Any more ideas for performance optimizations?

A: As all the other performance optimizations are only available for Linux right now, it may be better to set up Linux for serious control net training. See HuggingFace - Methods and tools for efficient training on a single GPU for your options.

TODO use an LDM with a smaller latent space, see https://huggingface.co/blog/wuerstchen and https://github.com/dome272/Wuerstchen/issues/17 + https://github.com/huggingface/diffusers/issues/5071

.

train_controlnet.py

Q: How do I know that training actually works and isn't just computing nothing useful for a long time?

A: Even the first checkpoint should work immediately and produce normal Stable Diffusion images. If you see artifacts, strange colors or a black image, something went wrong (see chapter "Failed training" above). You can check every checkpoint with the inference script, try it in ComfyUI, or set up TensorBoard and define validation arguments.

Original ControlNet tutorial: "Because we use zero convolutions, the SD should always be able to predict meaningful images. (If it cannot, the training has already failed.)"

.

Q: What training times can be expected on average consumer hardware?

A: My RTX 3060 is able to train 38 steps/min, or about 2000 steps/hour (with --mixed_precision=fp16 --train_batch_size=1 --gradient_accumulation_steps=4). It takes double the time with fp32.

Original ControlNet tutorial: "The training is fast. After 4000 steps (batch size 4, learning rate 1e-5, about 50 minutes on PCIE 40G)"

ControlNet 1.1 repo: "8 Nvidia A100 80G with batchsize 8x32=256 for 3 days, spending 72x30=2160 USD (8 A100 80G with 30 USD/hour)."
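You can turn these numbers into a rough planning estimate. This is a minimal sketch that makes three assumptions: one optimizer step consumes train_batch_size × gradient_accumulation_steps images, throughput stays constant at the RTX 3060's 2000 steps/hour from above, and checkpointing and validation overhead can be ignored. The function names are hypothetical helpers, not part of train_controlnet.py.

```python
import math


def optimizer_steps(num_images, train_batch_size=1, gradient_accumulation_steps=4, epochs=1):
    """Optimizer steps needed to show every image `epochs` times.

    One optimizer step consumes train_batch_size * gradient_accumulation_steps images;
    an incomplete last batch or accumulation cycle is counted as a full step here.
    """
    batches_per_epoch = math.ceil(num_images / train_batch_size)
    steps_per_epoch = math.ceil(batches_per_epoch / gradient_accumulation_steps)
    return steps_per_epoch * epochs


def training_hours(steps, steps_per_hour=2000):
    """Wall-clock estimate at constant throughput, ignoring checkpointing and validation."""
    return steps / steps_per_hour


steps = optimizer_steps(50_000)  # the 50k images used in this tutorial
print(f"{steps} steps, about {training_hours(steps):.2f} hours")
```

For the 50k images used here, this gives 12500 steps and roughly 6.25 hours. That matches the checkpoint-12500 folder that appears throughout this FAQ.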

If you have more data please let me know!

.

Q: How many steps do I need to see some results?

A: See chapter "Validation"

My control-ini-fp16 and control-ini-fp32 models are able to follow the basic concept after 4500 steps and start to get good after 12500 steps.

Original ControlNet tutorial: "you will get a basically usable [canny] model at about 3k to 7k steps"

Uncanny Faces tutorial: "10k steps (batch_size=4 = 40k images) already seems to work... With just 1 epoch (so after the model "saw" 100K images), it already converged to following the poses and not overfit... At around 15K steps, it already started learning the poses. And it matured around 25K steps."
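Step counts from different setups are only comparable once you take the batch size into account. Here is a small sketch for converting between steps, images seen and epochs. It assumes "batch size" means images per optimizer step (train_batch_size × gradient_accumulation_steps in the diffusers script). The helper names are made up for illustration.

```python
def images_seen(steps, batch_size):
    """Images shown to the model after `steps` optimizer steps."""
    return steps * batch_size


def epochs_completed(steps, batch_size, dataset_size):
    """How many times the whole dataset has been shown after `steps` steps."""
    return images_seen(steps, batch_size) / dataset_size


# Uncanny Faces tutorial: batch size 4 on a 100K-image dataset.
print(images_seen(10_000, 4))
print(epochs_completed(25_000, 4, 100_000))
# This tutorial: batch size 1 x 4 accumulation steps on 50k images.
print(epochs_completed(12_500, 4, 50_000))
```

By this measure, the Uncanny Faces model "matured" at about one epoch. The 12500 steps for control-ini also correspond to a single pass over its 50k images.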

If you have more data please let me know!

.

Q: Can I pause and resume training anytime?

A: Not at any time, but the script saves a checkpoint every 500 steps by default, and each one requires 2GB of disk space. So you can resume from every 500th step by adding --resume_from_checkpoint latest to accelerate launch train_controlnet.py (see train_controlnet.py for more arguments). If you want a different rate, set e.g. --checkpointing_steps=100.
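Which folder does latest resolve to? Presumably the checkpoint-<step> folder with the highest step number in the output directory (folders are named like control-ini-fp16\checkpoint-12500). This minimal sketch finds that folder so you can check before resuming. It is an illustration, not the training script's own code, and the function name is hypothetical. It sorts numerically so that checkpoint-10000 wins over checkpoint-9500.

```python
import os
import re

CHECKPOINT_PATTERN = re.compile(r"^checkpoint-(\d+)$")


def latest_checkpoint(output_dir):
    """Return the path of the checkpoint-<step> folder with the highest step, or None."""
    best_step, best_name = -1, None
    for name in os.listdir(output_dir):
        match = CHECKPOINT_PATTERN.match(name)
        if match and os.path.isdir(os.path.join(output_dir, name)):
            # Compare step numbers as integers: plain string sorting would rank 9500 above 10000.
            step = int(match.group(1))
            if step > best_step:
                best_step, best_name = step, name
    return os.path.join(output_dir, best_name) if best_name else None
```

For example, latest_checkpoint("control-ini-fp16") should return the newest checkpoint folder of this tutorial's run.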

.

Q: Can I generate images during control net training or will I run out of memory?

A: I think one of my trainings failed because of this, but I still have to verify it.

Try it! I was able to generate images in parallel, but the performance of both processes degraded a lot (about 5x). You may run into an out-of-memory exception, but that never happened to me. If it happens, it throws you back to your last checkpoint and you can restart with --resume_from_checkpoint latest. TODO I assume the system is intelligent enough to offload into normal RAM/swap?

.

Q: What's up with TensorBoard and Wandb?

A: TensorBoard by Google runs as a local web server and gets installed with the train_controlnet requirements.

Weights & Biases (Wandb) is an online service and requires registration, but can also be run locally.

.

Q: How do I set up TensorBoard?

A: TensorBoard already comes installed with the requirements. Open another conda shell and run:

```
conda activate controlnet
cd diffusers\examples\controlnet
tensorboard --logdir control-ini-fp16 --samples_per_plugin images=100
```

This will start a web server at http://localhost:6006.

train_controlnet.py writes log events to control-ini-fp16\logs, which TensorBoard picks up and displays. To see validation images, you might want to start training with the following additional arguments:

```
--validation_image="conditioning_image_1.png" ^
--validation_prompt="red circle with blue background" ^
--validation_steps=100 ^
--num_validation_images=2
```

.

Q: What is the allow_tf32 argument?

A: TODO: I don't know yet, see HuggingFace - Methods and tools for efficient training on a single GPU

.

Q: What happened to only_mid_control=true and sd_locked=false?

A: TODO: these options seem to be available only in the original training script, and I don't know how to activate them in the diffusers script yet.

I filed a feature request with HuggingFace.

.

Q: How can I reduce disk space usage?

A: You can delete any intermediate checkpoint you no longer need (e.g. for evaluation). Make sure the last checkpoint is actually complete and was not only partially saved because you ran out of disk space! You can also safely delete optimizer.bin (700MB) (and random_states_0, scaler.pt, scheduler.bin) if you are not going to restart training from this checkpoint (e.g. because something went wrong in later checkpoints). You can also increase the interval to --checkpointing_steps=2500 so fewer checkpoints are saved. Note that in 2023, 1TB SSDs start at 35€. Just get a bigger drive!
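If you prefer not to pick old checkpoints by hand, here is a minimal sketch of a dry run. It lists the checkpoint-<step> folders that could go while keeping the newest few. It only returns candidates and deletes nothing. It assumes the folder naming seen in control-ini-fp16\checkpoint-12500, and `keep_last` is a made-up parameter. Verify that the newest checkpoint is complete before removing anything.

```python
import os
import re

CHECKPOINT_PATTERN = re.compile(r"^checkpoint-(\d+)$")


def prunable_checkpoints(output_dir, keep_last=2):
    """Return checkpoint folders older than the newest `keep_last` ones (dry run, nothing is deleted)."""
    checkpoints = []
    for name in os.listdir(output_dir):
        match = CHECKPOINT_PATTERN.match(name)
        if match and os.path.isdir(os.path.join(output_dir, name)):
            checkpoints.append((int(match.group(1)), name))
    checkpoints.sort()  # ascending by numeric step
    # checkpoints[:-0] would be empty, so keep_last=0 needs its own branch.
    old = checkpoints[:-keep_last] if keep_last > 0 else checkpoints
    return [os.path.join(output_dir, name) for _, name in old]
```

Once the list looks right, you could remove each folder with shutil.rmtree.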

.

Q: What's the point of training a control net on colorful circles?

A: As the original ControlNet tutorial mentions: "Of course, training SD to fill circles is meaningless, but this is a successful beginning of your story." It's just a precisely defined dataset that was easy to synthesize, so visible results should emerge faster.

.

Q: Can I use the Stable Diffusion .safetensors/.ckpt I already have instead of downloading it again?

A: TODO Probably, but I haven't looked into it yet. Just download the 4GB for now.

.

Q: Why does the image from inference.py come out black?

A: The NSFW filter was triggered. Pass safety_checker=None when loading the pipeline to disable it.

.

Converting models

Q: What is the difference between training with fp32 and converting to fp16 afterwards, as opposed to training with fp16 in the first place?

A: TODO I don't know for sure but I guess the error accumulates: round(1.2345, 2) * round(2.3456, 2) * round(3.4567, 2) != round(1.2345 * 2.3456 * 3.4567, 2)
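Here is a toy illustration of that guess. It is not a model of what mixed-precision training actually does internally, since that generally keeps some values, such as the trainable weights, in fp32. Python's struct module can round a float to IEEE half precision with the "e" format. That lets us compare two ways of summing many small values: rounding to fp16 after every addition, versus summing in full precision and rounding only once at the end.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


increment = 0.1
steps = 10_000

# Round after every addition: once the sum is large enough, small increments stop registering.
fp16_sum = 0.0
for _ in range(steps):
    fp16_sum = to_fp16(fp16_sum + to_fp16(increment))

# Accumulate in double precision and round to fp16 only once at the end.
exact_sum = 0.0
for _ in range(steps):
    exact_sum += increment
rounded_once = to_fp16(exact_sum)

print(fp16_sum, rounded_once)
```

The fp16 running sum gets stuck at 256. From there on, the spacing between neighbouring fp16 values (0.25) is more than twice the increment, so every addition rounds back down. Rounding once at the end gives 1000.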

.

Q: How do you "compress" the model to make it smaller?

A: Converting it to fp16 makes it 2x smaller, but you will lose precision. You can also use the model converter at https://github.com/Akegarasu/sd-model-converter to produce ema-only, ema-pruned or no-ema models.
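The 2x comes from the storage format: fp32 stores every weight in 4 bytes and fp16 in 2 bytes. The small sketch below uses made-up weights (not taken from a real model) and packs them both ways with Python's struct module. It then measures the size and the rounding error that fp16 introduces.

```python
import struct

# Hypothetical example weights; real models have hundreds of millions of them.
weights = [0.0123456789, -1.5, 3.14159265, 1e-3, 2.0 / 3.0]

fp32_bytes = struct.pack(f"<{len(weights)}f", *weights)  # 4 bytes per value
fp16_bytes = struct.pack(f"<{len(weights)}e", *weights)  # 2 bytes per value

fp32_values = struct.unpack(f"<{len(weights)}f", fp32_bytes)
fp16_values = struct.unpack(f"<{len(weights)}e", fp16_bytes)

# Largest difference caused by storing the fp32 weights as fp16 instead.
max_error = max(abs(a - b) for a, b in zip(fp32_values, fp16_values))

print(len(fp32_bytes), len(fp16_bytes), max_error)
```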

TODO https://huggingface.co/stabilityai/control-lora: rank256 is 6x smaller and rank128 is 12x smaller.

TODO https://github.com/HighCWu/ControlLoRA for a new approach (as of Sep 2023 this only works for SDXL though)

TODO

.

Q: Is the final diffusion_pytorch_model.safetensors file in control-ini-fp16\ the same as the one in control-ini-fp16\checkpoint-12500\controlnet\ (or is some final processing applied when the training script finishes)?

A: If the last training step coincides with a checkpoint (i.e. the total number of steps is a multiple of the checkpointing rate), then sha256sum diffusion_pytorch_model.safetensors gives me the same hash for both files, so it's the same file. Hence I conclude that no final processing step takes place. The same goes for config.json.
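If sha256sum is not at hand (e.g. in a plain Windows shell), you can do the same check in Python with hashlib. This is a minimal sketch that reads the files in chunks, because model files are large. The function names are hypothetical.

```python
import hashlib


def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading 1 MiB at a time."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_file_contents(path_a, path_b):
    """True if both files have the same SHA-256 hash, i.e. (practically) identical bytes."""
    return sha256_of_file(path_a) == sha256_of_file(path_b)
```

For the question above, you would call same_file_contents(r"control-ini-fp16\diffusion_pytorch_model.safetensors", r"control-ini-fp16\checkpoint-12500\controlnet\diffusion_pytorch_model.safetensors").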

.

Q: How do I convert .safetensors to .pth to make it work with Automatic1111?

A: There is no official solution in diffusers, so you have to use the script provided in https://github.com/huggingface/diffusers/issues/3015#issuecomment-1500917937

```
python convert_diffusers_controlnet_to_original_controlnet.py --model_path control-ini-fp16\checkpoint-12500\controlnet --checkpoint_path control-ini-fp16.pth --half
```

.

Q: Are there any consequences of converting from .safetensors to .pth (like introducing floating point imprecisions)?

A: No precision is lost. You can try it yourself with:

```
python convert_diffusers_controlnet_to_original_controlnet.py --model_path control-ini-fp32\checkpoint-12500\controlnet --checkpoint_path control-ini-fp32.pth
python ..\..\scripts\convert_original_controlnet_to_diffusers.py --checkpoint_path control-ini-fp32.pth --original_config_file cldm_v15.yaml --dump_path control-ini-fp32-pth --to_safetensors
sha256sum control-ini-fp32\checkpoint-12500\controlnet\diffusion_pytorch_model.safetensors control-ini-fp32.pth control-ini-fp32-pth\diffusion_pytorch_model.safetensors
```

```
fa3af3 *control-ini-fp32\checkpoint-12500\controlnet\diffusion_pytorch_model.safetensors
ef6ce3 *control-ini-fp32.pth
fa3af3 *control-ini-fp32-pth\diffusion_pytorch_model.safetensors
```

If the original model can be fully reproduced from an intermediate model, nothing was lost. QED

Note: of course this will not be true if you convert from or to different precisions (e.g. fp32 to fp16).

.

Q: Does a control net trained for SD 1.5 also work for fine-tuned models (RealisticVision etc.)?

A: I only tested this empirically and it works, but I don't know what the underlying theoretical implications are or why it works. See https://github.com/lllyasviel/ControlNet/discussions/12

ControlNet paper v2: "Transferring to community models. Since ControlNets do not change the network topology of pretrained SD models, it can be directly applied to various models in the stable diffusion community, such as Comic Diffusion [60] and Protogen 3.4 [16], in Figure 12."

.

Q: Does a control net trained for SD 1.5 also work for old SD 1.x models and vice versa?

A: TODO I don't know, but as SD 1.5 (like SD 1.4) was fine-tuned from SD 1.2 and uses the same architecture, I guess so, yes.

.

Q: Does a control net trained for SD 1.5 also work for SD 2.1 or SDXL?

A: No. TODO I assume this is because they use a different architecture.
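You can see one concrete architectural difference in the models' config.json files. The size of the text embeddings the cross-attention layers expect (cross_attention_dim) is 768 for SD 1.x, 1024 for SD 2.x and 2048 for SDXL, and SDXL also has one down block fewer. The minimal sketch below compares such fields of a control net config against a base UNet config. It is a quick heuristic, not proof of compatibility. The field list is my own choice, and the values are written out by hand rather than loaded from real files.

```python
import json

# Config fields whose values should match for a control net to plug into a base UNet.
# This list is a heuristic choice for illustration, not an official compatibility rule.
FIELDS_TO_COMPARE = ("cross_attention_dim", "block_out_channels", "in_channels")


def config_mismatches(controlnet_config, unet_config, fields=FIELDS_TO_COMPARE):
    """Return {field: (controlnet_value, unet_value)} for every compared field that differs."""
    return {
        field: (controlnet_config.get(field), unet_config.get(field))
        for field in fields
        if controlnet_config.get(field) != unet_config.get(field)
    }


def load_config(path):
    """Read a diffusers-style config.json file into a dict."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


# Hand-written excerpts of the relevant config.json values.
sd15 = {"cross_attention_dim": 768, "block_out_channels": [320, 640, 1280, 1280], "in_channels": 4}
sd21 = {"cross_attention_dim": 1024, "block_out_channels": [320, 640, 1280, 1280], "in_channels": 4}
sdxl = {"cross_attention_dim": 2048, "block_out_channels": [320, 640, 1280], "in_channels": 4}

print(config_mismatches(sd15, sd21))
print(config_mismatches(sd15, sdxl))
```

A fine-tuned SD 1.5 model keeps the SD 1.5 architecture and therefore these values. That is consistent with the earlier answer that SD 1.5 control nets work with community models.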

.

Q: Can a control net trained on SD 1.5 be converted to make it work with SD 2.1 or SDXL?

A: TODO I don't know, but probably not, and you have to retrain it from scratch.

.

Control nets

Q: What makes the official control nets "better"?

A: There is nothing that makes them intrinsically better than a custom control net. Note that the semi-official control net models for SD 2 were trained by Thibaud, who is not affiliated with the official ControlNet team. However, here are some points that can influence quality:

Better preparation of image dataset (selection, deduplication, cropping etc.)

Better analysis of results (automatic analysis, deeper analysis)

More compute time (the canny control net was trained on 3M images)

Higher batch sizes

A deep understanding of theoretical machine learning concepts, which gives an intuition for how certain approaches will turn out

In the early days of control nets, many optimizations might not have been available, so training required strong hardware. Today, training the original canny model with 3M images on my hardware would take 7 days, which is long but reasonable. But even the original control nets were trained on bad data ("a small group of greyscale human images are duplicated thousands of times"). All in all, even for AI experts, building and training machine learning models is just tinkering, and math of da highest order.
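About higher batch sizes: a consumer GPU can't fit something like the 8x32=256 batch of ControlNet 1.1. The --train_batch_size=1 --gradient_accumulation_steps=4 setting mentioned earlier is a way around that. The toy sketch below shows why it approximates a real batch of 4: for a mean loss, adding up the gradients of 4 micro-batches of size 1, each scaled by 1/4, gives the same gradient as one batch of 4. The one-parameter linear model and the data are made up. Real networks add caveats, e.g. layers that rely on batch statistics.

```python
# Toy model: prediction = w * x, loss = mean squared error over a batch.
data = [(1.0, 2.0), (2.0, 4.5), (3.0, 5.5), (4.0, 8.5)]  # hypothetical (x, y) pairs
w = 1.5


def batch_gradient(w, batch):
    """d/dw of mean((w*x - y)^2) over the batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


# One real batch of 4.
full_batch_grad = batch_gradient(w, data)

# Gradient accumulation: micro-batches of size 1, each gradient scaled by 1/accumulation_steps.
micro_batch_size = 1
accumulation_steps = len(data) // micro_batch_size
micro_batches = [data[i:i + micro_batch_size] for i in range(0, len(data), micro_batch_size)]
accumulated_grad = 0.0
for micro_batch in micro_batches:
    accumulated_grad += batch_gradient(w, micro_batch) / accumulation_steps

print(full_batch_grad, accumulated_grad)
```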

.

Q: Which image dataset were the official ControlNets trained on?

A: https://github.com/lllyasviel/ControlNet/issues/93#issuecomment-1436077011: "Given the current complicated situation outside research community, we refrain from disclosing more details about data. Nevertheless, researchers may take a look at that dataset project everyone know."

https://voxel51.com/blog/conquering-controlnet "... the information they do reveal lines up closely with Google’s Conceptual Captions Dataset: a dataset "consisting of ~3.3M images annotated with captions"."

They also line up closely with the laion2b-en-aesthetics>=6.25 (3M images), but that's just my 6.25 cents.

.

Q: What are the consequences of training on highly aesthetic, high-resolution images only (as opposed to all the other images Stable Diffusion was originally trained on)?

A: TODO I don't know. The control net seems to grasp what you want, and it doesn't need many steps anyway. The pose model was only trained on poses, and MLSD was only trained on architecture, so I guess it somewhat depends on your concept. They also purposely dropped the captions for part of the training so that the control net doesn't take everything too literally.

.

Q: What happens if you train the control net on the same images again for another epoch?

A: Uncanny Faces tutorial: "We trained the model for 3 epochs (this means that the batch of 100K images were shown to the model 3 times) and a batch size of 4 (each step shows 4 images to the model). This turned out to be excessive and overfit (so it forgot concepts that diverge a bit of a real face, so for example "shrek" or "a cat" in the prompt would not make a shrek or a cat but rather a person, and also started to ignore styles)."