I will start this little article with a brief disclaimer: I am not an expert in Stable Diffusion nor do I have a complete understanding about the topic. All I know is bits of pieces of information from random sources and my own experience. Please let me know in the comments if I have something wrong.

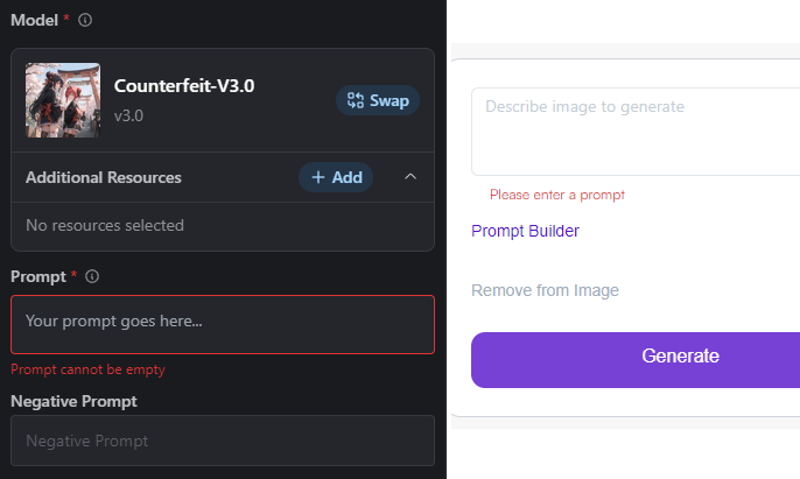

If you have ever used an online text-to-image generator then you should be familiar with the error message, “Prompt cannot be empty.”, “Please enter a prompt.”, or (in the case of ChatGPT) it will just make a prompt for you.

I don’t know if they have a technical reason why they do this, but if you run stable diffusion on your own hardware then you have the access to do whatever you want. I, for example, use Easy Diffusion since it was a one click install and I didn’t need to learn anything complicated from the backend to the UI. While it doesn’t give me all the control of something like A111, I can still mess around with a great deal of variables including generating images with nothing in the prompts.

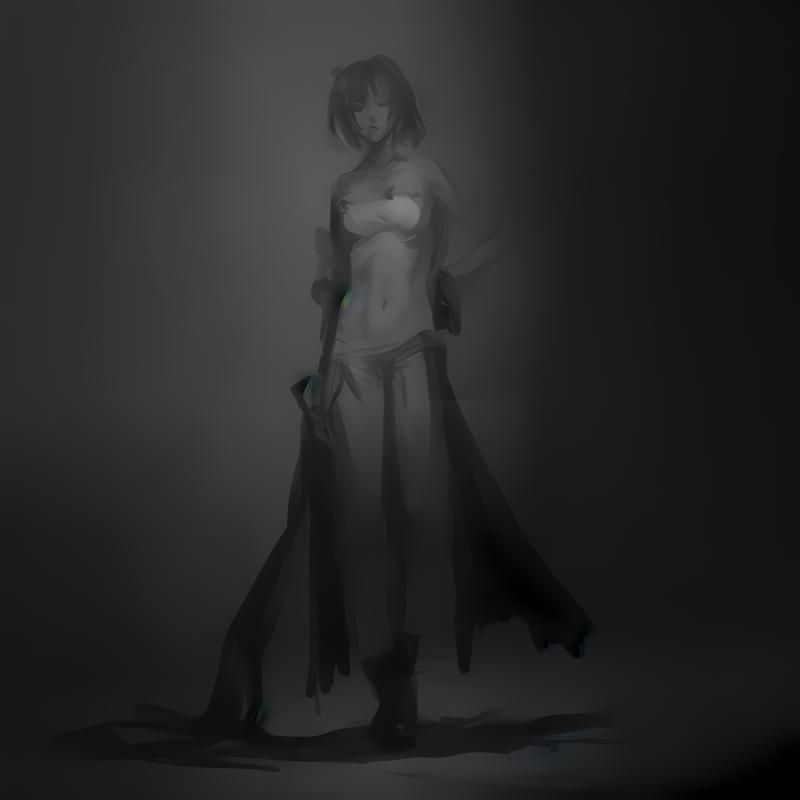

As you can see, they are mostly random noise with hints of recognizable things scattered about. I don’t know how it chooses to make them the way the are, but I have noticed a few patterns about them. They seem to show a jumbled mess of the images that they were trained on and with certain ones the last type of images that were given to it.

As stated by the author of C++AravaggioV0.9 - an Answer to both Dall-E and Kandinsky 2.1, "The procedure in making this model was first of all some merges, then i finetuned a 1.5 basic model with some of my black and white drawings/sketches (im not that much of an artist but it worked very good because it took the style in a nice way, making each humanoid output quite different from both anime and semirealistic models.” and the images given to us reflect that additional training. This could be helpful in getting a model’s general preferences, style, or what type of images it was last trained on.

(OddReality) (This forest path looks a bit creepy and all "no prompts" of this model gives about this same thing...)

While all of this is be quite interesting from a programming/technical POV, I found myself starting to use them for inspiration on images.

If the model already is going towards this mess of colors that slightly resembles a messy room, why not lean into it. Do you see a female with a long blonde hair wearing a blue tanktop, white skirt, and black strappy heels while rocking out on a guitar on stage?

Make it happen!

Using image-to-image helps a lot and it can even revel something you didn’t see before. Most of all, have fun experimenting with it with loras, embeddings, control nets, or anything else you can think of.

Keep being creative out there.

-NoahShinji