Update: This config works locally with a GeForce RTX 3090/4090, so there is no need to rent a GPU. Newer versions of SimpleTuner do not work with these config files. If you want to use my attached config files, make sure you are on this commit: a2b170ef4e41bab6269110189f86f297a9484d96

Curating a Dataset

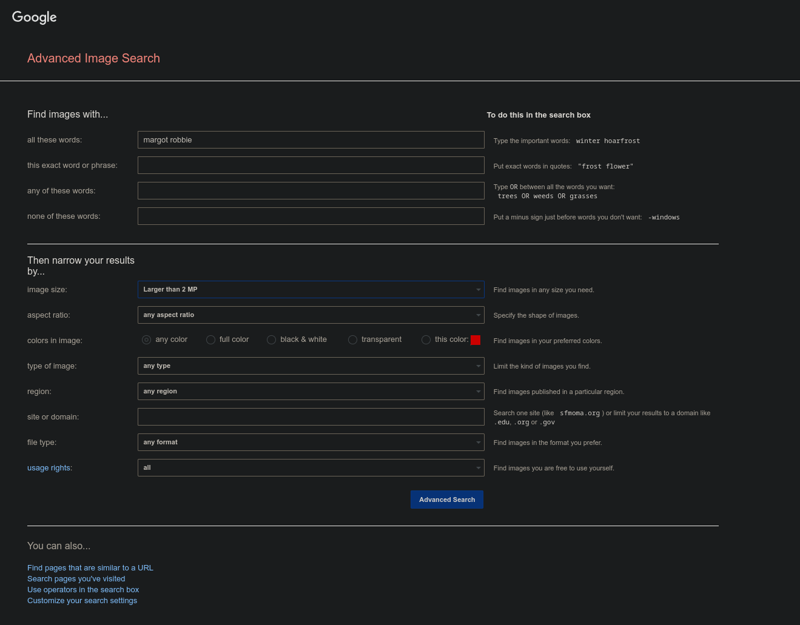

Start by gathering 25 high-quality images of your subject. Use Google Advanced Image Search to find these:

Enter the name of your subject in the "all these words" box.

Set "image size" to "larger than 2 MP."

Click "Advanced Search" to find suitable images.

Image Selection and Cropping

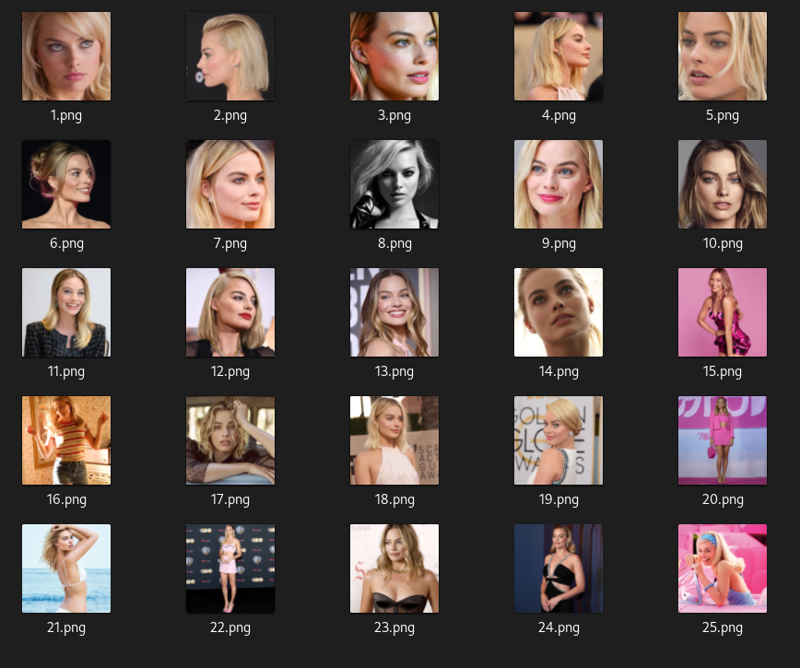

Split the 25 images roughly as follows:

Face: 10-15 images from various angles.

Body: 5-10 images focusing on the body.

Full Body: A few full-body shots.

Crop all images to 1024x1024. You can use an auto-cropper like BIRME, but manual cropping is often more precise. Your final dataset should look organized and consistent.

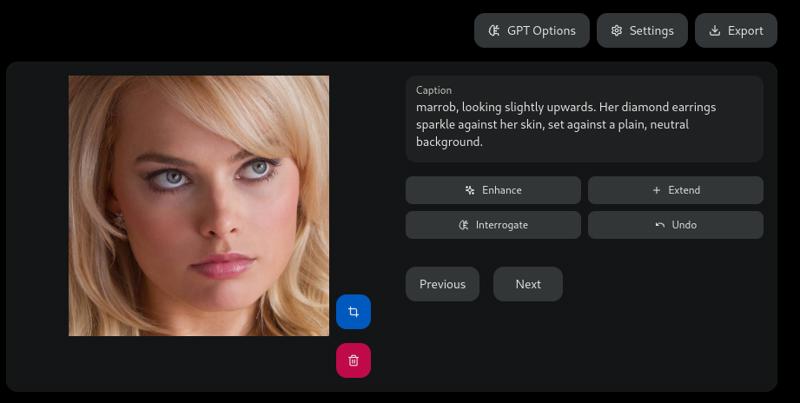

Captioning the Images (Updated)

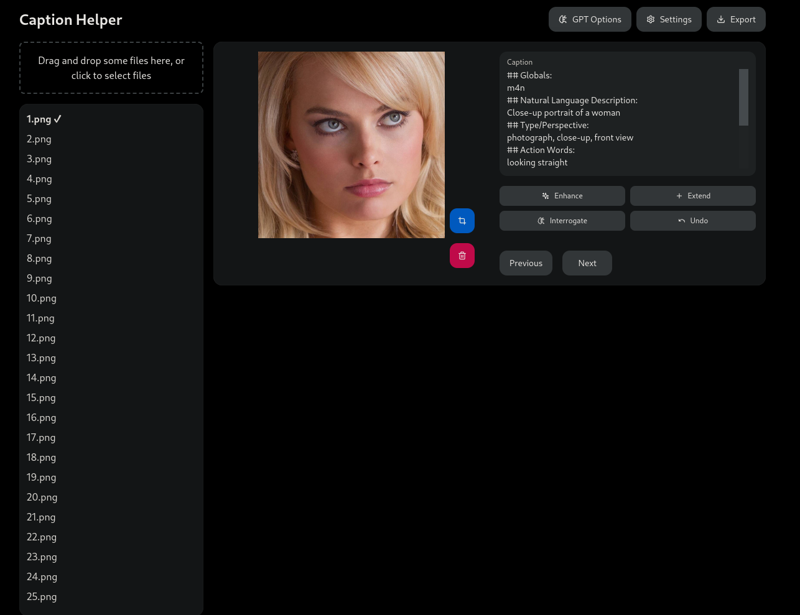

Use Caption Helper to caption your images. You'll need an OpenAI token and a Groq token for image captioning and enhancement. If you're unsure how to obtain the tokens, follow the links provided below each input box for help.

Upload your dataset by dragging it into Caption Helper.

Your images will appear in a list on the left side.

Select the first image and click "Interrogate" to generate a caption, which will appear in the caption box on the right.

Click "Enhance" to clean up the caption.

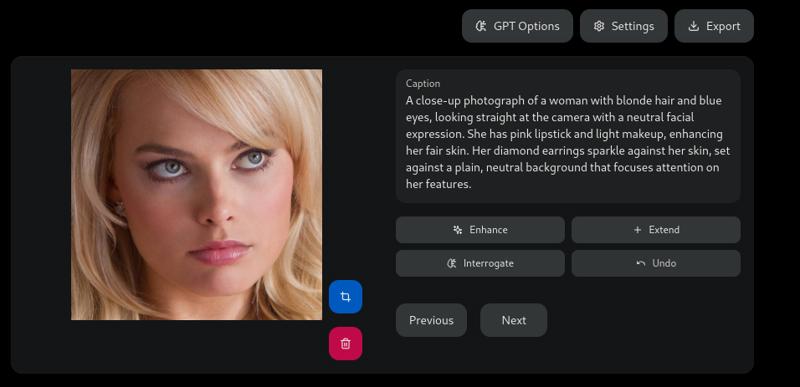

Remove any descriptions of physical features you want to associate with the subject. Then, add the model trigger to the caption.

Using A Trigger

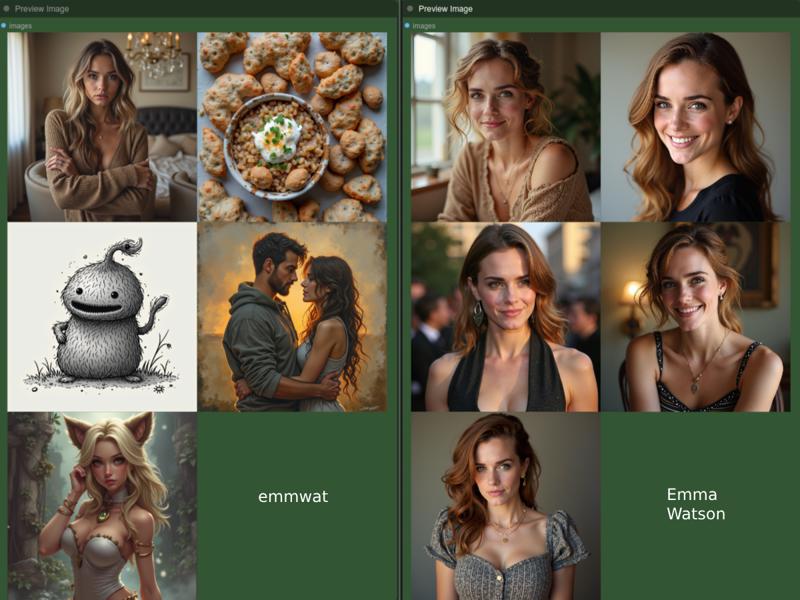

If you use a model trigger, place it first in the caption, followed by a comma. For famous people, avoid using their full name; instead, use a shortened version, like the first three letters of their first and last names. For example, with "Emma Watson" as the trigger, training starts from whatever the base model already associates with that name, which might be only a similar-looking person, and that affects the final likeness. A trigger like "emmwat" has no such association, so training starts from true randomness and will create a better likeness.

Keep your captions concise: SimpleTuner has a 77-token limit per caption, and exceeding it can cause training issues. Generally, avoid captioning makeup unless it is bold (e.g., bright red lipstick or colorful eyeshadow) and you don't want it to be associated with the subject. Caption the remaining 24 images the same way. Once done, click the "Export" button at the top right to save your captions. Place your captions in the same folder as your images.

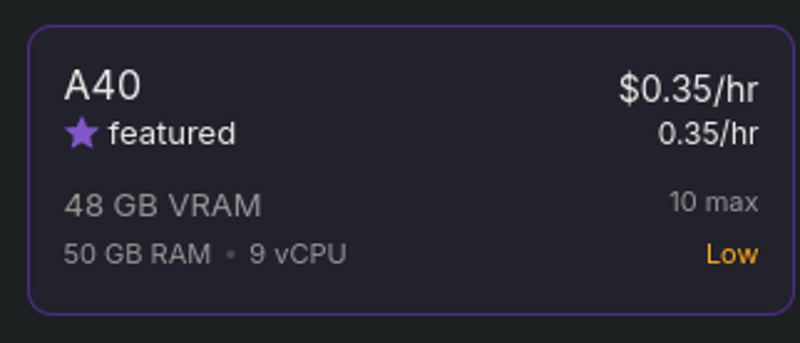
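Before uploading, it can help to check that every image has a caption next to it and that each caption starts with the trigger followed by a comma. The sketch below is one way to do that. It assumes captions are exported as `.txt` files that share the image's base name and that images are `.jpg`, `.jpeg`, or `.png`; the function name `check_captions`, the folder, and the trigger in the example call are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: verify each image has a caption that starts with "<trigger>,".
# Assumption: captions are .txt files named after the image (photo1.png -> photo1.txt).

check_captions() {
  local dir="$1" trigger="$2" problems=0 img caption
  for img in "$dir"/*.jpg "$dir"/*.jpeg "$dir"/*.png; do
    [ -e "$img" ] || continue            # skip patterns that matched nothing
    caption="${img%.*}.txt"
    if [ ! -f "$caption" ]; then
      echo "missing caption: $caption"
      problems=$((problems + 1))
    elif ! head -n 1 "$caption" | grep -q "^${trigger},"; then
      echo "caption does not start with '${trigger},': $caption"
      problems=$((problems + 1))
    fi
  done
  echo "problems found: $problems"
  [ "$problems" -eq 0 ]                  # success only when nothing was flagged
}

# Example call (hypothetical folder and trigger):
# check_captions ./my_dataset emmwat
```

This does not measure the 77-token limit, since token counts depend on the tokenizer; it only catches missing files and misplaced triggers.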

Deploying a Pod with RunPod

Please download the attached config.zip. It includes two config files (config.env and multidatabackend.json). Extract the zip file and make sure to have these files available for the later steps.

Visit the RunPod site.

Create a new account or log in to an existing one.

Ensure your account has $5-$10 of credit available.

Deploy a new pod, selecting the A40 option.

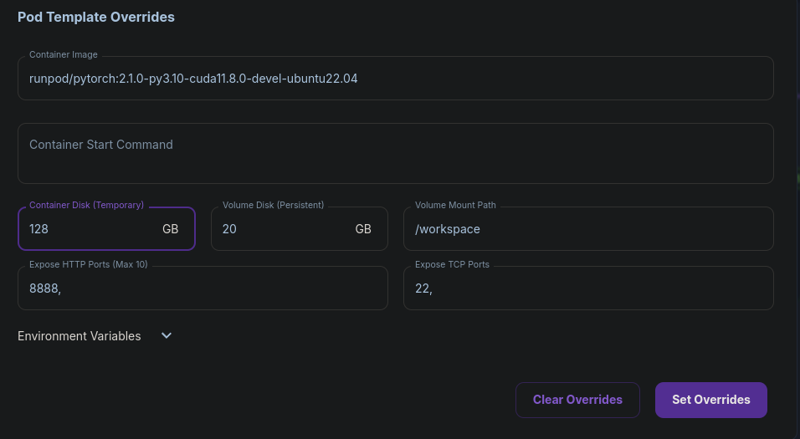

Editing the Template

Edit the template, set the Container Disk (Temporary) size to 128 GB, and click "Set Overrides."

Deploying the Pod

Click the large "Deploy on Demand" button to create the pod. After the pod is created, click "Connect."

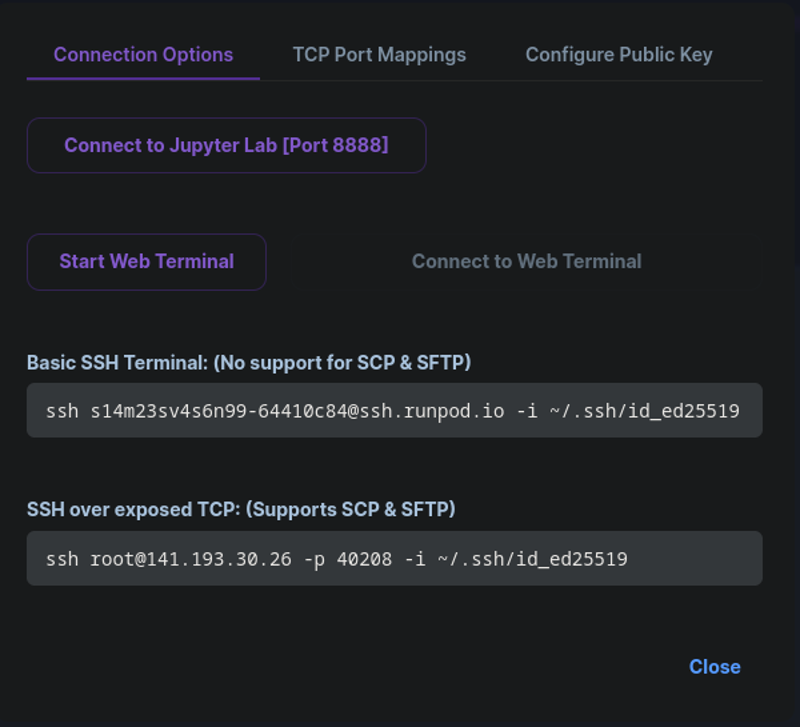

Connecting to the Pod

Click "Connect to Jupyter Lab [Port 8888]" to open your pod in a new tab.

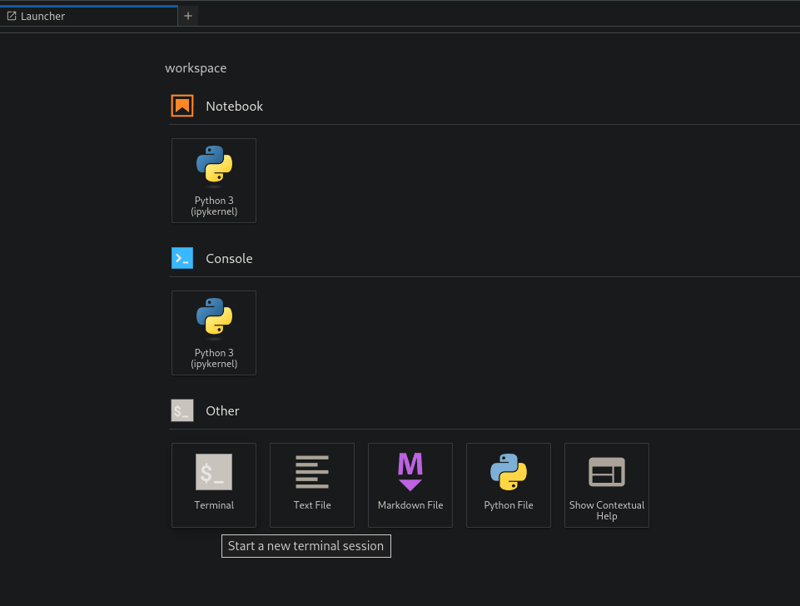

Opening Terminal

Click on "Terminal" to open the terminal in a new tab.

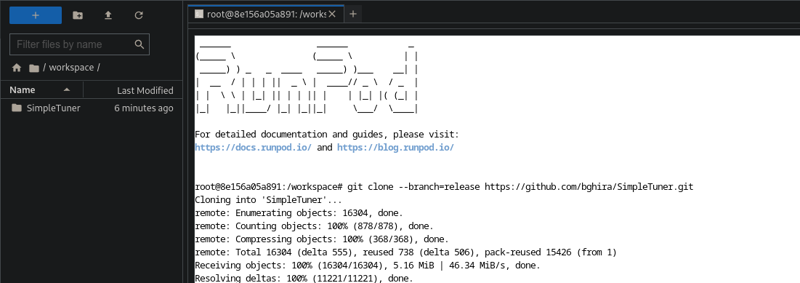

Installing SimpleTuner

After connecting to the terminal, run these commands to install SimpleTuner:

    git clone --branch=release https://github.com/bghira/SimpleTuner.git
    cd SimpleTuner
    git checkout a2b170ef4e41bab6269110189f86f297a9484d96
    python -m venv .venv
    source .venv/bin/activate
    pip install -U poetry pip
    poetry install --no-root
    pip install optimum-quanto
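Since the attached config files only work with one specific commit, it may be worth confirming that the checkout landed on it. The following is a minimal sketch meant to be run from inside the SimpleTuner folder; the helper name `is_pinned_commit` is made up for this example.

```shell
#!/usr/bin/env bash
# Sketch: confirm the checkout is on the commit this guide's config files expect.
PINNED_COMMIT="a2b170ef4e41bab6269110189f86f297a9484d96"

# Succeeds only when the given hash matches the pinned commit.
is_pinned_commit() {
  [ "$1" = "$PINNED_COMMIT" ]
}

# Prints nothing when run outside a git checkout.
if current=$(git rev-parse HEAD 2>/dev/null); then
  if is_pinned_commit "$current"; then
    echo "OK: on pinned commit"
  else
    echo "Mismatch: on $current, expected $PINNED_COMMIT"
  fi
fi
```

If it reports a mismatch, rerunning the `git checkout` command above should put the folder back on the expected commit.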

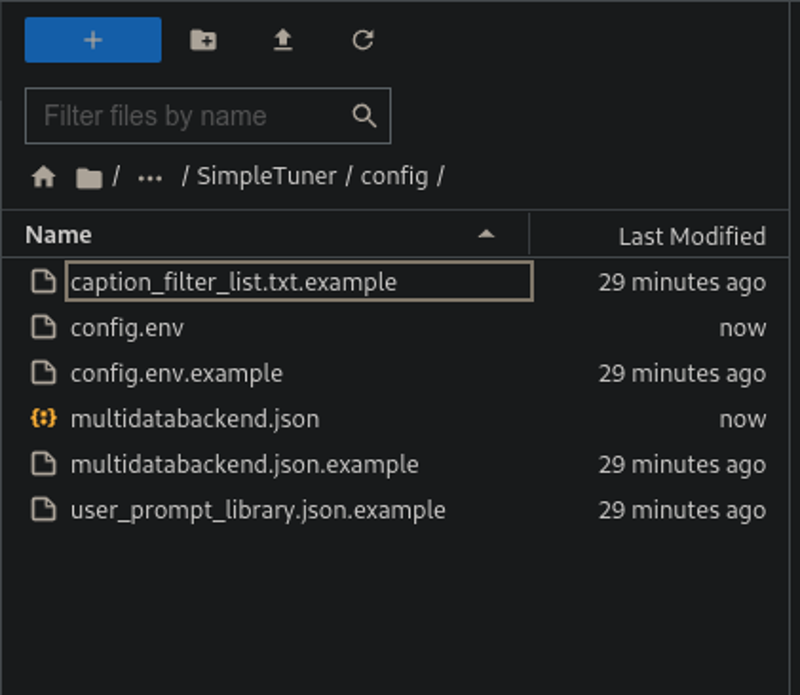

Configuring SimpleTuner

Open `config.env` in a text editor, search for "m0del" and replace the 2 instances with your trigger word. Save and close the file.

Open `multidatabackend.json` in a text editor, search for "m0del" and replace the 4 instances with your trigger word. Save and close the file.

In the pod, expand the `SimpleTuner` folder on the left, navigate to the `config` folder, and drag the modified `config.env` and `multidatabackend.json` files into it.
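If you prefer the terminal to a text editor, the same search-and-replace can be sketched with `sed`. This assumes the trigger word contains only letters and digits (characters such as `/` or `&` would need escaping) and that the script is run in the folder holding the two config files. The function name `replace_placeholder` and the trigger `emmwat` are placeholders for illustration.

```shell
#!/usr/bin/env bash
# Sketch: replace every "m0del" placeholder in a file with your trigger word.

replace_placeholder() {
  local file="$1" trigger="$2" count
  # Count occurrences first so the total can be compared with the guide (2 and 4).
  count=$(grep -o 'm0del' "$file" | wc -l | tr -d ' ')
  # Write to a temporary file first so the original survives a failed run.
  sed "s/m0del/${trigger}/g" "$file" > "${file}.tmp" && mv "${file}.tmp" "$file"
  echo "$file: replaced $count occurrence(s) of m0del"
}

TRIGGER="emmwat"   # hypothetical trigger word; use your own
for f in config.env multidatabackend.json; do
  if [ -f "$f" ]; then
    replace_placeholder "$f" "$TRIGGER"
  fi
done
```

The reported counts should match the 2 and 4 instances mentioned above; a different number suggests the file was already edited or is not the attached version.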

Copying the Dataset

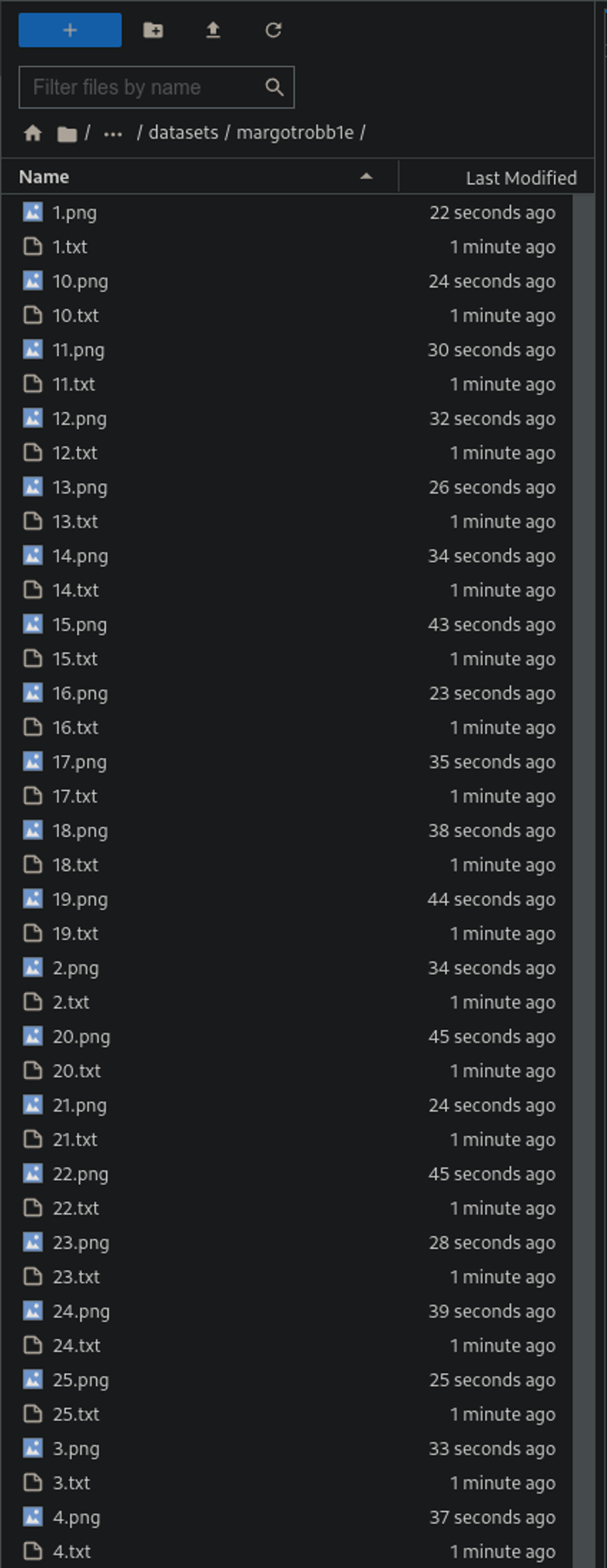

Navigate back to the `SimpleTuner` folder.

Create a new folder named `datasets`.

Inside the `datasets` folder, create a subfolder named after the trigger word you typed in the configs.

Drag and drop your images and text files into this subfolder. To avoid data corruption, you may want to zip your dataset, transfer the zip file, and extract it in the subfolder.
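The folder creation and the optional zip extraction can also be done from the terminal. The sketch below assumes it is run from inside the SimpleTuner folder, that `unzip` is installed on the pod, and that the archive was uploaded there as `dataset.zip`; both the archive name and the trigger `emmwat` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: create datasets/<trigger> and, if a zip was uploaded, extract it there.
# Run from inside the SimpleTuner folder.
TRIGGER="emmwat"          # hypothetical trigger word; must match the configs
ZIP_FILE="dataset.zip"    # hypothetical name of the uploaded archive

mkdir -p "datasets/${TRIGGER}"

if [ -f "$ZIP_FILE" ]; then
  # -j drops folder structure stored in the zip so files land directly in the
  # subfolder; -o overwrites files that already exist there.
  unzip -j -o "$ZIP_FILE" -d "datasets/${TRIGGER}"
fi

# Count what ended up in the subfolder (images plus caption files).
echo "files in datasets/${TRIGGER}: $(ls "datasets/${TRIGGER}" | wc -l | tr -d ' ')"
```

With 25 images and 25 captions, the count should come out at 50.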

Creating Cache Folders

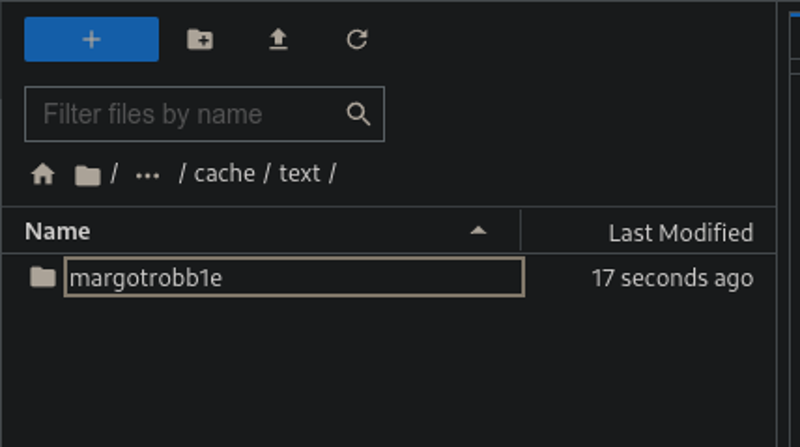

Navigate to the `SimpleTuner` folder.

Create a new folder named `cache`.

Inside the `cache` folder, create two subfolders: `vae` and `text`.

Within the `vae` folder, create a new folder named after the trigger word you typed in the configs.

Within the `text` folder, create a new folder named after the trigger word you typed in the configs.
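The same cache layout can be created in one terminal command instead of clicking through the file browser. This is a small sketch to run from inside the SimpleTuner folder; the trigger `emmwat` is a stand-in for your own.

```shell
#!/usr/bin/env bash
# Sketch: create cache/vae/<trigger> and cache/text/<trigger> in one step.
# Run from inside the SimpleTuner folder.
TRIGGER="emmwat"   # hypothetical trigger word; must match the configs

# -p creates the parent folders (cache, vae, text) as needed.
mkdir -p "cache/vae/${TRIGGER}" "cache/text/${TRIGGER}"

# List the folders that now exist under cache.
find cache -type d | sort
```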

Adding HuggingFace Token

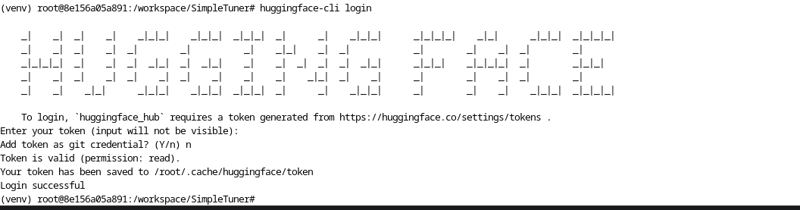

In your terminal, type `huggingface-cli login`.

Paste your Hugging Face token when prompted. (Make sure to accept the EULA to download the Flux models.)

Press `n` when asked if you want to add the token as a Git credential.

If it errors, type `pip install -U "huggingface_hub[cli]"` and try the above command again.

Training the Model

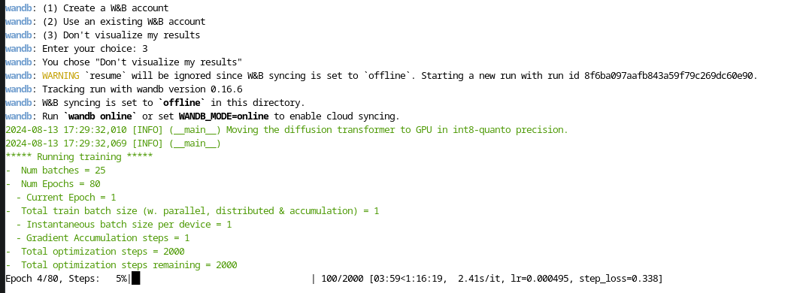

Type `bash train.sh` to start training.

The process will download the required models.

If prompted about wandb monitoring, select option 3 to disable it.

The model will train for 2000 steps. The 1600-step checkpoint typically gives the best results.

Downloading the LoRA

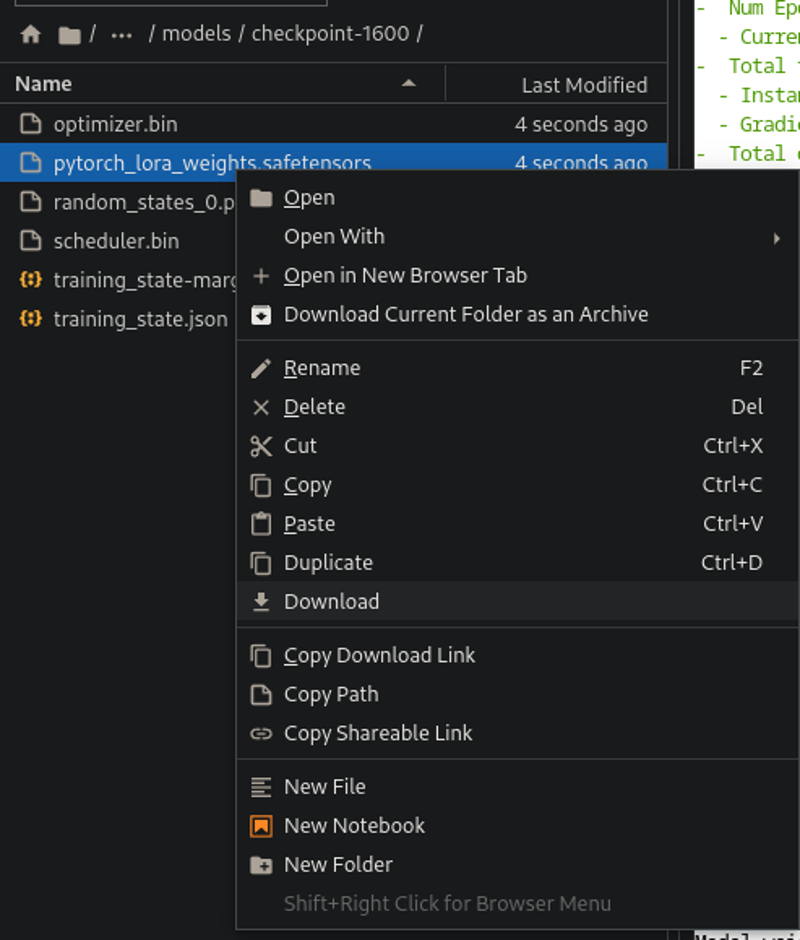

After training completes, navigate to the `SimpleTuner` folder.

Go to the `outputs` folder, then the `models` folder. This is where all checkpoints are stored.

To download, click on the desired checkpoint folder and right-click on `pytorch_lora_weights.safetensors`, then select "Download."
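To see which checkpoints actually produced a weights file before downloading, a quick listing from the terminal can help. The sketch below assumes it is run from inside the SimpleTuner folder and uses the `outputs/models` path named above; `list_lora_weights` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: list every LoRA weights file under the checkpoint folder, sorted by path.

list_lora_weights() {
  local models_dir="${1:-outputs/models}"   # folder path as named in this guide
  if [ -d "$models_dir" ]; then
    find "$models_dir" -type f -name 'pytorch_lora_weights.safetensors' | sort
  fi
}

list_lora_weights
```

Each line of output is one checkpoint's weights file; an empty result means the folder does not exist yet or no checkpoint has been saved.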

Final Thoughts

After training completes, exit and shut down the pod, then delete it to avoid extra charges. I hope this guide helps! Feel free to reach out if you have any questions or feedback. Thank you!