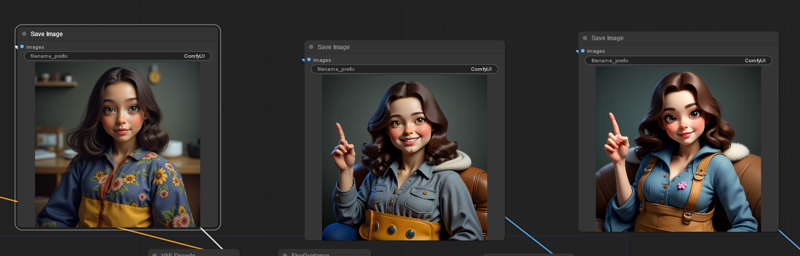

What is... a subject?

This is how FLUX + Consistency V3 0.8 treats the request "a subject", which means it recognizes at least this seed as a human. I didn't teach Consistency what a subject is; that's all FLUX. Why did the loopback alter the subject in so many ways, you ask? Well, simple. It started over from a certain point of denoise to include more specific fidelity and details. Why, though, is the real question here. The answer, I think, rests in FLUX and T5's innate capability to identify and alter core traits without high grade destruction in fidelity. In this case, it seems to have introduced the concept of a subject along with THE subject. Let's do a few more seeds and see what happens, shall we?
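For anyone wondering what "started over from a certain point of denoise" looks like in numbers, here is a minimal sketch. It assumes the loopback behaves like a typical img2img pass, where the denoise strength decides how many of the scheduled steps get re-run (the truncation mirrors how common img2img implementations cut the schedule). The function name, the step count, and the denoise values are all hypothetical; the post doesn't state the actual settings.

```python
def loopback_schedule(num_steps: int, denoise: float, loopbacks: int):
    """Return one (start_step, steps_run) pair per pass.

    Pass 0 is the full text-to-image generation. Every loopback pass
    re-noises the previous result and re-runs only the tail of the
    schedule, as a typical img2img pass would (an assumption, not the
    author's confirmed workflow).
    """
    if not 0.0 <= denoise <= 1.0:
        raise ValueError("denoise must be between 0.0 and 1.0")
    if num_steps <= 0 or loopbacks < 0:
        raise ValueError("num_steps must be positive, loopbacks non-negative")

    schedule = [(0, num_steps)]  # first pass runs every step
    steps_run = min(int(num_steps * denoise), num_steps)
    start_step = num_steps - steps_run
    for _ in range(loopbacks):
        schedule.append((start_step, steps_run))
    return schedule


if __name__ == "__main__":
    # Hypothetical settings: 20 steps, 0.5 denoise, 2 loopbacks.
    for i, (start, ran) in enumerate(loopback_schedule(20, 0.5, 2)):
        print(f"pass {i}: starts at step {start}, runs {ran} steps")
```

The lower the denoise, the later each loopback starts, so the pass keeps the composition and only reworks detail. That fits the behavior seen here: the subject survives while traits shift.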

Well hello there synthetic gorgeous. FLUX knew this woman was a woman, and with that it used the information from Consistency. I never trained this, and yet it knew, even with the simple prompt "a subject". The woman on the right is a synthetic model generated in PDXL realism, and the woman on the left is more similar to a model I assume was chosen for FLUX. The more loopbacks introduced, the more likely it seems to introduce synthetic elements from the additional LoRA element set. We can assume it's due to the alpha gradient and the step counts trained into it.

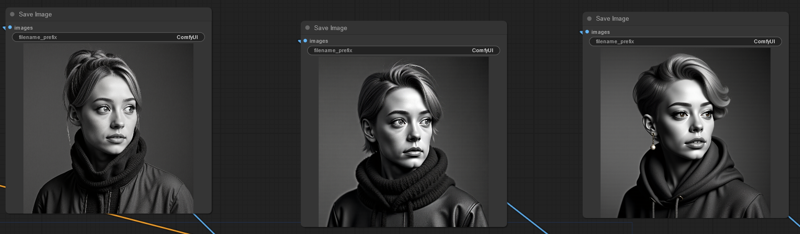

Hey there pupper. I heard you liked ear scratches and pets.

HMMMMMMM... yes that's definitely not a human, and the consistency lora IS in fact running. I simply have no dogs, so it has nothing to attach to. One more for posterity.

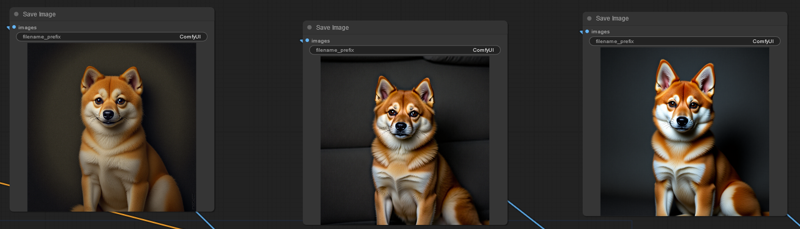

heresy noises intensify

You get the idea. It's treating each use of the caption "a subject" as a generic defaulted concept, and that subject is mostly an object, being, entity, or a concept with more detailed complexity attached to it if desired.

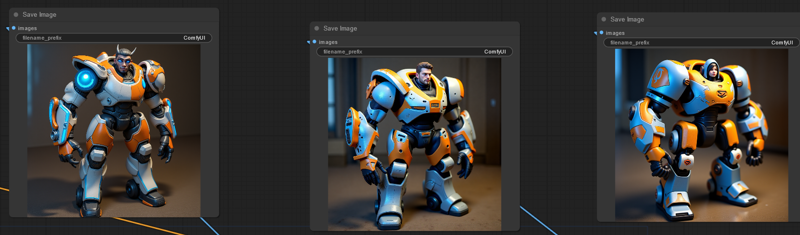

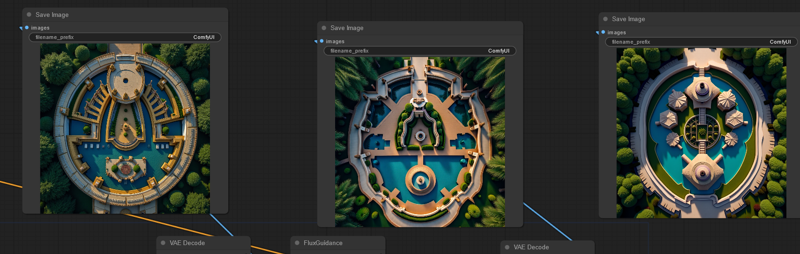

Let's test some camera angles, shall we? Same seeds as before, but let's go ahead and use the prompt:

"a view from above a subject"

Hmmmmmm... very different vibe from the first seed that made an anime girl. An entire structure of sorts. It seems it treats "view" as a wide scale image now, defaulting to "birds-eye view" or something of that nature akin to another model. Let's try again.

Similar. Sky view.

I'm fairly certain no matter how many views from above I do, it's going to produce something akin to a landscape with a compound on it. Even with Consistency weighted towards humans.

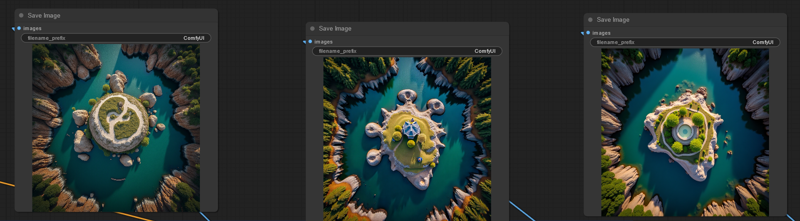

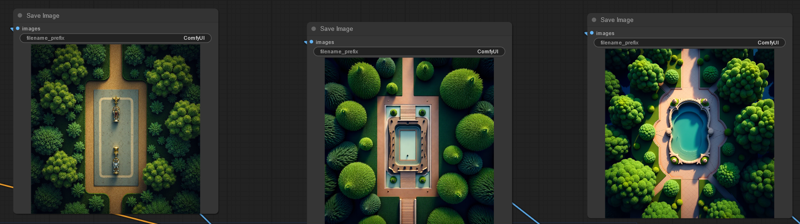

So we can conclude something pretty simple here. There's already camera control of some sort, but how much camera control? Let's make a more complex interaction and test it on a subject of our choosing. Say, an apple.

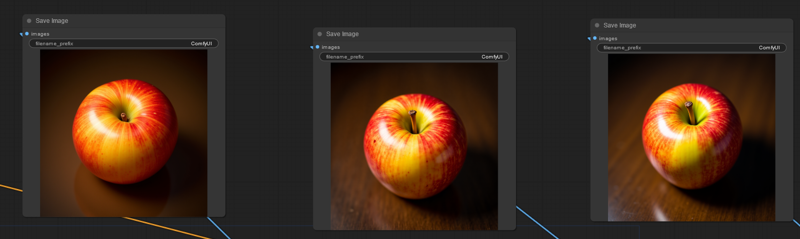

"a view from above to the side of an apple"

Bingo, what we have here is a miscommunication between the trained data and the user.

"a view from above angled to the side of an apple"

Same seed, different apple. How intriguing. It seems the camera control is already present. Let's load up baseline FLUX 1.D and see what I can do with anime girls.

Nice work baseline fp16, let's try a couple more seeds to be sure.

Well, those are all definitely from above, from side images. The problem is, from side is kinda terrible and lacks a lot of real control in the baseline SD1.5 and PDXL, so that's one of the things I plan to fix. However, this showcases some strengths I didn't expect to see in the baseline FLUX with almost no effort.
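If you want to repeat this kind of comparison yourself, the method so far boils down to holding the seed fixed while swapping the prompt and toggling the LoRA. A rough sketch of that test grid follows. The seed values are made up (the post never lists them), `build_runs` is a hypothetical helper, and 0.8 is taken to be the Consistency weight mentioned at the top. It only builds the list of runs; feeding each entry to your generator of choice is left out.

```python
from itertools import product

# Hypothetical seeds; the actual seeds are not given in the post.
SEEDS = [101, 202, 303]

# Prompts quoted from the tests above.
PROMPTS = [
    "a subject",
    "a view from above a subject",
    "a view from above to the side of an apple",
    "a view from above angled to the side of an apple",
]

# 0.0 = baseline FLUX, 0.8 = Consistency applied (assumed to be the LoRA weight).
LORA_SCALES = [0.0, 0.8]


def build_runs(seeds, prompts, lora_scales):
    """Return every seed/prompt/LoRA-weight combination as a dict."""
    return [
        {"seed": seed, "prompt": prompt, "lora_scale": scale}
        for seed, prompt, scale in product(seeds, prompts, lora_scales)
    ]


if __name__ == "__main__":
    for run in build_runs(SEEDS, PROMPTS, LORA_SCALES):
        print(run)
```

Keeping the seed constant across rows is what makes differences attributable to the prompt wording or the LoRA rather than to noise.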

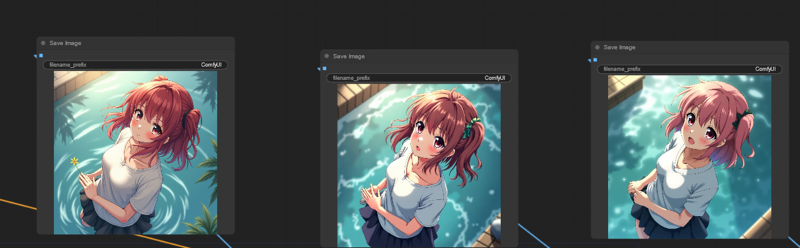

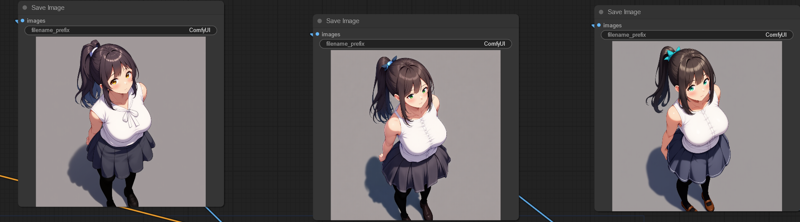

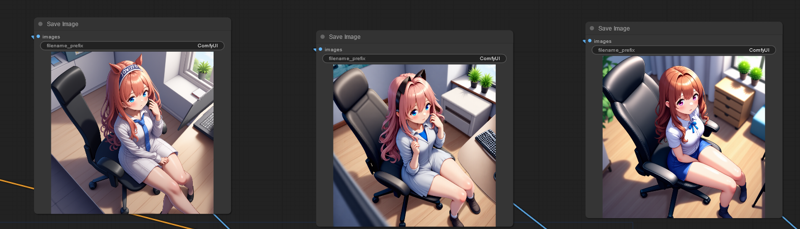

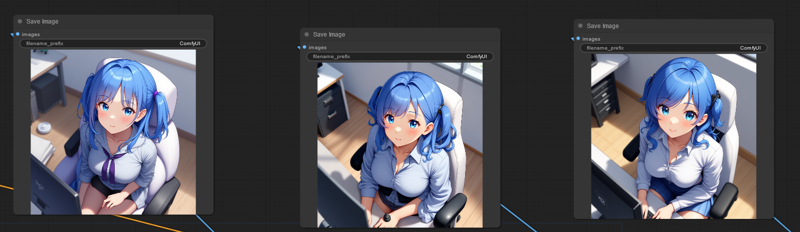

Let's run the same seed, same prompt, using Consistency.

Well hello there gorgeous NAI generic woman. I can never get enough of you.

"a view from above angled to the side of an anime girl"

Gorgeous as she is, she has faults and problems still. As you can see, the background is quite bland. I've basically burned out the backgrounds to superimpose additional subjects with less difficulty.

So clearly consistency has a massive impact. Each loopback introduces more fidelity to ensure she's more lined up with the goal image, which is MORE lined up with the NAI woman.

So here we've established one of two possibilities, both equally intriguing.

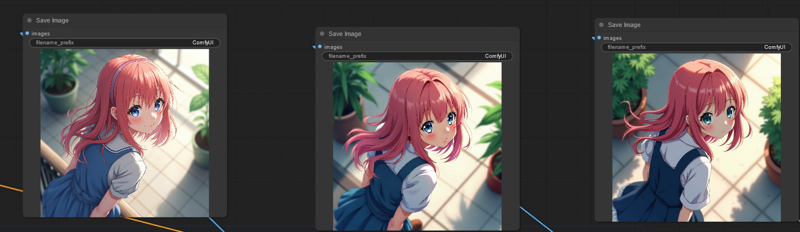

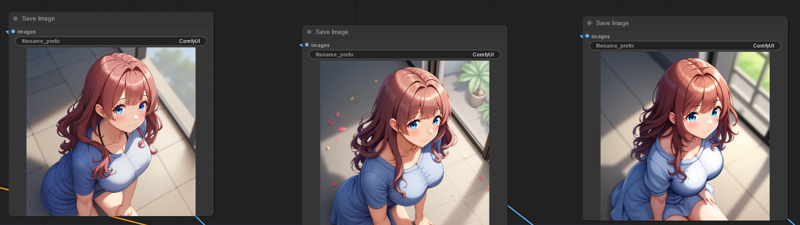

If you identify the tags used in all of the NAI sourced images, you can see hair color, pose, eye color, clothing, and many more details. I didn't specify any of those here, and yet it used them.

I simply used the "anime" tag, which enabled the sfw elements of the lora.

As you can see, she's clearly at the correct angle, and she's offset to the side as appropriate for the couch position and the depth of the image itself.

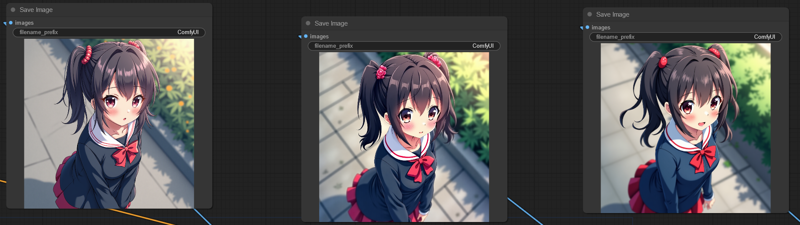

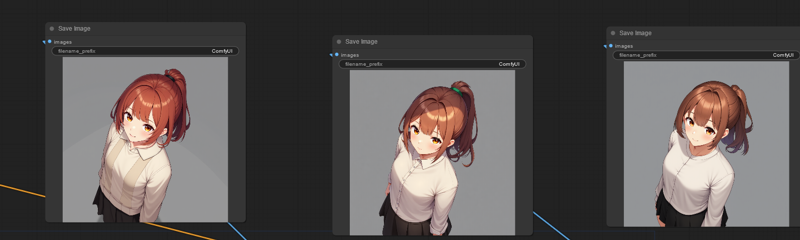

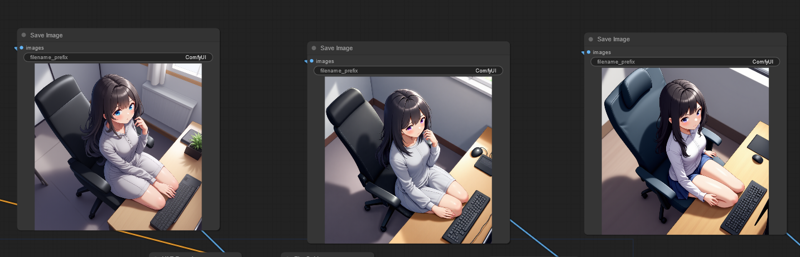

As you can see, there are clearly some continuity issues with the first image. However, the loopback and upscale have fixed it, and... hey, hands where I can see them. bonks

The safe elements of the lora need work, but that's okay.

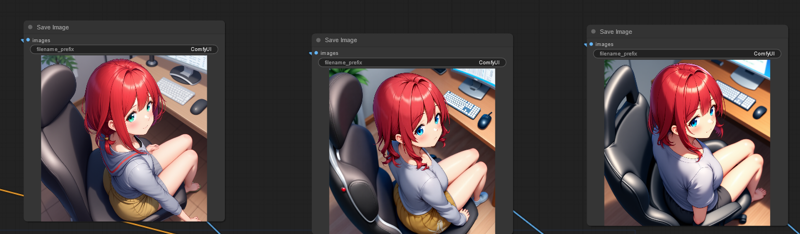

As you can see, the lora itself conforms the anime girl to the NAI specifications. Let me demonstrate some further specifications so you can understand.

Ah not bad, I've identified our first genuine pose artifact in this demonstration.

As you can see, the black haired doll happens to have some bleed-over with some of her kneeling tags. I've noted the problem and will be making sure to address it in the dataset.

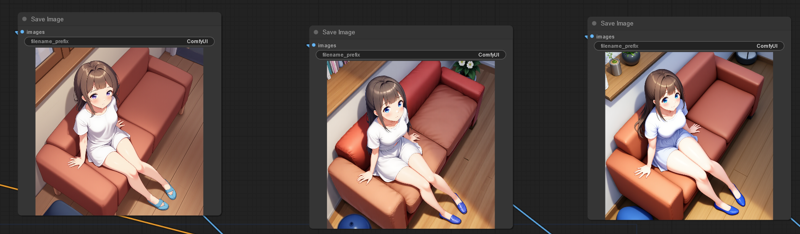

However, as you can see, the girl primarily exists, she has the correct hair color, and she is sitting in a computer chair, save for the third red haired girl image, where her chair seems like something out of a James Bond film.

This concludes my baseline education of camera control and subject fixation. Chat is this real?