flux 1.1 pro ultra might be really strong!

flux-dev isn't weak either!

What I found next will upend what you think you know.

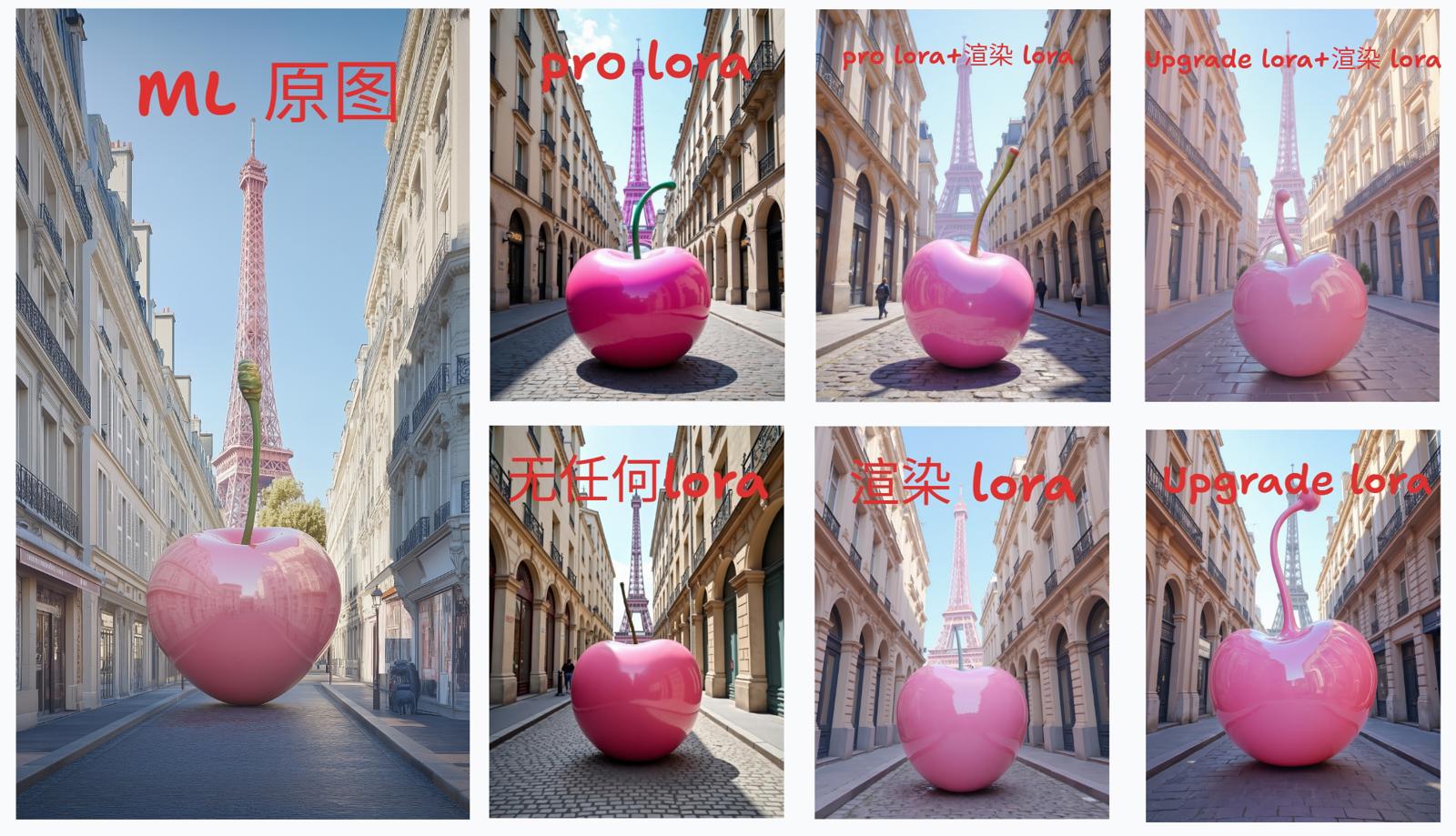

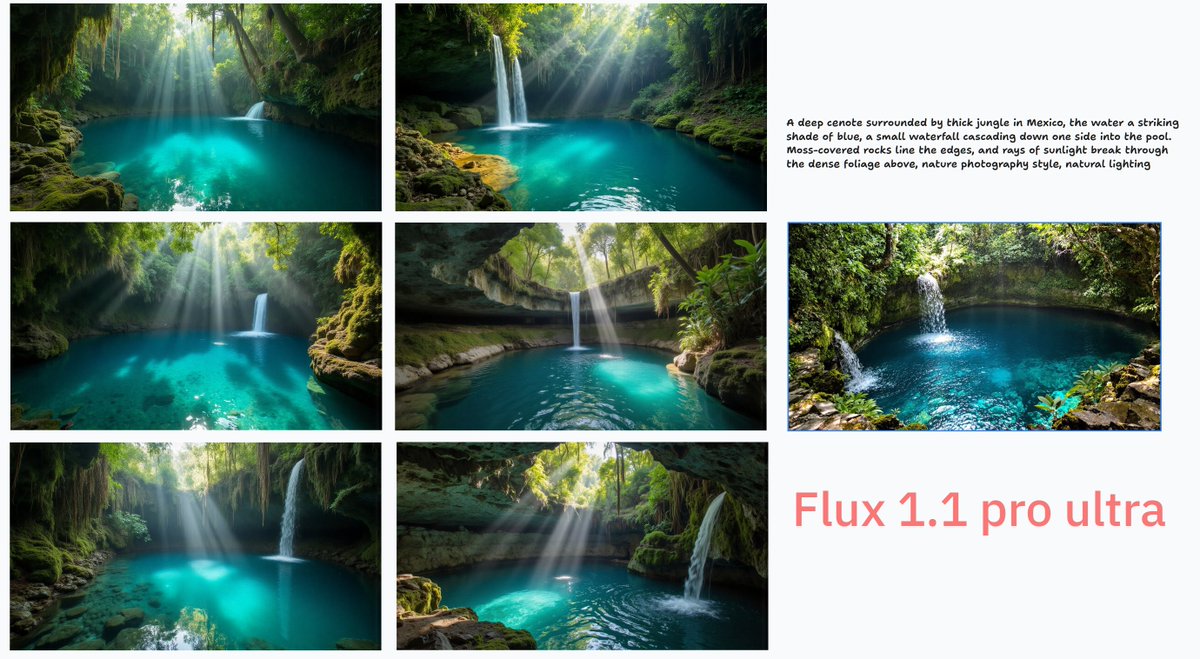

First, take a look at my results: on the right is an image posted by a creator on X, and on the left is an image I generated from his prompt.

Let me start with the results: the two images above were made with one LoRA and one ComfyUI workflow. The LoRA is the key to unlocking flux-dev's aesthetic foundation, while the ComfyUI workflow is the key to unlocking creative results.

If you are interested, my detailed description of this work follows.

If you want to try it right away, download the models below:

Model Address

Three enhancement LoRAs are now available:

Reality version

https://civitai.com/models/1000008?modelVersionId=1120701

Anime version

https://civitai.com/models/1000105?modelVersionId=1120805

My good friend ilab has also open-sourced his flux-dev realistic detail-enhancement LoRA. Let's celebrate together!

https://civitai.com/models/1001215?modelVersionId=1122111

It took me about 20 days to make this LoRA and workflow.

It grew out of two things.

The first was an article walking through the Flux code on the blog of Zhou Yifan, a PhD student at the School of Computer Science and Engineering, Nanyang Technological University, Singapore: https://zhouyifan.net/2024/09/03/20240809-flux1/

The second was the detail-enhancement LoRA created by my friend ilab.

Both of them inspired me greatly: one showed me a method for creative fusion, and the other showed me how to unlock flux-dev's locked-in aesthetics.

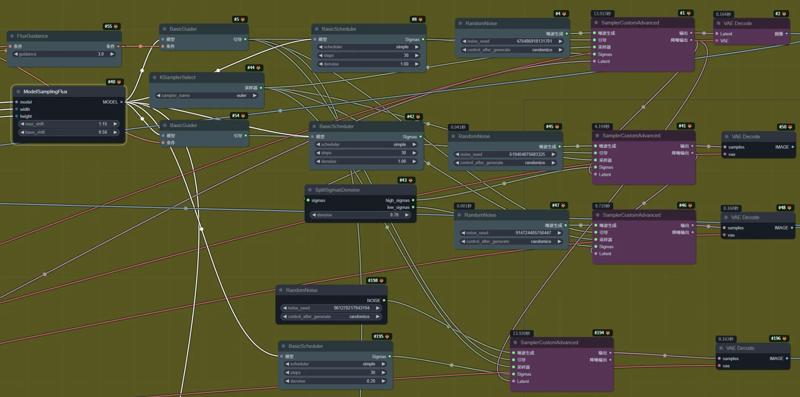

About the Creative Fusion Method workflow:

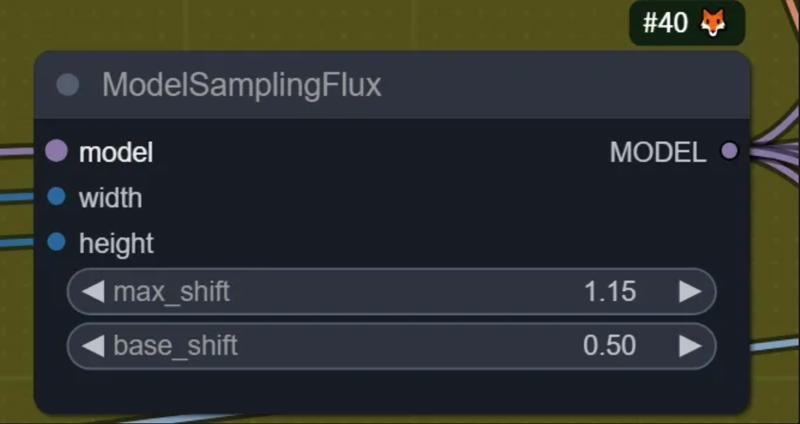

In his walkthrough of the Flux code, Zhou Yifan points out a parameter called mu. It is computed from the image's size (its token count) by a fixed formula and is then used to shift the timestep schedule. Building on this, the max shift and min shift values can be adjusted to open up creative possibilities, and splitting the sigmas into high and low timesteps helps as well, so I use three samplers every time to extend the creative range.
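
The shift that mu applies to the schedule can be sketched in a few lines of Python. This is a minimal sketch modeled, as I understand it, on the `time_shift` helper in Black Forest Labs' reference sampling code, with its exponent fixed at 1: each timestep t of a linear 1 → 0 schedule becomes exp(mu) / (exp(mu) + (1/t - 1)). The function names here are my own and plain floats stand in for tensors. With mu = 0 the schedule is unchanged; a larger mu keeps more of the steps at high noise.

```python
import math


def time_shift(mu: float, t: float) -> float:
    """Shift one timestep t in [0, 1] by mu (sketch of the Flux-style shift, exponent 1)."""
    if t <= 0.0:
        return 0.0  # limit of the formula as t -> 0; avoids dividing by zero
    return math.exp(mu) / (math.exp(mu) + (1.0 / t - 1.0))


def shifted_schedule(num_steps: int, mu: float) -> list[float]:
    """Linear schedule from 1 down to 0 with num_steps + 1 points, then shifted by mu."""
    ts = [1.0 - i / num_steps for i in range(num_steps + 1)]
    return [time_shift(mu, t) for t in ts]


if __name__ == "__main__":
    for mu in (0.0, 1.15, 3.0):
        print(mu, [round(s, 3) for s in shifted_schedule(4, mu)])
```

The endpoints stay at 1 and 0 whatever mu is; only the spacing of the intermediate sigmas moves.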

The calculation of mu depends on two parameters of the ModelSamplingFlux node, max_shift and base_shift (the "min shift" above), together with the width and height set on that node.
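
For reference, here is a small Python sketch of how I understand ComfyUI's ModelSamplingFlux node to turn these inputs into mu: a straight line through (256 tokens, base_shift) and (4096 tokens, max_shift), evaluated at the image's token count of width × height / 256 (8× VAE downsampling per side, then 2×2 patches). The function name `flux_mu` is hypothetical; check the node's source in your ComfyUI version before relying on exact numbers.

```python
def flux_mu(max_shift: float, base_shift: float, width: int, height: int) -> float:
    """Linear interpolation of mu over the image token count (sketch of the ModelSamplingFlux formula)."""
    x1, x2 = 256, 4096                          # token counts anchored to base_shift and max_shift
    slope = (max_shift - base_shift) / (x2 - x1)
    intercept = base_shift - slope * x1
    tokens = width * height / (8 * 8 * 2 * 2)   # VAE /8 per side, then 2x2 patches
    return tokens * slope + intercept


if __name__ == "__main__":
    for size in (768, 1024, 1536):
        print(size, round(flux_mu(1.15, 0.5, size, size), 4))
```

With the defaults, mu equals max_shift (1.15) at 1024×1024 and keeps growing linearly with pixel count beyond that, which may be part of why resolution changes how far the shift values can be pushed.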

In my tests, as long as the generated image is 1536×1536 or larger, both values tolerate settings as high as 5.

At smaller sizes, however, the image often breaks up into fragmented, blurry patches, and the quality is poor.

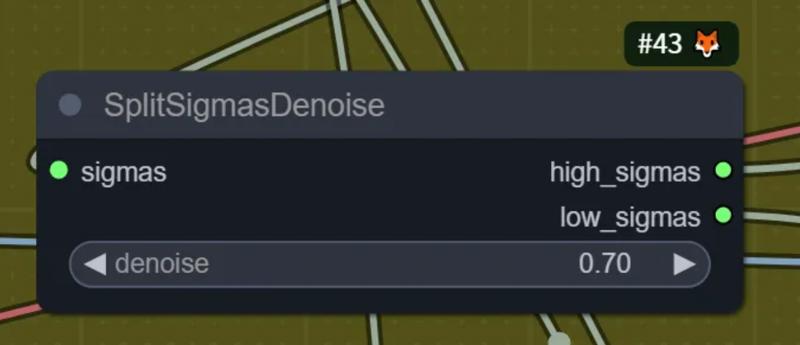

In the SplitSigmasDenoise node further along the sigma chain, the denoise value controls how much the subsequent images vary, so it can be adjusted to suit the prompt and produce more creative results; there is no single fixed value.
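
The split itself is simple. Below is a minimal Python sketch of what I understand SplitSigmasDenoise to do: the last round(steps × denoise) steps of the schedule go to low_sigmas, the rest to high_sigmas, and the two lists share the boundary sigma so one sampler can hand its latent to the next. The function name and the edge-case handling are my own assumptions, not the node's code.

```python
def split_sigmas_denoise(sigmas: list[float], denoise: float) -> tuple[list[float], list[float]]:
    """Split a descending sigma schedule into (high_sigmas, low_sigmas) sharing one boundary value."""
    steps = max(len(sigmas) - 1, 0)
    low_steps = round(steps * denoise)   # number of steps handed to the low-sigma sampler
    boundary = steps - low_steps         # index where the two schedules meet
    return sigmas[: boundary + 1], sigmas[boundary:]


if __name__ == "__main__":
    schedule = [1.0, 0.75, 0.5, 0.25, 0.0]
    for d in (0.3, 0.5, 1.0):
        print(d, split_sigmas_denoise(schedule, d))
```

A higher denoise gives the second sampler more of the schedule, starting from a noisier sigma, which fits the larger variation described above.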

So the entire chain works as follows:

The FluxGuidance value also has a wide tolerance here: it can go as low as 1.5, which makes the images more creative!

To make the images sharper and more textured, I use four sampling passes, which produce three high-quality images in one run.

The 1st pass samples the conventional way, and its results are correspondingly conventional.

The 2nd and 3rd passes take the latent from above, but because they use a denoise of 1.0, the results change dramatically. The 2nd pass, however, often produces a noisy image.

The 4th pass is there because the 3rd pass's output is often lower in quality, so I redraw it once more at a low denoise.

So just by changing the values of three nodes, Guidance, shift, and sigmas, I can get a wide range of creative results out of flux-dev.

In my experience, the usable range for Guidance is 1.5-4, and its effect is simple and blunt.

For shift, besides the defaults of max 1.15 and min 0.5, I also recommend some very exaggerated settings:

(max=3, min=2.95) and (max=4, min=5)

They behave like mischievous little imps: they gave me endless creativity, but they also made my images fall apart badly, so I had to add one more sampling pass to repair most of the damage.

For sigmas, my personal favorites are the values 0.1, 0.3, and 0.7. Feel free to test others; this setting does not have a decisive effect.
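
To keep track of these settings, the combinations can be generated as a sweep. This is a hypothetical Python helper: the guidance endpoints come from the 1.5-4 range above, the shift pairs and the 0.1/0.3/0.7 values come from this section, and I am assuming the sigma values are SplitSigmasDenoise denoise values. It also reports the mu each shift pair would give, using the ModelSamplingFlux-style line through (256 tokens, base_shift) and (4096 tokens, max_shift) as I understand it.

```python
from itertools import product

GUIDANCE = [1.5, 4.0]                                   # ends of the usable FluxGuidance range
SHIFT_PAIRS = [(1.15, 0.5), (3.0, 2.95), (4.0, 5.0)]    # (max_shift, base_shift)
DENOISE = [0.1, 0.3, 0.7]                               # assumed SplitSigmasDenoise values


def mu_for(max_shift: float, base_shift: float, width: int, height: int) -> float:
    """mu on the line through (256 tokens, base_shift) and (4096 tokens, max_shift)."""
    slope = (max_shift - base_shift) / (4096 - 256)
    tokens = width * height / 256
    return base_shift + slope * (tokens - 256)


def sweep(width: int = 1536, height: int = 1536) -> list[dict]:
    """Every (guidance, shift pair, denoise) combination, with the resulting mu."""
    runs = []
    for g, (mx, base), d in product(GUIDANCE, SHIFT_PAIRS, DENOISE):
        runs.append({
            "guidance": g,
            "max_shift": mx,
            "base_shift": base,
            "denoise": d,
            "mu": round(mu_for(mx, base, width, height), 4),
        })
    return runs


if __name__ == "__main__":
    runs = sweep()
    print(len(runs), "runs")
    for mx, base in SHIFT_PAIRS:
        print((mx, base), round(mu_for(mx, base, 1536, 1536), 4))
```

One thing the numbers make visible: with (max=4, min=5) the base value is larger than the max, so the line slopes downward and mu shrinks as resolution grows, the opposite of the other two pairs.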

About the aesthetic LoRA models:

With the workflow above, the portraits and products we generate often feel overfitted: ugly, and trapped inside a narrow aesthetic range. I set out to create these LoRAs with the goal of surpassing Midjourney and Nijijourney.

So I made two:

One is a realistic LoRA, which I call the Aesthetic Upgrade LoRA.

The other is an illustration LoRA, which I call the Painting Upgrade LoRA.

(The training set for the Painting Upgrade LoRA belongs to my friend MR.LU, who has not given me permission to open-source it. The LoRA itself is here: https://civitai.com/models/1000105?modelVersionId=1120805)

I have seen many people train LoRAs to improve flux-dev's quality, but their gains in aesthetics and creativity are limited. I don't have especially strong technical skills; I simply train on each set of material and test the final result. This approach comes from my friend ilab, who created a detail-enhancement LoRA that works better than any open-source alternative, while my own focus is artistic creativity. His results convinced me that this effect can also be achieved with a small training set.

Clearly, though, flux-dev lacks tags for proper nouns altogether, so we can only improve it through artistic style. This is the biggest hurdle in surpassing MJ and NIJI: first rendering, then proper nouns (including IPs). I have made many rendering LoRAs, and they work, but not yet to my satisfaction.

This method has another advantage: it does not interfere with using other LoRAs at the same time. I can even add two more LoRAs for a target image, one for clothing and background and one for the face. This surprised me, because in most cases it works!

Yes, I have already made all of this public. If you'd like to talk with me, you can follow me on X: https://x.com/changli71829684. I mainly post in China, where my day job is selling workflows and teaching people to use AI. Whenever there is new technology, I'll do my best to share it~
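
Why stacking can work at all: in the usual LoRA formulation, each LoRA adds its own low-rank update to a frozen weight, W' = W + Σ s_i·B_i·A_i, so several LoRAs simply sum on top of the base model in any order. The NumPy sketch below shows that summation with random toy matrices; the shapes, names and strengths are made up, and it says nothing about whether two particular LoRAs will cooperate visually, since their updates can still overlap.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, rank = 64, 64, 4

W = rng.standard_normal((d_out, d_in))  # frozen base weight (toy stand-in for one flux-dev layer)


def make_lora(rank: int) -> tuple[np.ndarray, np.ndarray]:
    """Random low-rank factors B (d_out x r) and A (r x d_in) for a toy LoRA."""
    return rng.standard_normal((d_out, rank)), rng.standard_normal((rank, d_in))


def apply_loras(base: np.ndarray, loras: list[tuple[np.ndarray, np.ndarray, float]]) -> np.ndarray:
    """W' = W + sum_i strength_i * B_i @ A_i."""
    merged = base.copy()
    for B, A, strength in loras:
        merged += strength * (B @ A)
    return merged


aesthetic = make_lora(rank)  # hypothetical stand-ins for the aesthetic, outfit and face LoRAs
outfit = make_lora(rank)
face = make_lora(rank)

W_all = apply_loras(W, [(*aesthetic, 1.0), (*outfit, 0.8), (*face, 0.8)])
delta = W_all - W
print(np.linalg.matrix_rank(delta))  # at most 3 * rank
```

Because the deltas add, applying the LoRAs one at a time or all together gives the same merged weight.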

I spent more than $1,000 on fal.ai training, and I tested it hundreds of times on other servers and on my own computer.

So if you share my method elsewhere, please credit the source~ I see that as your respect for the open-source world!

Thanks to FLUX for making the dev model available to us! I'd love to use your future products too!

Commercial users must strictly follow Black Forest Labs' usage terms~