Theory

This post introduces a set of configuration files and modifications to popular training scripts aimed at improving text encoder alignment during LoRA training. The configurations for AI-Toolkit are minor adaptations of the config YAML files it already provides. I have also included modified versions of flux_train.py for Kohya_ss that can replace the existing file in your installation, providing a plug-and-play solution for users eager to test these improvements.

These changes address overlooked text encoder parameters in many training pipelines, which directly impact training stability and model accuracy. While the adjustments are not guaranteed to make every single training outcome "better," they demonstrate clear overall improvements, particularly in stability and alignment with the model's text encoders.

The provided resources offer a starting point for users to experiment, validate, and expand upon these findings while awaiting official integration from the creators of AI-Toolkit, Kohya_SS, and other widely used frameworks.

How did I get here?

Text Encoder Alignment "Fix":

This Reddit post was my first exposure to the idea that Stable Diffusion 3.5 Large (and I have come to learn even Flux.1 Dev) would greatly benefit from defining known text encoder parameters when running training scripts on established architecture.

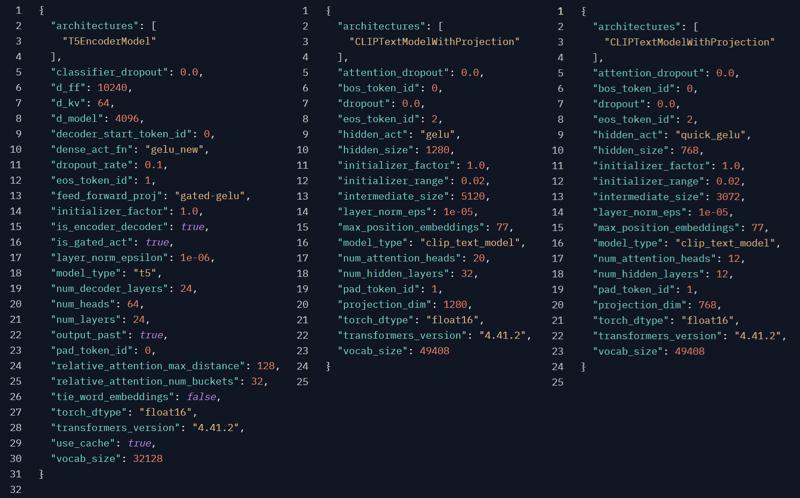

While the T5 max length of 154 was already defined in popular training scripts like SimpleTuner and the Flux/SD3.5 branch of Kohya_SS, I went further by defining all documented parameters for each text encoder based on the official model config files available on their respective HuggingFace pages.
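As a rough illustration of what "defining all documented parameters" means in practice, the sketch below lists the parameters that appear in a text encoder config but are never set by a training script. The JSON excerpt, the script_params dictionary, and the undefined_parameters helper are all assumptions made for this example; a real check would read the config.json shipped with the model instead.

```python
import json

# Illustrative config excerpt (an assumption for this sketch). A real run
# would read the text encoder's config.json from a local copy of the model.
EXAMPLE_CONFIG_JSON = """
{
  "hidden_size": 768,
  "max_position_embeddings": 77,
  "num_hidden_layers": 12,
  "num_attention_heads": 12
}
"""


def undefined_parameters(encoder_config: dict, script_params: dict) -> list[str]:
    """Return the config keys the training script never sets, sorted by name."""
    return sorted(key for key in encoder_config if key not in script_params)


encoder_config = json.loads(EXAMPLE_CONFIG_JSON)

# Hypothetical training-script settings: only the max length is defined.
script_params = {"max_position_embeddings": 77}

missing = undefined_parameters(encoder_config, script_params)
print(missing)
```

Anything this kind of check reports is only a candidate for explicit definition; whether setting it changes training depends on whether the script actually reads it.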

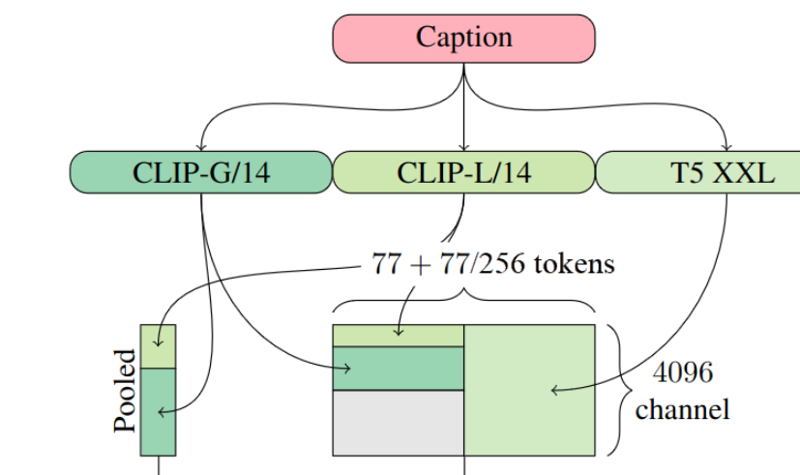

This image from StabilityAI, intended to explain the relationship between the text encoders, added to the general confusion and led many to believe that the T5 max length for SD3 models was 256 until about three weeks ago.

At first, I noticed more improvements when I added the CLIP max length of 77 (already set to 75 in Kohya_SS, likely for padding reasons) and incorporated many other previously undefined parameters to match the values in the text encoder config files included with Stable Diffusion 3.5 Large:

The defined text encoder parameters from SD3.5L's HuggingFace page.
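One plausible reading of the 75 versus 77 difference is that 75 counts only the prompt tokens, with a start token and an end token added afterwards to reach 77. The sketch below shows that arithmetic under this assumption. The frame_clip_tokens helper is hypothetical, and padding with the end-of-text id is a simplification that not every tokenizer shares.

```python
BOS_ID = 49406  # CLIP start-of-text token id
EOS_ID = 49407  # CLIP end-of-text token id


def frame_clip_tokens(content_ids: list[int], content_len: int = 75,
                      pad_id: int = EOS_ID) -> list[int]:
    """Truncate to content_len, wrap in BOS/EOS, and pad to content_len + 2."""
    body = content_ids[:content_len]
    framed = [BOS_ID] + body + [EOS_ID]
    total_len = content_len + 2
    return framed + [pad_id] * (total_len - len(framed))


short_ids = frame_clip_tokens([320, 1929])        # two arbitrary content ids
long_ids = frame_clip_tokens(list(range(1, 101)))  # 100 ids, truncated to 75

print(len(short_ids), len(long_ids))
```

Both sequences come out at 77 positions, which is why a limit of 75 in one script and 77 in another can describe the same encoder input.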

Impact on Training Stability:

Defining these parameters resulted in noticeable improvements in training stability and accuracy, particularly for Stable Diffusion 3.5 and Flux Dev, and the benefits should carry over to other models using complex multi-text encoder setups.

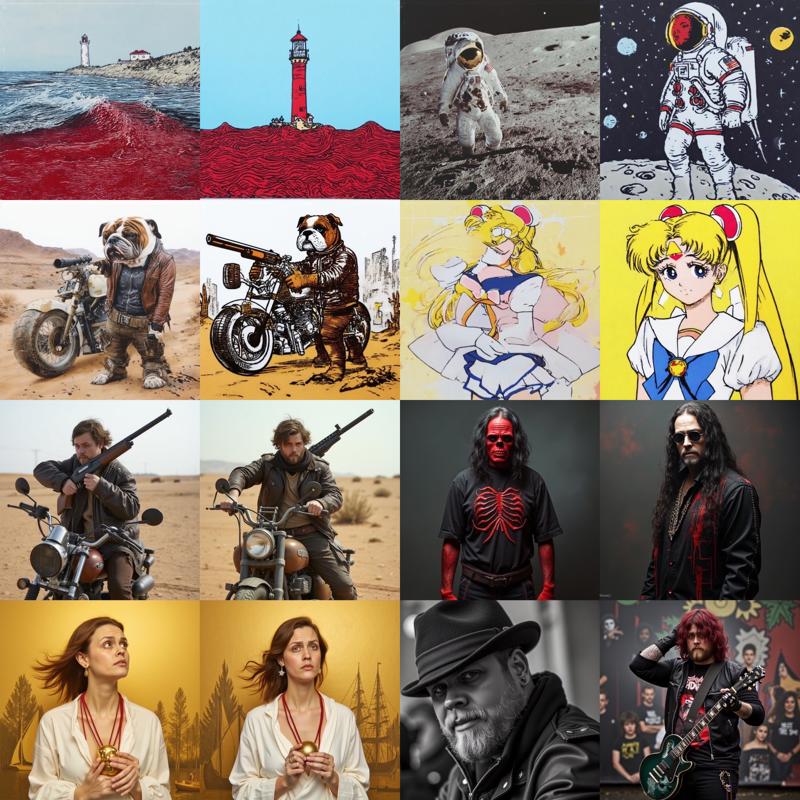

Before/After examples of sample training previews with the only change being these extra parameter definitions.

Importantly, while not every 1:1 comparison yields a “better” result, the overall consistency and reliability of training have significantly improved.

The changes are not a ground truth or a perfect fix but represent a substantial step forward in aligning text encoders with model weights.

Evidence and Validation

To validate that my findings were not isolated to AI-Toolkit or Stable Diffusion 3.5, I have tested the alignment fix across multiple frameworks and models:

AI-Toolkit:

Initial testing was conducted using AI-Toolkit, where I observed consistent improvements in training stability and alignment for Stable Diffusion 3.5.

Improvements carried over to Flux.1 Dev with noticeably fewer deformations and "plastic-like" textures, which were likely occurring more frequently because of the previous misalignment.

This Before/After shows results from an SD3.5L style LoRA trained at rank 16 with AI-Toolkit. Training seemed to stabilize closer to step 1000, with the style adaptation occurring at lower training steps.

Kohya_SS:

At the time of writing, SD3.5 training with Kohya_SS is not well documented, and there was no default SD3.5 config file for testing within the web GUI.

Luckily fellow CivitAI user Thefool has us covered with a custom batch file that can train SD3.5 models.

I will share an updated version of sd3_train.py if I have time to do so before the author sees the changes I have already pushed for flux_train.py and makes the required changes to the text encoder parameters.

I implemented the same parameter alignment fix within the Kohya_SS training framework. Despite its default configuration using a CLIP max limit of 75 (likely for padding reasons), the improvements carried over, confirming that the fix is not tied exclusively to AI-Toolkit.

Flux Dev Before/After examples from both ai-toolkit and Kohya_SS on style and character LoRAs, demonstrating the changes without any altered parameters in the GUI. I only swapped out flux_train.py with my modified version.

Flux Dev models also showed similar benefits, demonstrating that this approach generalizes well across different architectures.

Unexplored Scripts:

While I have not yet fully analyzed the codebases of other training scripts such as SimpleTuner or DreamBooth, I suspect similar misalignment may exist. These scripts might also benefit from defining the text encoder parameters more explicitly, as seen with AI-Toolkit and Kohya_SS.

Implications for Other Models:

These results suggest that models like SD3.5M, Flux.1 Schnell, or really any other diffusion-based architecture (including new video models that now support LoRA training) could also see improvements. The benefits are not limited to a single model or training pipeline: the approach only requires knowing and matching the original parameters, which makes it highly adaptable.

Before/After SD3.5L inference example without optimizing settings with rank 64 LoRAs.

Before/After SD3.5L inference from an over-fit checkpoint shows that while there is a global increase in training stability, this will not magically fix all AI hands, deformations, and other common imperfections. It is important to have realistic expectations.

Why Does This Matter?

Implications for LoRA and Fine-Tune Training:

While it is possible that some closed-source scripts, or hopefully at the very least DreamBooth, already take this into account, the most widely used tools seemingly do not.

If training scripts do not account for text encoder parameters as defined in the model config, the resulting LoRAs and fine-tunes are likely misaligned.
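A pre-flight check for this kind of misalignment could look something like the sketch below, which compares the values a training run uses against the values documented for the model and reports any that differ. The key names and the find_mismatches helper are hypothetical; the numbers are the ones discussed earlier in this post (77 for CLIP, 154 versus 256 for T5).

```python
def find_mismatches(reference: dict, training: dict) -> dict:
    """Map each shared key whose values differ to (reference, training)."""
    return {
        key: (reference[key], training[key])
        for key in reference
        if key in training and training[key] != reference[key]
    }


# Hypothetical key names; values are the ones mentioned earlier in the post.
reference = {"clip_max_length": 77, "t5_max_length": 154}
training = {"clip_max_length": 77, "t5_max_length": 256}

mismatches = find_mismatches(reference, training)
for key, (expected, actual) in mismatches.items():
    print(f"{key}: model config says {expected}, training uses {actual}")
```

Keys that the training side never sets are deliberately skipped here, since an undefined parameter is a different problem from a wrongly defined one.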

This issue has far-reaching implications, as it suggests that the majority of models trained without this consideration could benefit from retraining with proper alignment.

Model-Specific Parameters:

Each model has unique values and parameters for its text encoders. For instance, the configuration for Stable Diffusion 3.5 differs from that of Flux and other models. Adapting to these specific settings can optimize training results.
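Because each model family ships a different set of text encoders, the first step is knowing which config files to read. The sketch below assumes the diffusers-style repository layout, where Stable Diffusion 3.5 has three text encoder subfolders and Flux has two. The family labels, the encoder_config_paths helper, and the snapshot directory names are made up for this example.

```python
from pathlib import Path

# Text encoder subfolders in the diffusers-format repositories.
# The dictionary keys are labels chosen for this sketch.
TEXT_ENCODER_SUBFOLDERS = {
    # CLIP-L, CLIP-G, T5-XXL
    "sd3.5": ["text_encoder", "text_encoder_2", "text_encoder_3"],
    # CLIP-L, T5-XXL
    "flux": ["text_encoder", "text_encoder_2"],
}


def encoder_config_paths(model_family: str, snapshot_dir: str) -> list[Path]:
    """Return the config.json path of every text encoder for a model family."""
    if model_family not in TEXT_ENCODER_SUBFOLDERS:
        raise KeyError(f"unknown model family: {model_family!r}")
    root = Path(snapshot_dir)
    return [root / sub / "config.json"
            for sub in TEXT_ENCODER_SUBFOLDERS[model_family]]


# Hypothetical local directories holding downloaded model snapshots.
for family, folder in [("sd3.5", "models/sd35"), ("flux", "models/flux")]:
    for path in encoder_config_paths(family, folder):
        print(family, path)
```

Each returned file has to be read separately, since the values that suit one encoder in one model do not automatically apply to another.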

Broad Applicability:

This discovery is not limited to Stable Diffusion 3.5. Preliminary tests suggest that SD3.5M, Flux.1 Schnell, and even older models such as SDXL could benefit from defining documented text encoder parameters while training.

Why Text Encoder Alignment Matters in AI Model Training

When big players like Stability AI or Black Forest Labs train a new model, they use scripts that determine how the U-Net (the part of the model that generates images; SD3.5 and Flux actually use a diffusion transformer in this role, but I will keep saying U-Net for simplicity) connects with the text encoders (the parts that interpret text prompts).

Text encoders, like T5 or CLIP, are critical to the process because they act as translators. When you type a word like "car," the text encoder converts it into a mathematical representation called a latent vector, a kind of "language" the model can understand. These latent vectors are then paired with image data to teach the U-Net what "car" should look like.

Simple Breakdown of How Training Works

Text Encoder's Role: From the start, the text encoder knows how to break down your prompt into latent vectors (e.g., "car" becomes a simple tensor vector representing that word).

U-Net's Learning Process: Initially, the U-Net knows nothing. But as it's shown pictures of cars paired with the "car" vector from the text encoder, it gradually learns to associate the latent text data with visual features.

Alignment Is Key: For the model to work, the relationship between the text encoder's latent data and the U-Net's output must align perfectly. If the text encoder is even slightly "off," it’s like trying to solve a puzzle where the pieces don’t quite fit.

Why the Fix Matters

When models like Stable Diffusion 3.5 or Flux Dev are trained, their configuration files define complex parameters that dictate how the text encoders should be formatted.

In my experiments, matching the training configurations to the original model settings (like ensuring the CLIP hidden size matches the value used in the original training) resulted in better alignment. This means:

More accurate training: The U-Net learns better relationships between text and image latents.

Stable outputs: The training process is less prone to generating artifacts or "plastic-like" textures.

Since most models are trained on top of established architectures (rather than starting from scratch), re-aligning these parameters is like re-tuning an instrument to ensure it plays in harmony with the rest of the orchestra.

NOTE: You may see more over-fitting at previously desirable step checkpoints. This is expected as the model is learning faster and more accurately than it was previously.

Note: you can download and look through a collection of unbiased before/after training preview images from some of the many tests I have run so far on my Patreon, where everything will ALWAYS be FREE and never behind any kind of paywall.

Call to Action:

I believe this discovery has significant potential to improve the AI training process, but further validation and exploration are required. Here's how the community can help:

Test and Expand:

I encourage the community to implement these changes in various training pipelines, including SimpleTuner, DreamBooth, and other popular frameworks, to determine whether similar improvements can be achieved.

Testing on different models (SD3.5M, Flux Schnell) will help verify the universality of these adjustments and possibly unlock new uses for less popular models that had previously been ignored due to poor training results.

Discover New Parameters:

There may be additional unknown or overlooked parameters in the text encoder configurations that could further enhance training accuracy and stability. Collaborative efforts to identify and test these parameters are critical.

Share Findings:

Community members are invited to share their results, whether successful or not, to contribute to a broader understanding of how text encoder alignment impacts model training.

Strengthen Open Source:

By working together, we can refine training tools and methodologies, advancing the capabilities of open-source AI for everyone.

Final Note

This discovery represents a promising step toward more stable and accurate AI training. By aligning training scripts with documented model configurations and exploring potential unknowns, we can unlock new possibilities for generative AI. I am committed to sharing my findings and configurations and look forward to the community’s support in refining and expanding upon these efforts.

Edit: I would like to fully acknowledge that this is a working theory and a sloppy first implementation of proposed fixes to improve overall training stability, and that I have most certainly overcompensated when attempting to define all of the text encoder parameters.

There are likely a number of lines in the ai-toolkit configs that are going to be ignored. But those that are not ignored are clearly having a meaningful, positive impact on training results, and I hope to isolate the unnecessary parameters and better adapt these changes to their respective training systems when I have more time to do so.
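One way to start isolating ignored parameters is to record which config keys a script actually reads. The sketch below is a generic technique, not something AI-Toolkit or Kohya_SS provides: TrackingConfig is a hypothetical dictionary wrapper, and hypothetical_trainer_setup stands in for a real training script. It only catches reads through square brackets and get(), so iteration over the config would go unnoticed.

```python
class TrackingConfig(dict):
    """A dict that records which keys are read through [] or get()."""

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.accessed = set()

    def __getitem__(self, key):
        self.accessed.add(key)
        return super().__getitem__(key)

    def get(self, key, default=None):
        self.accessed.add(key)
        return super().get(key, default)

    def unused_keys(self) -> list[str]:
        """Return the keys that were defined but never read, sorted by name."""
        return sorted(set(self.keys()) - self.accessed)


def hypothetical_trainer_setup(config):
    """Stand-in for a training script that reads only some of its settings."""
    return config["t5_max_length"], config.get("clip_max_length", 77)


# Hypothetical key names; clip_hidden_size is defined but never read.
config = TrackingConfig(t5_max_length=154, clip_max_length=77,
                        clip_hidden_size=768)
hypothetical_trainer_setup(config)
print(config.unused_keys())
```

A key that shows up as unused cannot be responsible for any change in results, which narrows down where the observed improvements come from.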

I felt compelled to share my findings as soon as possible. Once I noticed how substantial the initial improvements were with even just SD3.5L, I felt an ethical responsibility to cancel my personal plans for New Year's Eve and do my best to compile and share my best guess to explain my observations with the open-source community.