Hi all, I have updated the GUI to support Wan2.1 training.

As before, the UI is similar to the Hunyuan one, and it supports both I2V and T2V.

Remember to turn on the --clip option to load the CLIP vision model if you are going to train I2V.

I reworked all the model-loading logic, so you can now choose the model locations yourself. The first time, you need to type the paths in; after that, the GUI remembers your last input.

As usual, just update the GUI file from here:

https://github.com/TTPlanetPig/Gui_for_musubi-tuner

I have built a portable package without models, but you still need Visual Studio (MSVC) and CUDA installed on your computer in advance.

https://drive.google.com/file/d/1Bi3Fe5xoKacd42-YqTIk56lYrRgJqciF/view?usp=drive_link

password: TTPlanet

Please download the latest GUI file from my GitHub and replace the one in the portable package, as I just fixed some bugs (2025/03/11).

The code is from Kohya:

https://github.com/kohya-ss/musubi-tuner

Setting up the environment can get a little complex, so I have merged everything into one Python-embedded package. Here it is:

Download Link (with Google Drive):

Download Here

File password: "汤团猪TTPlanet"

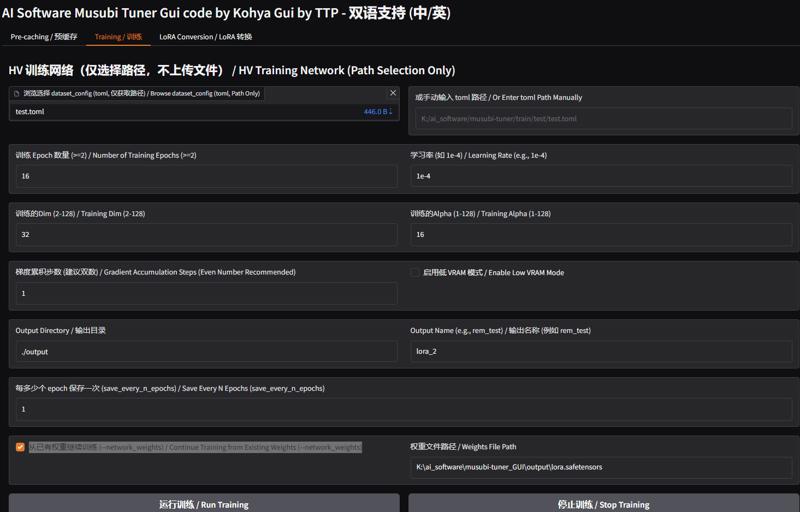

Please copy everything inside the quotes (" ") and use it as the password when unzipping. 7z is recommended.

The updated English-version GUI file is here. Download the .py file and replace the one in the main folder. This version includes the resume function!

https://github.com/TTPlanetPig/Gui_for_musubi-tuner/blob/main/train_gui.py

What’s included:

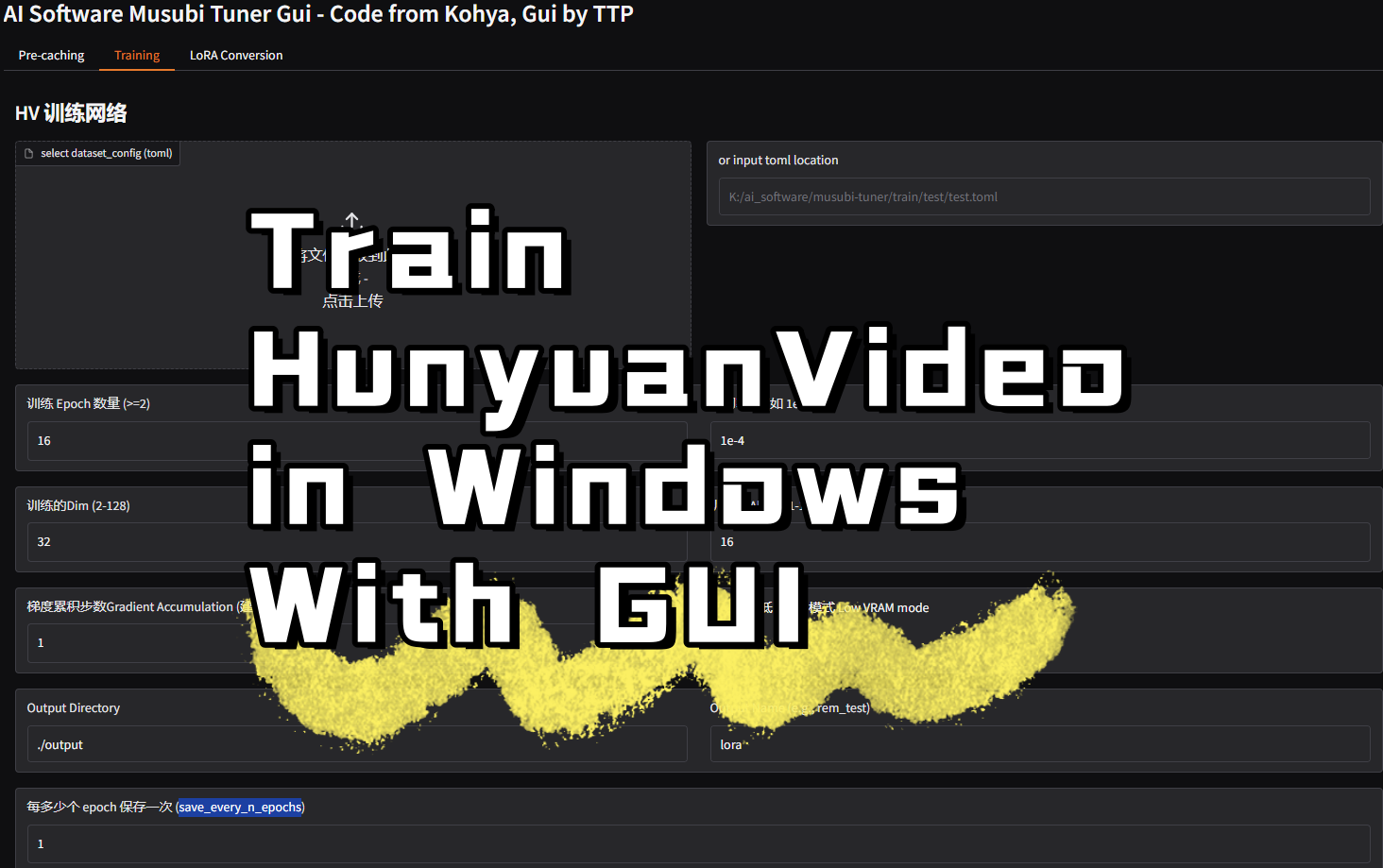

I also made a GUI for it, so you can easily train it.

To get started, you only need to build the training .toml file in Kohya’s format!

Setup Instructions:

Download the HunyuanVideo CKPTs folder and arrange it in the ./models folder as instructed in the following link:

HunyuanVideo CKPTs Setup Guide

Ensure the ckpts folder is inside the models folder.

Or download the complete ckpts package, which I zipped, from here: https://drive.google.com/file/d/1DvpMuQAiVWHCjgpV5c-RuXMCH_aOsskq/view?usp=drive_link

Running the Training:

Start the training:

Double-click the train_run_点我运行训练.bat file. This will start the Gradio interface.

Visit the address shown in the command prompt window to access the GUI.

How to Use the GUI:

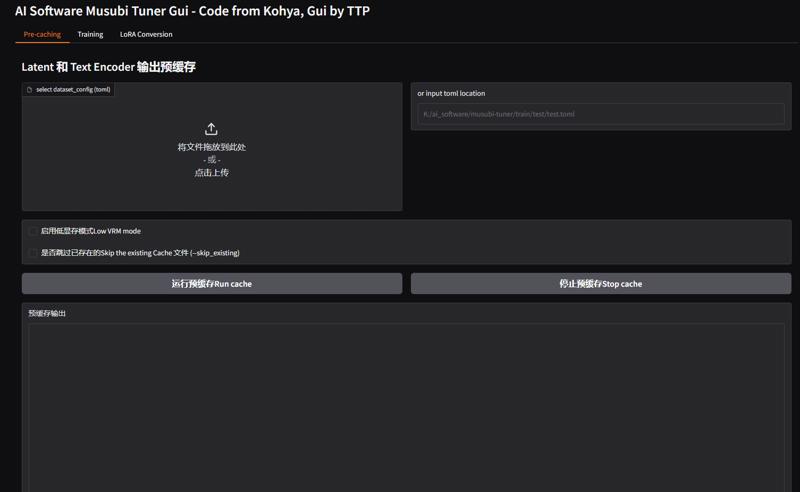

Cache:

Select your training .toml file or input the file location directly.

Click on Run Cache. It will automatically build the cache for you.

You can choose Skip Existing Cache if you’ve done part of the job before.

This only needs to be done once per dataset configuration. If you change the dataset, you will need to run the cache again.

Training:

Select the .toml file for your dataset.

Adjust the training parameters:

GC (gradient checkpointing) is enabled by default.

AdamW 8-bit is set as the optimizer.

Training parameters:

Epochs: Number of passes over the dataset (e.g., 100 if you have a small dataset).

LR (Learning Rate): Adjust as needed.

DIM (Dimension): Recommended value is 32.

Alpha: Set to half of DIM. If you lower it, increase LR.

Gradient Accumulation: Set as needed, but don’t set it too high.

save_every_n_epochs: Set the frequency of saving weights. Don’t save every epoch if you’re using a small dataset, as it can fill up your drive quickly.

Resume from trained weights: tick the box labeled 从已有权重继续训练 (--network_weights) / Continue Training from Existing Weights (--network_weights) and enter the path of the LoRA file you want to use!

Please note: Kohya’s script has changed how repeats are set in the dataset toml. Use num_repeats = X to define the repeat count.
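To make the DIM / Alpha / Gradient Accumulation advice above more concrete, here is a small sketch of the arithmetic behind it. It assumes the common LoRA formulation where the update is multiplied by alpha / dim, and it ignores resolution bucketing when counting steps, so treat the numbers as rough estimates rather than what the trainer will report. The function names are made up for illustration.

```python
import math


def lora_scale(alpha, dim):
    """Multiplier on the LoRA update in the common formulation: alpha / dim."""
    return alpha / dim


def optimizer_steps(num_items, num_repeats, epochs, batch_size=1, grad_accum=1):
    """Rough number of optimizer updates, ignoring resolution bucketing."""
    batches_per_epoch = math.ceil(num_items * num_repeats / batch_size)
    return math.ceil(batches_per_epoch / grad_accum) * epochs


# DIM 32 with Alpha 16 (half of DIM) gives a scale of 0.5.
print(lora_scale(16, 32))  # 0.5
# Lowering Alpha to 8 halves the scale, which is why a higher LR may be needed.
print(lora_scale(8, 32))   # 0.25
# 20 images, num_repeats = 1, 100 epochs, gradient accumulation 4.
print(optimizer_steps(20, 1, 100, batch_size=1, grad_accum=4))  # 500
```

A high Gradient Accumulation value shrinks the number of optimizer updates for the same number of epochs, which is one reason not to set it too high on a small dataset.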

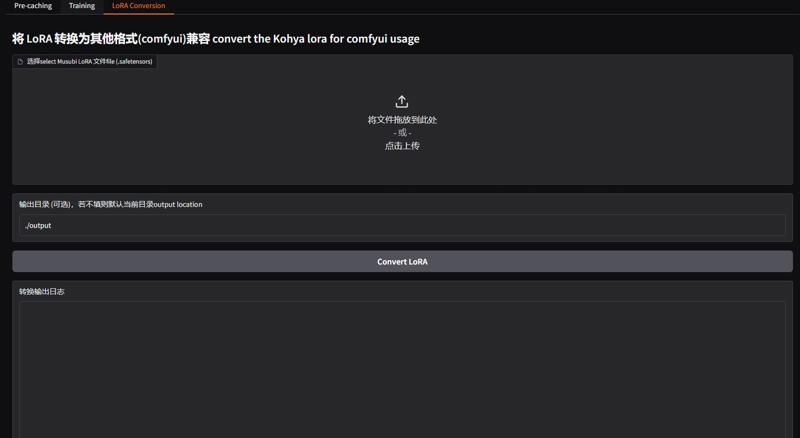

Convert for ComfyUI:

To use your model in ComfyUI, you need to convert your .lora file to the ComfyUI-compatible format.

Simply select the file and click Convert. The default directory for output is ./output.

Build toml file:

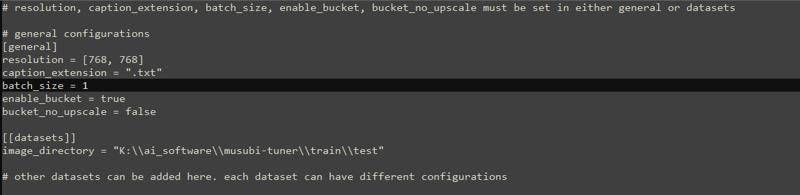

For an example, please refer to the toml file I built inside my package: ./train/test/test.toml

Change the training dataset directory in it as you wish, save it, and use it for training!

Your data folder should still include both the images and their captions in .txt format.
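Before running the cache, it can help to confirm that every image really has a caption next to it. The sketch below is not part of the GUI. It assumes captions share the image's file name with a .txt extension, and the list of image extensions is my own guess, so adjust both to your data.

```python
import tempfile
from pathlib import Path

# Assumed set of image extensions; extend it if your dataset uses others.
IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}


def images_missing_captions(folder, caption_ext=".txt"):
    """Return names of images in `folder` that have no same-named caption file."""
    folder = Path(folder)
    return sorted(
        p.name
        for p in folder.iterdir()
        if p.suffix.lower() in IMAGE_EXTS
        and not p.with_suffix(caption_ext).exists()
    )


# Demo on a throwaway folder: cat.png has a caption, dog.jpg does not.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "cat.png").touch()
    (root / "cat.txt").write_text("a cat", encoding="utf-8")
    (root / "dog.jpg").touch()
    print(images_missing_captions(root))  # ['dog.jpg']
```

To check a real dataset, call images_missing_captions with the same folder you set in your toml file. An empty list means every image has a caption.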

Please read Kohya's documentation for more details on building the toml file:

https://github.com/kohya-ss/musubi-tuner/blob/main/dataset/dataset_config.md
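If you prefer to generate the toml file instead of editing the example by hand, here is a minimal sketch. The key names (resolution, caption_extension, batch_size, enable_bucket, image_directory, cache_directory, num_repeats) follow my reading of Kohya's dataset config documentation linked above, so please verify them against that page. The resolution and the paths are placeholders, and build_dataset_toml is a made-up helper, not something from the package.

```python
def build_dataset_toml(image_dir, cache_dir, resolution=(960, 544),
                       num_repeats=1, batch_size=1):
    """Return the text of a simple image-dataset toml (key names assumed)."""
    # Forward slashes avoid backslash escape problems in TOML basic strings.
    image_dir = str(image_dir).replace("\\", "/")
    cache_dir = str(cache_dir).replace("\\", "/")
    return (
        "[general]\n"
        f"resolution = [{resolution[0]}, {resolution[1]}]\n"
        'caption_extension = ".txt"\n'
        f"batch_size = {batch_size}\n"
        "enable_bucket = true\n"
        "\n"
        "[[datasets]]\n"
        f'image_directory = "{image_dir}"\n'
        f'cache_directory = "{cache_dir}"\n'
        f"num_repeats = {num_repeats}\n"
    )


# Placeholder paths; replace them with your own dataset and cache folders.
text = build_dataset_toml("D:\\train\\my_set", "D:\\train\\my_set\\cache",
                          num_repeats=5)
print(text)
```

Save the printed text as a .toml file and select it in the Cache and Training tabs. Video datasets need additional keys that this sketch does not cover.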

Q&A or Issue:

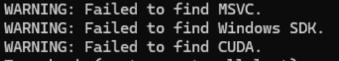

If you get an error like the one in the screenshot when you build the latent cache:

Please try installing CUDA from here: https://developer.nvidia.com/cuda-12-4-0-download-archive. I built the package against 12.4, and I recommend upgrading to 12.4.

For MSVC, install from here:
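To see which CUDA toolkit is currently installed, you can read the release number that nvcc prints. This is only a sketch: it assumes nvcc is on your PATH and that its output contains a line like "Cuda compilation tools, release 12.4, V12.4.99". It checks the toolkit only, not your GPU driver, and both function names are made up for illustration.

```python
import re
import shutil
import subprocess


def parse_nvcc_release(output):
    """Extract (major, minor) from `nvcc --version` text, or None if absent."""
    match = re.search(r"release\s+(\d+)\.(\d+)", output)
    return (int(match.group(1)), int(match.group(2))) if match else None


def installed_cuda_release():
    """Run nvcc if it is on PATH and return its release, else None."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    try:
        result = subprocess.run([nvcc, "--version"], capture_output=True,
                                text=True, timeout=30)
    except (OSError, subprocess.SubprocessError):
        return None
    return parse_nvcc_release(result.stdout)


sample = "Cuda compilation tools, release 12.4, V12.4.99"
print(parse_nvcc_release(sample))  # (12, 4)
print(installed_cuda_release())    # None if nvcc was not found
```

If the second line prints None or a release other than (12, 4), installing CUDA 12.4 from the link above is the first thing to try.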

https://visualstudio.microsoft.com/downloads/

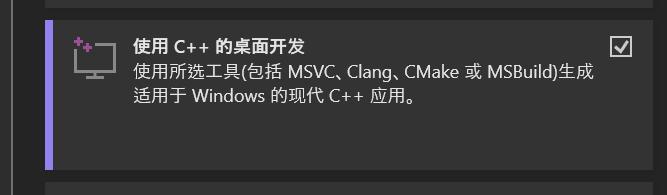

Select the download shown in the screenshot and install it as follows:

For the C++ desktop application installation pack, put it on a drive that has enough free space if your C: drive is small!

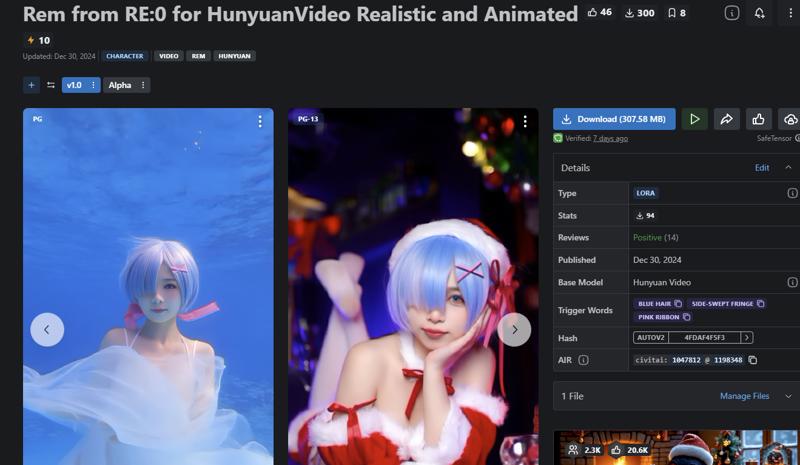

My LoRA Models:

https://civitai.com/models/1047812

and

https://civitai.com/models/1075765/super-realistic-ahegao-for-hunyuan-video

If you think it's good, remember to support me!

Here are my contact details:

- QQ group: 571587838

- Bilibili: [homepage](https://space.bilibili.com/23462279)

- Civitai: [ttplanet](https://civitai.com/user/ttplanet)

- WeChat: tangtuanzhuzhu

- Discord: ttplanet

Contact me if you want a customized LoRA!