I did not post any Daily BancinXL VS these days because I have been busy with the holidays, of course, but also with fighting Kohya :D

My second step in porting BancinXL to Illustrious was aborted a few times (with a few wasted hours of GPU time), but I am getting there :D

First, I finally built my dataset thanks to Dynamic Wildcards:

PonyScores7, masterpiece, best quality, lots of details, 1girl, 20 years old, beautiful face, perfect eyes, (upper body, portrait, photoshoot):1.2, medium breast, seductive, {__cf-*/prompt-costume__}, (grey background, simple background):1.5

This allowed me to generate ~300 pictures, among which I selected 200 (mostly by removing the ones with bad hands). Why 200? Because the pictures were starting to get repetitive, and for a LoRA (a small "patch-like" model) that seems to be the agreed-upon upper limit.

Starting from there, I did some testing with Kohya and have settled on these parameters for now:

LoRA dimension 64 (with network alpha at 64 too. I don't think it's the perfect value, but a lot of people tend to use the LoRA dimension as alpha. I don't see a link between those two numbers from their definitions, but I wanted to test it)
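On the dimension/alpha question, a small sketch may help. It assumes the common LoRA convention (which, as far as I know, Kohya follows too) where the learned update is multiplied by alpha / dimension before being added to the base weights. The function name `lora_scale` is made up for illustration.

```python
def lora_scale(network_alpha: float, network_dim: int) -> float:
    """Multiplier applied to the LoRA update, assuming scale = alpha / dim."""
    if network_dim <= 0:
        raise ValueError("network_dim must be positive")
    return network_alpha / network_dim


# Compare a few alpha choices for a dimension-64 LoRA.
for alpha in (64, 32, 1):
    print(f"dim=64, alpha={alpha}: scale={lora_scale(alpha, 64)}")
```

Under that assumption, alpha equal to the dimension simply means a scale of 1.0 (the update is applied as learned), while a smaller alpha damps the update and usually calls for a higher learning rate to compensate.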

This time, I trained both the Unet and CLIP. Some tutorials mention that training the text encoders with SDXL messes things up, and for style LoRAs I had so far avoided CLIP training by using the special flag "--network_train_unet_only"

I used adafactor for both the LR scheduler and the optimizer:

The LR, or Learning Rate, controls how much the LoRA weights are updated at each step. A lot of tutorials use Constant or Cosine, but adafactor allows updating the LR after each step to try to converge faster. It was worth a try, since I had been using Constant, following a tutorial I read earlier.

The Optimizer is the method used to update the LoRA. I had been using adafactor, from the same tutorial, since it is less memory-heavy than AdamW, but with a few extra parameters to skip the LR update. This time, I have unleashed its full potential.

With 200 pictures with a lot of variation, I wanted to make sure every epoch counted. Instead of the traditional "300-400" steps per epoch, I wanted around 1000 steps. Here is the usual computation:

Number of steps per epoch = number of pictures * number of repeats / batch size

batch size: how many images are processed at each step. To speed up training, some people use a batch size of 2 or 4. I was not in a hurry and, from my understanding, more pictures in a batch give worse results => I went with 1

number of repeats: how many times the dataset is parsed before moving on to the next epoch. Since I wanted 1000 steps per epoch and was using a batch size of 1, with 200 pictures => I used a repeat of 5
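The steps-per-epoch formula can be sketched in a few lines of Python. The helper names `steps_per_epoch` and `repeats_for_target` are made up for illustration, and rounding partial batches up is an assumption about how a trainer would count them.

```python
import math


def steps_per_epoch(num_images: int, repeats: int, batch_size: int) -> int:
    """Steps in one epoch; a partial last batch is assumed to count as a step."""
    return math.ceil(num_images * repeats / batch_size)


def repeats_for_target(target_steps: int, num_images: int, batch_size: int) -> int:
    """Nearest whole number of repeats that gets close to the target step count."""
    return max(1, round(target_steps * batch_size / num_images))


# Values from this post: 200 pictures, batch size 1, ~1000 steps wanted.
repeats = repeats_for_target(1000, num_images=200, batch_size=1)
print(repeats)                           # 5
print(steps_per_epoch(200, repeats, 1))  # 1000
```

With a batch size of 2 or 4, the same 1000 steps per epoch would need 10 or 20 repeats of the 200 pictures.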

How many epochs (total steps): With 1000 steps per epoch, I would very quickly reach the "recommended" sweet spot of 3000 steps. But I wanted to make sure to train enough (since I launched it and went to sleep afterwards, I was not going to check and restart it if it had not trained enough). So I selected 10 epochs. Truth is, after 5 epochs, it already felt burned...
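To put numbers on that, here is a tiny sketch of the total step count and of the epoch at which the 3000-step mark is crossed. The function names are hypothetical, and 1000 steps per epoch is taken from the settings above.

```python
import math


def total_steps(steps_in_epoch: int, epochs: int) -> int:
    """Total optimizer steps over the whole run."""
    return steps_in_epoch * epochs


def epoch_reaching(target_steps: int, steps_in_epoch: int) -> int:
    """First epoch (1-based) whose end is at or past the target step count."""
    return math.ceil(target_steps / steps_in_epoch)


print(total_steps(1000, 10))       # 10000 steps for the 10 epochs launched
print(epoch_reaching(3000, 1000))  # 3: the 3000-step mark is hit at the end of epoch 3
print(total_steps(1000, 5))        # 5000 steps by the time it felt burned
```

So the run was set for a bit more than three times the "recommended" total, which fits with the later epochs not being usable.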

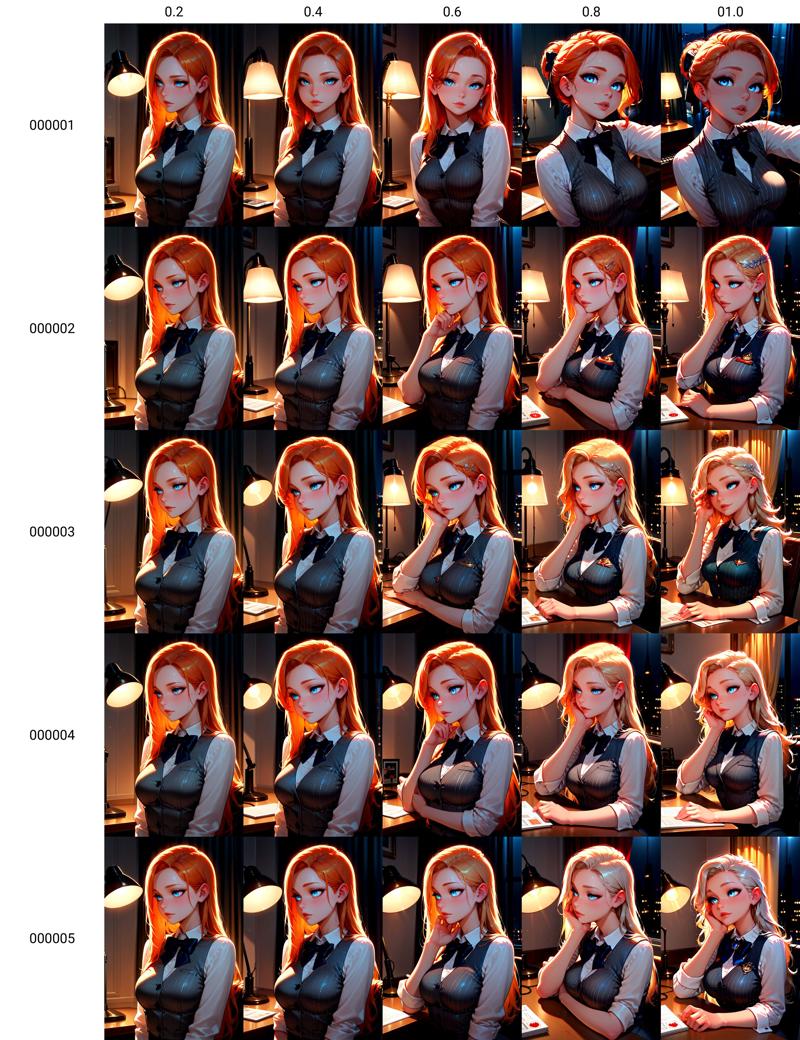

Now, I have those 5 LoRAs (the ones after are not worth looking at) and I need to see which is the best...

Looking more closely, the latest epoch at the highest weight gives a clear "BancinXL" style to the model (I used Anban V2 as base, since the goal is to merge the LoRA into it), but the fidelity to the prompt goes out the window, with the character going from "ginger" to "blonde" /o/

I may try to merge epoch 3 or 4 at strength 0.6 with epoch 4 or 5 at strength 1.0 and see if I can get something out of this mess; otherwise, I'll have to try to directly fine-tune AnBan with this dataset :D

Stay tuned! :D

NB: interesting result in the first epoch at full strength, probably due to the dataset being only portraits of 1girl facing the viewer ^^;