https://www.patreon.com/posts/sticky-negatives-119624467 - for the video with examples (free, as is all content on the Patreon; just f--- YouTube, it's unserviceably bad).

QUICK GUIDE:

How to Implement:

Step 1: Copy your positive prompt into the negative prompt field.

Step 2: Add explicit undesirable terms to the negative prompt (e.g., "awful", "bad hands", "blurry", "distorted", "bad", "artifacting", etc.).

Example:

Positive Prompt: "A beautiful woman standing in a garden, detailed, realistic."

Negative Prompt: "A beautiful woman standing in a garden, detailed, realistic, awful, distorted, bad hands."
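The two steps above can be sketched as a small helper. This is only an illustration: the function name `build_sticky_negative` is made up here and is not part of any UI or library, and it assumes prompts are plain comma-separated strings.

```python
def build_sticky_negative(positive, undesirable):
    """Return the positive prompt followed by explicit undesirable terms.

    `positive` is the full positive prompt string; `undesirable` is a list
    of extra terms. Hypothetical helper, for illustration only.
    """
    # Drop a trailing period so the extra terms join cleanly with commas.
    base = positive.strip().rstrip(".").rstrip()
    extras = [term.strip() for term in undesirable if term.strip()]
    if not extras:
        return base + "."
    return base + ", " + ", ".join(extras) + "."


positive = "A beautiful woman standing in a garden, detailed, realistic."
negative = build_sticky_negative(positive, ["awful", "distorted", "bad hands"])
print(negative)
```

The printed string is the same negative prompt as in the example above; paste it into the negative prompt field of whatever tool you use.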

Key Settings:

Use high CFG (start around 20 for testing).

Test at your desired resolution, even if that is higher than usual.

Stick to your preferred sampler and step count (e.g., 20–30 steps).

What to Expect:

Cleaner, higher-quality images (or songs, in the case of Suno).

Improved alignment with your positive prompt’s intent.

Works best when your positive and negative prompts "track" each other.

Extra Tips:

Combine with models merged using the 50% rule for even better results. High CFG is beneficial when a merge has smoothed out a lot of the signals.

Experiment with different negative terms to fine-tune outputs.

Save consistent seeds for A/B testing.
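One way to keep an A/B test honest is to write the run plan down before generating anything, so the two arms differ only in the negative prompt. The sketch below only builds that plan; it does not call any generator. The `Run` class, the `ab_plan` function, and the baseline CFG of 7 are assumptions for illustration, not settings from the video.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Run:
    seed: int
    cfg: float
    steps: int
    negative: str


def ab_plan(seeds, cfgs, negatives, steps=25):
    """Build one Run per (seed, cfg, negative) combination."""
    return [Run(seed, cfg, steps, neg)
            for seed, cfg, neg in product(seeds, cfgs, negatives)]


positive = "A beautiful woman standing in a garden, detailed, realistic"
arms = [
    "awful, distorted, bad hands",               # A: conventional negative
    positive + ", awful, distorted, bad hands",  # B: sticky negative
]
# cfg 7.0 is an assumed baseline; 20.0 is the suggested starting point above.
plan = ab_plan(seeds=[1, 2, 3], cfgs=[7.0, 20.0], negatives=arms)
for run in plan:
    print(run)
```

Feed each `Run` to your own generation tool with the same positive prompt, sampler, and resolution, then compare outputs that share a seed and CFG.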

####

Full summary below created by https://chatgpt.com/share/677f86e0-befc-8001-b547-44f4f38c1060

####

Overview of Techniques for Model Merging and Negative Conditioning in Diffusion Models

This detailed breakdown provides insights into model merging, the 50% rule, and negative conditioning in diffusion models. It clarifies how these methods enhance image and audio generation, reduce computational requirements, and improve output quality.

1. Introduction and Technical Issues

Initial Issue: The speaker began by addressing a technical crash caused by running out of VRAM during streaming and recording.

Revised Setup: Reduced resolution to prevent further issues.

2. Potential Impact

Significance of the Method:

Enables high-CFG (Classifier-Free Guidance) generation without significant artifacts, which benefits both image and music generation.

3. Key Concepts: Conditioning

Positive and Negative Prompts:

Positive Prompt: Provides directions for the desired outcome.

Negative Prompt: Traditionally thought to pull the process away from unwanted characteristics (e.g., "disgusting," "bad hands").

Misunderstanding Negative Conditioning: It doesn’t merely counteract the positive prompt but influences the embedding space in complex ways.

Embeddings and Their Role:

Embeddings guide the iterative process of transforming initial noise into the final image.

Positive embeddings drive the model toward desired characteristics.

Negative embeddings act as repulsive forces, which need to align better with the positive prompt for optimal results.
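For reference, this is the usual classifier-free guidance combination as implemented in common Stable Diffusion pipelines, where the negative prompt embedding takes the place of the unconditional (empty) embedding. The symbols are notation chosen here, not taken from the video: $\epsilon_\theta$ is the model's noise prediction, $x_t$ the noisy latent at step $t$, $c_{\text{pos}}$ and $c_{\text{neg}}$ the positive and negative embeddings, and $s$ the CFG scale. Whether other systems such as Suno use exactly this form is not something the source confirms.

```latex
\hat{\epsilon}_t
  = \epsilon_\theta(x_t, c_{\text{neg}})
  + s \,\bigl( \epsilon_\theta(x_t, c_{\text{pos}}) - \epsilon_\theta(x_t, c_{\text{neg}}) \bigr)
```

The step is driven by the difference between the two predictions, multiplied by $s$. When $c_{\text{neg}}$ shares most of its content with $c_{\text{pos}}$, that difference should mostly reflect the extra undesirable terms, which is one plausible reading of why the positive and negative prompts need to align.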

4. Model Merging and the 50% Rule

Model Merging Technique:

Uses the 50% rule to blend models evenly without bias.

This process preserves positive signals and reduces overfitting.

Amplifying CFG highlights differences in signals, improving the model’s ability to generalize and adhere to user preferences.

Advantages:

Results in models that produce images with strong, user-preferred signals.

Allows for higher quality generation, even with merged models.

Reduces the complexity of merging while maintaining signal integrity.
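The summary does not spell out the 50% rule beyond "blend models evenly", so the sketch below assumes it means a plain 50/50 weighted average of two checkpoints with identical parameter names and shapes. Checkpoints are represented as dicts of float lists to keep the example dependency-free; the name `merge_even` is hypothetical, and real merges would operate on tensors.

```python
def merge_even(state_a, state_b, alpha=0.5):
    """Linearly blend two checkpoints; alpha=0.5 weights both equally."""
    if state_a.keys() != state_b.keys():
        raise ValueError("checkpoints must share the same parameter names")
    merged = {}
    for name, weights_a in state_a.items():
        weights_b = state_b[name]
        if len(weights_a) != len(weights_b):
            raise ValueError("shape mismatch for " + name)
        # Element-wise weighted average of the two parameter vectors.
        merged[name] = [(1.0 - alpha) * a + alpha * b
                        for a, b in zip(weights_a, weights_b)]
    return merged


model_a = {"layer.weight": [0.0, 2.0], "layer.bias": [1.0]}
model_b = {"layer.weight": [1.0, 4.0], "layer.bias": [3.0]}
merged = merge_even(model_a, model_b)
print(merged)
```

With `alpha=0.5` neither parent is favored, which matches the "without bias" description; any other value would tilt the merge toward one model.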

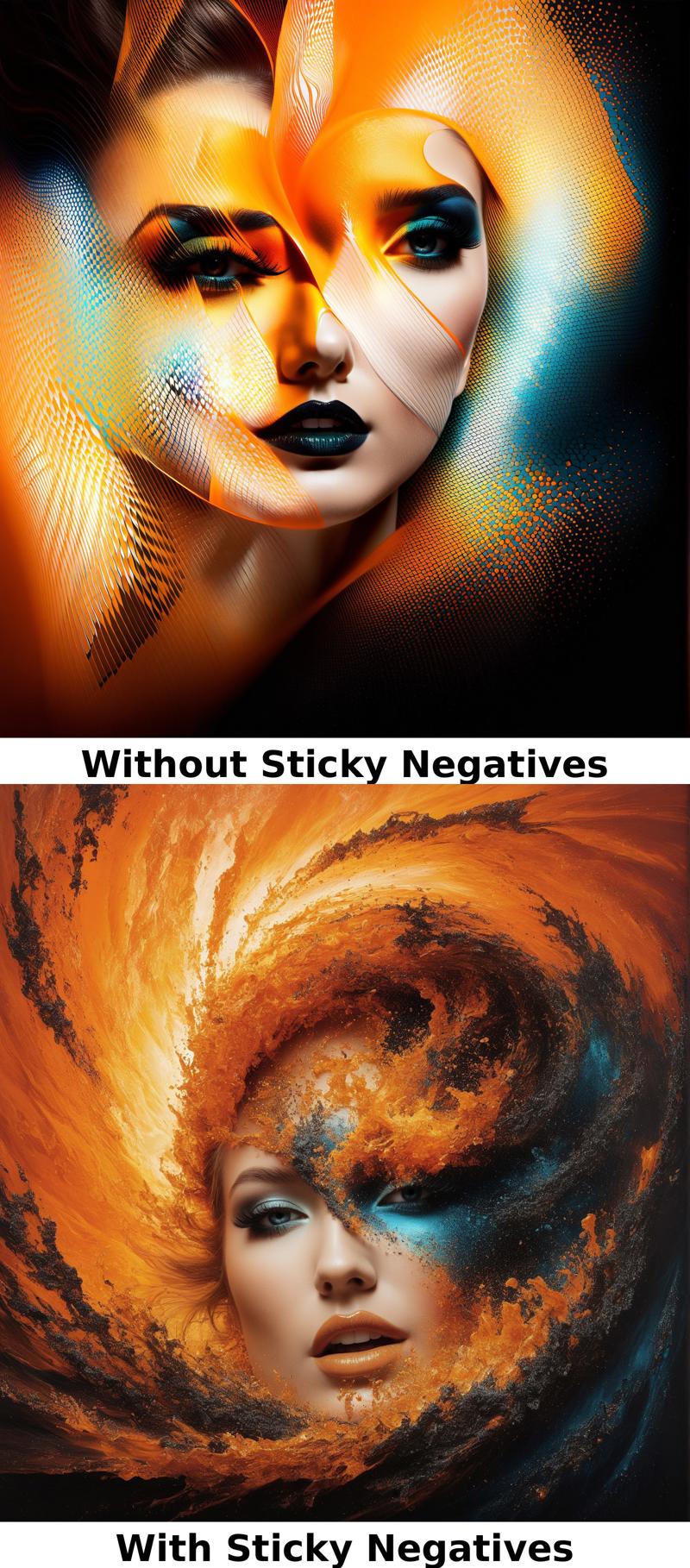

5. Negative Conditioning: Improved Approach

Traditional Issue:

Negative prompts often use unrelated embeddings that don’t adapt as the image generation progresses, leading to suboptimal results.

Proposed Solution:

Align negative conditioning with the positive prompt by:

Including the positive prompt within the negative prompt.

Adding specific undesirable characteristics to the negative prompt (e.g., "awful," "bad hands").

This approach creates a “tracked negative conditioning” that adjusts dynamically with the recursive generation process, effectively improving results.
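A toy calculation can give some intuition for why a tracked negative might tolerate high CFG. It uses the standard guidance combination, negative + scale * (positive - negative), on made-up two-dimensional vectors. The numbers are invented for illustration and are not model outputs or measurements; the first axis stands for shared content and the second for a "defect" direction.

```python
import math


def cfg_combine(pos, neg, scale):
    """Element-wise guidance: neg + scale * (pos - neg)."""
    return [n + scale * (p - n) for p, n in zip(pos, neg)]


def norm(vec):
    """Euclidean length of a vector."""
    return math.sqrt(sum(x * x for x in vec))


pos = [1.0, 0.0]             # toy prediction for the positive prompt
tracked_neg = [1.0, 0.25]    # same content plus a small defect component
unrelated_neg = [-0.5, 0.5]  # a negative sharing little with the positive

scale = 20.0
tracked = cfg_combine(pos, tracked_neg, scale)
unrelated = cfg_combine(pos, unrelated_neg, scale)
print("tracked:  ", tracked, norm(tracked))
print("unrelated:", unrelated, norm(unrelated))
```

In this toy setup the tracked negative leaves the shared content axis untouched and only pushes away from the defect axis, while the unrelated negative inflates both axes at the same scale. Real models are far less tidy, so treat this as a picture of the idea rather than evidence for it.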

Analogy:

Imagine being shown how to progress step by step to a desired result while also being warned of a potential bad version of that same result. Compare this to merely being warned not to scribble all over the paper at random. The directed dual guidance leads to a more accurate and refined output.

6. Practical Demonstrations and Observations

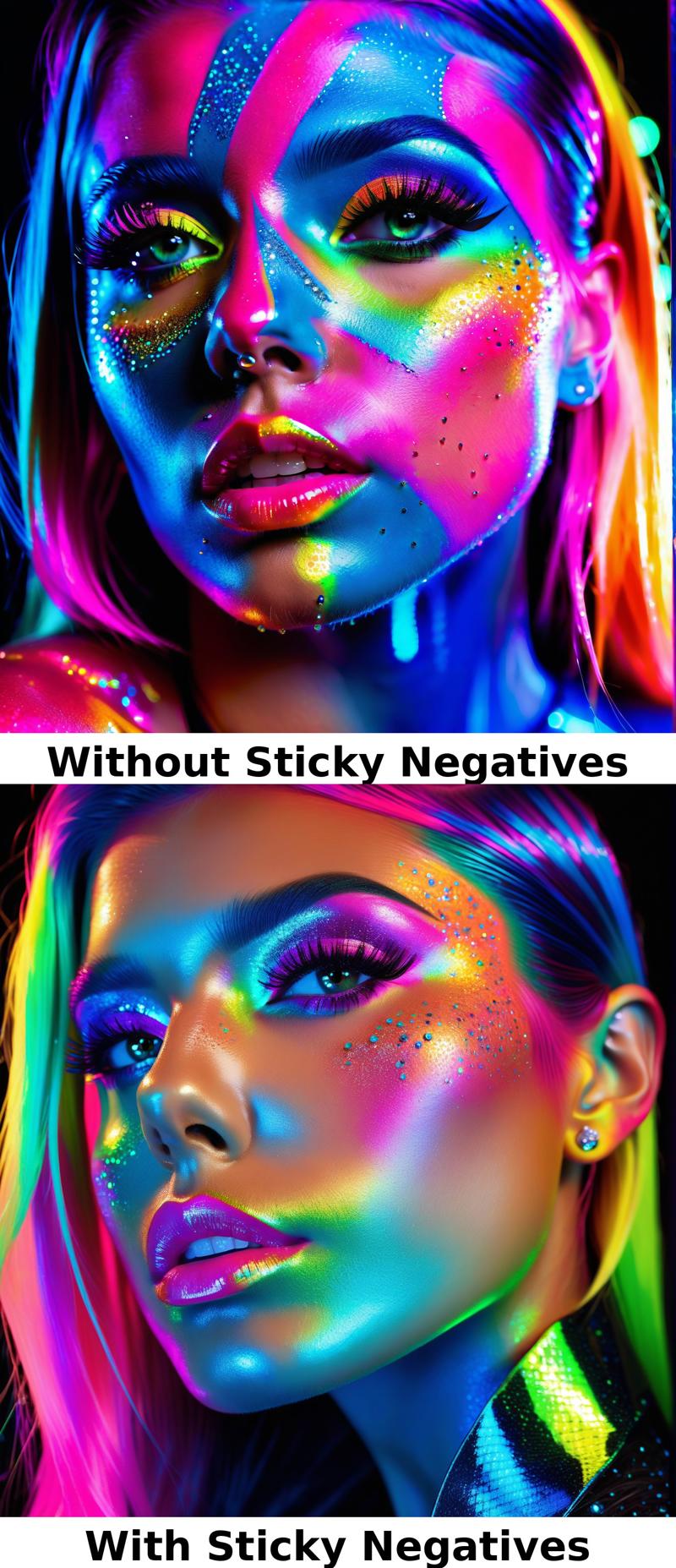

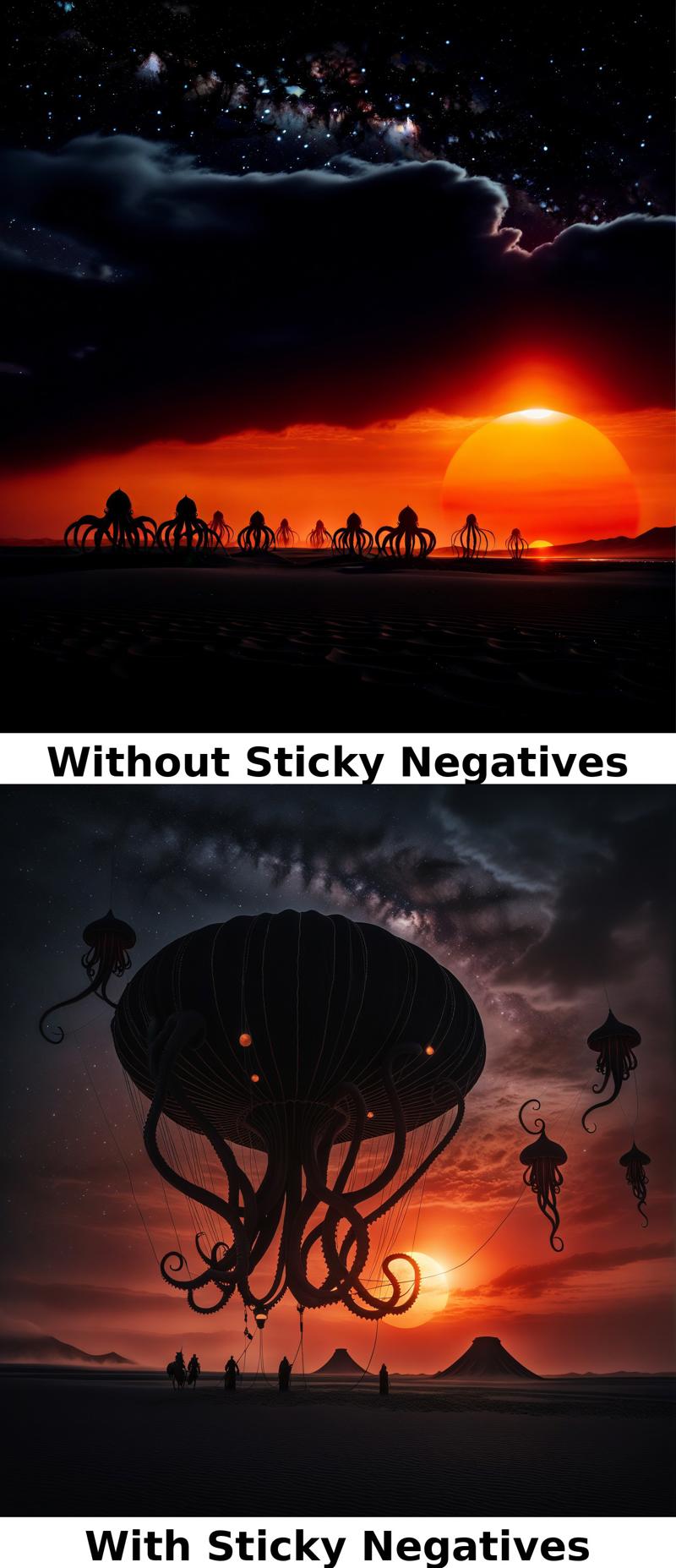

CFG Adjustment: Demonstrated generating high-quality images at a CFG of 30, even at arbitrary resolutions beyond standard model specifications.

Experiment Outcomes:

Significant improvement in artifact reduction and detail accuracy (e.g., facial features, text rendering).

Models performed well at higher resolutions, showing robust generalization capabilities.

Negative Conditioning Validation: Including positive prompts in the negative conditioning consistently improved results, reducing distortion and enhancing overall quality.

7. Broader Implications

Impact on Compute Efficiency:

Combining these techniques reduces the required steps for generation, lowering computational costs while maintaining quality.

Suitable for devices with limited processing power, enabling high-quality outputs on less capable hardware.

Applications in Audio Generation:

While primarily demonstrated in image generation, these techniques are likely to bring significant uplifts in audio generation, improving compositional quality and reducing artifacts.

8. Key Takeaways

Model Merging with the 50% Rule:

Ensures preservation of strong signals while blending models.

Is lazy: an even blend keeps the merge itself simple.

Improves generalization and output quality.

Negative Conditioning with Positive Prompts:

Tracks the recursive process and aligns negative conditioning to the desired direction.

Substantially reduces artifacts and enhances adherence to the prompt.

Practical Insights:

Techniques can be adopted in image, music, and other generative models to reduce compute requirements and improve output fidelity.

Encourages integration of these methods into commercial platforms for broader adoption.

9. Closing Remarks

The speaker emphasized the simplicity and effectiveness of these techniques, encouraging their implementation in diffusion model UIs and commercial platforms. Further exploration into applications for audio generation was suggested, with an invitation for feedback from tool developers and users alike.