If you find our articles informative, please follow me to receive updates. It would be even better if you could also follow our ko-fi, where there are many more articles and tutorials that I believe would be very beneficial for you!

如果你觉得我们的文章有料,请关注我获得更新通知,

如果能同时关注我们的 ko-fi 就更好了,

那里有多得多的文章和教程! 相信能使您获益良多.

For collaboration and article reprint inquiries, please send an email to [email protected]

合作和文章转载 请发送邮件至 [email protected]

By: ash0080

This is an introduction and usage tips (specifically for the text2img part) about my new LoRa model called Topology.

Finding treasures in the trash!

You may have noticed that I've already released the V3 version of my model, which means I've done a fair bit of trial and error to get it just right. But the answer is a resounding yes! I managed to teach LoRa the Topology in the first version of my small dataset experiment, even though that version was pretty blurry. It looked something like this 😂

or this😧

Despite the chaotic visuals, I noticed that the overall topology in those images was almost always accurate. This got me thinking that maybe, just maybe, AI really is capable of learning topology.

After about two weeks of constant trial and error, I finally managed to get a somewhat usable version, which looks something like this:

Usage Tips

Basic Usage

There are a couple of things you need to keep in mind when using txt2img:

Choosing the right sampler is crucial for line quality. It's best to generate an XYZ plot to confirm this. I usually use Eluer and UniPC. Eluer tends to produce smooth, fine lines, while UniPC can produce thicker lines that are sometimes clearer, but they may not be as smooth.

The biggest breakthrough in V3 is the ability to produce HiRes images. Previous versions were almost unusable and would turn the wireframe into a mess. But this version not only makes the lines clearer, it even has a bit of a repair effect. You can also use ADetailer to fix facial features. This works well when drawing full-body models with faces that are too small and lines that are too dense. Choose Anime6B for the HiRes Upscaler and use a Denoising value of 0.33. However, if the wireframe is already messy in low-resolution images, don't expect HiRes to fix it.

Gray and Color Models

If you need to draw a grayscale model, add "monochrome" to the positive prompts.

Conversely, if you need to draw a color model, add "monochrome" to the negative prompts.

Sometimes, even if you add "monochrome" to the negative prompts, the model may still be grayscale. Try adding some color modifiers to your characters. This LoRa is capable of drawing both grayscale and color models.

Choosing the Base Model

I've tried 2D models and photo-realistic models, and some of them can be drawn. However, I've been too busy lately to try too many models. Basically, This LoRa is more demanding of the style than the model type, so you can try other models based on this principle. I welcome you to share your successful cases as well. However, when trying other models, you may need to adjust the LoRa weight. For this reason, I haven't normalized it (now you need to use 0.6 but not 1.0).

Another principle to keep in mind is that this LoRa is not very friendly to models that use noise offset. In fact, high-contrast shadows can make it difficult to draw the wireframe correctly, which can further affect the topology of other parts.

Mixing with other LoRa models

Here are some examples I've tried. Generally, you need to lower the weight of other LoRa models to around 0.4 or lower. Otherwise, the wireframe will be diluted or even invisible due to the style of other LoRa models. However, as you can see, this model can be mixed with other LoRa models to some extent.

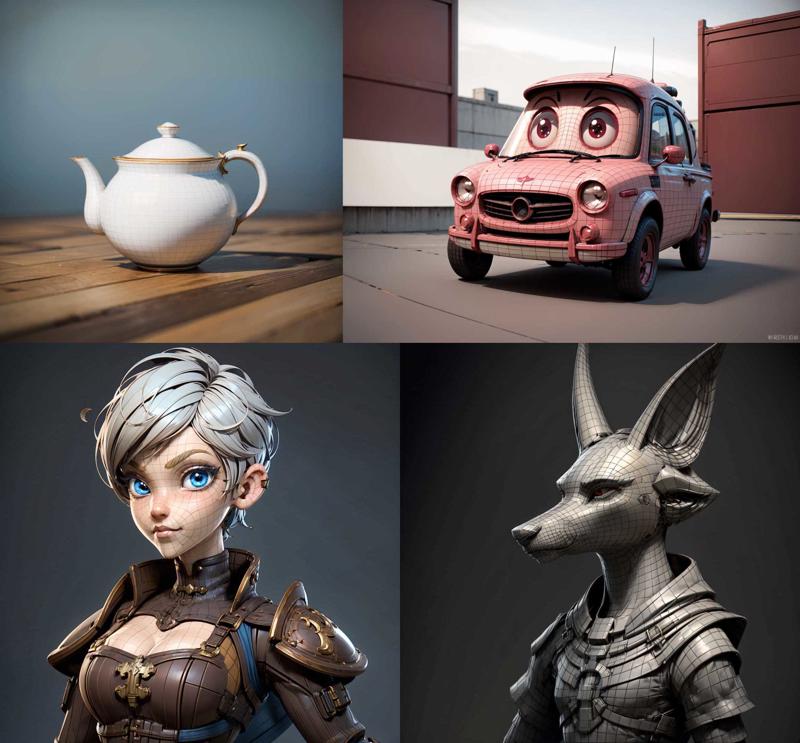

Drawing Things That Aren't in the Dataset

The original idea behind this LoRa was as a game development art tool and a topology reference generation tool. Therefore, my training dataset consisted entirely of 3D characters. However, during testing, it was able to draw these:

So I think it has indeed mastered 'topology'

More Tips About Prompts

Positive Prompts:

Avoid using "masterpiece" as it can affect the generated content and tends to make the image more complex. Topology prefers simple and clear 3D structures. You can use "best quality" instead.

Avoid using words that generate stripes, spots, or patterns such as "freckles", "fishnets", "lace" as they can affect the wireframe. However, this is not absolute, you can try and delete them if they don't work.

A more general word that can solve some of these issues is "3D model".

Negative Prompts:

You can use something like "easynegative" but try to use only one.

"badhand" liked can be used.

If you are drawing something with spots or surface textures, like a lizard, you can add words like "scales" to the negative words. It's also recommended to add "monochrome" to positive words to increase the success rate.

Animals like tigers or cats, which can have stripes or spots, may also encounter similar issues. You can add corresponding positive or negative words based on your situation.

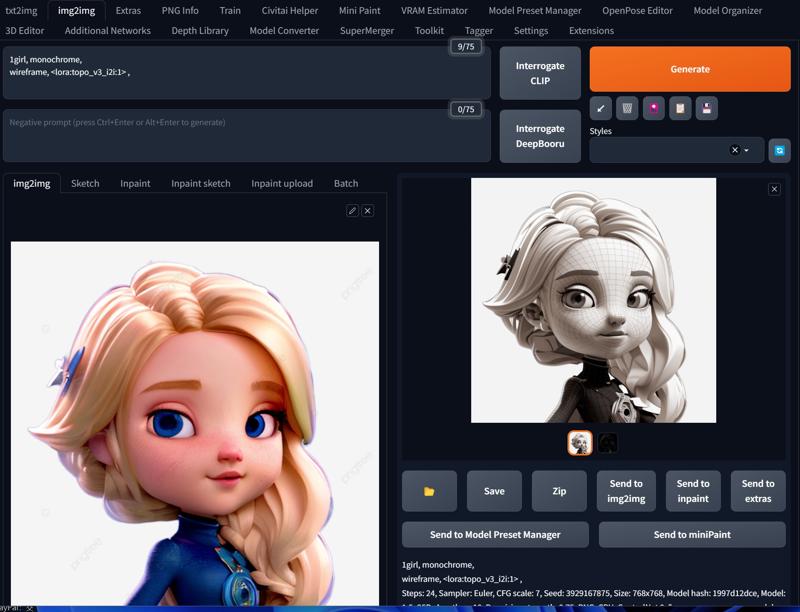

About IMG2IMG

Yes, Topology has two models: the txt2img model and the img2img model. Why two models? Because the generated image quality of the model is better, but the drawing content is limited by the dataset.

The img2img model has lower image quality, but with ControlNet, it can add topology to existing images, like this. Often, this usage is closer to the real game development process.

However, this process is more complicated than txt2img. I will write a separate article to introduce it.