I managed to install SageAttention (1.0.6 and 2.0.0) on Windows, use it with ComfyUI portable, and run some quick speed comparisons with it. I did not use or test blepping's BlehSageAttentionSampler node; instead, I added ComfyUI's `--use-sage-attention` CLI flag to run_nvidia_gpu.bat.

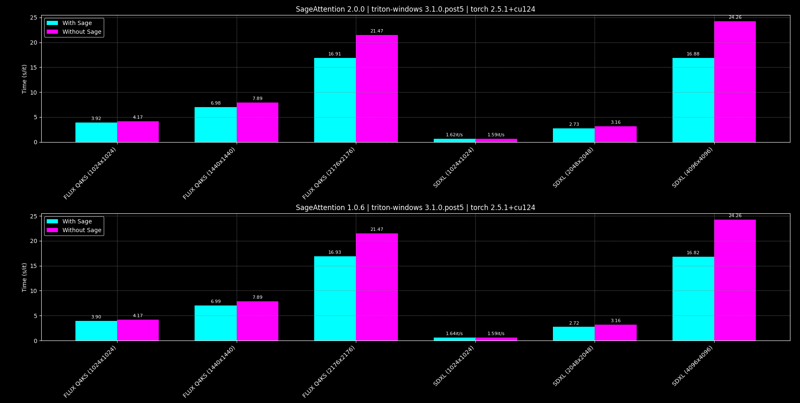

SageAttention increases inference speed with no noticeable quality loss, and the gain is larger at higher resolutions/upscales. For me, 1.0.6 and 2.0.0 are basically the same speed, but 1.0.6 gave a more stable speedup for SDXL at 1024x1024. Also, 2.0.0 has to be compiled yourself, which isn't exactly hard, while 1.0.6 is just `pip install sageattention`. I don't know whether you lose any features by choosing 1.0.6 over 2.0.0.

Extra info: the tests were performed on my RTX 3060 12GB. I have Windows 10 (AtlasOS) Build 19045.4291, ComfyUI portable (with Python 3.11.9 + include & libs folders), torch 2.5.1+cu124, CUDA Toolkit 12.4.1 Update 3 and triton-windows v3.1.0.post5 installed, as well as the latest MSVC build tools. For testing, I used each model's example workflow.
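If you want to compare your own environment against the setup above, a small script like the one below can list which relevant packages are installed and at what version. This is a minimal sketch using only the standard library's `importlib.metadata`; the distribution names it checks are assumptions (in particular, triton-windows wheels may register as `triton` or `triton-windows` depending on the release), and the script name used below is hypothetical.

```python
# Sketch: report installed versions of the packages relevant to this setup.
# The distribution names are ASSUMPTIONS; adjust them to match your install.
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_versions(dist_names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in dist_names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # Hypothetical list of distributions to check.
    checks = ["torch", "triton", "triton-windows", "sageattention"]
    for name, version in installed_versions(checks).items():
        print(f"{name}: {version or 'not installed'}")
```

With ComfyUI portable, run it with the embedded interpreter rather than a system Python, e.g. `python_embeded\python.exe check_versions.py` from the portable folder (assuming the default portable layout), since that is the environment ComfyUI actually uses.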

Btw, any CUDA Toolkit 12.x version should work, but according to the SageAttention repo, 12.4+ is needed for fp8 support.
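As a quick sanity check for the 12.4+ fp8 threshold, the sketch below compares a CUDA version string against it. It is an illustration under stated assumptions: it reads `torch.version.cuda` (the CUDA version torch was built against, e.g. "12.4" for a cu124 build), which is not necessarily the same as the CUDA Toolkit you compile SageAttention with (`nvcc --version` reports that one). The helper names are hypothetical.

```python
# Sketch: check a CUDA version string against the 12.4+ fp8 threshold
# mentioned in the SageAttention repo. Helper names are hypothetical.
from typing import Optional, Tuple

FP8_MIN_CUDA: Tuple[int, int] = (12, 4)


def parse_cuda_version(version: str) -> Tuple[int, int]:
    """Turn a string like '12.4' or '12.4.1' into (major, minor)."""
    parts = version.strip().split(".")
    major = int(parts[0])
    minor = int(parts[1]) if len(parts) > 1 else 0
    return major, minor


def cuda_supports_fp8(version: Optional[str]) -> bool:
    """True if the CUDA version is 12.4 or newer; False if unknown (None/empty)."""
    if not version:
        return False
    return parse_cuda_version(version) >= FP8_MIN_CUDA


if __name__ == "__main__":
    try:
        import torch  # torch.version.cuda is None on CPU-only builds
        cuda = torch.version.cuda
    except ImportError:
        cuda = None
    status = "OK" if cuda_supports_fp8(cuda) else "not supported"
    print(f"CUDA {cuda}: fp8 {status}")
```

Tuple comparison handles the major/minor ordering, so "12.6" and "13.0" pass while "12.1" and "11.8" do not.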

CivitAI wrecks the first image with its resizing :/ so here are some full-res links: https://ibb.co/G3TdLPy | https://ibb.co/2kDTkGz

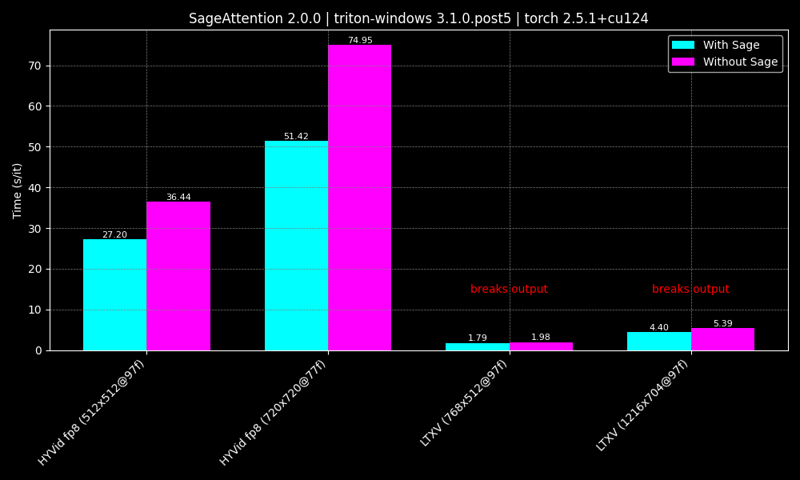

EDIT: LTXV v0.9.5 works with SageAttention; the "Breaks output" text does not apply to 0.9.5.

For LTXV v0.9.1 and below: with SageAttention 2.0.0, LTXV's output came out unusable, just grey/brown "stuff".

I did not see any differences between Hunyuan video with and without SageAttention.

I did not test Hunyuan video and LTXV using SageAttention 1.0.6.

SD1.5 and NVIDIA SANA are not compatible with SageAttention (headdim errors). LTXV is similar, except that it does get through inference; the output is a mess, however.
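The headdim errors come from the per-head dimension of the model's attention layers (channels divided by number of heads), which fused attention kernels only support for certain values. The sketch below illustrates this for SD1.5, whose UNet uses a fixed 8 heads on attention blocks with 320, 640 and 1280 channels. The supported set `{64, 96, 128}` is an ASSUMPTION for illustration only; check the README of the SageAttention version you installed. SANA's head dimensions are not covered here.

```python
# Sketch: compute per-head attention dims and flag ones outside an ASSUMED
# supported set. Check your SageAttention version's README for the real set.
from typing import Dict, Iterable

ASSUMED_SUPPORTED_HEAD_DIMS = frozenset({64, 96, 128})


def head_dim(channels: int, num_heads: int) -> int:
    """Per-head dimension of a multi-head attention layer."""
    if channels % num_heads:
        raise ValueError(f"{channels} channels not divisible by {num_heads} heads")
    return channels // num_heads


def unsupported_head_dims(
    channels_per_block: Iterable[int],
    num_heads: int,
    supported: frozenset = ASSUMED_SUPPORTED_HEAD_DIMS,
) -> Dict[int, int]:
    """Map each block's channel count to its head dim, keeping only unsupported ones."""
    result: Dict[int, int] = {}
    for channels in channels_per_block:
        d = head_dim(channels, num_heads)
        if d not in supported:
            result[channels] = d
    return result


if __name__ == "__main__":
    # SD1.5 UNet: 8 heads on 320/640/1280-channel attention blocks.
    print(unsupported_head_dims([320, 640, 1280], num_heads=8))
    # → {320: 40, 640: 80, 1280: 160}
```

SDXL's UNet instead fixes the head dimension at 64 and varies the head count, which lands inside the assumed set.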

I can prooooobably answer some questions about installing SageAttention on Windows, depending on the error/step, but I recommend checking the Issues tabs of the SageAttention and triton-windows repos first.

SageAttention 2.0.0 was compiled on Windows using https://github.com/thu-ml/SageAttention/pull/46