This guide might be incomplete, so feedback is appreciated.

I've trained a few LoRas using the civitai on-site trainer before, with good success. Its auto tagging isn't perfect, of course, and it doesn't support some base models, such as NoobAI. Honestly, NoobAI is significant enough to deserve its own category, but for now most LoRas and checkpoints based on it are labeled as "illustrious".

I've used OneTrainer before to fine-tune NVIDIA SANA. That didn't go particularly well (likely due to issues with the model itself), but it works well for SDXL LoRas.

I'm using an RTX 4070 SUPER with 12 GB of VRAM, along with 32 GB of system RAM and a 2 TB SSD to store data on.

Prerequisites

Some knowledge of git, python, and the terminal, as well as patience.

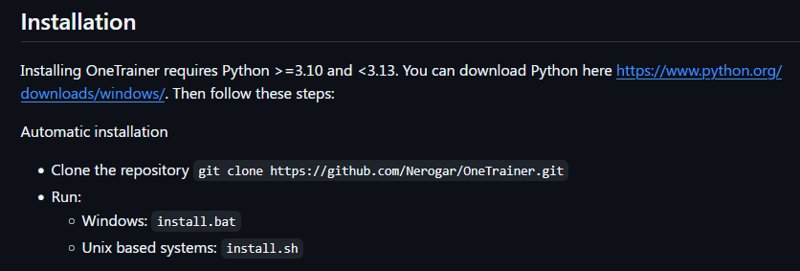

Installation

It's fairly simple: you just clone the GitHub repo and run the installation script.

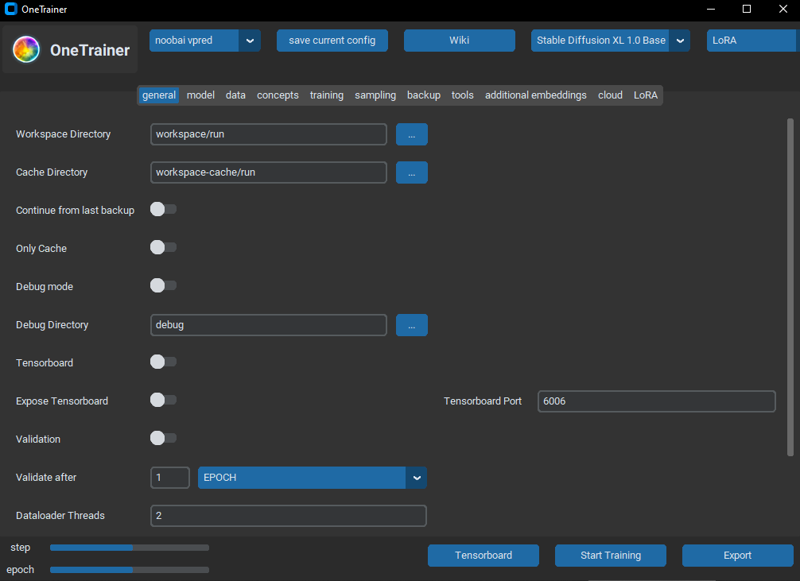

Then you can launch it by running start-ui.bat (or start-ui.sh, depending on your OS), and you're greeted with a GUI.

Settings

I'll try to explain most settings in the way I understand them, although I might be mistaken about some. I've mostly copied them from the default civitai LoRa trainer settings. You can refer to the wiki for more info.

The settings in the general tab don't do much (or they're self-explanatory), so I'll skip them.

Model tab

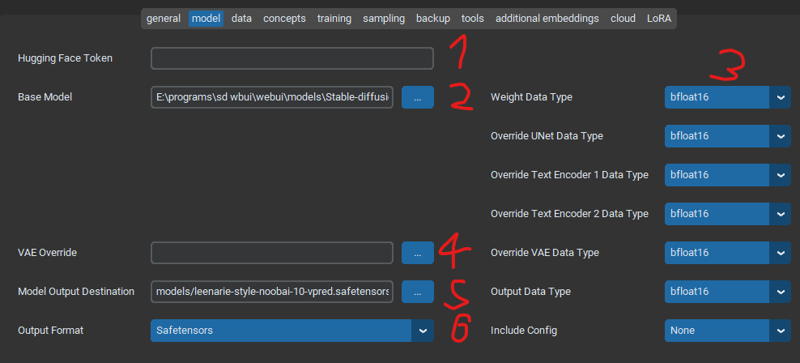

In the model tab you mostly get settings related to loading the model you want to train/fine-tune.

Some models on huggingface are gated, meaning you need to log in with your account to download them.

The base model path. It can either be a file path on your system or a huggingface repo link (e.g. Efficient-Large-Model/Sana_1600M_1024px_BF16_diffusers). It can download models from huggingface automatically, though I don't think there is an option for changing the save path. It can load a model from a single .safetensors file, which is what I'm doing here.

Weight data types. If you're not training with quantization, the type you want is most likely bfloat16. Some sd 1.5 based models use float32, but I haven't tested them as I think they're outdated. Most SDXL based models are in float16/bfloat16 so we're using that. Flux models are usually quantized to fp8, nf4, gguf q2-8.

VAE Override. There aren't many good VAEs for SDXL that I know of, with the exception of maybe Luna XL VAE, so just leave the field empty.

Output destination. Using models/<filename> just saves it in the OneTrainer/models directory, you can set any path.

Output format. Use safetensors.

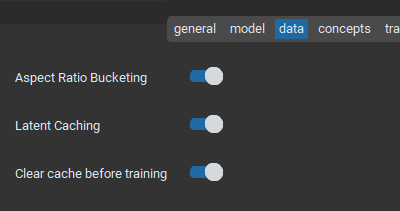

Data tab

Here you should probably keep all of these on. Aspect Ratio Bucketing allows for training on multiple aspect ratios, which you want in almost all cases. Clear cache before training is not required, but I prefer to keep it on so the cache takes up less space, even though the images then have to be re-processed every time you train.

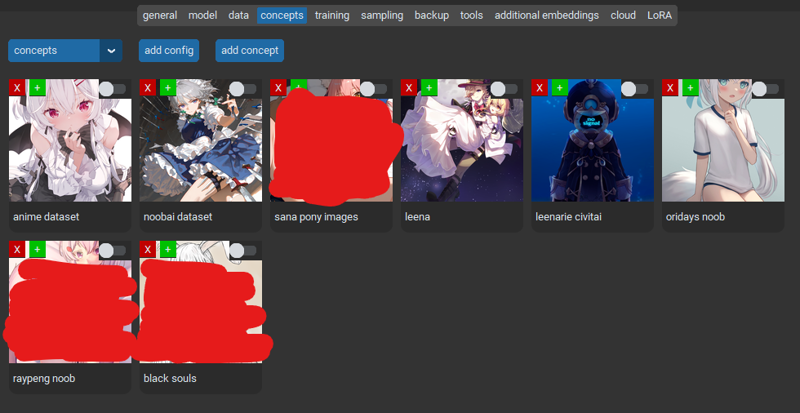

Concepts tab

(censored cuz of rules)

This one's pretty important: it's where you specify all the training data. For a LoRa you need around 20-50 images, and the quality of the dataset is somewhat more important than its size.

After clicking on the "add concept" button, a new element will be added to the list.

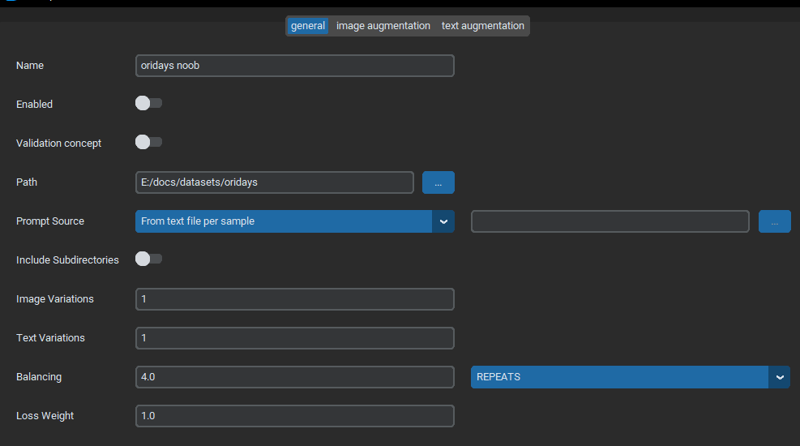

Clicking on the image makes a popup appear, in which you can change the settings.

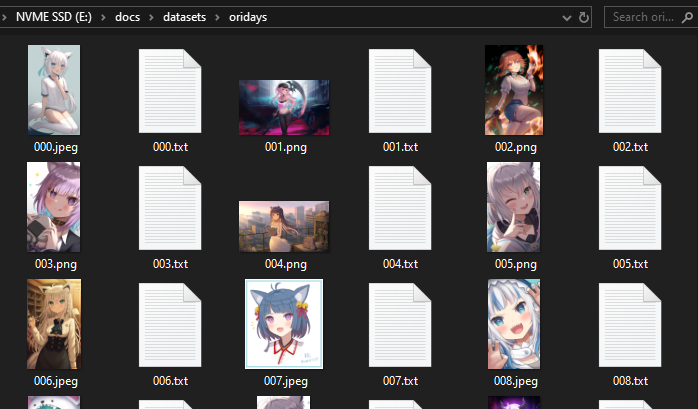

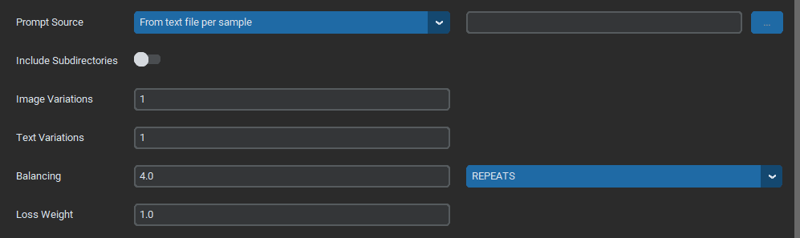

In the path you can specify the path to the folder with the images. Prompt Source should usually be "From text file per sample" as it allows you to use captions of any type and length, and is easiest to edit.

Caption files should have the same name as the images they belong to (e.g. 000.png and 000.txt), so that OneTrainer can recognize them. It'll ignore any other files in the folder.
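Since a missing or misnamed caption file is easy to overlook, here's a minimal sketch of a check you could run on the dataset folder before training. The function name and the list of image extensions are my own assumptions, not something OneTrainer provides.

```python
from pathlib import Path

# Image extensions to look for. This set is an assumption; extend it to match your dataset.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def find_missing_captions(dataset_dir):
    """Return the names of images that have no same-named .txt caption next to them."""
    missing = []
    for path in sorted(Path(dataset_dir).iterdir()):
        is_image = path.suffix.lower() in IMAGE_EXTENSIONS
        if is_image and not path.with_suffix(".txt").exists():
            missing.append(path.name)
    return missing


# Example usage (the folder name is a placeholder):
# for name in find_missing_captions("my_dataset"):
#     print("no caption for", name)
```

An empty result means every image has a caption file with a matching name.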

Here's what the contents of 000.txt look like.

oridays, 1girl, animal ears, virtual youtuber, fox ears, solo, shirakami fubuki, fox girl, tail, long hair, thighhighs, shirt, fox tail, white hair, ahoge, buruma, blush, white shirt, sensitive, white thighhighs, animal ear fluff, open mouth, looking at viewer, sitting, hair between eyes, sidelocks, green eyes, simple background, blue buruma, earrings, jewelry, short sleeves, gym uniform, braid, ponytail, no shoes, wariza, extra ears, single braid

For a LoRa, all images should have a common tag for the concept you're trying to train, whether that is a style, character or something else. Auto tag generation is usually preferred to doing it manually, due to the amount of work required otherwise.
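If you edit captions by hand or tag them with a different tool, the common tag can end up missing from some files. Below is a small sketch that puts it at the front of every caption. It is not the attached tagging.py, the helper names are hypothetical, and it assumes comma-separated tags like in the example above (where the first tag, oridays, appears to be the common one).

```python
from pathlib import Path


def ensure_common_tag(caption, common_tag):
    """Return the caption with common_tag as its first tag, without duplicating it."""
    tags = [t.strip() for t in caption.split(",") if t.strip()]
    tags = [t for t in tags if t != common_tag]
    return ", ".join([common_tag] + tags)


def tag_folder(dataset_dir, common_tag):
    """Rewrite every .txt caption in dataset_dir so that it starts with common_tag."""
    for path in Path(dataset_dir).glob("*.txt"):
        text = path.read_text(encoding="utf-8")
        path.write_text(ensure_common_tag(text, common_tag), encoding="utf-8")


print(ensure_common_tag("1girl, fox ears, solo", "oridays"))
```

Note that tag_folder overwrites the caption files in place, so keep a copy of the dataset if you want to be able to undo it.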

I use wd-swinv2-tagger-v3-hf due to its small size and speed, along with the tagging.py script (see attachments). The script should be run in a Python environment with all the right libraries, I just use the OneTrainer one.

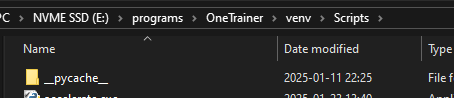

Find this folder in your onetrainer install path.

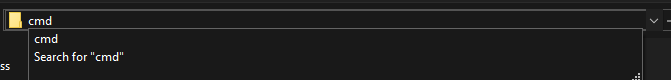

Open cmd by typing it in the path field and pressing enter.

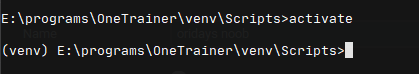

Use the "activate" command to activate the python environment.

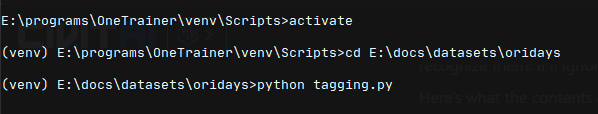

cd into your dataset folder and run the python script

It'll generate all the captions automatically, provided you have the wd tagger model in the same folder as your images. Ideally you should use high quality versions of the images and remove most watermarks (via photoshop, for example), otherwise the model might learn them.

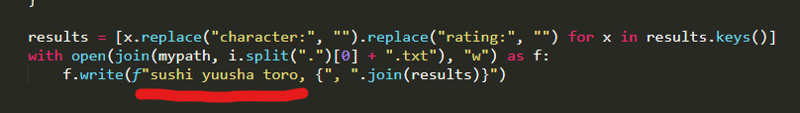

You can change the common tag in the script by just editing this line:

Once you've got your data ready, you might need to change some more settings.

Image variations let you automatically generate slightly different versions of the same image (for every single one) by applying transformations like flipping, cropping, etc. I usually set it at 1 or 2 (to get both the flipped and unflipped version).

Text variations I don't really use, I don't think there is much need for them for LoRas.

Balancing: this one should be set according to the number of images in the dataset. I train at batch size 4 for 10 epochs, and I get good results with the following equation:

images * repeats * epochs = ~2000

CivitAI usually sets repeats by default. I'd recommend 1-6, depending (once again) on the size of the dataset. This one has 36 images, so I'm using 4 repeats to train on around 144 images per epoch.
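To make the arithmetic concrete, here's a quick sketch using the numbers from this dataset (36 images, 4 repeats, 10 epochs, batch size 4). The step count assumes a partial last batch still counts as a step and ignores aspect ratio bucketing, which may change the real number slightly.

```python
import math


def total_samples(images, repeats, epochs):
    """Number of images the model sees over the whole run."""
    return images * repeats * epochs


def optimizer_steps(images, repeats, epochs, batch_size):
    """Rough number of training steps, counting a partial last batch as one step."""
    steps_per_epoch = math.ceil(images * repeats / batch_size)
    return steps_per_epoch * epochs


print(total_samples(36, 4, 10))       # 1440
print(optimizer_steps(36, 4, 10, 4))  # 360
```

1440 is a bit under the ~2000 target, which is fine; the equation is a rule of thumb, not a hard requirement.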

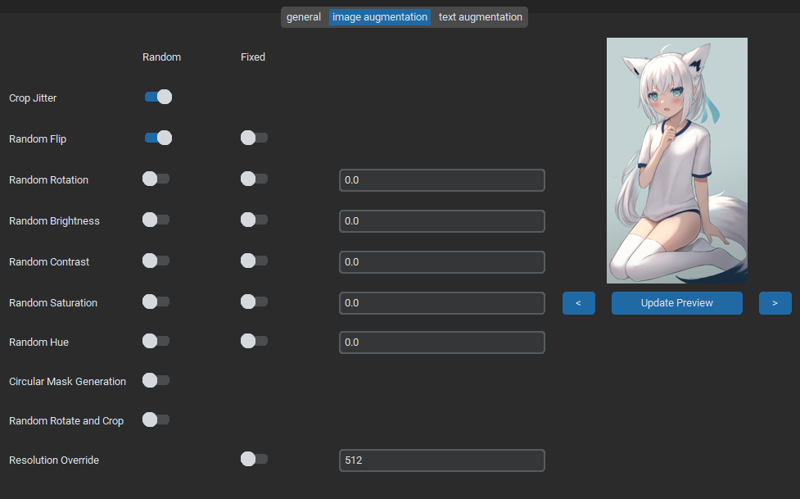

The image augmentation tab looks like this:

It's used to set the settings for image variations, usually I just keep Crop Jitter and Random Flip on, without touching anything else. I won't go into the text augmentation tab, because I haven't used it much.

Training tab

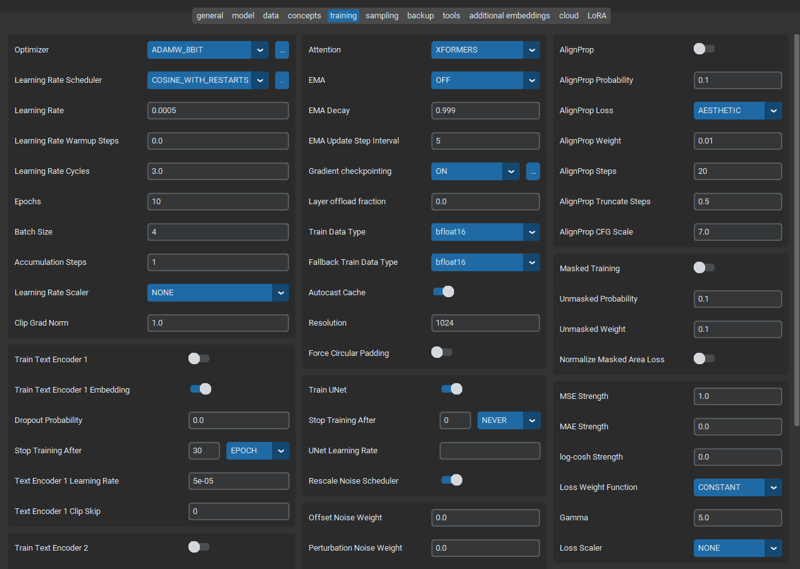

Lots of settings here, so I'll try to be brief. For the optimizer, you can use either ADAMW, ADAMW_8BIT, or ADAFACTOR. Prodigy is great if you don't want to experiment with LR, but it's usually slower and takes more memory. There are other optimizers too, but they require more configuration.

(first panel)

Learning rate scheduler: controls the learning rate over time. Constant isn't particularly good (it tends to overfit); cosine or cosine with restarts gives the best results for me.

Learning rate (LR): for SDXL LoRas 5e-04 (0.0005) works well; I've just copied the value from the civitai trainer default. A higher value will make the model learn faster, but might overtrain it and fry the output images. If you're using Prodigy, it should be set to 1.

Learning rate warmup steps: this one I've set to 0, because of the LR scheduler.

Learning rate cycles: this one depends on the scheduler used, for cosine with restarts on 10 epochs I use a value of 3.
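To show what the scheduler and the cycle count do together, here's a sketch of a cosine with (hard) restarts schedule. It follows the commonly used formula and is not copied from OneTrainer, so the exact curve in the trainer may differ; the default values are the ones from this guide.

```python
import math


def cosine_with_restarts_lr(step, total_steps, base_lr=5e-4, cycles=3):
    """Learning rate at a given step: each cycle decays from base_lr towards 0, then restarts."""
    if step >= total_steps:
        return 0.0
    # Position inside the current cycle, from 0.0 (just restarted) to almost 1.0.
    cycle_progress = ((step * cycles) % total_steps) / total_steps
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * cycle_progress))


# With 360 steps and 3 cycles, the LR jumps back to base_lr at steps 0, 120 and 240.
for step in (0, 60, 119, 120, 240, 359):
    print(step, cosine_with_restarts_lr(step, 360))
```

Each restart gives the model a short burst of high learning rate, which is why the number of cycles is usually kept small relative to the number of epochs.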

Loss Weight Function controls how the loss (how close the generated images are to the dataset) is calculated. DEBIASED_ESTIMATION tends to yield slightly better results than CONSTANT, although since debiased estimation is meant to correct the bias of epsilon prediction models, I'm not sure if it's required for V pred.

Epochs: I generally keep them at 10-12. If you want the model to learn more, you can just increase the number of image repeats.

Batch size: good values to use are powers of 2 (1, 2, 4, 8, 16 etc.). You might want to increase it until you run out of VRAM, but training at a batch size of 4 works well enough.

Accumulation steps: has a similar effect to a larger batch size (gradients from several batches are summed before each update), except it costs training time instead of VRAM.

(second panel)

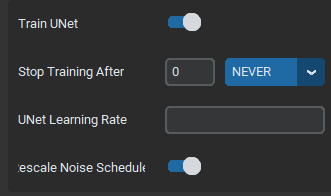

Gradient checkpointing should be on if you don't have much VRAM (e.g. 12 GB or less).

Layer offload fraction is related to gradient checkpointing. Increase it to offload part of the model to system RAM instead of keeping it in VRAM, which lets you train at higher resolutions, batch sizes, etc.

Resolution can be between 512 and 1024; normally SDXL LoRas are trained at 1024. You can also specify multiple resolutions by separating them with commas. It might improve the results, at the cost of multiplying the amount of training by the number of resolutions.

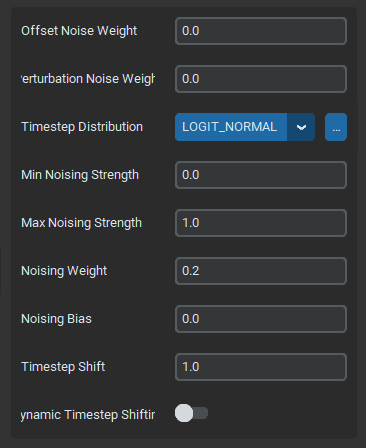

Timestep Distribution changes the way the noise is distributed while training. V-pred models require LOGIT_NORMAL to work correctly (as far as I could tell).

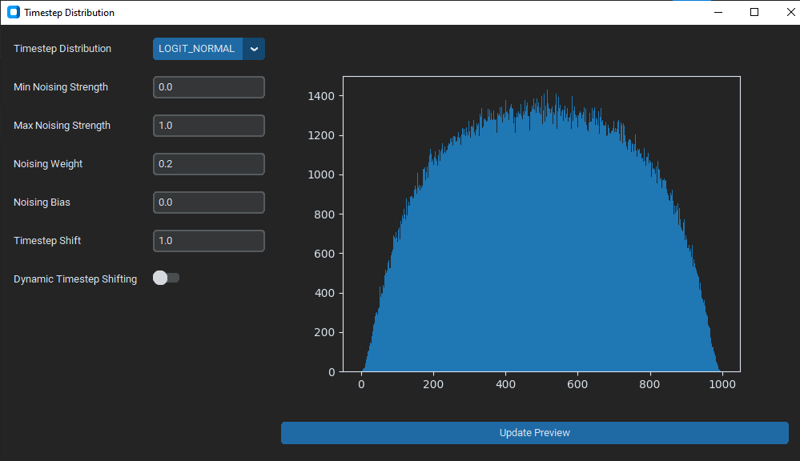

Clicking on the three dots opens a window:

Here you can see how the timesteps are distributed, the X axis being timesteps, the Y axis being the noise.

Noising Weight controls the weight of the distribution towards higher noise. Increasing it tends to make the model learn more of the lower frequency details of the image (e.g. composition, poses, clothes); decreasing it has the opposite effect. I've used 0 before, but found that 0.2 gives me slightly better results.

Timestep Shift moves the sampled timesteps towards higher noise. As far as I know it comes from flow-matching models such as SD 3.5 and SANA, which used a value of around 3. I couldn't find what value NoobAI used for their training, so I'm currently using 1. Increasing it makes the model learn more of the composition and less of the brush strokes, and vice versa.
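To get a feel for these settings, here's a rough sketch of logit-normal timestep sampling with a shift applied. The shift formula is the one used by SD3-style schedulers; the mean and std parameters are stand-ins I made up for illustration, and how OneTrainer maps Noising Weight onto the distribution internally is not something I've verified.

```python
import math
import random


def sample_logit_normal_timestep(rng, mean=0.0, std=1.0, shift=1.0, num_timesteps=1000):
    """Draw one training timestep from a logit-normal distribution, then apply a shift."""
    z = rng.gauss(mean, std)
    t = 1.0 / (1.0 + math.exp(-z))  # sigmoid squashes the normal sample into (0, 1)
    # SD3-style shift: shift > 1 pushes t towards 1 (more noise), shift = 1 changes nothing.
    t = shift * t / (1.0 + (shift - 1.0) * t)
    return min(num_timesteps - 1, int(t * num_timesteps))


rng = random.Random(0)
no_shift = [sample_logit_normal_timestep(rng) for _ in range(10000)]
rng = random.Random(0)
shifted = [sample_logit_normal_timestep(rng, shift=3.0) for _ in range(10000)]
print("mean timestep, shift 1:", sum(no_shift) / len(no_shift))
print("mean timestep, shift 3:", sum(shifted) / len(shifted))
```

The logit-normal shape concentrates samples around the middle timesteps, and a larger shift moves that peak towards the noisy end, which matches the "more composition, less brush strokes" behaviour described above.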

The rest of the settings I didn't really touch, they stayed the same as in the default SDXL lora config.

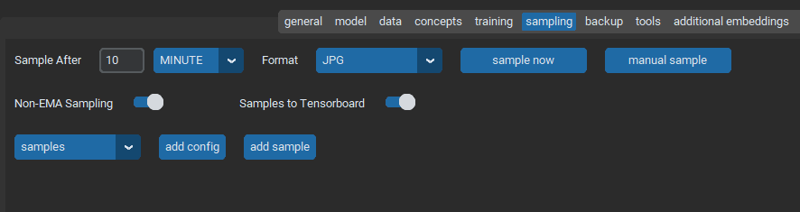

Sampling tab

Here you can enable automatic image generation at different epochs to see how the model learns. I don't really use it.

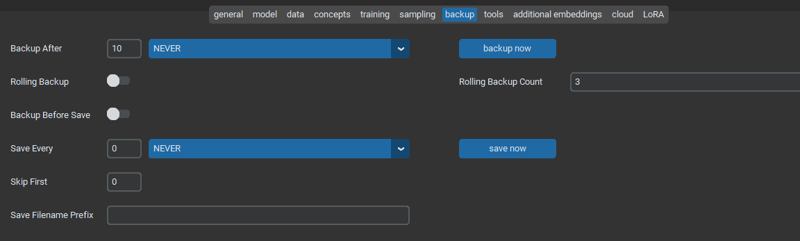

Backup

You can set automatic backups here. I (once again) don't really use them, because it only takes around 20-30 minutes for me to train a typical LoRa, so the risk isn't that high. Also, they can take up huge amounts of space: the first time I trained SANA, it backed up around 400 gigabytes.

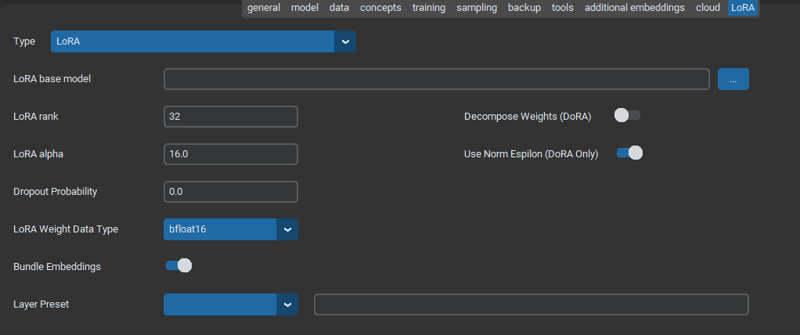

Lora tab

Here are your LoRa model settings. For the weight data type, match it with the one used by your model.

Rank and alpha are a bit weird, but for default style LoRas I use 32 and 16 respectively. Higher rank increases the number of trainable parameters, so both VRAM usage and the amount of knowledge the LoRa can store go up. Higher alpha increases the learning rate multiplier (the LoRa's output is scaled by alpha / rank). I can't say much more since I haven't experimented with it; I use the civitai defaults.
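Here's a tiny sketch of how rank and alpha play out numerically for a standard LoRa (DoRa works somewhat differently). The 1280 layer width is just an illustrative size, and the helper names are mine.

```python
def lora_scale(rank, alpha):
    """Multiplier applied to the LoRa's output: alpha / rank."""
    return alpha / rank


def lora_params(in_features, out_features, rank):
    """Trainable parameters a LoRa adds to one linear layer.

    The down matrix is in_features x rank and the up matrix is rank x out_features.
    """
    return rank * (in_features + out_features)


print(lora_scale(32, 16))           # 0.5
print(lora_params(1280, 1280, 32))  # 81920
print(lora_params(1280, 1280, 64))  # 163840
```

Doubling the rank doubles the parameter count per layer, while keeping alpha at half the rank keeps the scale at 0.5.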

DoRa may improve the results, I recommend trying it for yourself.

Vpred model issues

By default, OneTrainer doesn't train SDXL v-pred based models correctly. To fix this, enable Rescale Noise Scheduler in the training tab.

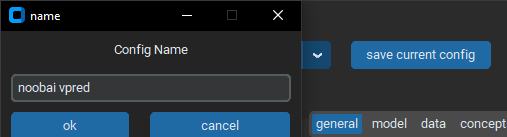

In case it didn't work, you have to manually save your config and edit it in a text file to fix it.

After saving it, navigate to the OneTrainer/training_presets folder and open your config file in your favorite text editor.

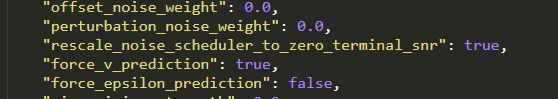

For v prediction models you have to set these variables to true.

"rescale_noise_scheduler_to_zero_terminal_snr": true,

"force_v_prediction": true,

After that, save the file and reload the config from OneTrainer, and click start training.
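If you'd rather not edit the file by hand, the same change can be scripted. This is a minimal sketch that assumes the preset is plain JSON with both keys at the top level; the function name and the example file name are placeholders.

```python
import json
from pathlib import Path

# The two settings named above, as they should appear in the preset.
VPRED_FLAGS = {
    "rescale_noise_scheduler_to_zero_terminal_snr": True,
    "force_v_prediction": True,
}


def enable_vpred(config_path):
    """Set the v-prediction flags in a saved preset and write it back to disk."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    config.update(VPRED_FLAGS)  # assumes both keys live at the top level of the JSON
    path.write_text(json.dumps(config, indent=4), encoding="utf-8")
    return config


# Example usage (the preset name is a placeholder):
# enable_vpred("OneTrainer/training_presets/my_lora.json")
```

Keep a backup copy of the preset before running it, in case the layout of your config differs from what this sketch assumes.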

You might get an out of memory error. That means you have to decrease the batch size, resolution or LoRa rank, or increase layer offloading.

Otherwise, the model will train for a while (20-30 minutes for me on a 4070 SUPER) and it'll be saved in the path specified. Then you can just move the file to your favorite generation frontend (webui forge, comfyui, swarmui etc.) and use it as a normal LoRa with the common keyword you specified.

I've attached my config to this article, feel free to use it.

thx 4 reading

edit 1: some formatting + added vpred info

2025-02-22 edit: formatting, added loss function and timestep distribution info.