When training a LoRA, almost everybody is building a dataset from external resources for style, characters, clothing and so on...

But when I train a LoRA, I generate the dataset exclusively myself, because I want to import something from one model to another, or just to "coalesce" something that is more difficult to generate with a low-end GPU from the stone age like mine (and I prefer not scraping for pictures).

But something has been bothering me for a while: the "make a LoRA" option from Supermerger, which uses the difference between two models to generate a LoRA.

I had tested it previously and, well, it clearly didn't work... but was I missing something? And the something was mainly... making sure both models were aligned regarding CLIP and the weights for the subtraction (+ the size of the resulting LoRA):

If the CLIP is messed up between the models, you get a horrible "The algorithm failed to converge because the input matrix is ill-conditioned" (bad matrix, bad!)

Same thing if you try to be a smart-ass and use different weights for the two models: it will most certainly mess up the result and you are in for a world of messy pixels.

After a bit of work, I managed to "clean" a base model from BancinIXL_Next for my extraction and I was able to test my theory. (BTW, I call it LoRA distillation, inspired by the "distillation technique" that involves training a smaller model using a larger one, but that is not what is happening here. A math operation called "Singular Value Decomposition" is used to create the target LoRA; there is no training with epochs/steps and so on.)
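For the curious, here is a minimal sketch of the idea on a single weight matrix, using NumPy. It is only an illustration under assumptions: the `extract_lora` helper and the toy matrices are made up for the example, and this is not the code Supermerger actually runs.

```python
import numpy as np

def extract_lora(w_tuned, w_base, rank):
    """Approximate (w_tuned - w_base) with two low-rank factors (hypothetical helper)."""
    delta = w_tuned - w_base                       # what the tuned model changed
    u, s, vh = np.linalg.svd(delta, full_matrices=False)
    up = u[:, :rank] * s[:rank]                    # (out, rank): keep the largest singular values
    down = vh[:rank, :]                            # (rank, in)
    return up, down

rng = np.random.default_rng(0)
w_base = rng.normal(size=(64, 48))
# Toy "tuned" layer: the base plus a change that really is rank 4.
change = rng.normal(size=(64, 4)) @ rng.normal(size=(4, 48))
w_tuned = w_base + change

up, down = extract_lora(w_tuned, w_base, rank=4)
rel_err = np.linalg.norm(up @ down - change) / np.linalg.norm(change)
print(up.shape, down.shape, rel_err)
```

In this toy case the change is exactly low-rank, so `up @ down` rebuilds it almost perfectly. With real checkpoints the difference is not low-rank, so the extracted LoRA is only an approximation.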

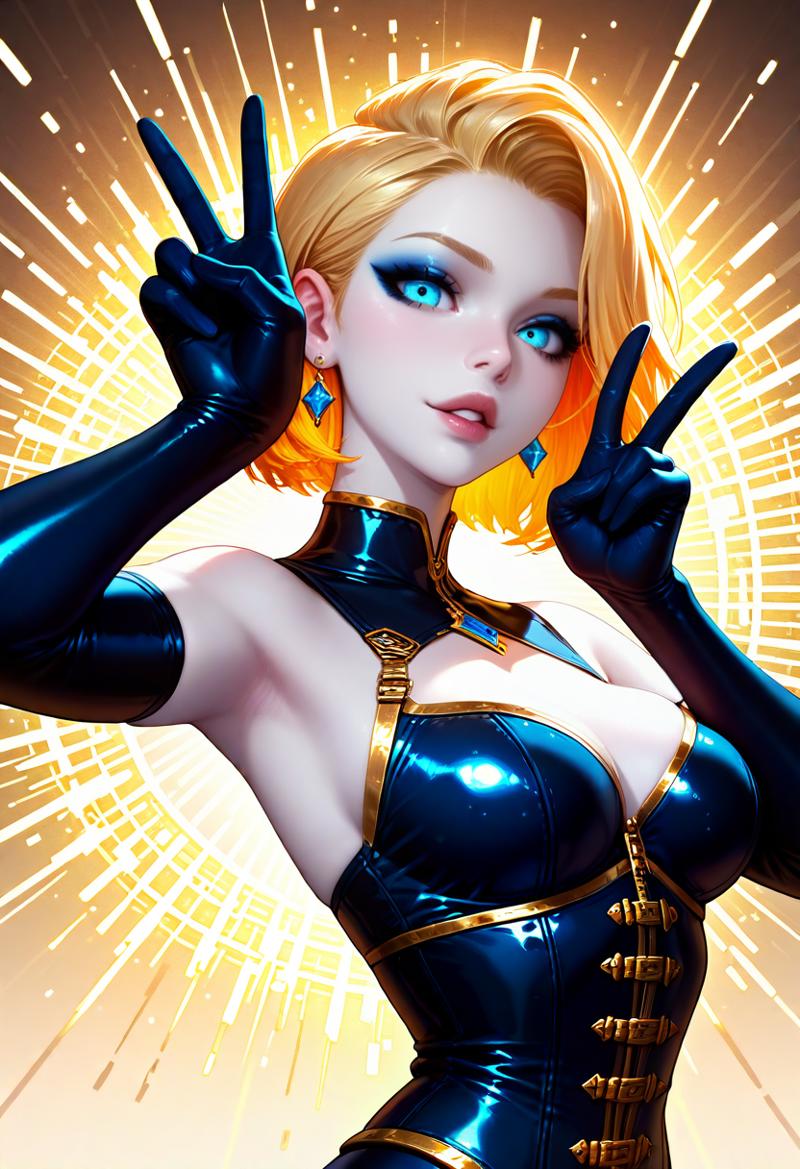

Time to test! Here is a starting point picture using unmodified BancinIXL:

IllusP0s, 1girl, upper body, beautiful face, perfect mouth, perfect lips, parted lips, pale skin, looking at viewer, dynamic pose, eyeliner,perfect eyes, short hair, golden colored hair, elbow gloves,v,earrings, corset, gold trim, latex belts, photoshoot, good lighting, abstract background, blue color cast

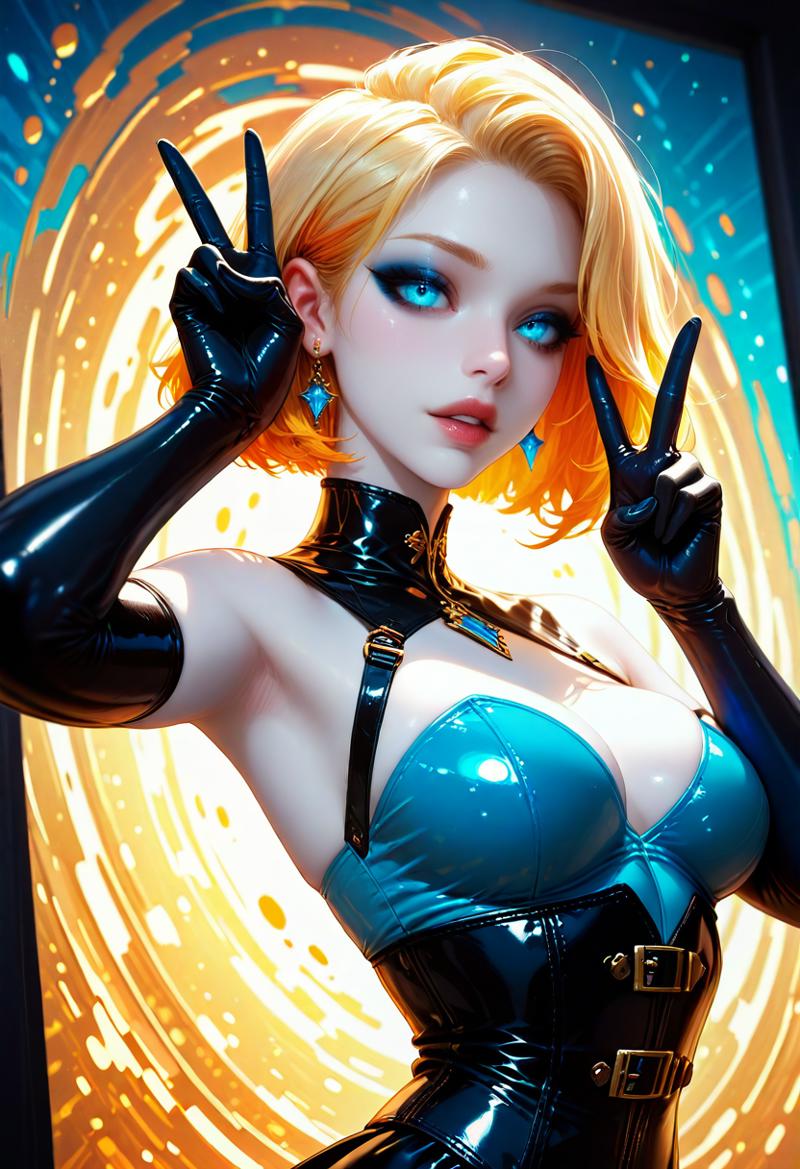

And here is the same prompt/seed with the messed-up checkpoint I am currently working on for my own use.

It is a mess of merged checkpoints and LoRA additions (and subtractions using negative values!), and I was basically playing mad scientist to get a "Psychonex-like" checkpoint with Illustrious. I was still wondering if I would release it.

It involved several checkpoints and LoRAs, and merging back some BancinIXL each time it went too far. Here is a list of the most relevant resources involved:

As per usual, hands kinda died in the process, and distributing a 6.5GB file once again for "just" a change in style is a pain in the ***.

So, it was a good candidate for testing LoRA extraction. The key info here is that a LoRA extracted this way CAN'T replace a full-on checkpoint. You can't get everything a 2.6 billion parameter U-Net can do (with text encoders and VAE included, SDXL is a 3.5 billion parameter model, BTW) out of the comparatively few values stored in a LoRA.

But with a large enough LoRA, you shouldn't lose too much stuff: most of the model is there for generating the whole image, and capturing small changes is what a LoRA does best, so it's "good enough" for "style" capturing. I went for a 128 dimension LoRA here for my test (for comparison, most LoRAs are 32 dimensions, some more complex ones 64).
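To give a rough feel for what "dimension" buys you, here is a small back-of-the-envelope sketch. For one linear layer, a LoRA of dimension r stores two thin matrices, so it holds r × (in + out) values instead of in × out. The 1280 × 1280 layer size below is just an assumed example, not a measurement of any real checkpoint.

```python
def lora_params(in_features, out_features, rank):
    """Values stored by a LoRA for one linear layer: down (rank x in) + up (out x rank)."""
    return rank * (in_features + out_features)

in_f = out_f = 1280          # assumed layer size, for illustration only
full = in_f * out_f          # values in the full weight matrix

for rank in (32, 64, 128):
    n = lora_params(in_f, out_f, rank)
    print(f"dimension {rank:>3}: {n:>7,} values ({n / full:.0%} of the full layer)")
```

The count grows linearly with the dimension, so a 128 dimension LoRA holds four times as many values per layer as a 32 dimension one.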

After cleaning the CLIP of my current checkpoint to help the process, I ended up with my U-Net only 650MB LoRA, and here is what it does with BancinIXL at strength 1:

Oh Oh! As mentioned, not quite the same but interesting enough! The idea behind doing the "hard" part (extracting) at high dimension was to capture a good amount of information in this step, but not to stop there. A 650MB LoRA is still a lot and, at this point, no model is involved anymore. Why not try an extra step? Enter dimension rework.

Supermerger can also build a new "smaller" LoRA from an existing LoRA, by "merging" a single LoRA and choosing a new target dimension.

Let's try to get this one down to the more classic 32 dimensions (and, in the same step, crank up the effect a bit with a 1.05 strength). A ~160MB LoRA is easier to distribute AND softer on my poor, poor GPU :D
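Here is a minimal sketch of what such a dimension rework could look like on one layer, again with NumPy. Assumptions to keep in mind: `resize_lora` is a made-up helper, the matrices are random toys, and applying the strength as a plain multiplier on the rebuilt change is a guess about how the option behaves, not something checked against Supermerger's code.

```python
import numpy as np

def resize_lora(up, down, new_rank, strength=1.0):
    """Rebuild the change stored in a LoRA layer and re-factor it at a smaller rank."""
    delta = strength * (up @ down)                 # full-size change, scaled (assumed behaviour)
    u, s, vh = np.linalg.svd(delta, full_matrices=False)
    return u[:, :new_rank] * s[:new_rank], vh[:new_rank, :]

rng = np.random.default_rng(0)
up128 = rng.normal(size=(320, 128))                # toy 128 dimension layer
down128 = rng.normal(size=(128, 320))

up32, down32 = resize_lora(up128, down128, new_rank=32, strength=1.05)

# Stored values shrink linearly with the dimension: 32 / 128 = 0.25
size_ratio = (up32.size + down32.size) / (up128.size + down128.size)
print(up32.shape, down32.shape, size_ratio)
```

That factor of four is consistent with going from about 650MB to about 160MB. The smaller LoRA keeps only the strongest directions of the change, so some detail is dropped along the way.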

Oh yeah! We are very close to something I could release without shame :D Now, it will take a bit of work to make this process smoother and fine-tune a few values, but this should give me a way to release "style" LoRAs for BancinIXL without going full checkpoint (and messing up LoRA training) until I find a mix of "styles" I want to merge as a new target :D

... ......

But what about other Illustrious checkpoints? Let's do an experiment at strength 0.8 on top of IL-mergeij, released two days ago by Reijlita. This checkpoint is different enough from BancinIXL to check whether things go horribly wrong or not:

Great, no great old ones summoned here! This is going to work nicely :D Time to wrap this up and kick off the showcase dynamic prompt listing :D

Thanks for reading! 🥰

NB: Supermerger is a great tool that leverages a lot of work by cloneofsimo, kohya, bbc-mc, etc... and is maintained with love by hako-mikan. It is still being developed and patches are welcome! :D

But for now, on my side, I have kept an A1111 setup just for this tool (on Forge, it breaks most of the time) and also reverted to an older commit (97030c89bc94ada7f56e5adcd784e90887a8db6f) to keep it from breaking what worked (and I rent a GPU instance for this) ^^;

Plus, it will allow me to start exploring SDXL block merging and its impact on Illustrious checkpoints, as shown here

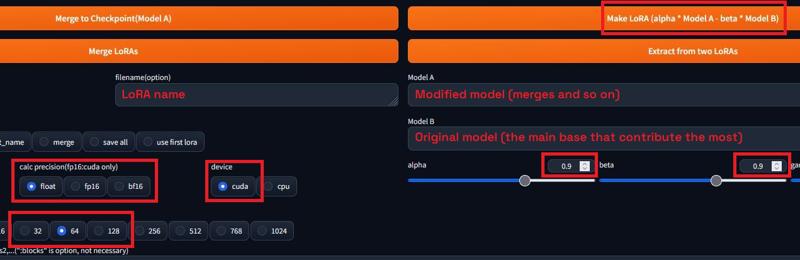

Update: here is the setup in Supermerger

From my tests, alpha and beta must be equal and can't be "1"; 0.9 works fine.

The dimension is the target LoRA dimension: 32 should work fine directly. In any case, the larger the LoRA, the more VRAM is consumed during the operation.

The computation is better done in float precision, and CUDA is needed to be able to execute the SVD.

Try it directly at first; if it fails with the "converge failure" error, try replacing the CLIP of model A with the one from model B.
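If you would rather do the CLIP replacement by hand, the idea can be sketched like this on two state dicts (plain Python dicts mapping weight names to tensors). Assumptions: `swap_text_encoder` is a made-up helper, the toy dicts below stand in for real checkpoints, and the `conditioner.embedders.` prefix is what I expect for text encoder keys in SDXL-format checkpoints, so check the key names in your own files first.

```python
def swap_text_encoder(model_a, model_b, prefix="conditioner.embedders."):
    """Return model A's weights with its text encoder (CLIP) keys taken from model B."""
    # Keep everything from A except its text encoder weights...
    merged = {k: v for k, v in model_a.items() if not k.startswith(prefix)}
    # ...then bring in the text encoder weights from B.
    merged.update({k: v for k, v in model_b.items() if k.startswith(prefix)})
    return merged

# Toy stand-ins for two checkpoints (real values would be tensors).
model_a = {
    "model.diffusion_model.w": "unet_A",
    "conditioner.embedders.0.w": "clip_A",
}
model_b = {
    "model.diffusion_model.w": "unet_B",
    "conditioner.embedders.0.w": "clip_B",
}

aligned_a = swap_text_encoder(model_a, model_b)
print(aligned_a)
```

After the swap, both models share the same text encoder, so the subtraction only sees differences in the U-Net.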