Introduction

This is a rough guide to the main steps of training a Lora, based on my experience from training over 200 Loras.

Spoiler for the impatient: use SD-Scripts with 15-20 images, caption with plain text, and train with the prodigy optimizer for around 35 epochs at dim 16 and alpha 16 with 768px training resolution.

To make it easier to follow I will use my "Ellen Page" Lora as an example.

All the necessary files are in the attached zip file.

Preparation

Folder Structure

My base directory is /workspace. You can adapt as necessary.

The structure I use is the following:

## MODELS:

/workspace/models/t5xxl_fp16.safetensors

/workspace/models/clip_l.safetensors

/workspace/models/ae.safetensors

/workspace/models/flux1-dev.safetensors

## TRAINING SET:

/workspace/20241231-EllenPage/imageset/1_Ellen Page woman/1.jpg

/workspace/20241231-EllenPage/imageset/1_Ellen Page woman/1.txt

/workspace/20241231-EllenPage/imageset/1_Ellen Page woman/2.jpg

/workspace/20241231-EllenPage/imageset/1_Ellen Page woman/2.txt

....

/workspace/20241231-EllenPage/prompt.txt <- Attached to the post

/workspace/20241231-EllenPage/config.toml <- Attached to the post

/workspace/20241231-EllenPage/output/

Image set

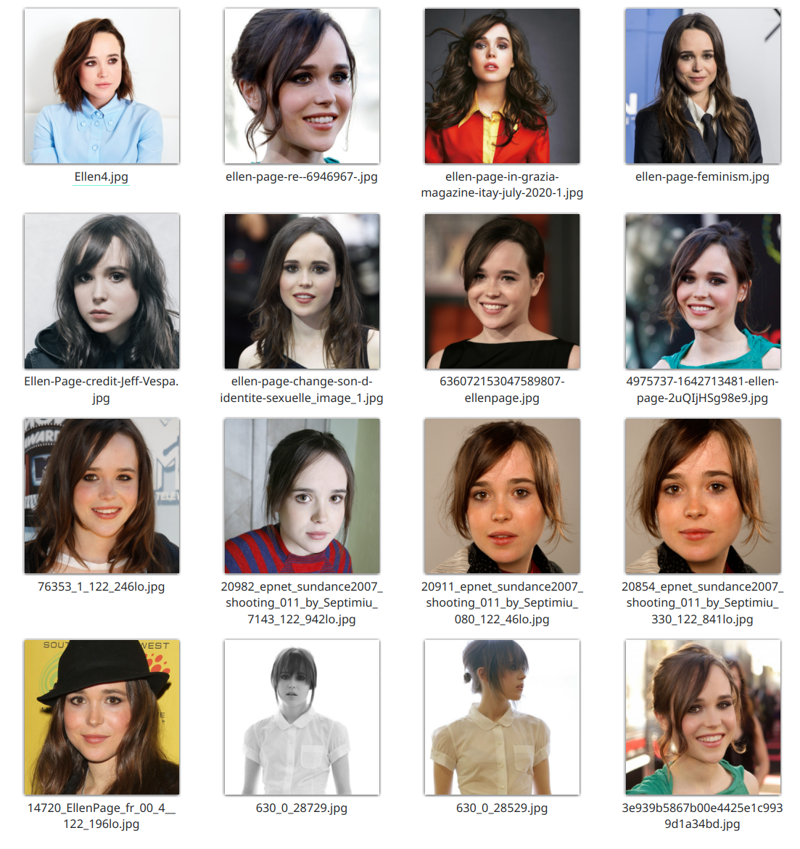

Try to get between 10 and 30 good-quality images:

at least 1024 pixels on the short side

mainly portrait shots

crop out any watermarks or text

the more diverse the photos, the more flexible your Lora will be

cropping is not absolutely necessary. I try to crop to a 1:1 ratio if possible, but you can mix and match

if you want the Lora to also create realistic body proportions, include a few (2-5) full body shots with tight or minimal clothing

scale the photos down to a sensible resolution (1024 or 1536 pixels), as training will be done at either 1024 or 768

this is important: don't include low-resolution or blurry photos. Even one bad image will reduce the quality of the Lora by a lot
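
The size rules above can be checked with a few lines of Python. This is only a sketch: the helper names are made up for illustration, I am assuming that the "sensible resolution" refers to the short side, and the actual resizing relies on the third-party Pillow package.

```python
from pathlib import Path

MIN_SHORT_SIDE = 1024  # minimum short side recommended in the list above


def is_large_enough(width: int, height: int, min_short: int = MIN_SHORT_SIDE) -> bool:
    """True if the short side of the image meets the minimum."""
    return min(width, height) >= min_short


def scaled_size(width: int, height: int, target_short: int = 1024) -> tuple[int, int]:
    """Return (width, height) with the short side reduced to target_short.

    Assumption: the target applies to the short side. The function never
    upscales; images at or below the target are returned unchanged.
    """
    short = min(width, height)
    if short <= target_short:
        return width, height
    return round(width * target_short / short), round(height * target_short / short)


def prepare_image(src: Path, dst: Path, target_short: int = 1024) -> bool:
    """Downscale src into dst. Returns False (and writes nothing) if src is too small."""
    from PIL import Image  # Pillow, third-party: pip install Pillow

    with Image.open(src) as img:
        if not is_large_enough(*img.size):
            return False
        resized = img.convert("RGB").resize(
            scaled_size(*img.size, target_short), Image.LANCZOS
        )
        resized.save(dst, quality=95)
    return True
```

For example, a 4000x3000 photo would be scaled to 1365x1024, while an 800x1200 photo would be rejected by `prepare_image` because its short side is below 1024.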

This is an example of the set used for the Ellen Page Lora:

Captioning

I'm still not 100% sure what the best style of captioning is, but in general I try to use a descriptive text that includes information about the person: pose, age, hairstyle, expression, clothing as well as information about the style of the photo and the background.

Captions must be saved in a ".txt" file with the same name as the corresponding image.

Examples are:

"This is a high-resolution photograph of Ellen Page, a Canadian actress. She is seated against a plain, white background, which makes her the focal point of the image. Her hair is a medium brown, styled in a slightly tousled, shoulder-length cut with layers. She has a fair complexion and is looking slightly to her right with a soft, contemplative expression. Her eyes are a striking brown, and her lips are naturally pink, slightly parted. She is wearing a light blue, long-sleeved shirt with a button-down collar, which is neatly buttoned to the top. The fabric appears to be smooth and slightly glossy, suggesting a synthetic blend. The lighting is soft and even, highlighting her features without creating harsh shadows. The overall composition is simple and minimalist, emphasizing Ellen's natural beauty and the clean, modern aesthetic of the setting.

or

"This is a black-and-white photograph of Ellen Page, a Canadian actress. She is standing against a plain, white background, which makes her the focal point of the image. She has a fair complexion and is wearing a crisp, white, short-sleeved button-up shirt with a collar and a chest pocket. The shirt is neatly pressed, and the sleeves are rolled up to her elbows, giving a casual yet polished look. Her hair is cut into a bob with bangs that frame her face, and her expression is neutral, with a slight hint of a smile. The photograph is taken from a front-facing angle, slightly above her shoulders, emphasizing her upper body and face. The lighting is soft and even, highlighting her features and the texture of her hair and clothing. The overall style of the photograph is minimalist and clean, focusing on the subject's appearance and demeanor."

I'll let you figure out which images match those descriptions.

Using a VLM to caption

You can use a large vision-language model (VLM) to caption your set. See the example script "describe_image.py" for inspiration. The script uses qwen-2-vl-72b-instruct via OpenRouter for the captioning. You can also do it locally with an ollama instance; in that case you will need to change MODEL_NAME and API_URL. Before using the script, install the required Python modules if you do not already have them (PIL, which is provided by the Pillow package, and tqdm) and add your OPENROUTER_API_KEY.

The prompt is suited for female characters. You may have to adapt it.
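
To give an idea of what such a captioning call looks like, here is a minimal sketch. It is not the attached "describe_image.py": the API_URL value, the model slug, the prompt text and the function names are my assumptions for illustration, based on OpenRouter accepting OpenAI-style chat requests with a base64 image. Only the standard library is used.

```python
import base64
import json
import os
import urllib.request
from pathlib import Path

# Assumptions for illustration; adapt both when using a local ollama instance.
API_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL_NAME = "qwen/qwen-2-vl-72b-instruct"

# Hypothetical prompt, not the one from the attached script.
CAPTION_PROMPT = (
    "Describe this photograph in one paragraph: the person (pose, age, hairstyle, "
    "expression, clothing), the style of the photo and the background."
)


def build_payload(image_bytes: bytes, mime: str = "image/jpeg") -> dict:
    """Build an OpenAI-style chat request with the image embedded as a data URL."""
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL_NAME,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": CAPTION_PROMPT},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


def caption_image(image_path: Path) -> str:
    """Send one image to the API and return the caption text."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(build_payload(image_path.read_bytes())).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {os.environ['OPENROUTER_API_KEY']}",
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"].strip()


def caption_folder(folder: Path) -> None:
    """Write a .txt caption next to every .jpg in the folder."""
    for image_path in sorted(folder.glob("*.jpg")):
        caption = caption_image(image_path)
        image_path.with_suffix(".txt").write_text(caption, encoding="utf-8")
```

Always read through the generated captions afterwards; the model can get details wrong.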

File structure for images and captions

Put all your images and txt files into:

/workspace/20241231-EllenPage/imageset/1_Ellen Page woman/

The "1_" prefix sets the number of repeats, i.e. how many times each image is used per epoch. Changing this is only really useful if you have multiple concepts in one Lora, or if you are using regularization images (which we won't).
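
Before training, it can help to verify the folder name and the image/caption pairs. The following is a rough sketch with made-up helper names; the list of image extensions is my assumption.

```python
import re
from pathlib import Path

# Assumed set of image extensions; adjust to your data.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".webp"}


def parse_repeats(folder_name: str) -> tuple[int, str]:
    """Split a folder name like '1_Ellen Page woman' into (repeats, concept text)."""
    match = re.fullmatch(r"(\d+)_(.+)", folder_name)
    if match is None:
        raise ValueError(f"folder name does not start with '<repeats>_': {folder_name!r}")
    return int(match.group(1)), match.group(2)


def missing_captions(concept_dir: Path) -> list[str]:
    """Return the names of images that have no .txt file with the same name."""
    return sorted(
        path.name
        for path in concept_dir.iterdir()
        if path.suffix.lower() in IMAGE_SUFFIXES
        and not path.with_suffix(".txt").is_file()
    )


if __name__ == "__main__":
    concept_dir = Path("/workspace/20241231-EllenPage/imageset/1_Ellen Page woman")
    repeats, concept = parse_repeats(concept_dir.name)
    print(f"repeats={repeats}, concept={concept!r}")
    print("images without caption:", missing_captions(concept_dir))
```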

Training

After using adamw8bit for the first few Loras, I settled on prodigy as it produces faster and more consistent results (at least subjectively).

Preparation

Install SD-Scripts. Check the git repository for installation instructions. For Flux training I use the sd3 branch. These are the commands I use when deploying. If you don't have a working environment yet, you will have to adapt them and follow the sd-scripts instructions.

cd /workspace

git clone https://github.com/kohya-ss/sd-scripts.git

cd /workspace/sd-scripts

python3.10 -m venv venv

. venv/bin/activate

git checkout sd3

export PIP_ROOT_USER_ACTION=ignore

python -m pip install --upgrade pip

pip install torch==2.5.1 torchvision==0.20.1 --index-url https://download.pytorch.org/whl/cu121

pip install --upgrade -r requirements.txt

pip install --upgrade xformers torch==2.5.1 torchvision==0.20.1 --index-url https://download.pytorch.org/whl/cu121

The training will use the accelerate command, so you need to configure it first. This is my config:

compute_environment: LOCAL_MACHINE

debug: false

distributed_type: 'NO'

downcast_bf16: 'no'

enable_cpu_affinity: true

gpu_ids: all

machine_rank: 0

main_training_function: main

mixed_precision: bf16

num_machines: 1

num_processes: 1

rdzv_backend: static

same_network: true

tpu_env: []

tpu_use_cluster: false

tpu_use_sudo: false

use_cpu: false

Start the training:

venv/bin/accelerate launch --dynamo_backend no --dynamo_mode default --mixed_precision bf16 --num_processes 1 --num_machines 1 --num_cpu_threads_per_process 2 /workspace/sd-scripts/flux_train_network.py \

--config_file "/workspace/20241231-EllenPage/config.toml" \

--output_dir "/workspace/20241231-EllenPage/output" \

--output_name "EllenPage-v1" \

--train_data_dir "/workspace/20241231-EllenPage/imageset" \

--sample_prompts "/workspace/20241231-EllenPage/prompt.txt"

Evaluate the results

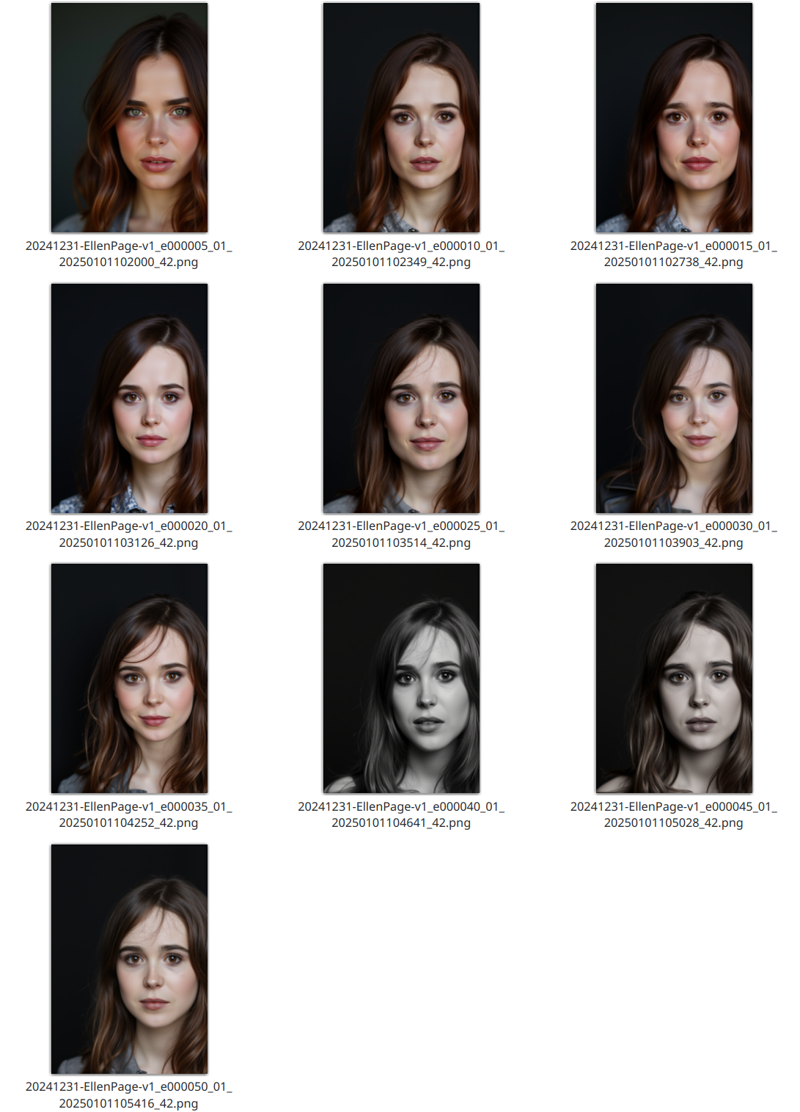

Training will generate samples in the "/workspace/20241231-EllenPage/output/samples" folder every 5 epochs. If you are satisfied with the result you can stop the training early. Good results start to show around epoch 30, but this varies with the size and variety of your set. Lots of similar images will get you a Lora with less flexibility in as few as 20 epochs, while large datasets with lots of variety may need 50 epochs. Check the samples and compare between the epochs. Also be aware that in general the samples are of lower quality than the images you will generate later on using proper parameters in ComfyUI or A1111.
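
To get a feeling for how long a run is, you can estimate the number of training steps from the numbers above. This is a back-of-the-envelope sketch: the function names are made up, and a batch size of 1 is my assumption (the real value comes from your config, and bucketing can shift the count slightly).

```python
import math


def total_steps(num_images: int, repeats: int, epochs: int, batch_size: int = 1) -> int:
    """Approximate step count: steps per epoch times the number of epochs."""
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs


def sample_epochs(epochs: int, every: int = 5) -> list[int]:
    """Epochs at which samples are generated when sampling every `every` epochs."""
    return list(range(every, epochs + 1, every))


print(total_steps(num_images=20, repeats=1, epochs=35))  # 700
print(sample_epochs(35))  # [5, 10, 15, 20, 25, 30, 35]
```

With 20 images, 1 repeat and 35 epochs that is about 700 steps, with seven sets of samples to compare along the way.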

These are samples from the training:

At epoch 5 (e000005) the likeness is nonexistent. At epoch 20 it gets good, and 30 or 35 seems to be the sweet spot. If you train for too long, you lose flexibility (for example, you will not be able to make more complex changes such as creating drawings or changing hairstyles).

Lora checkpoints will be saved under "/workspace/20241231-EllenPage/output/"