To train Hunyuan Video and Flux LoRAs, you can check out ostris/ai-toolkit and tdrussell/diffusion-pipe.

They are great projects for fine-tuning vision generation models.

For convenience, I’ve created Jupyter notebooks that you can run on Google Colab:

Supported Categories

Hunyuan Video (LoRA)

Flux.1-dev (LoRA)

SDXL (LoRA)

LTX Video (LoRA)

Wan Video T2V (LoRA)

Colab gives you a free GPU runtime with up to 16 GB of VRAM (a T4 GPU), and its UI is very user-friendly, so even people who aren't familiar with coding can use it.

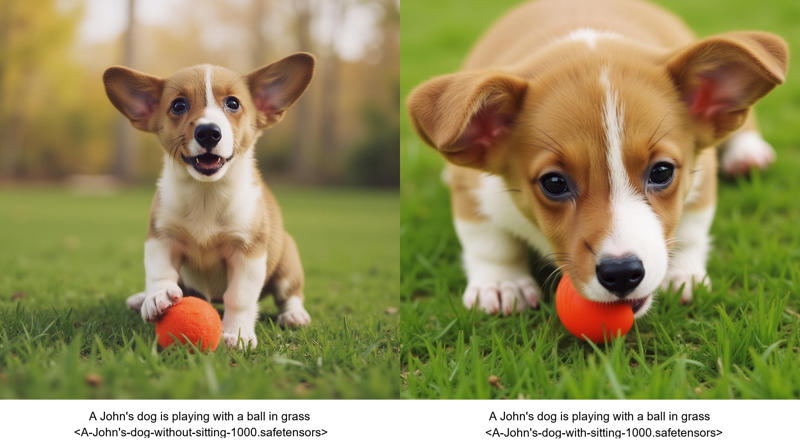

With the finetuning-notebooks linked above, once your dataset is ready in Google Drive, running the cells in order should be enough to train your LoRA. If something goes wrong or doesn't work, please raise an issue on GitHub.
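Reading a dataset from Google Drive requires mounting Drive in the Colab runtime first. The notebooks may already handle this; if you want to inspect your dataset folder yourself, here is a minimal sketch using Colab's `google.colab.drive` module. The helper name `mount_dataset_dir` and the folder name `lora_dataset` are hypothetical, so substitute whatever path the notebook expects.

```python
from pathlib import Path


def mount_dataset_dir(subdir: str, mount_point: str = "/content/drive") -> Path:
    """Mount Google Drive in Colab and return the path to a dataset folder.

    `subdir` is a folder relative to "My Drive", e.g. the hypothetical
    "lora_dataset" used in this example.
    """
    try:
        # google.colab is only importable inside a Colab runtime.
        from google.colab import drive
    except ImportError as exc:
        raise RuntimeError("mount_dataset_dir only works inside Google Colab") from exc

    drive.mount(mount_point)  # asks you to authorize Drive access on first use
    dataset_dir = Path(mount_point) / "MyDrive" / subdir
    if not dataset_dir.is_dir():
        raise FileNotFoundError(f"Dataset folder not found: {dataset_dir}")
    return dataset_dir
```

In a Colab cell, `mount_dataset_dir("lora_dataset")` should return `/content/drive/MyDrive/lora_dataset` once you authorize access. Outside Colab it raises a `RuntimeError` with a clear message instead of a confusing import error.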

If you're willing to lower the resolution, you might be able to train LoRA models on the free T4 runtime. However, Flux and Hunyuan Video are both heavy base models, so I'd recommend paying for a subscription that gives you at least 24 GB of VRAM.

When I trained a Flux LoRA model with the default parameters from ostris/ai-toolkit, peak VRAM usage reached 32 GB, so you'll need the A100 GPU runtime for that configuration.
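To see which GPU your Colab runtime was assigned and whether its memory covers the roughly 32 GB peak from my Flux run, you can query `nvidia-smi`. This is a small sketch: the helper names are my own, the 32 GB threshold is just the figure observed above, and GB and GiB are treated as interchangeable since this is only a rough check.

```python
import shutil
import subprocess


def parse_gpu_line(line: str) -> tuple[str, float]:
    """Parse one line of `nvidia-smi --query-gpu=name,memory.total
    --format=csv,noheader,nounits` output, e.g. "Tesla T4, 15360".

    Returns (gpu_name, total_memory_in_GiB); nvidia-smi reports MiB.
    """
    name, mib = line.rsplit(",", 1)
    return name.strip(), int(mib.strip()) / 1024


def fits_in_vram(total_gib: float, peak_gib: float) -> bool:
    """True if the GPU's total memory is at least the expected peak usage."""
    return total_gib >= peak_gib


def query_gpus() -> list[tuple[str, float]]:
    """Return (name, GiB) for each visible NVIDIA GPU, or [] if none."""
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [parse_gpu_line(ln) for ln in out.splitlines() if ln.strip()]


if __name__ == "__main__":
    FLUX_PEAK_GIB = 32  # peak observed with ai-toolkit defaults in my run
    for name, gib in query_gpus():
        print(f"{name}: {gib:.1f} GiB, enough for Flux defaults: "
              f"{fits_in_vram(gib, FLUX_PEAK_GIB)}")
```

Parsing is kept separate from the subprocess call so the logic can be checked without a GPU.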

Preparing a Dataset with Captions

I'll just cover some well-known considerations for LoRA training.

Generally, unless you're aiming for a particular style or overall aesthetic, your dataset will consist of images along with their corresponding captions in text files.
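Before training, it's worth checking that every image actually has a caption. A minimal sketch, assuming the common convention of one `.txt` file per image with the same base name (e.g. `dog1.jpg` and `dog1.txt`); check your trainer's documentation for its exact expectations. The function name and the extension list are my own.

```python
from pathlib import Path

# Image extensions to look for; adjust to whatever your trainer accepts.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".webp"}


def check_pairs(dataset_dir: str) -> tuple[list[str], list[str]]:
    """Return (images_without_caption, captions_without_image) by file stem.

    Assumes each caption is a .txt file with the same base name as its
    image, e.g. dog1.jpg + dog1.txt. Extensions are compared case-insensitively.
    """
    files = [p for p in Path(dataset_dir).iterdir() if p.is_file()]
    image_stems = {p.stem for p in files if p.suffix.lower() in IMAGE_EXTS}
    caption_stems = {p.stem for p in files if p.suffix.lower() == ".txt"}
    return sorted(image_stems - caption_stems), sorted(caption_stems - image_stems)
```

For example, `check_pairs("/content/drive/MyDrive/lora_dataset")` (a hypothetical path) returns two empty lists when every image and caption is paired.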

There are a few important things to keep in mind when making a dataset with captions. Let's take a look at them using some examples.

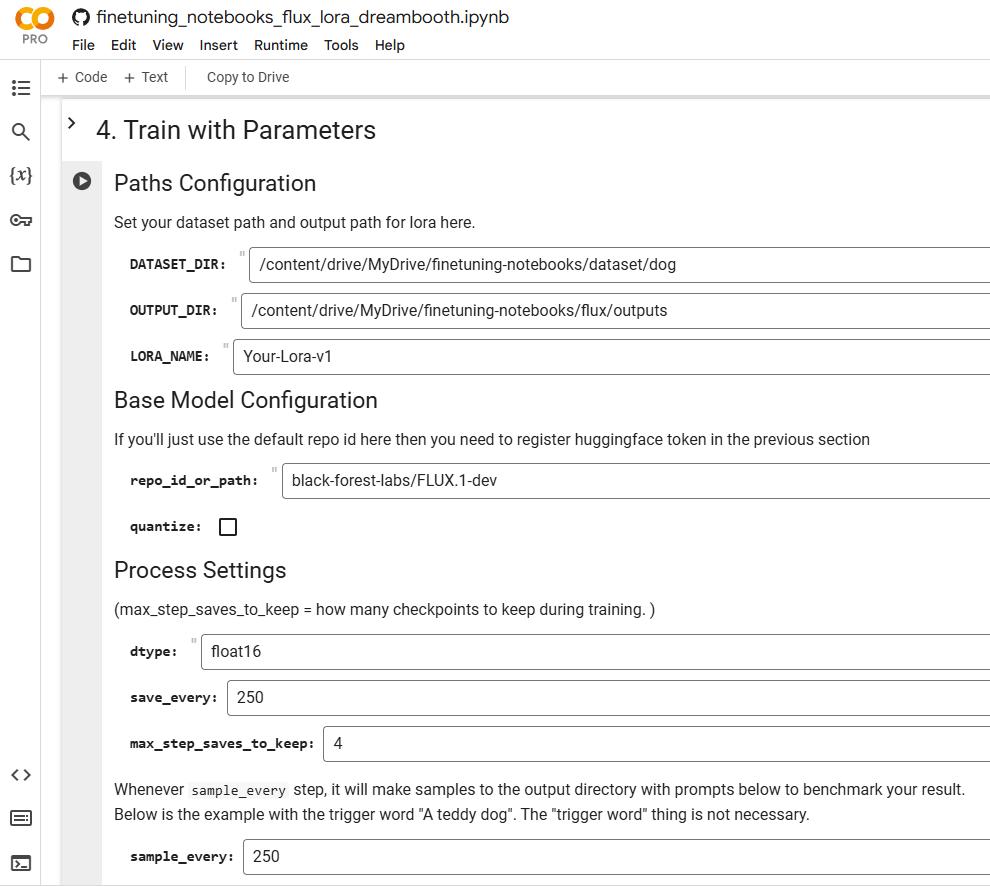

Here’s an example using the diffusers/dog-example dataset:

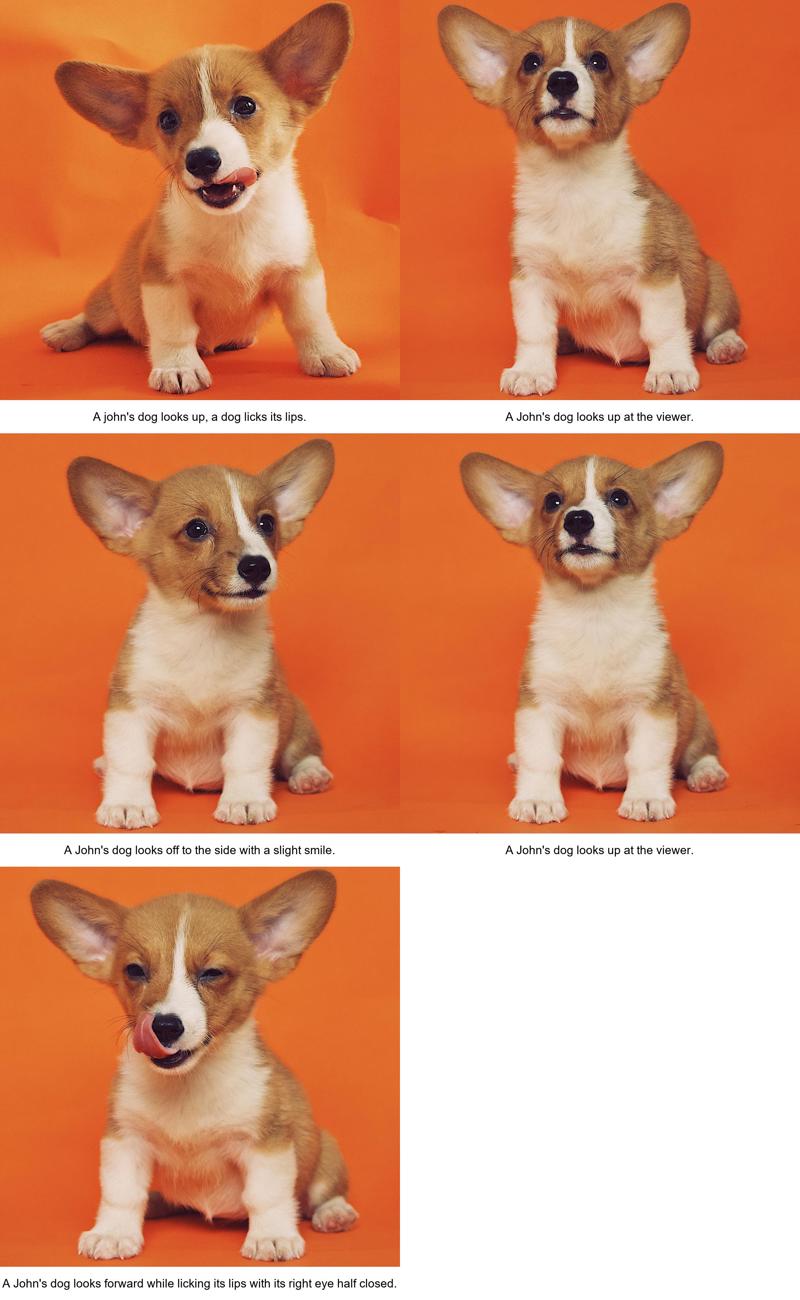

The dataset consists of 5 images of a puppy. The common feature among these images is that the puppy is sitting, rather than running or playing.

What if I want to train the model on the puppy itself, not on the concept of a “sitting puppy”? What should I do?

A key consideration when captioning images in a dataset is to emphasize the features you want the model to learn as separate concepts. If there’s a specific feature you want to isolate, describe it explicitly in the captions.

For example, say you want to train a LoRA for a character who wears a hairpin (this would actually be a clearer example than the puppy). If you want the LoRA model to generate images of the character both with and without the hairpin, include captions that specifically mention the hairpin. The same principle applies to other features, such as clothing, hairstyle, and eye color.

Since I don’t want the LoRA to overfit to the concept of “sitting,” I should explicitly caption the puppy’s seated pose.
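A quick way to check this is to list which captions mention each feature you care about. Since the puppy sits in all five images, “sitting” should then appear in all five captions; for the hairpin character, “hairpin” should appear in exactly the captions of images where the hairpin is visible. A minimal sketch, assuming one `.txt` caption per image; the function names are hypothetical.

```python
import re
from pathlib import Path


def load_captions(dataset_dir: str) -> dict[str, str]:
    """Read every .txt caption in a folder, keyed by file stem."""
    return {p.stem: p.read_text(encoding="utf-8").strip()
            for p in Path(dataset_dir).glob("*.txt")}


def keyword_coverage(captions: dict[str, str],
                     keywords: list[str]) -> dict[str, list[str]]:
    """Map each keyword to the sorted names of captions that mention it.

    Matching is case-insensitive and on whole words, so "sitting"
    does not match "babysitting".
    """
    coverage = {}
    for kw in keywords:
        pattern = re.compile(rf"\b{re.escape(kw)}\b", re.IGNORECASE)
        coverage[kw] = sorted(name for name, text in captions.items()
                              if pattern.search(text))
    return coverage
```

Comparing each keyword's list against the images where the feature is actually visible shows which captions still need editing.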

Let's prepare two different datasets, one with "sitting" captions and one without, and then compare the results after training the LoRA models.

1. Dataset without "sitting": dog-example-without-sitting

2. Dataset with "sitting": dog-example-with-sitting

Both datasets use the same images but different captions: one includes "sitting" and the other doesn't. I’ve temporarily named the puppy “A John’s dog” in the captions. Since this phrase appears repeatedly in the captions, it becomes the “trigger word” for the LoRA model.
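If you already have the captions that mention “sitting,” one way to produce the other dataset is to copy the images and strip that word from each caption. This is only a sketch of the idea, not necessarily how the two datasets above were made; the function name and folder layout are hypothetical. Deleting a word can leave clumsy captions, so read through the results afterward. The sketch also refuses to write a caption that has lost the trigger word.

```python
import re
import shutil
from pathlib import Path


def make_caption_variant(src_dir: str, dst_dir: str,
                         drop_word: str, trigger: str) -> None:
    """Copy a captioned dataset, removing `drop_word` from every caption.

    Images are copied unchanged, so both datasets share the same pictures.
    Raises ValueError if an edited caption no longer contains `trigger`.
    """
    src, dst = Path(src_dir), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    word = re.compile(rf"\b{re.escape(drop_word)}\b", re.IGNORECASE)
    for path in src.iterdir():
        if not path.is_file():
            continue
        if path.suffix.lower() == ".txt":
            text = word.sub("", path.read_text(encoding="utf-8"))
            # Collapse leftover whitespace and drop spaces before , and .
            text = re.sub(r" ([,.])", r"\1", " ".join(text.split()))
            if trigger not in text:
                raise ValueError(f"{path.name}: trigger word missing after edit")
            (dst / path.name).write_text(text + "\n", encoding="utf-8")
        else:
            shutil.copy2(path, dst / path.name)
```

For example, `make_caption_variant("dog-example-with-sitting", "dog-example-without-sitting", "sitting", "A John's dog")` would build the second dataset from local copies of the first, assuming the captions spell the trigger word exactly as passed.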

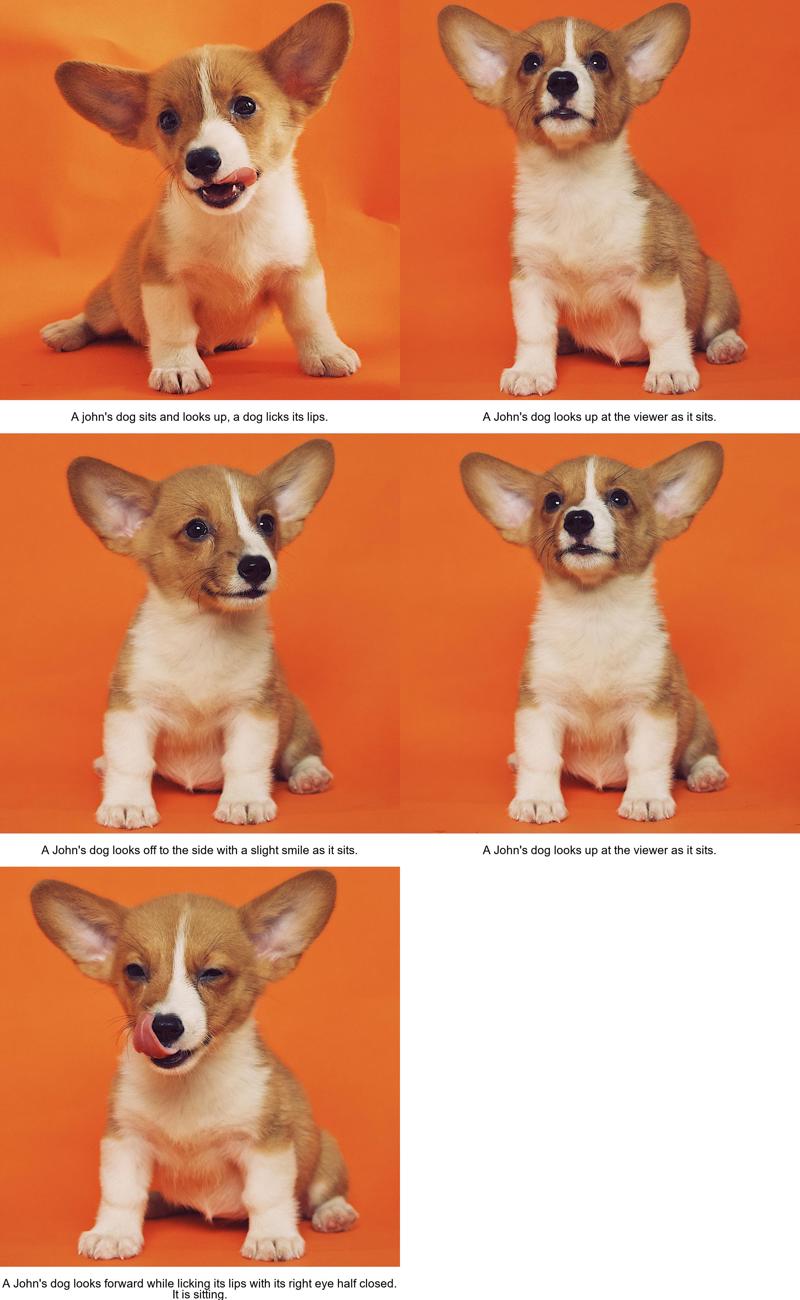

After training each LoRA model for 1000 steps, I generated example images with both models using the prompt “A John’s dog is playing with a ball in the grass.”

The image on the left was generated with the LoRA model trained without the “sitting” captions, while the image on the right used the LoRA model trained with them.

To me, the right image appears less overfitted to the concept of “sitting.”

The seed used for both images was 77. You can regenerate the results or conduct further tests using these LoRA models from here:

A better example would use a dataset of a character wearing a hairpin, a hat, or some other distinctive clothing, if I could find one.

The repository's LoRA training notebooks include ones for Hunyuan Video and Flux:

Once you've prepared your dataset in Google Drive, simply running the cells in order should work. If something doesn't work as expected, please raise an issue on GitHub.

Thank you for reading. 😊