Everyone can get more from their ComfyUI setup

Hello CivitAI

I am the owner of the ComfyUI-MultiGPU custom_node, but don’t let the name fool you! There is something in the MultiGPU toolbox for everyone. I am writing this guide in the hope that you will check it out and see that it might have the power to transform how you use Comfy.

What this article isn't.

This article isn't a detailed guide to quantization or how Torch works with your devices when using Comfy. It isn't a step-by-step guide on how to get everything working with these tools, either. It also isn't a beginner's tutorial. If you don't know how to install Comfy, or are reluctant to look under the hood, I would look elsewhere. Using these three custom_nodes in this way is new, so this is not a stable, well-trodden path. The latest evolution of these nodes, UNet layer splitting, will likely get easier to use over time, to the point where it might be automatic based on your existing system specs. For now, however, it will take some work. So, finally, this article isn't for those unwilling to get their hands dirty.

OK, so what is this guide?

This guide is meant to give everyone at Civit.ai a quick feel for what combining ComfyUI, ComfyUI-GGUF and ComfyUI-MultiGPU can do. I am not going to dive into a ton of details, as this is more of an overview to get you thinking about making use of the parts of your system that sit idle during ComfyUI generations. If you are willing to try some of the capabilities I discuss here, it might open up your system to do more than you imagined. (For those who are more technically inclined or want to see the trail of breadcrumbs, the Reddit article I posted on it is probably a good stop-over right now.)

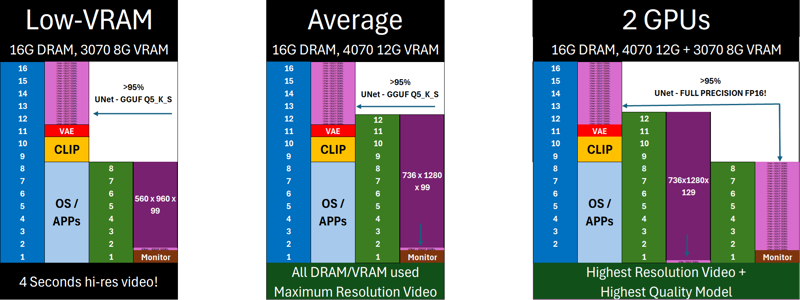

Before we get started, what I will be showing you doesn’t line up to any exact models. The two closest would be Flux Dev and Hunyuan Video, but the file sizes and latent size are not going to be 100% accurate – the three examples we will be following are for illustrative purposes and the max case is probably a little closer to a full 24G system – but I assure you they are close enough to reality that if you see your hardware situation somewhere in or in-between the three examples, you should expect to get results in the ballpark I write about.

Specifically, this article is for owners of RTX 3xxx- and 4xxx-series cards that have all the cores they need to do the job - but not always the VRAM.

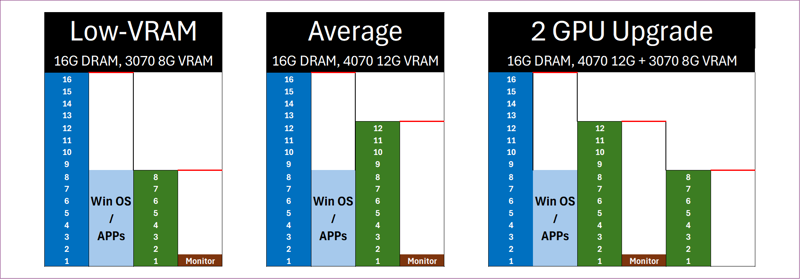

Let’s start out by describing three variations of what I hope look like real-world systems to many of you. Since nobody I know has a 5090, we'll stay far away from that corner. Instead:

Three modest systems

3070 8G VRAM system

4070 12G VRAM system, and

4070/3070 12+8 combo system as a likely upgrade scenario.

I assume a non-dedicated Win 11 OS

Everyone has a monitor attached

Everybody is on the low end of system memory with 16G of DRAM, and I am assuming roughly half of that is already full with other things. If you have more than 8GB of main memory available, it just means you can apply what is in this article even more energetically.

That defines our three scenarios, different enough that hopefully one hits home for your own situation.

Time to load a model and start generating! First off, we're going to load all the components needed for the simplest of text-to-image workflows. This typically involves a text encoder (called a CLIP), the model weights (typically called the UNet), and the VAE, which is a generative model that learns to encode and decode data into and out of the latent space the model operates in. I will be devoting much of this article to maximizing latent space because most of the time, even during compute, the layers of the UNet on your system aren't doing much, especially if they are sitting there in a compressed format like GGUF, where they are more like a JPEG in a file system waiting to be decoded into a bitmap than they are ready-to-use tensors.

The problem: Large models like Flux and Hunyuan Video are huge, and even efficient versions of them take up large amounts of space. Solving this requires a three-part solution:

Part I: Use quantization techniques on these huge models so we can fit as much or all of the model into VRAM as we can - at a price

In our first part we have three users attempting similar, if not identical, model loads. At this point, I am going to introduce the first of the three tools we'll be using by the end of this: ComfyUI-GGUF. Succinctly stated: ComfyUI-GGUF is a custom_node that allows the use of GGUF-quantized models during inference. GGUFs are the result of a method of reducing model size at the expense of fidelity, using the same quantizing methods as LLMs, which will become important later on in the story.

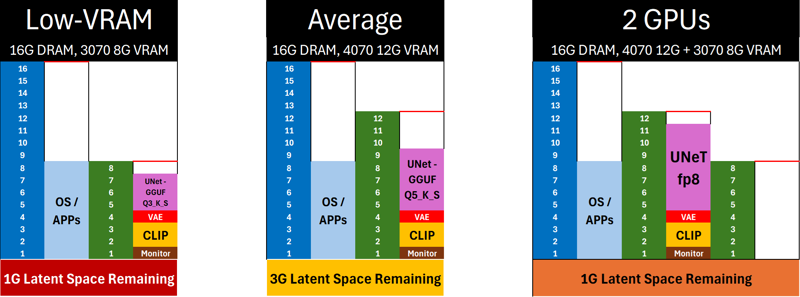

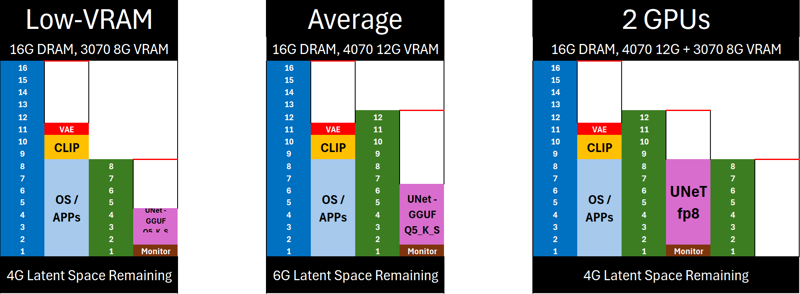

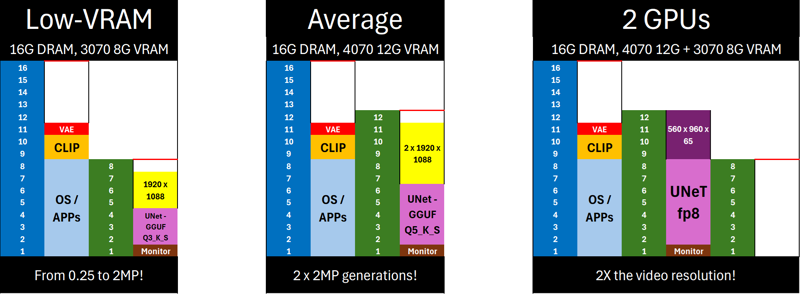

Our "Low-VRAM" friend already understands that at 8G, they simply can't load a modern model all the way and have it all fit in VRAM. Both "Low-VRAM" and "Average" have decided to go with GGUF quantizations of differing sizes, and "2 GPU" has decided to go with a more conventional 8-bit quantization, "fp8". Suffice it to say, in general higher numbers are better here in terms of visual quality and fidelity, but, as you can see, the larger the number, the larger the model is in memory as well. What it shows here, though, is that all three of our systems are trying, at least, to hit some "optimal" point between quality and speed for their use cases. What is concerning is just how little "latent space" all three of them have and what that means for running with these models. (As an aside, with some admitted bias: while 2 GPU may think they are loading a higher quality model, GGUF-quantized models are generally superior at the same file size at the expense of a 15% speed penalty. That Q5_K_S would likely be just as good with a reduced memory footprint.)
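To make the "larger number, larger model" point concrete, here is a rough sizing sketch. It is not how ComfyUI-GGUF measures anything. It only multiplies a parameter count by the bits each format stores per weight. The 12-billion parameter count is an assumption for illustration, and real GGUF files mix block types and keep some tensors at higher precision, so actual file sizes will differ.

```python
# Bits stored per weight, including per-block scale overhead.
# fp16/fp8 are plain formats; the GGUF figures follow from the ggml block layouts:
#   Q8_0: 32 weights x 8 bits + one 16-bit scale = 272 bits / 32  = 8.5
#   Q5_K: 176 bytes per 256-weight super-block   = 1408 bits / 256 = 5.5
#   Q4_0: 32 weights x 4 bits + one 16-bit scale = 144 bits / 32  = 4.5
BITS_PER_WEIGHT = {"fp16": 16.0, "fp8": 8.0, "Q8_0": 8.5, "Q5_K": 5.5, "Q4_0": 4.5}


def estimated_size_gb(num_params, fmt):
    """Rough weight storage in GB (1 GB = 1e9 bytes) for a given format."""
    return num_params * BITS_PER_WEIGHT[fmt] / 8 / 1e9


# ASSUMPTION: a hypothetical 12-billion-parameter model, for illustration only.
params = 12e9
for fmt in BITS_PER_WEIGHT:
    print(f"{fmt:>5}: {estimated_size_gb(params, fmt):5.2f} GB")
```

Every GB shaved off the weights is a GB that can go toward latent space instead, which is the trade the three systems above are making.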

Latent space = the size of the room you can build stuff in

Comfy is an amazing tool, and part of that tool is memory management in the background (especially with --lowvram) to shift parts of models on and off your compute card.

A brief look at everyone's system shows we are most certainly already doing some internal memory management in both the Low-VRAM and 2 GPU cases, as the images we want to make and the model we want to make them with simply don't fit in the same VRAM at the same time. They are all making cool things: 0.25MP images, 2MP images, and a 3-second video clip, respectively. So, awesome, right?

So much for the normal case. Generating stuff with these models is cool, but memory remains tight and other parts of the system are not being used at all.

Part II: Use CPU/DRAM to offload CLIP and VAE, as they are not used in primary inference.

Yes, making things with ComfyUI is awesome. MultiGPU lets you put more of your system to work and be even more awesome! This second, powerful tool includes nodes for putting things in main memory instead of VRAM.

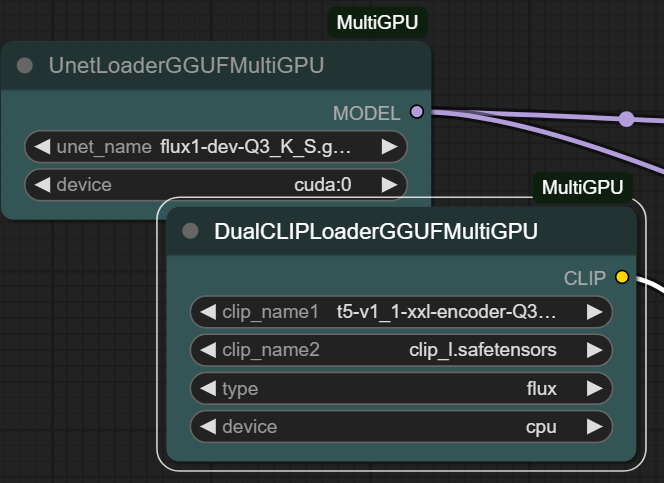

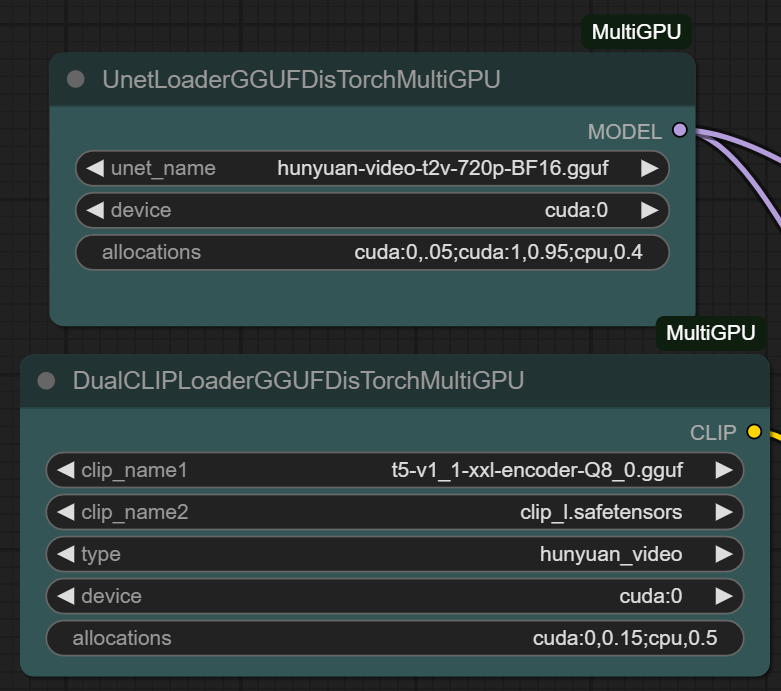

What used to have only one input (unet_name) now has two, the second being "device". What used to have three inputs now has four. The nodes are otherwise identical in functionality to their non-MultiGPU-aware parents. (Once you start using MultiGPU, standard loaders can get confused about which device they are dealing with. One MultiGPU node in your workflow typically means using MultiGPU nodes for all three or more of your loaders.)

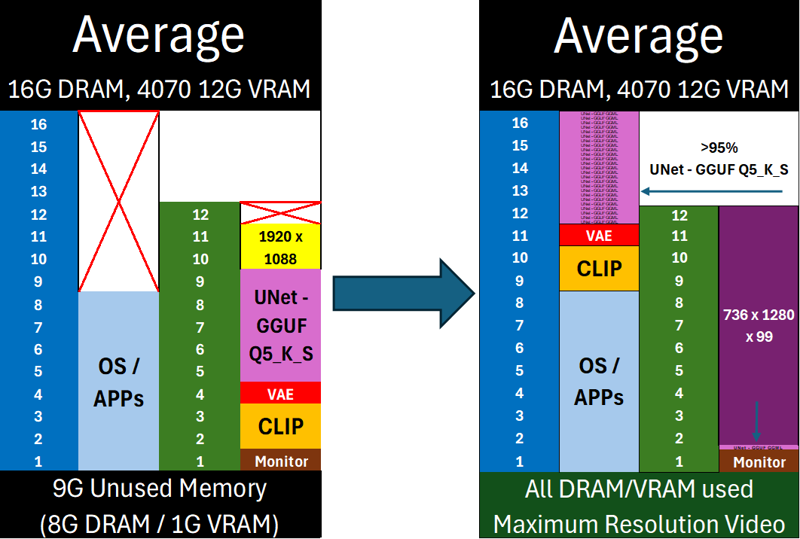

Here is the exact same model load, except this time we use those nodes to tell the system right away to move CLIP and VAE to main memory, instantly freeing up 3G on each of the main compute cards.

Is there a tradeoff? There sure is. DRAM is slower than VRAM. The CPU isn't as good at the same tasks as a GPU. That said, the quality of the output from these systems will be unchanged versus running on the compute card we are saving for our main UNet generation.
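The bookkeeping behind that 3G can be sketched in a few lines. The component sizes and the 1G reserve below are made-up illustrative numbers, not readings from the charts above. The only figure taken from this article is that CLIP and VAE together come to about 3G.

```python
def latent_space_gb(vram_gb, reserved_gb, on_card_gb):
    """VRAM left for latents after the OS/display reserve and everything loaded on the card."""
    return vram_gb - reserved_gb - sum(on_card_gb.values())


# ASSUMPTION: illustrative sizes only; CLIP + VAE are chosen to total 3G.
components = {"unet": 6.5, "clip": 2.7, "vae": 0.3}

before = latent_space_gb(12.0, 1.0, components)
# Offload CLIP and VAE to system memory: they no longer count against the card.
after = latent_space_gb(12.0, 1.0, {"unet": components["unet"]})

print(f"latent space before: {before:.1f} GB, after: {after:.1f} GB")
```

Nothing about the UNet changes here. The extra room comes purely from moving the two components that sit idle during the main sampling loop.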

More latent space = more simultaneous images, or longer videos guaranteed, up to the limitations of your card's architecture. Putting things on CPU might be worth it for you because it means you can do more:

From 0.25 to a 2MP image for Low-VRAM - what a jump!

From 1MP to two 2MP images at once, twice the video generation!

From 368x640x65 (15MP load) to 560x960x65 (35MP load)
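The "MP load" figures for the video case are just width x height x frames. A quick check of that arithmetic, with a hypothetical helper name:

```python
def pixel_load_mp(width, height, frames=1):
    """Total pixels across all frames, in megapixels (millions of pixels)."""
    return width * height * frames / 1e6


small = pixel_load_mp(368, 640, 65)   # the "15MP load" clip
large = pixel_load_mp(560, 960, 65)   # the "35MP load" clip

print(f"{small:.1f} MP -> {large:.1f} MP ({large / small:.2f}x)")
```

This counts output pixels only. The memory the latents actually occupy depends on the model's VAE compression, so treat it as a relative measure of how much more work fits, not an absolute VRAM figure.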

Wow, maybe there is something to this.

Part III: Use the architecture of GGUF files and the DisTorch method in MultiGPU to finish the job

I've been an enthusiast for a long time. Almost since I started working with ComfyUI, I have been looking to maximize the use of the hardware I had. Time and time again, though, even quantized UNets were almost always just too large to fit in my VRAM. I could put the entire model on a large DRAM pool on the "CPU", but that would mean using the CPU for inference. Too slow!

What if there was a way to take all the GGUF quantized layers, and treat them all like individual model files? I could then move them wherever I want! I could slot them in wherever I have free space and then just have the main compute machine - not the machine the layer is stored on - fetch it and use it when it needs it. Can't be that easy, can it?
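As a thought experiment, that idea can be sketched in plain Python. This is not the MultiGPU or DisTorch code. The class, function names, layer sizes and budgets are all hypothetical, and the greedy first-fit rule is my stand-in for whatever placement logic a real implementation uses.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    name: str
    budget_mb: int                      # how much of this device we allow for layer storage
    layers: list = field(default_factory=list)
    used_mb: int = 0


def place_layers(layer_sizes_mb, devices):
    """Greedy first-fit: store each layer on the first device with room left."""
    placement = {}
    for layer_name, size in layer_sizes_mb.items():
        for dev in devices:
            if dev.used_mb + size <= dev.budget_mb:
                dev.layers.append(layer_name)
                dev.used_mb += size
                placement[layer_name] = dev.name
                break
        else:
            raise MemoryError(f"no device has room for {layer_name}")
    return placement


def count_fetches(layer_order, placement, compute_device="cuda:0"):
    """Walk the layers in order; anything stored elsewhere must be fetched before use."""
    fetches = 0
    for layer_name in layer_order:
        if placement[layer_name] != compute_device:
            fetches += 1  # stand-in for copying the layer's weights to the compute device
        # ... the layer's math would always run on compute_device here ...
    return fetches


# ASSUMPTION: ten hypothetical 500 MB layers and made-up storage budgets.
layers = {f"block_{i}": 500 for i in range(10)}
devices = [Device("cuda:0", 600), Device("cuda:1", 2000), Device("cpu", 4000)]

placement = place_layers(layers, devices)
print(placement)
print("fetches per step:", count_fetches(list(layers), placement))
```

The point of the sketch is the split of roles: storage can be anywhere with free space, while compute always happens on the one card that is good at it.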

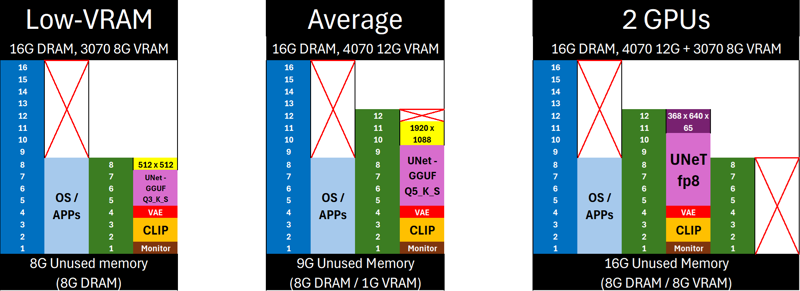

That is exactly what DisTorch does:

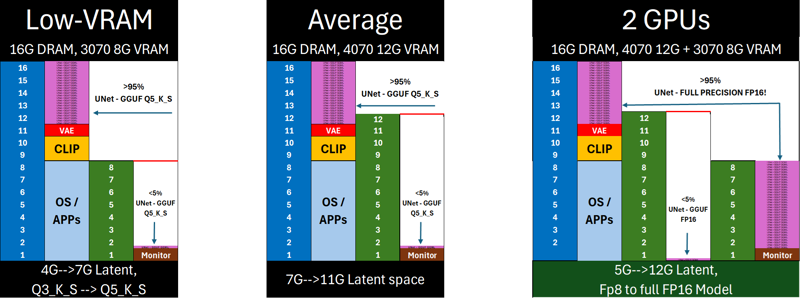

Gone is anything other than a tiny <300M stub of the now-upgraded Q5_K_S on Low-VRAM

Average, a ONE-GPU system, has nearly the full latent space of its 4070 for compute

Most stunning is the 2 GPU system. A two-generations-ago addition to a one-generation-ago middle-of-the-road video card is TRANSFORMED into a FULL-FP16 VIDEO MAKING MACHINE!

Briefly translated, "cuda:0,.05;cuda:1,0.95;cpu,0.4" means:

cuda:0,.05 - allocate 5% of cuda:0 for this model's layers (12G x 0.05 = 0.6G, so under 1G)

cuda:1,0.95 - allocate 95% of cuda:1 for model GGML layer storage (8G x 0.95 = 7.6G)

cpu,0.4 - allocate 40% of CPU memory for model GGML layer storage (16G x 0.4 = 6.4G)
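If you want to sanity-check an allocation string before trying it, the arithmetic is easy to script. This is a minimal sketch that only mirrors the translation above. It is not the parser the node itself uses, the function names are hypothetical, and the capacities are the 12G / 8G / 16G figures assumed for the 2 GPU system in this article.

```python
def parse_allocation(spec):
    """Turn 'cuda:0,.05;cuda:1,0.95;cpu,0.4' into {'cuda:0': 0.05, ...}."""
    shares = {}
    for entry in spec.split(";"):
        device, fraction = entry.rsplit(",", 1)
        shares[device.strip()] = float(fraction)
    return shares


def storage_gb(shares, capacity_gb):
    """Storage each device contributes: its fraction times its total memory."""
    return {dev: round(frac * capacity_gb[dev], 2) for dev, frac in shares.items()}


# ASSUMPTION: capacities taken from this article's example 2 GPU system.
capacity = {"cuda:0": 12, "cuda:1": 8, "cpu": 16}

shares = parse_allocation("cuda:0,.05;cuda:1,0.95;cpu,0.4")
per_device = storage_gb(shares, capacity)

print(per_device)
print("total layer storage (GB):", round(sum(per_device.values()), 2))
```

Adding the three shares together gives the total pool available for the model's layers, which is the number to compare against the size of the model file you want to load.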

Just look at what we can fill up our systems with now:

In all three cases, we've succeeded in turning our main compute card from a place of mostly dead model storage to one where we are squeezing every 0.1 it/sec and we've gone from meager image generation to squeezing every pixel of resolution or frame of video from each system.

Reality Check - is this for real? (Yes!)

Especially for large-compute loads.

To reiterate: Nothing is for free. Transferring layers between DRAM and VRAM isn't free. DRAM is slower than VRAM. But! When you have DDR4+ DRAM or another device on the PCIe bus with idle VRAM and are already spending a large amount of time doing inference, the GGML layer-transfer is <1% of the generation time = almost, almost, free with the added benefit of a fully-unleashed compute device.
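Why the cost shrinks as the compute load grows can be shown with a back-of-the-envelope model. Everything here is a simplifying assumption: the 16 GB/s bandwidth and the two step times are hypothetical, all offloaded layers are fetched once per step, and transfers do not overlap with compute. Only the 6.4G of CPU-held layers comes from the example above.

```python
def transfer_overhead(transfer_gb, bandwidth_gb_per_s, compute_s_per_step):
    """Fraction of each step spent moving layers, assuming no overlap with compute."""
    transfer_s = transfer_gb / bandwidth_gb_per_s
    return transfer_s / (transfer_s + compute_s_per_step)


# ASSUMPTION: hypothetical bandwidth and step times, for illustration only.
light = transfer_overhead(6.4, 16.0, 2.0)    # small image, fast steps
heavy = transfer_overhead(6.4, 16.0, 60.0)   # large video latent, slow steps

print(f"light load: {light:.1%} of each step is transfer")
print(f"heavy load: {heavy:.1%} of each step is transfer")
```

The transfer time is the same in both cases. What changes is how much compute it gets spread across, which is why the heavy loads are where this approach pays off most.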

Ok, this got me hooked. What next?

Check out my Reddit post for some more of the technical details and for a few people posting their own (confirmatory) results.

Go try it yourself. I've got 16 examples that come as part of the custom_node. The ones with the new functionality have "distorch" in their name.

It isn't just for UNet. LLM-based CLIPs like T5 can be layer-split, too!

Share your results! I'd love to hear of your own experiences with the new nodes.