Hello everyone, my name is Taruma Sakti. I am the creator of the AI Music Video titled Cliff's Edge: Shattered - A Poignant Music Video. Cliff's Edge: Shattered is my debut AI Film, and I was quite surprised to find it ranked as a top 50 finalist in Project Odyssey Season 2, out of 1200 music video submissions in the competition.

Cliff's Edge: Shattered is a haunting exploration of the inner battles we often fight alone, battles that nonetheless echo the struggles of many. This cinematic music video uses evocative imagery to portray a journey through despair, with a recurring motif hinting at a pivotal point of no return. Will there be a reason to hold on, or will the darkness ultimately prevail? Though the paths may vary, the search for connection remains universal.

Watch "Cliff's Edge: Shattered - A Poignant Music Video" here: https://civitai.com/images/50911144

Skip ahead to the Workflow Overview if you're only interested in the workflow.

Introduction

Before we dive in, just a quick note: I'm not from the creative industry. My actual background is in Civil Engineering. In the past, during my free time in college, I often expressed myself through composing instrumental music. But over time, life got busy and the creative spark to express myself through music faded. Then, around a year ago, the age of AI arrived and opened a new door for my creativity. I began exploring various AI tools, initially to express myself through music and songs using Suno AI.

After creating the song "Cliff's Edge," a friend told me about Project Odyssey Season 2. At first, I was hesitant to participate in this event. Create an AI Film!? I had no experience at all! But since there was an opportunity to try out trial AI tools from the sponsors, why not give it a shot? Besides, there's no harm in trying as long as it doesn't cost any money 😅. (Also, I already pay for Adobe Creative Cloud, so I might as well use it. 🤣)

Moreover, feeling somewhat "stuck" in life and with 2025 approaching, I followed the principle of trying new things and stepping out of my comfort zone, even though it felt difficult. So, with a bit of "self-persuasion," I decided to dive in and try to make a music video for the song "Cliff's Edge." And surprisingly, despite all the limitations and confusion, I actually enjoyed the process.

I must admit, I am not yet fully prepared to openly and deeply discuss the reasons behind the song and music video. However, what is certain is that "Cliff's Edge: Shattered" is a form of honest expression about what I have imagined and felt.

Image Prompt [Mystic 2.5 Flexible]: A medium shot shows a solitary figure standing still in interior of his apartment. The figure is a middle-aged burly man with graying, medium wavy hair, wearing a dark blue shirt. He is looking off-screen towards the right, his expression a mixture of sadness and uncertainty. The setting is realistic, with a dark, desaturated color palette. The camera is at eye-level.

Why create "The Making of Cliff's Edge: Shattered"?

Simple: to inspire you and myself. Especially for those of you who may be hesitant to try AI Filmmaking or are confused about where to start. I've been in that position, and I want to share my journey. Hopefully, this series encourages more people to express themselves through AI Film.

I started this series because there are so many things I want to share, from the initial concept to the final product of "Cliff's Edge: Shattered." This journey was not as smooth as I imagined. There were moments of self-doubt, technical limitations, and confusion during the process of creating my first AI Film. I want to discuss each phase in depth, particularly to provide insight into how I approached prompting and used AI assistance (Gemini Experimental 1206, now Gemini 2.0 Pro Experimental) to achieve the visual results you see now.

First Article Focus: Workflow Overview

In this first article, my focus is to give a big-picture view of the workflow for making "Cliff's Edge: Shattered". Hopefully, this overview can give you inspiration and at least a starting point for creating and expressing yourself through AI Films. As an additional resource, I have also provided behind-the-scenes material in the form of a GitHub repository, where you can see a list of the image and video prompts that I used in the final product.

Visit Cliff's Edge repository on GitHub: https://github.com/taruma/cliffsedge

My main goal in creating this article and series of articles (of course, if the response is positive and there is further demand) is to inspire people to try and start their own adventure in the world of AI Film. I will also use this article to remind myself of how far I have been able to express myself in this new medium.

It's important to remember to experiment and adapt my workflow and prompts to your own style and vision. One thing that might be surprising is that the workflow structure I will discuss was actually compiled AFTER completing the AI Music Video "Cliff's Edge: Shattered". During the initial creation process, I relied more on intuition and spontaneous exploration.

Image Prompt [Mystic 2.5 Flexible]: A wide, eye-level shot of burly man sitting on a weathered wooden bench near the cliff's edge in the dream. He is a man with graying, wavy hair, wearing a dark. he has a more peaceful expression. He's wearing simple, comfortable clothing. He's sitting calmly, gazing out at the ocean with a faint smile on his face. The background is the serene cliff edge, the calm ocean, and the bright sky. The overall mood is one of peace, contentment, and a moment of respite.

Evolving "Cliff's Edge"

After committing to Project Odyssey Season 2, the next question was: what kind of video did I want to create? The immediate answer was a music video. Why? Because I've always leaned towards storytelling through music and songs. It feels more natural, and a bit more within my comfort zone, to create something music-related.

Actually, besides "Cliff's Edge," I have a few other songs in my "personal library" that could be music video material. One that strongly came to mind was an Indonesian song titled "Jejak Layar". Maybe later, if there's a chance and an idea, this song will also get visuals.

But why ultimately choose "Cliff's Edge"? Because this song itself was born from a short story I collaborated on with AI. So, the "Cliff's Edge" material felt more familiar, and I already had an idea of what the visuals would be like. Although, honestly, I had doubts. "Cliff's Edge" has personal meaning for me, and making this music video and sharing it publicly felt like opening up and becoming a bit vulnerable.

But, remembering my initial motivation for joining Project Odyssey (challenging myself and stepping out of my comfort zone), I chose "Cliff's Edge." And it turned out that the music video creation process felt cathartic, as if some emotional burden was slightly released by pouring the story and feelings into visuals.

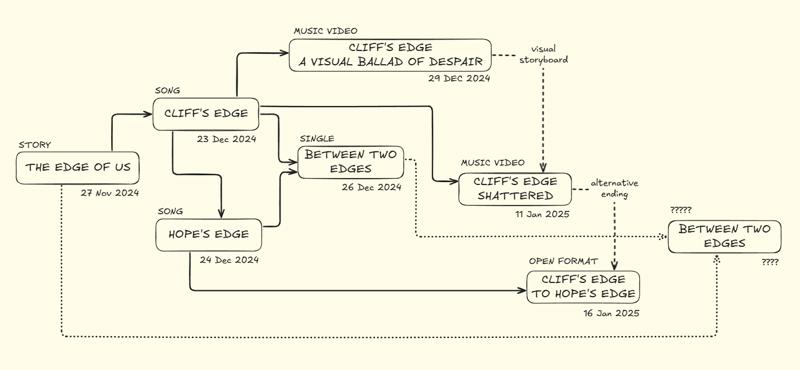

Here's the timeline from "The Edge of Us" to "Cliff's Edge: Shattered."

"Just Get it Done" Version

Now, to avoid my old habit of procrastination, I immediately set a crazy target. One day! I had to finish my first music video in one day, just as it was. The important thing was to get it done and submit it to Project Odyssey right away. The goal? To not overthink and end up not doing anything at all.

Thus, "Cliff's Edge: A Visual Ballad of Despair" was created. After reviewing it, honestly, this video is still very simple and not that good. You could say it's just a collection of scenes of sad people, and most of the shots show the characters from behind. Why? I was worried about confusing viewers with too many character changes, given the limitations of the AI tools. So, yeah, the safe thing to do was to have the characters "facing away" from the viewer, so it wouldn't be too obvious if they were different people. But the result was weak and failed to connect the song's emotion to listeners and viewers.

Image Prompt [Flux 1.0 Realism]: A cinematic, close-up shot at eye level captures the back of a burly man with medium wavy grey hair. His head is in his hands, a universal gesture of despair. The lighting is dim, with a single lamp providing the only light source, leaving most of the room in shadow. The atmosphere is introspective, self-critical, and somber. The colors are dark, with heavy shadows, focusing on the back of the man's head and obscuring any facial expression. (From Cliff's Edge: A Visual Ballad of Despair)

"Cliff's Edge: A Visual Ballad of Despair" was made with a combination of images from the Flux 1.0 Realism model (Freepik) and videos from Luma Dream Machine and Runway Gen3. After finishing, without much thought, I immediately submitted it to Project Odyssey Season 2. I also shared it on Discord and other social media. At that time, honestly, I felt satisfied. Why? Because my main target was achieved: participate in the AI Film competition and submit work, checklist complete!

A Desire for Deeper Expression

While looking at other works shared on the Project Odyssey Discord, I started to get inspired to try to make something better. "Is this all?" I thought when looking at "Cliff's Edge: A Visual Ballad of Despair." Finally, I started experimenting with the Mystic 2.5 and Mystic 2.5 Flexible image models from Freepik, and the Kling 1.6 video model from Kling AI.

Luckily, the competition deadline was still quite far off, about 15 days away. So, I decided to make an "upgrade" version of "Cliff's Edge". This time, the target wasn't just to fight procrastination, but more focused on expressing myself more boldly. I felt the song "Cliff's Edge" has a strong implicit power related to issues of despair, loneliness, and depression, issues that maybe many people out there feel. I wanted the visuals to convey that message more effectively.

The theme of "Cliff's Edge: Shattered" is actually not much different, even arguably similar to the previous iteration, "Cliff's Edge: A Visual Ballad of Despair." But, the main difference lies in the visualization. In "Shattered," I tried to present visuals that were stronger, more detailed, and more engaging.

Image Prompt [Mystic 2.5 Flexible]: A medium, low-angle shot of a burly man, sitting on the edge of a bed in a sparsely furnished apartment. He is a middle-aged man with graying, wavy hair, wearing a plain, dark t-shirt and trousers. He has a dejected, hopeless expression, and his head is now slightly bowed. The apartment walls are bare and off-white, and the only light source is a single, naked lightbulb hanging from the ceiling. The overall mood is one of despair, hopelessness, and being consumed by darkness. The style is dark, stark, and realistic.

I didn't just copy and paste the prompts I had made in "A Visual Ballad of Despair" to the new image and video models. I really started the process from scratch again. Starting from creating a more detailed storyboard, to a more structured and specific list of prompts for "Cliff's Edge: Shattered." What was the detailed process like? Well, I will discuss that in more depth in the next chapter.

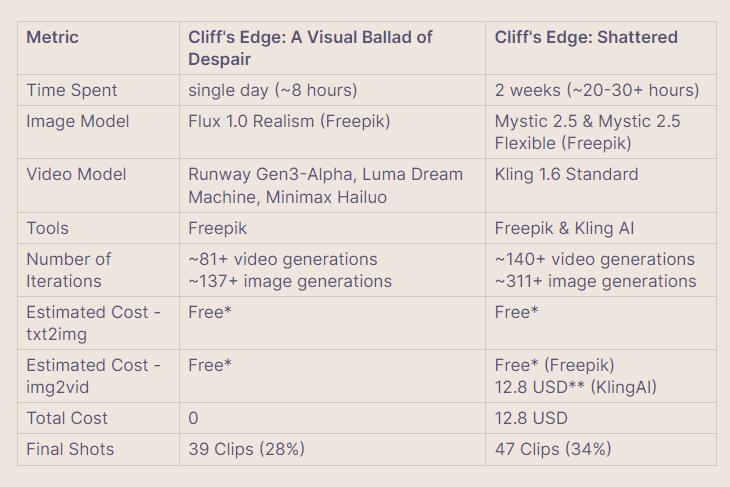

Production Budget

Before we go any further into the workflow, I want to briefly discuss the "budget" spent on making "Cliff's Edge: Shattered." Don't worry, this isn't a budget of thousands of dollars! It's actually quite "affordable." This is to give an idea of how much financial commitment is needed if you're interested in making an AI Film.

It should be noted that I'm not including the budget for creating the song using Suno AI, because the song "Cliff's Edge" was not specifically made for Project Odyssey. But, even if it were calculated, the estimated budget to make the song "Cliff's Edge" with Suno would be around 10 USD. That would probably be enough for 20-30 rerolls (200-300 credits or ~2-3 USD) to get the final version you hear in the video.

Here's a comparison of the budget and statistics:

Note: *: Using Trial Credit (Project Odyssey); **: Pro Subscription (Discounted). Big shout out to FREEPIK! Thanks for the incredibly generous trials.

You might be wondering: why is there suddenly a 12.8 USD cost for "Cliff's Edge: Shattered"? Initially, I had a firm commitment: join Project Odyssey without spending a penny! The principle was to use all the trials from the sponsors and make the best work possible. And actually, "Cliff's Edge: Shattered" could also have been made with a 0 USD budget if I had been more patient with the video generation queue on Freepik. But honestly, at that time I was impatient, hehe. Imagine: for 140 video generations alone, if one video needs 10 minutes of generation time on average, the total waiting time would be over 23 hours! That's just video generation time, not including the time spent exploring tools, learning prompting, and tweaking the video editing and color correction, where, honestly, my skills are still very limited even now 😅.
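For anyone who wants to check the waiting-time estimate, here is a minimal sketch of the arithmetic in Python. The two inputs are the numbers quoted above; the 10 minutes per video is only an assumed average, not a measured value.

```python
# Rough estimate of the total queue time for video generation.
# Both inputs are the figures quoted in the text above.
GENERATIONS = 140             # number of video generations
MINUTES_PER_GENERATION = 10   # assumed average wait per video

total_minutes = GENERATIONS * MINUTES_PER_GENERATION
total_hours = total_minutes / 60

print(f"{total_minutes} minutes = {total_hours:.1f} hours")
# prints "1400 minutes = 23.3 hours"
```

Changing `MINUTES_PER_GENERATION` to match your own queue times gives a quick feel for whether waiting on a free tier is realistic for your project.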

The cost is 12.8 USD, or around 217,000 IDR when converted to Rupiah. For some people this number may be small, but for someone living in Indonesia, 217 thousand is quite big! It can cover my food for a week, you know! That's why, with this "luxury" expense, I became even more motivated and creative about making the best possible use of all the footage that had been generated. I invested more time in planning and storyboarding before generating, so the results were more directed and there was less waste. And with a 30% success rate, I think that's pretty good, right? 🤣

Oh yeah, there's one more hidden "budget" not listed in the table, but its role is very crucial: the Large Language Model (LLM)! I relied heavily on Gemini Experimental 1206 and Gemini 2.0 Flash Thinking Experimental from Google, which were still free at that time (and still are now). I used them through Google AI Studio and the API. Honestly, without free access to Gemini, maybe "Cliff's Edge: Shattered" would never have been made. Why? Because Gemini is not just a tool, but like a discussion partner, a collaborator, even a patient teacher teaching me the ins and outs of filmmaking (Yes, I have no friends. 😭). So, big shoutout to Google and Gemini! You guys are project savers! Hehe.

Image Prompt [Mystic 2.5 Flexible]: A medium, eye-level shot of a man sitting alone at a table in a dimly lit diner. He is a middle-aged burly man with graying, wavy hair, a weary expression, and dark circles under his eyes, wearing a simple, dark shirt. He is motionless, staring blankly out the rain-streaked window, seemingly lost in thought. The diner's interior is dimly lit, with worn booths and a counter. The flickering neon sign from the previous shot casts an unsettling glow on the window and on the face. The overall mood is one of isolation, despair, and being trapped in one's thoughts. The style is dark, cinematic, and realistic.

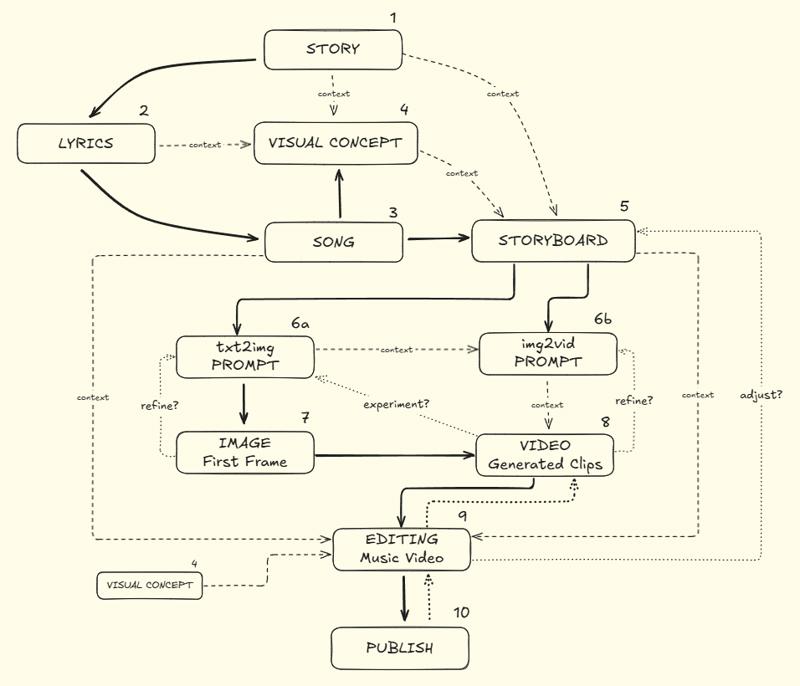

Workflow Overview

Now that we've covered the background, let's get to the main topic you've probably been waiting for: an overview of the workflow for creating "Cliff's Edge: Shattered." It's important to remember that I put this workflow together AFTER finishing the music video. So, this is more of a reconstruction of the actual process I went through while creating "Cliff's Edge: Shattered." During the creative process itself, honestly, I relied more on my visual and storytelling intuition. It might also be beneficial to just trust your intuition and curiosity rather than being fixated on a workflow.

I made this workflow hoping it can be a reference or starting point for friends who want to try making an AI Film. Feel free to modify, adjust, and explore it further according to your individual needs and creative style. In general, the "Cliff's Edge: Shattered" workflow is actually similar to the workflow for creating "Cliff's Edge: A Visual Ballad of Despair" (AVBD). The main difference might lie in the longer and more in-depth evaluation and refinement process in the "Shattered" version. But, for a general overview, they are roughly the same.

You can see a complete workflow picture of "Cliff's Edge: A Visual Ballad of Despair" (AVBD version) here. Slightly different from the Shattered version, but at least it can give you an idea of what the complete process is like.

Story to Screen: The Journey of 'Cliff's Edge: Shattered' in a flowchart.

From the workflow I attached above, there are 10 main stages in making "Cliff's Edge: Shattered". The flow begins with creating a short story to serve as the narrative foundation (Story - stage 1). This short story then becomes the basis for writing the song lyrics with the help of Gemini (Lyrics - stage 2). Once the lyrics are complete, the next step is to create the song using Suno AI (Song - stage 3).

Some might think, why create a story first and not just start with the lyrics? The main reason is my limited English language skills. This is my first project where the final product is in English. So, to maintain the implied ideas and vision in my mind, I tend to develop it in the form of a short story. This allows me to guide the AI in drafting it and ensuring it aligns with my vision. However, if I were to create a song with Indonesian lyrics (my native language), I usually wouldn't need to go through the trouble of creating a story first, because I can directly revise the draft.

Because English is hard and quite complex 😅, particularly regarding the correct use of grammar, I structured it as a story so that Gemini could better grasp the overall idea/vision I had.

Designing a Visual "Blueprint"

After the song is finished (Song - stage 3), the next stage is thinking about the visual concept for the music video (Visual Concept - stage 4). This stage is arguably quite crucial, as it's where we determine the visual direction of the final product. For my first AI Film, I didn't want anything too unconventional (including editing technique). I leaned towards a realistic visual style. And unlike the "A Visual Ballad of Despair" version, which focused on visuals of the actors' "backs," in the "Shattered" version I wanted to try focusing more on the implied facial expressions of the "actors" in the music video.

The next step is making the storyboard (Storyboard - stage 5). This storyboard is like a visual blueprint that gives a big picture of what the music video will look like later. Since I wanted to keep the budget down and aim for a high success rate (so that not too much trial and error would waste resources), this storyboard stage became very important.

During the storyboard process (Storyboard - stage 5), I began to envision the flow of the music video's story in my head. I "asked" Gemini to create opening and closing scenes that should depict the edge of a cliff with the characteristics I had in mind. For the chorus part, I also directed it to visualize scenes at the cliff edge too, but with contrasting nuances (between dream and reality) with different subject expressions and poses too. And importantly, I also directed the ending scene to have a tragic but ambiguous ending, according to the original story.

Shot 10 In Storyboard

The storyboard is quite detailed, containing info about each shot duration, shot type, camera angle, camera movement, and scene description including location (EXT/INT/LOCATION), time (TIME), main subject, subject movement, background, and background movement. Details about system instructions I used for Gemini, and how I did few-shot prompting before asking Gemini to make a storyboard, I'll discuss further in the next article.
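To make the storyboard fields listed above more concrete, here is a minimal sketch of how one shot could be represented as a Python dataclass. The class name, field names, and all example values are hypothetical and only for illustration; they are not the actual storyboard format or content used for "Cliff's Edge: Shattered."

```python
from dataclasses import dataclass, asdict


@dataclass
class StoryboardShot:
    """One storyboard row; field names are illustrative assumptions."""
    shot_number: int
    duration_seconds: float
    shot_type: str
    camera_angle: str
    camera_movement: str
    location: str             # EXT/INT plus the place
    time: str                 # time of day
    main_subject: str
    subject_movement: str
    background: str
    background_movement: str


# Hypothetical placeholder values, not taken from the real storyboard.
example_shot = StoryboardShot(
    shot_number=1,
    duration_seconds=5.0,
    shot_type="wide shot",
    camera_angle="eye-level",
    camera_movement="static",
    location="EXT. CLIFF EDGE",
    time="DAY",
    main_subject="a man sitting on a bench",
    subject_movement="sits still, gazing at the ocean",
    background="calm ocean and bright sky",
    background_movement="slow waves",
)

# Print the shot as "field: value" lines, one per storyboard column.
for field_name, value in asdict(example_shot).items():
    print(f"{field_name}: {value}")
```

Keeping every shot in the same shape like this makes it easier to review the whole storyboard at a glance, and to hand consistent shot details to an LLM later when asking for prompts.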

It's important to note that at the storyboard stage (Storyboard - stage 5), we have not yet created prompts for images or videos. The primary focus at the storyboard stage is to design the visual narrative as a whole first. Because the prompts will be adjusted later based on the AI model and tool that will be used.

Visual Generation Process

After the storyboard draft is done (Storyboard - stage 5), then comes making Image Prompts (Image Prompt - stage 6a) and Video Prompts (Video Prompt - stage 6b). I again used custom system instructions for Gemini, this time to convert shot details from the storyboard (Storyboard - stage 5) into Image Prompts and Video Prompts. In this custom instruction, I prepared special guidelines for image prompts as the first frame (keyframe) which will later be turned into video. I requested Gemini to generate both image prompts and video prompts simultaneously for each shot in the storyboard.

Ideally, a more "organized" workflow might involve focusing on creating images first, and then generating video prompts based on those images (right? I'm still figuring things out. 🤣). The goal is to check first whether the visuals are right before moving on to making video prompts. But that process would take longer. As a solution, I chose a shortcut: I just used my intuition to write video prompts whenever Gemini's prompts were not quite right.

Image/Static & Video/Movement Prompt Shot 10

After getting Image Prompts (Image Prompt - stage 6a), I generate images for each shot using Mystic 2.5 Flexible (Image First Frame - stage 7). These image results (Image First Frame - stage 7) were then used as the first frame for video generation (Video Generated Clips - stage 8).

In the video generation process (Video Generated Clips - stage 8), even though Gemini had given Video Prompt references (Video Prompt - stage 6b), I often preferred using my own prompts. If you look at the final video prompt list in the behind-the-scenes GitHub repository, you'll see that the video prompts I used are quite short. That's because I mostly wrote them myself. Why? Because the video prompts made by Gemini sometimes didn't quite match my visual idea.

Assembling Visuals into a Musical Narrative

After collecting hundreds of video clips from Kling AI and Freepik (Video Generated Clips - stage 8), the next step is Video Editing (Editing Music Video - stage 9). The video editing stage is the only part of the process done entirely without Gemini's collaboration. Here, everything depends on my skill in assembling visual sequences that tell a story, convey the song's message through moving images, and communicate the emotions of the song. And honestly, this stage is very challenging!

Given my very limited video editing knowledge and experience, I chose the simplest transitions: cut and fade in/out. Besides that, because this is a music video, I had to ensure that transitions between shots matched the song's rhythm, without feeling awkward or disrupting the music's flow. Oh, I also had to learn about color correction and grading, which, even now, I still don't understand. Especially since I'm partially colorblind, so I'm always unsure whether my color choices are right or not. (help? 🤣) If you see visuals that are overly contrasted or pale, it's likely that I didn't realize it initially.

Not all clips from video generation (Video Generated Clips - stage 8) immediately matched my vision. Often, I had to go back to the storyboard (Storyboard - stage 5) and experiment with new image prompts (Image Prompt - stage 6a) or video prompts (Video Prompt - stage 6b).

If you look at the final image & video prompt list on GitHub, you'll see a Mystic 2.5 model (without "Flexible"). When I used Mystic 2.5, I was actually in the editing process (Editing Music Video - stage 9) but went back to experiment to find visuals that better fit the video's mood (Image Prompt - stage 6a & Video Prompt - stage 6b). The process is iterative and flexible, not always linear like the workflow diagram.
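The stages and the "going back" moves described in this article can be sketched as a small graph in Python. This is only my reading of the text, not the actual workflow diagram: the stage names come from the article, while the exact forward arrows and the variable and function names are assumptions for illustration.

```python
# Stage ids and names as used in this article.
# 6a and 6b are the two halves of the prompt stage, so there are 11 entries.
STAGES = {
    "1": "Story", "2": "Lyrics", "3": "Song", "4": "Visual Concept",
    "5": "Storyboard", "6a": "Image Prompt", "6b": "Video Prompt",
    "7": "Image First Frame", "8": "Video Generated Clips",
    "9": "Editing Music Video", "10": "Publish",
}

# The "happy path" (assumed from the order in which stages are described).
FORWARD = [
    ("1", "2"), ("2", "3"), ("3", "4"), ("4", "5"),
    ("5", "6a"), ("5", "6b"), ("6a", "7"), ("7", "8"),
    ("6b", "8"), ("8", "9"), ("9", "10"),
]

# Moves back to earlier stages that are mentioned in the text.
LOOP_BACKS = [
    ("8", "5"), ("8", "6a"), ("8", "6b"),  # clips did not match the vision
    ("9", "6a"), ("9", "6b"),              # new visuals needed while editing
    ("10", "9"),                           # pacing or tone fixes before publishing
]


def next_stages(stage_id, include_loop_backs=True):
    """Return the ids of the stages reachable from stage_id in one move."""
    edges = FORWARD + (LOOP_BACKS if include_loop_backs else [])
    return [dst for src, dst in edges if src == stage_id]


for stage_id in ("8", "9", "10"):
    names = [STAGES[s] for s in next_stages(stage_id)]
    print(f"{STAGES[stage_id]} -> {names}")
```

The point of the sketch is simply that the later stages have more than one way out: forward when a clip works, and back to the storyboard or prompts when it does not.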

Final Touches

After finishing the music video sequence (Editing Music Video - stage 9), the final stage is Publishing (Publish - stage 10). In this stage, I usually perform a final quality check. Is this music video good enough? Has the message been conveyed effectively? Unlike the creation of the "A Visual Ballad of Despair" version, which was all rushed, for the "Shattered" version, I needed several days for this evaluation process.

When entering the publishing process (Publish - stage 10), I also started thinking about the final title of this music video and the opening sequence. This process took time, and even made me go back to editing (Editing Music Video - stage 9) to adjust the visual pacing or tone.

Honestly, finding the opening words for "Cliff's Edge: Shattered" ("If this story resonates, know that you are not alone"), took me at least two days of reflection! 😅. I wanted the opening to acknowledge the film's serious themes while also offering a message of hope and connection. The reason is that the visuals in "Cliff's Edge: Shattered" feel bolder and "deeper" compared to the previous version. And due to language limitations, I had to discuss with Gemini to see how this video might be received if it has different messages at the beginning.

After feeling satisfied with all aspects, and after waiting at least 1-2 days to "cool my head" and watch the video with fresher eyes, I then submitted "Cliff's Edge: Shattered" to Project Odyssey Season 2.

Image Prompt [Mystic 2.5 Flexible]: A medium shot captures a burly man on a tranquil cliff, bathed in the warm, golden light of a sunny afternoon. The figure, seen from the chest up, has thick, wavy hair, a mix of gray and dark brown, with gray predominating at the temples. He wears a simple, dark, button-down shirt. His features are strong but softened by a gentle expression as he listens intently, head tilted slightly to the side. The background is a blurred, slightly desaturated image of the serene cliff edge and the calm, blue ocean, reminiscent of a fading memory. The overall aesthetic is dreamlike, with a soft focus and a muted, yet still colorful, palette.

Is this the end, or can I still leap?

So, there you have it: a peek behind the curtain of "Cliff's Edge: Shattered." Beres sudah ("all done"), as we say in Indonesian! Explaining this workflow and the creative process makes me realize just how much I still haven't discussed in detail! Things like crafting the story, the lyrics, the song itself. Then there's the whole evaluation process: how did I actually judge if a storyboard shot was working, or if a prompt was getting me closer to the right image or video? And let's not forget all those technical hurdles! Maybe those are stories for another article, especially as I plan to keep updating the GitHub repository with more project details like prompts, storyboard drafts, and lyric breakdowns.

But looking back at this whole experience, from hesitantly entering Project Odyssey to being completely surprised by the finalist selection, it feels a bit surreal, honestly. And if you’re still feeling a bit overwhelmed by that 10-stage workflow diagram, please, please don't be! Remember, I only mapped that workflow out after I finished "Cliff's Edge: Shattered." It’s more of a reflection, a way to understand the chaotic but ultimately joyful creative adventure.

Because the most important takeaway from making "Cliff's Edge: Shattered" isn't about rigidly following a diagram, or suddenly becoming a prompting pro overnight. It's about something much simpler, and much more empowering: human creativity is still absolutely essential.

Image Prompt [Mystic 2.5 Flexible]: A cinematic, wide shot at eye-level captures a serene cliff edge bathed in bright, natural sunlight, with a vast ocean visible from his views. A burly man with medium wavy grey hair sits on the cliff edge, seen from behind, while wearing colorful beach unbuttoned shirt. His both hand on his side. The ocean is calm and inviting. The atmosphere is calming, comforting, and ethereal, with a soft, dreamlike quality. The colors are bright and saturated, with a slightly brighter light around where a silhouette of 'Ruff' will appear.

Initially, even I doubted if I could even make an AI Film! But after diving into "Cliff's Edge: Shattered," I can honestly say I truly enjoyed the creative process. It was challenging, yes, and sometimes confusing, but incredibly rewarding. And that’s really the magic I discovered with AI Film. It’s not about the AI doing everything for you. It's about AI tools becoming extensions of your creativity. And in the end, that’s the real beauty of it. With AI becoming more accessible, more people can express themselves visually. And I truly believe that's a positive thing. It opens up new avenues for creativity and storytelling for everyone.

So, if there’s one thing I hope you take away from my workflow, it’s this: don't be afraid to start, and trust your own creative vision. My “Just Get it Done” version, “Cliff’s Edge: A Visual Ballad of Despair,” might not be a masterpiece (again, please be kind! 😅), but it taught me a crucial lesson: action beats perfection. It was the imperfect first step that paved the way for "Shattered." And maybe, in a way, my whole Project Odyssey journey has been my own personal adventure to find where I belong. And perhaps, this world of AI Film, this community of creators, is one of those places.

My hope is that this behind-the-scenes will demystify the process of AI filmmaking and empower you to embark on your own creative adventures. Remember, the most important ingredient is your own unique vision and your willingness to experiment.

So, take the leap! Try a trial, write a prompt, generate something, anything. You might be surprised at where that first step takes you. And who knows, maybe your AI film will be the next one inspiring others from the top of the finalist list. I’m certainly excited to see what you create!

And as for me? Well, trials are running out 😭, so maybe I need to wait for Project Odyssey Season 3 for my next AI Film adventure! But until then, I think it’s time to properly learn video editing, color correction, and English 🤣. Stay tuned and thank you for joining me on this “Making Of” journey!
