You can also see this article in PDF format (see attachment). I've also attached high-resolution images/diagrams for this article.

You can also see the detailed system instructions/prompts in the Cliff's Edge repository on GitHub (taruma/cliffsedge).

This article is a follow-up to the previous article (The Making of Cliff's Edge: Shattered - My Journey & Workflow Overview).

Synopsis

Cliff's Edge: Shattered is a haunting visual poem that explores the depths of depression, isolation, and the fragile search for hope. The music video depicts the internal struggle of a figure grappling with profound loneliness, navigating a world that feels both vast and suffocatingly confined. Through a series of evocative, AI-generated scenes, we witness this inner turmoil unfold.

The video opens with a vista of a rugged coastline. Representations of this struggle are shown in various states of despair: figures walking rain-slicked city streets under the cold glare of neon signs, sitting alone in sterile apartments, and lost within the anonymity of bustling offices. The recurring motif of a cliff edge – both literal and metaphorical – symbolizes the precarious mental state, teetering between surrender and a glimmer of possibility.

The lyrics, a poignant internal monologue, reveal a desperate longing for connection and escape from the "loop of despair." The video culminates on the cliff edge, with the ocean's roar echoing the internal conflict.

Brief Presentation

Cliff's Edge: Shattered began as a deeply personal exploration of the isolating power of despair, a feeling I believe resonates universally in our modern world. As a Civil Engineer with a background in programming and a lifelong passion for music, I've found AI filmmaking to be a powerful intersection of my logical and creative sides – a way to use technology to express profound human emotions.

The haunting melody and vulnerability of the song 'Cliff's Edge' inspired a visual narrative. I wanted to create a film that captured the cyclical, often suffocating nature of depression, while also hinting at the fragile possibility of hope. This project was a series of 'firsts' for me: my first AI film, my first attempt at writing English lyrics, and my first foray into video editing.

I chose a visually stark, melancholic aesthetic, utilizing AI tools like FreePik Mystic 2.5 and Kling AI to generate imagery that felt both real and dreamlike, mirroring the internal state of someone struggling with profound loneliness. The recurring motif of the cliff edge serves as a powerful metaphor for the precarious balance between despair and recovery, a visual representation of the constant internal battle.

My goal was to create a film that would resonate with viewers on an emotional level, reminding them that they are not alone in their struggles and that even in the darkest moments, a shared understanding and a spark of hope can be a lifeline. I'm incredibly grateful for the opportunity to share this work and to be an active part of the growing community of artists exploring the potential of AI in filmmaking.

- Taruma Sakti

Production Diary

Director: Taruma Sakti

Project Innovation: Democratizing AI Filmmaking through an Accessible, LLM-Driven, and Human-Curated Workflow

This production diary documents the creation of "Cliff's Edge: Shattered," an AI-generated music video exploring themes of depression, isolation, and the search for hope. This project demonstrates how readily available AI tools can empower individuals to realize complex artistic visions. The core innovation lies in a streamlined, accessible workflow that leverages a large language model (LLM) as a creative co-pilot across all stages, from initial concept to final prompt refinement. Crucially, this process emphasizes the essential role of human curation and artistic judgment, ensuring the final product is deeply infused with human emotion and intention.

Interface: Google AI Studio, Typingmind

Story Development: Gemini Experimental 1121 (Google)

Lyrics, Storyboard, Prompting, General Assistance: Gemini Experimental 1206 (Google)

Song Generation: Suno v4 (Suno)

Image Generation: Mystic 2.5 & Mystic 2.5 Flexible (Freepik)

Video Generation: Kling 1.6 Standard (Kling AI & Freepik)

Sound Effects Generation: Elevenlabs

Video Editing: Adobe Premiere Pro (Adobe)

Audio Editing: Adobe Audition (Adobe)

This project utilized a range of AI tools, each selected for its specific strengths in different stages of the production process.

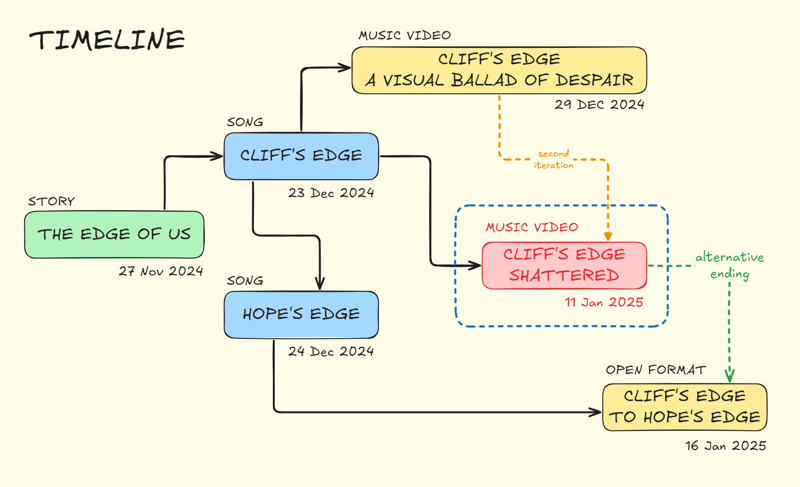

Production Timeline

The creation of "Cliff's Edge: Shattered" was a dynamic and iterative process, evolving from an initial short story concept into a fully realized AI-generated music video. The project began with personal creative explorations and then gained momentum with the discovery of the Project Odyssey Season 2 AI filmmaking competition. The timeline below illustrates the key milestones and dates in this journey:

Timeline Breakdown:

November 27, 2024: Completion of the initial short story, "The Edge of Us," which served as the thematic foundation for the entire project.

December 2024: Focused on song creation.

December 23, 2024: Finalization of the song "Cliff's Edge." This song, with its melancholic melody and poignant lyrics, became the central driving force for the visual narrative.

December 24, 2024: Completion of "Hope's Edge," a companion song created as an artistic response and "antidote" to the despair expressed in "Cliff's Edge."

Late December 2024: Discovery of Project Odyssey Season 2, providing a catalyst and a deadline for developing the visual component of the project.

December 29, 2024: Rapid creation and submission of "Cliff's Edge: A Visual Ballad of Despair." This initial music video was produced in a single day as a "just get it done version".

December 30, 2024 - January 11, 2025: Intensive two-week period dedicated to creating a significantly enhanced and more technically polished version of the music video, resulting in "Cliff's Edge: Shattered." This involved extensive refinement of visual elements, prompting techniques, and AI tool utilization.

January 11, 2025: Submission of "Cliff's Edge: Shattered" to Project Odyssey Season 2.

January 16, 2025: Creation of "Cliff's Edge to Hope's Edge," an "open format" video that connects thematically to "Cliff's Edge: Shattered" and incorporates the "Hope's Edge" song, providing a narrative resolution and an alternative ending.

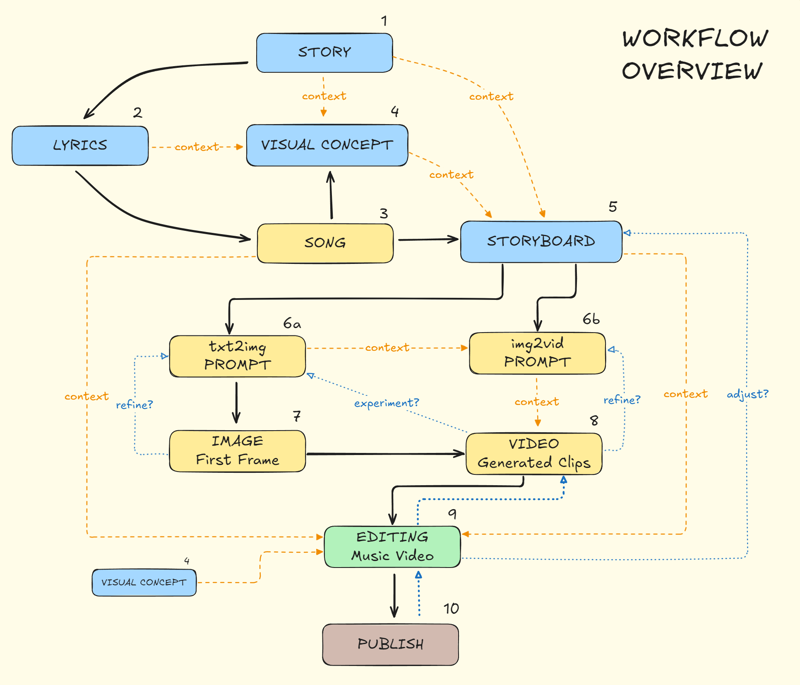

Workflow Overview

The creation of "Cliff's Edge: Shattered" followed a meticulously planned, yet iterative, workflow, designed to leverage the strengths of various AI tools and allow for continuous refinement based on human feedback and artistic judgment. The diagram below provides a visual representation of this overall process:

Figure 1 Workflow Overview

As illustrated in Figure 1, the workflow encompassed the following key stages:

Story Development: The initial story concept ("The Edge of Us") was developed using a combination of human brainstorming and assistance from a large language model (LLM).

Lyrics Crafting: Based on the story themes, lyrics were crafted, again with iterative refinement using an LLM.

Song Generation: The finalized lyrics were used as input for the AI music generation tool, Suno, to compose the song "Cliff's Edge."

Visual Concept Development: The story, lyrics, and song informed the development of a visual concept, outlining the key scenes, moods, and aesthetic style.

Storyboard Creation: A text-based storyboard was created, outlining each shot and its corresponding lyrics, using LLM assistance.

Prompt Engineering (txt2img and img2vid): Detailed text prompts were crafted for both image generation and video generation.

Image Generation: Still images were generated using FreePik Mystic 2.5, based on the refined text prompts.

Video Generation: The generated images were used as input for Kling AI to create video clips, with careful attention to keyframing and motion.

Music Video Editing: The generated video clips, the song, and additional sound effects were assembled and edited into the final music video.

Publish: The finished music video was then published.

The orange dashed lines labeled "context" in the diagram indicate how outputs from earlier stages were used as additional context and input for subsequent stages. These feedback loops made the workflow cyclical and iterative rather than strictly linear. They were crucial for maintaining thematic and visual consistency throughout the project, allowed for continuous refinement, and ensured that the final product aligned closely with the initial artistic vision.
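One way to picture the "context" arrows is as a pipeline in which each stage receives the outputs of every earlier stage. The following is a minimal Python sketch of that idea, not the tooling used in this project: the stage names follow the list above, while `run_pipeline` and the placeholder stage functions are hypothetical and stand in for work that was actually done with an LLM and human review.

```python
from typing import Callable, Dict, List, Tuple

# A stage takes the accumulated context (outputs of earlier stages)
# and returns its own output as text.
Stage = Callable[[Dict[str, str]], str]


def run_pipeline(stages: List[Tuple[str, Stage]]) -> Dict[str, str]:
    """Run stages in order, passing every earlier output forward as context."""
    context: Dict[str, str] = {}
    for name, stage in stages:
        # Each stage sees a copy of everything produced so far.
        context[name] = stage(dict(context))
    return context


# Placeholder stages (hypothetical): they only show which context each one reads.
def story(ctx: Dict[str, str]) -> str:
    return "short story draft"


def lyrics(ctx: Dict[str, str]) -> str:
    return f"lyrics based on [{ctx['story']}]"


def storyboard(ctx: Dict[str, str]) -> str:
    return f"shots based on [{ctx['story']}] and [{ctx['lyrics']}]"


result = run_pipeline([("story", story), ("lyrics", lyrics), ("storyboard", storyboard)])
print(result["storyboard"])
```

The storyboard stage reads both the story and the lyrics, which mirrors how later stages in the diagram draw on more than just the stage immediately before them.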

Story Development

The foundation of "Cliff's Edge: Shattered" lies in a deeply personal short story, initially titled "The Edge of Us." This story served as an emotional "anchor," capturing the core feelings of isolation, despair, and the search for meaning that I wanted to express through the music video. While the story itself remains unpublished, its purpose was not to create a standalone literary work, but rather to provide a rich and emotionally resonant narrative framework for the visual and musical components of the project. The primary goal was to expand upon an initial, personal idea, adding depth and emotional complexity to guide the subsequent creative stages.

LLM as Story Partner

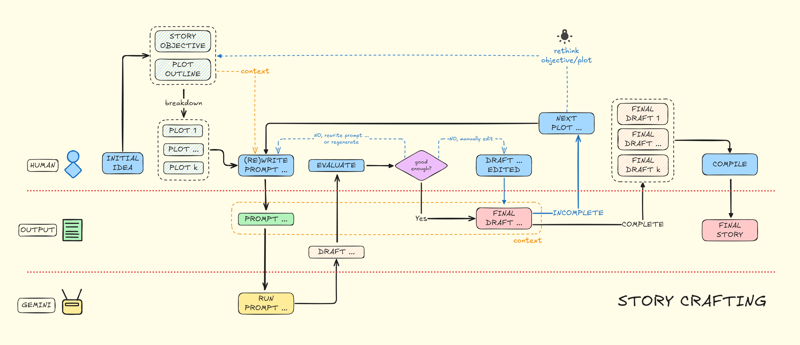

To develop the initial story concept into a more cohesive narrative, I utilized Gemini Experimental 1121, a large language model (LLM), as a creative partner. The workflow, illustrated in the diagram below, was highly iterative and placed human direction at its core.

Figure 2 Story Crafting Workflow

The process began with my initial story idea, which served as the "seed" for the LLM's input. I provided the LLM with prompts that included key themes, emotional tones, and plot points. The LLM then generated story drafts based on this input. Crucially, this was not a passive process. I acted as a curator and editor, evaluating each draft, providing feedback, and requesting revisions or regenerations. This "human-in-the-loop" approach ensured that the story remained aligned with my original vision and emotional intent. The LLM served as a tool to expand upon my ideas, explore different narrative possibilities, and enhance the descriptive language, but the final decisions about plot, character, and theme remained firmly in my control. This iterative process allowed me to choose the plot that best aligned with my vision.

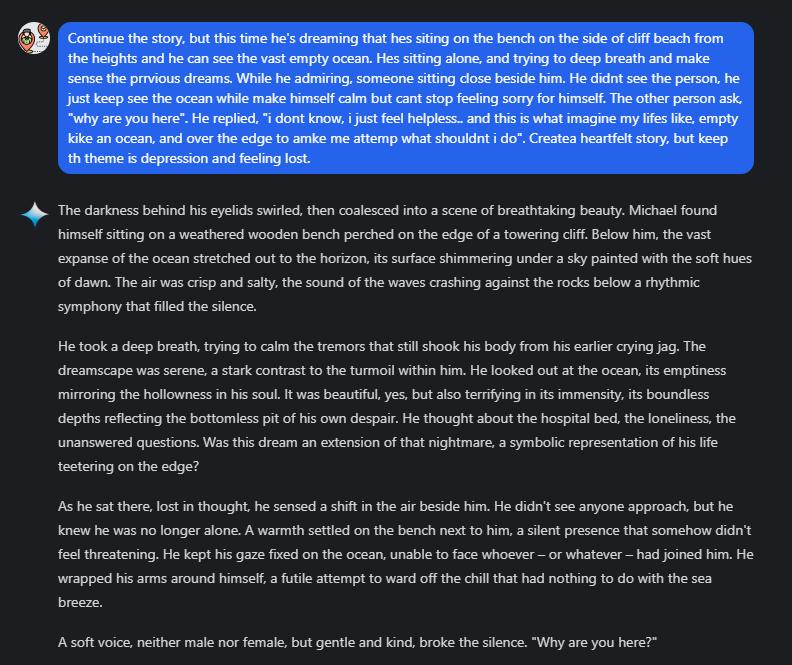

Prompting for Depth

This example demonstrates how the LLM took my initial, somewhat fragmented ideas and transformed them into a more fully realized scene, adding descriptive details and emotional depth. My prompt provided the core situation, the emotional tone, and the key dialogue, while the LLM expanded upon these elements, creating a richer narrative tapestry. I then reviewed this output, making further adjustments and refinements to ensure it aligned with my overall vision.

Through this iterative, collaborative process with the LLM, the initial, personal story concept was developed into a more nuanced and emotionally resonant narrative, providing a solid foundation for the subsequent stages of lyrics, song, and visual creation.

Lyrics Generation

Building upon the narrative foundation established in the short story "The Edge of Us," the next stage involved crafting the lyrics for "Cliff's Edge." The goal was to create lyrics that conveyed the emotional core of the story in a melancholic and introspective tone, using vivid imagery to paint a picture of the protagonist's internal struggle.

AI-Assisted Songwriting

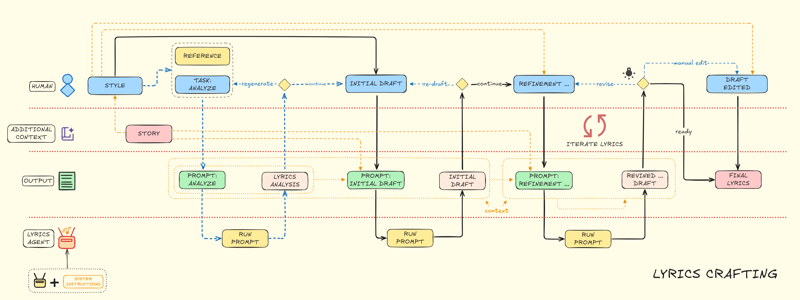

To achieve this, I utilized Gemini Experimental 1206, a large language model (LLM), as a collaborative songwriting partner. My custom instructions, tailored for lyric generation and analysis, guided the LLM. The workflow, illustrated below, was highly iterative and driven by continuous human feedback and refinement.

Figure 3 Lyrics Crafting Workflow

The process began by defining the desired genre and style, drawing inspiration from the song "Wasteland" by Royal & The Serpent (from Arcane Season 2) for its lyrical structure and emotional intensity. The short story, "The Edge of Us," served as crucial additional context, ensuring the lyrics remained thematically consistent with the narrative. I provided Gemini Experimental 1206 with prompts that included:

Story Context: Brief summaries of key scenes and emotional states from the short story.

Desired Structure: Verse-chorus structure.

Gemini then generated initial lyric drafts. These drafts were not accepted verbatim. Instead, I acted as a critical editor and curator, analyzing each line, stanza, and section. I evaluated the lyrics based on:

Emotional Impact: Did the lyrics evoke the desired feelings?

Imagery and Language: Were the images vivid and effective? Was the language poetic yet accessible?

Narrative Consistency: Did the lyrics align with the story and its themes?

Musicality: Did the lyrics have a natural rhythm and flow that would lend themselves to a song?

Based on this evaluation, I provided feedback to Gemini, requesting revisions, regenerations, or specific alterations. This iterative process continued until I was satisfied with the lyrical content. Some lines were rewritten entirely, others were tweaked, and some were accepted as is.
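The generate, evaluate, and revise cycle described above can be sketched as a small loop. This is only an illustration under stated assumptions: `Review`, `refine`, `fake_generate`, and `fake_review` are hypothetical names, the four boolean fields mirror the four evaluation criteria listed above, and in the real project the "review" step was my own judgment rather than a function.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Review:
    """Human verdict on one draft, using the four criteria listed above."""
    emotional_impact: bool
    imagery_and_language: bool
    narrative_consistency: bool
    musicality: bool
    feedback: str = ""

    def accepted(self) -> bool:
        return all([self.emotional_impact, self.imagery_and_language,
                    self.narrative_consistency, self.musicality])


def refine(generate: Callable[[str], str],
           review: Callable[[str], Review],
           prompt: str,
           max_rounds: int = 5) -> Tuple[str, int]:
    """Regenerate until the reviewer accepts a draft or the rounds run out."""
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    for round_no in range(1, max_rounds + 1):
        draft = generate(prompt)
        verdict = review(draft)
        if verdict.accepted():
            return draft, round_no
        # Fold the human feedback into the next request.
        prompt = f"{prompt}\nRevise: {verdict.feedback}"
    return draft, max_rounds


# Stand-ins for the LLM and the human editor (hypothetical, for demonstration only).
def fake_generate(prompt: str) -> str:
    return f"draft v{prompt.count('Revise:') + 1}"


def fake_review(draft: str) -> Review:
    ok = draft.endswith("v3")
    return Review(ok, ok, ok, ok, feedback="" if ok else "make the imagery more vivid")


final_draft, rounds = refine(fake_generate, fake_review, "Verse about standing at the cliff edge")
print(final_draft, rounds)
```

The loop accumulates feedback in the prompt instead of starting over, which reflects how each revision request built on the previous drafts.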

From Prompt to Verse

This example from the initial draft shows the iterative nature of the lyric creation process. Gemini provides a structured starting point based on the prompt and the provided context.

The final lyrics of "Cliff's Edge" underwent significant human curation and editing. While the LLM provided valuable initial drafts and suggestions, I made numerous changes to refine the phrasing, strengthen the imagery, and ensure the lyrics flowed naturally. Notably, the "Hello" and "Goodbye" sections in the intro and outro were added manually, while some other parts were removed to improve the overall flow and emotional impact.

Through this blend of AI assistance and human artistic direction, the lyrics for "Cliff's Edge" were crafted to be both emotionally resonant and narratively consistent with the overarching story, serving as a powerful foundation for the subsequent musical and visual elements of the project.

Music Composition

With the lyrics for "Cliff's Edge" finalized, the next step was to compose the music, bringing the words to life through melody and instrumentation. This stage relied heavily on the AI music generation platform, Suno, but with a strong emphasis on human guidance and iterative refinement to achieve a specific artistic vision. The goal was not simply to generate a song, but to craft a piece of music that precisely captured the melancholic, introspective, and ultimately hopeful tone of the lyrics and underlying story.

The Suno Workflow

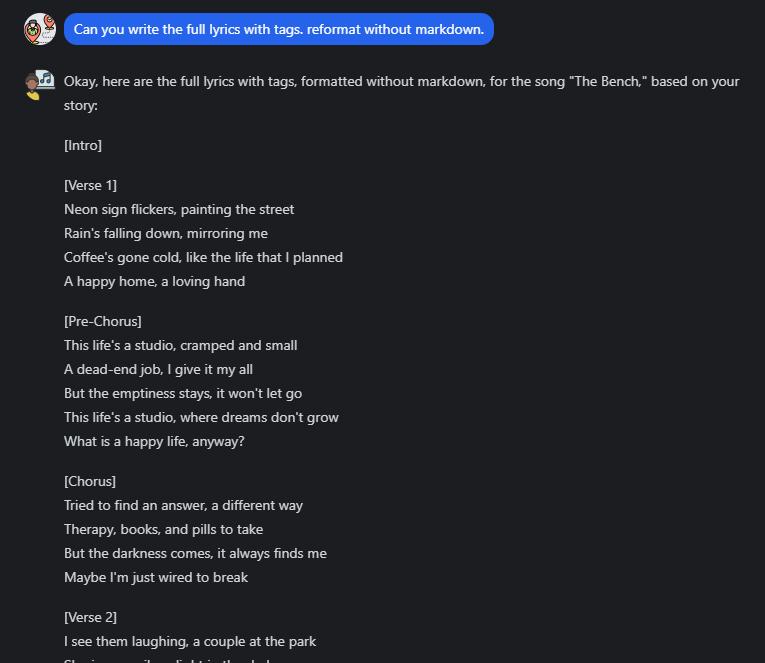

The song creation process with Suno, illustrated in the diagram below, involved a multi-stage, iterative approach, moving from initial preparation and generation to extension, assembly, and final mastering.

Figure 4 Song Generation Workflow

The process can be broken down as follows:

Preparation: The finalized lyrics were divided into two parts, corresponding to distinct sections of the song (e.g., verses, choruses, bridge). This division allowed for more focused prompting and greater control over the musical style and structure of each section. Vocal styling cues and tags specific to Suno (such as [intro], [verse 1], [chorus], [interlude], etc.) were added to the lyric excerpts to guide the AI's generation process. Intentional modifications to the lyrics, such as writing "gooodbyeee" instead of "goodbye," were also incorporated to influence the vocal delivery and emphasis.

First Generation: Suno was used to generate initial song segments based on the prepared lyric excerpts and the added tags. The "style of music" prompt was not rigidly defined by genre; instead, the focus was on finding melodies and arrangements that resonated emotionally with the lyrics and the overall project vision. Multiple variations were generated for Part 1.

Extension: Once a satisfactory initial segment (Part 1) was achieved, Suno's "extend" feature was used to generate subsequent sections (Part 2) based on the existing melody and style. Again, multiple variations were generated.

Iteration and Refinement: This was a crucial and highly iterative stage. Each generated song segment (both initial and extended versions) was carefully listened to and evaluated. If the melody, instrumentation, vocal delivery, or overall mood didn't align with the artistic vision, the lyrics were tweaked (often to correct pronunciation issues or improve phrasing), new prompts were crafted, and new variations were generated. This cycle of generation, evaluation, and refinement was repeated numerous times.

Assembly: Once satisfactory versions of both Part 1 and Part 2 were achieved, Suno's "get whole song" feature was used to combine them into a complete song structure.

Mastering: The final AI-generated song was then imported into Adobe Audition for minor tweaks and mastering. This involved adjusting levels and ensuring the overall audio quality met the desired standard.
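The Preparation step above (tagging the lyrics, splitting them into two parts, and stretching a word to steer the vocal delivery) can be sketched with a few helper functions. This is a hedged illustration, not the actual preparation script: the function names are hypothetical, the sample lines are placeholders rather than the real lyrics, and the code only manipulates text. It does not model how Suno interprets the tags.

```python
import re
from typing import List, Tuple

# Matches a line that consists only of a bracketed tag, e.g. "[verse 1]".
TAG = re.compile(r"^\[(.+?)\]\s*$")


def split_sections(lyrics: str) -> List[Tuple[str, List[str]]]:
    """Group lyric lines under the most recent [tag] line."""
    sections: List[Tuple[str, List[str]]] = []
    for line in lyrics.strip().splitlines():
        line = line.strip()
        if not line:
            continue
        match = TAG.match(line)
        if match:
            sections.append((match.group(1), []))
        elif sections:
            # Lines that appear before the first tag are ignored.
            sections[-1][1].append(line)
    return sections


def split_in_two(lyrics: str, second_part_starts_at: str) -> Tuple[str, str]:
    """Split tagged lyrics into Part 1 and Part 2 at the first matching tag."""
    sections = split_sections(lyrics)
    names = [name for name, _ in sections]
    cut = names.index(second_part_starts_at)  # raises ValueError if the tag is absent

    def render(chunk: List[Tuple[str, List[str]]]) -> str:
        return "\n".join(f"[{name}]\n" + "\n".join(lines) for name, lines in chunk)

    return render(sections[:cut]), render(sections[cut:])


def stretch(text: str, word: str, stretched: str) -> str:
    """Swap a word for a stretched spelling, e.g. goodbye -> gooodbyeee."""
    return re.sub(rf"\b{re.escape(word)}\b", stretched, text)


# Placeholder lyrics (not the real song text).
sample = """
[intro]
placeholder line one
[verse 1]
placeholder line two
[chorus]
placeholder line three
[interlude]
goodbye
"""

part1, part2 = split_in_two(stretch(sample, "goodbye", "gooodbyeee"), "chorus")
print(part1)
print("---")
print(part2)
```

Keeping the split point as a tag name rather than a line number makes it easy to try a different Part 1 / Part 2 boundary between generations.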

Guiding the Melody

Although Suno can generate entire songs autonomously, the process for "Cliff's Edge" was far from automated. Human intervention and artistic direction were essential at every stage. I acted as:

Prompt Engineer: Carefully crafting and refining the input prompts (lyrics, tags, style descriptions) to guide Suno's output.

Curator: Listening critically to each generated variation and selecting the ones that best aligned with the desired emotional tone and musical style.

Editor: Making adjustments to the lyrics to improve pronunciation, phrasing, and overall flow.

Arranger: Deciding on the song structure and using Suno's features to combine different segments.

Audio Engineer: Making final adjustments to the audio in Adobe Audition.

The song's structure and emotional arc dictated the creative choices, rather than a pre-determined plan for a music video. This emphasis on sonic quality over visual considerations reflects the project's origins as a musical endeavor. This "human-in-the-loop" approach ensured that the music not only complemented the lyrics but also deeply resonated with the emotional core of the underlying story, setting the stage for the visual narrative to follow.

Storyboard Development

Following the completion of the song "Cliff's Edge," the next stage was to create a storyboard to guide the generation of images and video clips. To maximize creative flexibility and efficiency, a text-based storyboard was developed, rather than a traditional visual storyboard or mood board. The primary goal was to create a detailed blueprint for each shot, specifying camera angles, movement, subject matter, and emotional tone, all tightly synchronized with the lyrics and the overarching story.

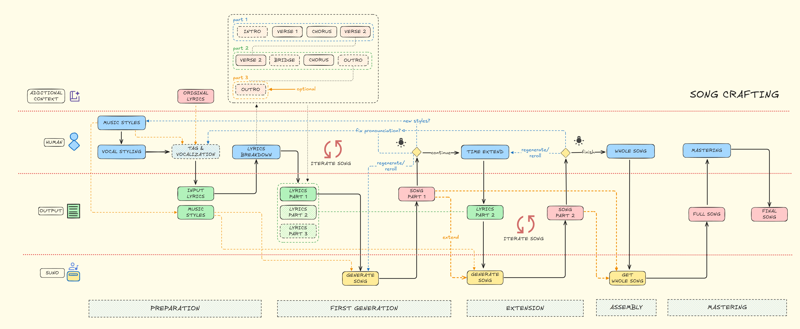

Human Vision with AI Assistance

The text storyboard was created through a collaborative, iterative process with Gemini Experimental 1206. I provided the LLM with custom instructions designed to produce detailed shot descriptions. The short story and the finalized lyrics served as crucial additional context, ensuring thematic and narrative consistency. The workflow, illustrated below, was driven by a continuous cycle of prompting, evaluation, refinement, and human decision-making.

Figure 5 Storyboard Crafting Workflow

The workflow involved the following key steps:

Project Context and Breakdown: The lyrics were broken down into distinct sections (verses, choruses, etc.), and an initial plan for the number of scenes and shots was developed. This provided a structural framework for the storyboard.

Initial Idea & LLM Prompting: For each scene and shot, I began with a core idea (e.g., "start with cliff edge," "close-up of a man looking at his hand"). I then crafted prompts for Gemini, providing context from the story and lyrics, and specifying desired camera angles, movement, and emotional tone (if needed). I often requested multiple shot options from Gemini to explore different visual possibilities. Sometimes, instead of directly using Gemini's output, I used its suggestions as inspiration to expand and refine my own shot ideas later.

Iterative Refinement: Gemini's output was carefully evaluated, and prompts were refined iteratively. This involved requesting alternative descriptions, adjusting camera angles, adding more specific details, or even completely rewriting shots based on my artistic judgment.

Shot Selection and Sequencing: From the generated and refined shot descriptions, I selected the ones that best fit the narrative, emotional arc, and visual style of the music video. These were then sequenced to create the final text storyboard. The strategy of generating more shots than needed provided flexibility during the later editing stage, allowing for creative choices based on the actual generated visuals.

Finalize: The final text storyboard was a detailed, shot-by-shot blueprint for the visual generation process, completely driven by human artistic decisions informed by AI-generated suggestions.

Shot by Shot

The following example illustrates a typical entry in the text storyboard and demonstrates the level of detail used to guide the subsequent visual generation. This level of detail was crucial for several reasons:

Clear Communication with AI: It provided precise instructions for the image and video generation tools, minimizing ambiguity and maximizing the chances of achieving the desired visual results.

Consistency: It ensured visual and thematic consistency across the entire music video.

Efficiency: It streamlined the visual generation process by providing a clear roadmap, reducing the need for extensive trial and error.

Flexibility: Because the storyboard was text-based, it was easy to modify and adapt during the iterative process.

The text storyboard served as a vital bridge between the lyrical and musical components of "Cliff's Edge: Shattered" and the AI-driven visual generation process. By combining human creative vision with the descriptive capabilities of an LLM, a detailed and flexible blueprint was created, ensuring that the final visuals would be both technically proficient and emotionally resonant. This workflow also saved time and budget, because I could decide which shots to use before starting visual generation.

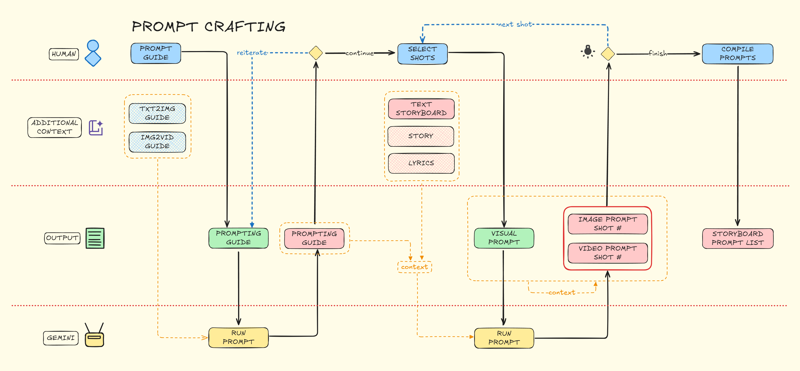

Visual Prompts

With the text storyboard finalized, the next step was to craft the visual prompts that would guide the AI image and video generation tools. This stage was not simply about copying and pasting descriptions from the storyboard. Instead, it involved a deliberate and strategic process of translating the textual descriptions into a format optimized for AI understanding, taking into account the specific capabilities and limitations of each tool. The goal was to create a comprehensive set of initial prompts that would serve as a strong foundation for the visual generation process, while acknowledging that further refinement and modification would occur during the actual image and video creation.

The Art of Prompt

While AI models are powerful tools for generating visuals, they require precise and well-structured input to produce the desired results. This stage was driven by human expertise, drawing upon:

Understanding of AI Tools: Prior experience with FreePik Mystic 2.5 and Kling AI informed the prompting strategy, allowing for anticipation of how each tool would interpret different types of instructions.

Knowledge of Visual Storytelling: Principles of cinematography, composition, and visual storytelling guided the crafting of prompts, ensuring that the generated visuals would effectively convey the desired emotions and narrative information.

Iterative Mindset: The prompts were created with the understanding that they would be refined and adjusted based on the actual generated output. This was a proactive, rather than reactive, approach.

Documentation: I compiled the documentation and manuals from each provider to better understand the tools I used.

Figure 6 Prompt Crafting Workflow

The prompt crafting process involved the following key steps:

Prompting Guide Compilation: Initial research involved gathering information on best practices for prompting both text-to-image and image-to-video AI models.

LLM Assistance for Guide Creation: Gemini Experimental 1206 was used to assist in synthesizing this information and creating a concise prompting guide, drawing upon the available documentation and best practices.

Prompt Creation for Each Shot: For each shot defined in the text storyboard, separate prompts were crafted for image generation and video generation. These prompts drew upon the storyboard, the story, and the lyrics.

Character Details: To maximize flexibility and avoid premature commitment to specific visual representations, detailed descriptions of the actor/figure were intentionally omitted from the initial prompts. These details would be added later, during the visual generation stage, based on the actual generated images and the desired consistency.

Prompt Compilation: The individual shot prompts were compiled into a comprehensive prompt list, organized by scene and shot number, providing a clear and structured input for the visual generation stage.

From Shot to Prompt

The following examples illustrate the difference between the storyboard description and the crafted prompts, highlighting the strategic adaptations made for AI understanding. Notice the following key differences and strategies:

Combined Information: The prompt combines information from multiple fields in the storyboard entry (Shot Type, Camera Angle, Visual Details, Theme/Mood, Description) into a single, coherent textual description.

Emphasis on Visual Language: The prompt uses more descriptive and evocative language suitable for AI image/video generation (e.g., "sparsely furnished apartment," "harsh light," "long shadows").

Omission of Character Name: The character's name ("Michael") is replaced with "the solitary figure" to avoid predetermining the visual appearance.

Separate Prompts: Two different prompts (Static and Movement) were created for each shot, one to help generate the image and one to help generate the video.
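The strategies above can be sketched as a small translation step from a storyboard entry to a pair of prompts. This is a minimal illustration, assuming a simple record per shot: the field names follow the storyboard fields mentioned above, `movement` is an assumed extra field, `StoryboardEntry` and `to_prompts` are hypothetical names, and the sample entry is invented for demonstration rather than taken from the actual storyboard.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class StoryboardEntry:
    shot_type: str
    camera_angle: str
    visual_details: str
    mood: str
    description: str
    movement: str  # assumed field: the camera or subject motion for the video prompt


def to_prompts(entry: StoryboardEntry,
               character_name: str = "Michael",
               placeholder: str = "the solitary figure") -> Tuple[str, str]:
    """Return (static_prompt, movement_prompt) for one storyboard entry."""

    def anonymize(text: str) -> str:
        # Replace the character's name so appearance is not fixed too early.
        return text.replace(character_name, placeholder)

    # Static prompt: merge the separate storyboard fields into one description.
    static_prompt = (
        f"{entry.shot_type}, {entry.camera_angle}. "
        f"{anonymize(entry.description)} {anonymize(entry.visual_details)} "
        f"Mood: {entry.mood}."
    )
    # Movement prompt: only the motion, for the image-to-video step.
    movement_prompt = anonymize(entry.movement)
    return static_prompt, movement_prompt


# Invented example entry (not from the real storyboard).
entry = StoryboardEntry(
    shot_type="Wide shot",
    camera_angle="eye level",
    visual_details="Harsh light casts long shadows.",
    mood="isolation",
    description="Alone in a sparsely furnished apartment, Michael sits by the window.",
    movement="Slow push-in toward Michael.",
)

static_prompt, movement_prompt = to_prompts(entry)
print(static_prompt)
print(movement_prompt)
```

Keeping the static and movement prompts separate means the image prompt can carry the visual detail while the video prompt stays short and focused on motion.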

The prompt crafting stage was a critical bridge between the textual storyboard and the AI-driven visual generation process. By strategically translating narrative and emotional information into a format optimized for AI understanding, and by consciously deferring certain visual decisions (like character appearance), this stage maximized both creative control and flexibility.

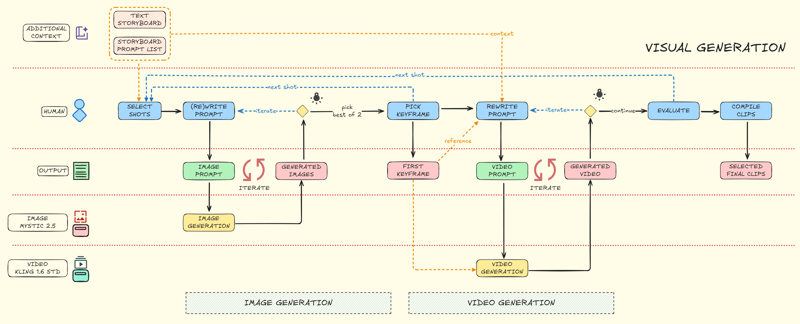

Visual Generation

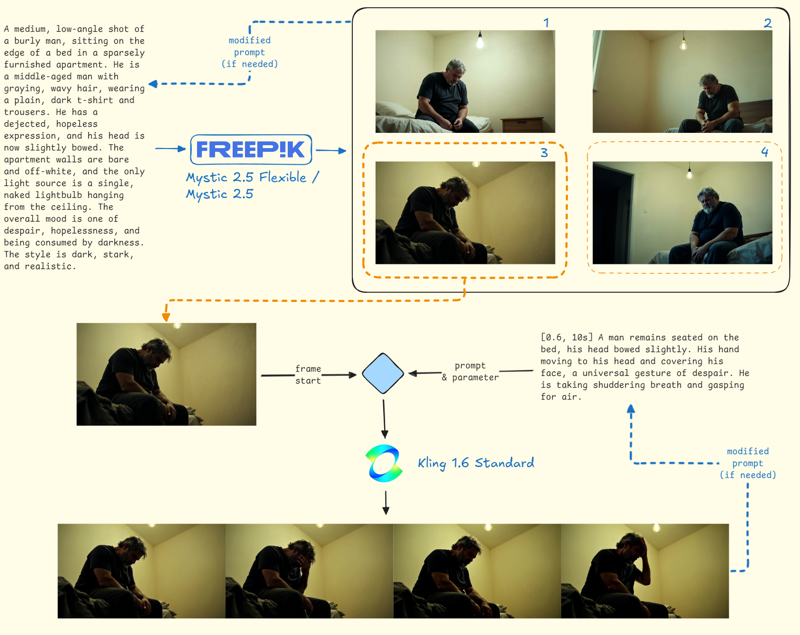

The visual generation stage marked the transition from textual descriptions and prompts to the creation of the actual images and video clips that would form the "Cliff's Edge: Shattered" music video. This phase utilized FreePik Mystic 2.5 (and its Flexible version) for image generation and Kling AI 1.6 Standard (accessed via both Kling AI and Freepik) for video generation. This stage was characterized by a highly iterative and human-centric approach, prioritizing creative adaptation and resourcefulness over a pursuit of pixel-perfect AI output. The goal was not to force the AI to perfectly match a pre-conceived vision, but rather to collaborate with the tools, leveraging their strengths while strategically working within their limitations.

Image & Video Creation

The visual generation process was far from automated. While the AI tools generated the raw visual material, every decision – from prompt modification and image selection to video clip evaluation and adaptation – was driven by human judgment and artistic intent. This stage required a constant interplay between:

Prompt Engineering: Refining and adapting the initial prompts from the previous stage.

Visual Evaluation: Critically assessing the generated images and video clips based on aesthetic quality, emotional impact, and narrative consistency.

Strategic Decision-Making: Choosing the best outputs, even if they weren't exactly as envisioned, and adapting the overall plan based on what the AI provided.

Resource Management: Making conscious choices to conserve time and budget, prioritizing efficiency and creative problem-solving over endless re-rolls.

This "human-in-the-loop" approach was not simply a matter of necessity; it was a deliberate artistic choice. Recognizing the inherent limitations of current AI technology, particularly regarding character consistency, I embraced these limitations as opportunities for creative interpretation, rather than viewing them as obstacles. The workflow, illustrated below, emphasizes this iterative and human-guided process.

Figure 7 Visual Generation Workflow

The visual generation process involved the following key steps:

Shot Selection: Following the sequence established in the text storyboard, I selected the shots to be generated. Occasionally, shots were skipped if deemed non-essential or redundant after further consideration.

Image Prompt Modification: The initial image prompts (from the "Prompt Crafting" stage) were modified before being used with FreePik Mystic 2.5. Key changes included:

Adding Character Descriptions: While avoiding overly specific facial features, descriptions of hair ("grey wavy medium hair") and body type ("burly") were added to introduce a degree of visual consistency. This was a conscious decision to balance creative flexibility with a basic level of visual coherence.

Prompt Refinement: The prompts were further refined based on previous experience with Mystic 2.5, adjusting keywords and phrasing to improve the likelihood of generating desired results.

Image Generation (FreePik Mystic 2.5): For each shot, Mystic 2.5 was used to generate four image variations based on the modified prompt. Instead of repeatedly re-rolling to achieve a "perfect" image, I adopted a strategy of selecting the best two from the initial four, prioritizing creative potential over pixel-perfect accuracy. This approach conserved resources and encouraged a more adaptable mindset.

Keyframe Selection: From the two selected images for each shot, one was chosen as the "keyframe" to serve as the input for Kling AI. This selection was based on composition, emotional impact, and overall suitability for animation.

Video Prompt Creation: The pre-prepared video prompts from the "Prompt Crafting" stage were largely discarded. Instead, new, more concise and direct prompts were crafted, focusing on describing the desired movement and camera action, rather than reiterating the visual details already present in the keyframe image.

Video Generation: Kling AI was used to generate video clips from the selected keyframes and the newly crafted video prompts. Typically, only one or two variations were generated for each shot, again prioritizing resourcefulness and adaptability. If the first generated clip was deemed usable, the process moved on to the next shot.

Clip Evaluation and Selection: The generated video clips were evaluated based on their visual quality, fluidity of motion, and overall contribution to the narrative. The best clip for each shot was selected for inclusion in the final music video.

Compilation: The selected video clips were compiled into a single folder, ready for the editing stage.
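The selection funnel in the steps above (four images, a shortlist of two, one keyframe, then at most one or two video attempts) can be sketched as follows. This is an illustrative sketch only: all function names are hypothetical, the scoring functions stand in for my own visual judgment, and `fake_clip` is a stub rather than a call to Mystic or Kling. The character descriptors are the ones quoted above.

```python
from typing import Callable, List, Optional

CHARACTER = "grey wavy medium hair, burly"  # descriptors quoted in the text above


def add_character(prompt: str, character: str = CHARACTER) -> str:
    """Append the shared character descriptors to an image prompt."""
    return f"{prompt.rstrip('. ')}. Character: {character}."


def pick_keyframe(images: List[str],
                  appeal: Callable[[str], float],
                  animatable: Callable[[str], float],
                  shortlist: int = 2) -> str:
    """Shortlist the most appealing images, then pick the one best suited to animation."""
    best = sorted(images, key=appeal, reverse=True)[:shortlist]
    return max(best, key=animatable)


def first_usable_clip(keyframe: str,
                      motion_prompt: str,
                      generate_clip: Callable[[str, str, int], str],
                      usable: Callable[[str], bool],
                      max_tries: int = 2) -> Optional[str]:
    """Generate up to max_tries clips and stop at the first usable one.

    If none is usable, the last attempt is returned as a fallback.
    """
    clip = None
    for attempt in range(max_tries):
        clip = generate_clip(keyframe, motion_prompt, attempt)
        if usable(clip):
            break
    return clip


# Stand-in data and stubs (hypothetical, for demonstration only).
images = ["img_a", "img_b", "img_c", "img_d"]
appeal_scores = {"img_a": 0.4, "img_b": 0.9, "img_c": 0.7, "img_d": 0.2}
motion_scores = {"img_a": 0.5, "img_b": 0.3, "img_c": 0.8, "img_d": 0.9}


def fake_clip(keyframe: str, motion_prompt: str, attempt: int) -> str:
    return f"{keyframe}_clip{attempt + 1}"


image_prompt = add_character("Man standing on a cliff edge at dusk.")
keyframe = pick_keyframe(images, appeal_scores.get, motion_scores.get)
clip = first_usable_clip(keyframe, "slow zoom out", fake_clip, lambda c: c.endswith("clip2"))
print(image_prompt)
print(keyframe, clip)
```

Capping the number of attempts per shot is what keeps the budget predictable: a shot costs at most four images and two clips in this sketch.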

Adapting & Refinement

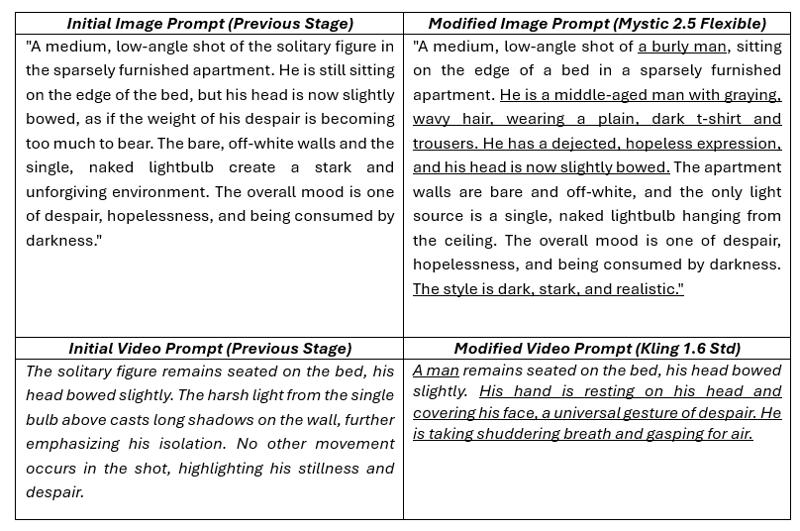

The following example illustrates the iterative process of prompt modification, image selection, and video generation:

Figure 8 Visual Generation Example

This example demonstrates:

Prompt Adaptation: How the initial prompt was modified to include character details and refined for better results with Mystic 2.5.

Selective Generation: Only four images were generated, and two were chosen, showcasing a resource-conscious approach.

Keyframe Selection: One image was chosen as the basis for video generation.

Video Prompt Reinvention: The pre-planned video prompt was discarded in favor of a new, more concise and action-oriented prompt tailored to Kling AI.

Iterative Process: How I generated the images, picked the ones with the most potential, and then created the video.

This level of detailed decision-making and adaptation was applied to every shot in the music video. The visual generation stage of "Cliff's Edge: Shattered" exemplifies a highly adaptive and resourceful approach to AI filmmaking. By embracing the limitations of the available tools, prioritizing human artistic judgment, and focusing on creative problem-solving, a compelling visual narrative was crafted.

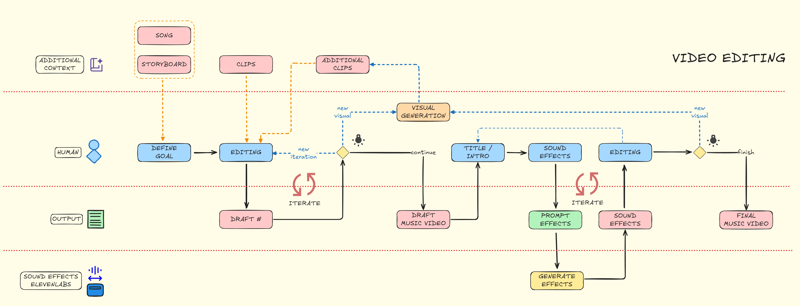

Video Editing

The video editing stage marked the culmination of the entire production process, bringing together the AI-generated visuals, the original song, and the narrative framework established in the preceding stages. This phase, executed using Adobe Premiere Pro, was the most intensely human-driven part of the project, requiring significant creative problem-solving, artistic judgment, and adaptation in the face of both technical limitations and the inherent unpredictability of AI-generated content. The goal was to transform a collection of disparate clips and a song into a cohesive, emotionally resonant, and narratively compelling music video, all while working within the constraints of my existing video editing skills.

Assembling the Pieces

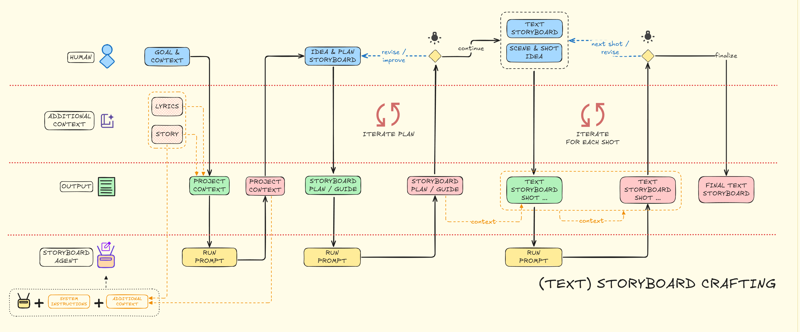

While the preceding stages involved collaboration with AI tools, the video editing process was almost entirely a human endeavor. This stage was not about simply assembling pre-determined pieces; it was about actively shaping the narrative, crafting the emotional arc, and making countless artistic choices based on intuition, aesthetic sensibility, and a deep understanding of the story being told. The LLM was not used for guidance during the editing itself; the storyboard served as a starting point, but the final decisions were driven by a hands-on, iterative process of experimentation and refinement. The workflow, illustrated below, highlights this iterative and human-centered approach:

Figure 9 Video Editing Workflow

The video editing process involved the following key steps:

Initial Assembly: The selected AI-generated video clips were imported into Adobe Premiere Pro and arranged along the timeline, roughly following the sequence outlined in the text storyboard and synchronized with the song "Cliff's Edge."

Iterative Refinement: This was the core of the editing process. It involved:

Sequencing and Pacing: Experimenting with the order and duration of clips, adjusting the pacing to match the emotional flow of the music and narrative.

Shot Selection: Re-evaluating the initial shot choices, sometimes discarding clips that didn't work as well as anticipated, or making unexpected connections between seemingly disparate visuals.

Visual Storytelling: Using cuts, transitions (primarily simple cuts, given my focus on accessibility), and the juxtaposition of images to create meaning and enhance the emotional impact.

Addressing Inconsistencies: Working creatively with the inherent inconsistencies in AI-generated visuals (e.g., character appearance), either minimizing them through editing or embracing them as part of the overall aesthetic.

Addressing Storyboard Gaps: Aligning the film with the intended vision in places where the storyboard phase had left something out.

Returning to Visual Generation: When a shot or scene was not working or did not align with the vision, I went back and generated new visuals.

Title and Intro Sequence: A simple title sequence was created, using a combination of previously unused AI-generated clips and text overlays. The choice of visuals and text for the intro was particularly crucial, requiring extensive deliberation (almost two days) to ensure it set the appropriate tone and provided a clear context for the viewer without triggering unwanted reactions.

Sound Effects Integration: AI-generated sound effects (e.g., waves, wind) created using ElevenLabs were added to the intro and outro sequences to enhance the atmosphere and provide a sense of immersion. I limited sound effects to these sequences because I intentionally avoided adding SFX to the video itself, to minimize complexity.

Final Review and Refinement: The assembled music video was reviewed multiple times, with further minor adjustments made to pacing, transitions, and overall flow.

Description: While editing, I was also refining the music video description.

The iterative and adaptive nature of the editing process is best illustrated by the fact that the final music video diverged from the initial storyboard in several significant ways. This was not due to errors or omissions, but rather to conscious artistic choices made during the editing process. These decisions were driven by:

Visual Flow: Ensuring a smooth and engaging visual rhythm.

Emotional Impact: Prioritizing shots and sequences that best conveyed the intended emotions.

Narrative Clarity: Making choices that reinforced the story's themes and message.

Working with the AI-Generated Material: Rather than trying to force the AI-generated clips to fit a rigid pre-conceived plan, I adapted the plan to make the most of the available material, embracing unexpected visual juxtapositions and allowing the editing process to shape the final narrative.

This adaptive, human-driven approach was essential for creating a cohesive and impactful music video, given the limitations of both the AI tools and my own video editing experience.

Conclusion

The creation of "Cliff's Edge: Shattered" represents a journey of artistic exploration, technical learning, and a deep dive into the potential – and limitations – of AI-assisted filmmaking. From the initial spark of a personal story to the final polished music video, this project has demonstrated that impactful and emotionally resonant cinematic experiences can be created with accessible tools and a human-centric, iterative workflow.

While AI tools like FreePik Mystic 2.5, Kling AI, Suno, Gemini, and ElevenLabs were instrumental in bringing this vision to life, they served as collaborators, not replacements, for human creativity. The most significant challenges – managing AI inconsistencies, refining prompts, shaping the narrative, and making countless artistic decisions – were overcome through human ingenuity, adaptability, and a commitment to telling a story that would connect with viewers on a deeply emotional level. The tools are impressive, but they always need a human to get the best results out of them.

Ultimately, "Cliff's Edge: Shattered" is a testament to the democratizing power of AI in the arts. It is a proof of concept, showing that individuals without traditional filmmaking backgrounds can leverage these tools to express their unique visions and share their stories with the world. It is my hope that this project, and this Production Diary, will inspire other aspiring creators to explore the possibilities of AI filmmaking, embracing both its potential and its limitations, and always prioritizing the human element at the heart of every creative endeavor. I also hope that this music video will help others who might be feeling lonely, despairing, or isolated to feel less alone and find a little spark of hope.
