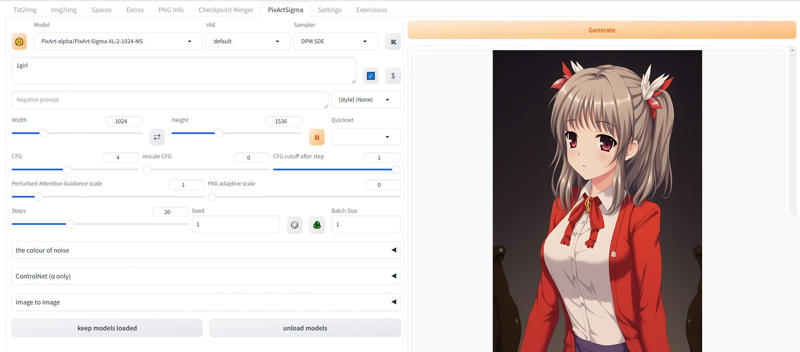

Please note that this cover image has been adjusted for thumbnail visibility by inverting the colors and changing the layout. It is not the actual UI, so please keep that in mind.

2/16: The extension developer has updated it so that custom models can be added!

You can check the changelog in the repository for details. I truly appreciate the creator’s continuous support. This extension is very user-friendly.

My article is slightly outdated, so a quick glance should be enough. The official source provides detailed information, so I recommend it for accurate details!

■The Forge extension and SDNext use Diffusers-format models for inference, so regular checkpoints cannot be used. To use a fine-tuned model, you therefore need to find the PixArt Diffusers model that is automatically downloaded into the Forge folder during the first inference and replace it with the Diffusers version of the fine-tuned model. This article focuses on Forge, but the same approach can be applied to SDNext.

This is a general method that works with any software using Diffusers models, so it's worth remembering. It could be especially valuable for less widely adopted models like Sana and Lumina.

Install the Forge extension. This step is not necessary for SDNext, where PixArt is already implemented. The GitHub changelog also includes details on quality improvement features, so be sure to check it.

It worked fine after installation, but if you're concerned, install the required dependencies just in case.

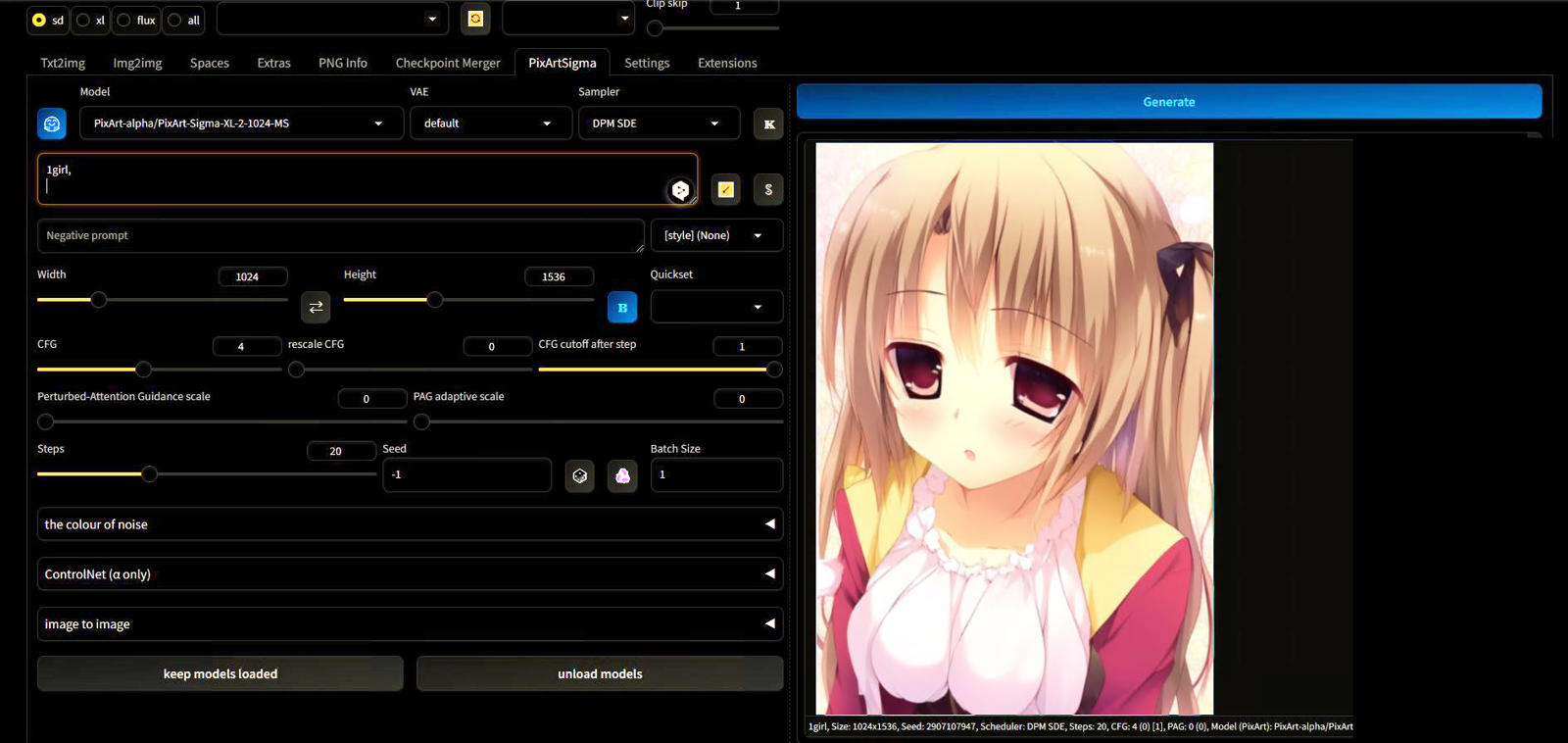

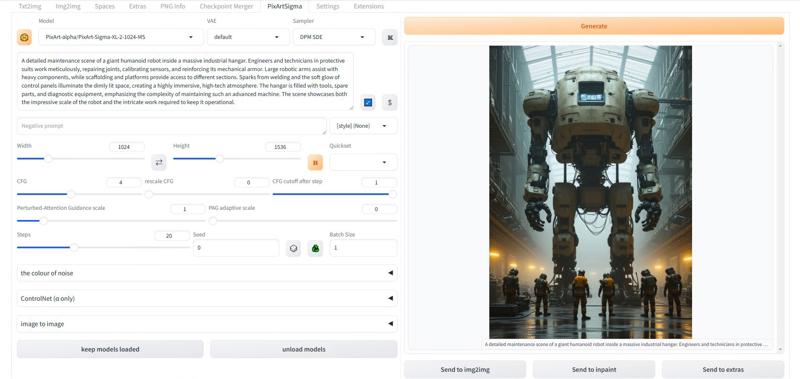

Move to the PixArt tab, enter an appropriate prompt, and try running it. The first run takes some time because models such as T5 and the DiT (the U-Net equivalent) are downloaded automatically. Note that there may be some lag during inference whenever you change the prompt, because the T5 embeddings need to be recalculated, so changing prompts frequently can get frustrating. Pressing "Keep Models Loaded" at the bottom left of the UI keeps T5 loaded in CPU RAM, which may speed up processing slightly. In ComfyUI, however, you can choose to load it on the GPU, so there's almost no lag.

■The K button enables Karras Sigma for the scheduler/sampler.

■The SuperPrompt button (ꌗ) rewrites simple prompts into more detailed ones and overwrites them. It's a prompt assistant.

■The B button toggles resolution binning. Internally, the image may be generated at the resolution best suited to the model and then resized to the resolution specified by the user. It is enabled by default. In this UI, an orange button indicates the active state.
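
To make the resolution binning idea a bit more concrete, here is a minimal sketch of how such a step could work: pick the bin whose aspect ratio is closest to the request, generate at that size, then resize to what the user asked for. The bin values and the function names `pick_bin` and `binned_generation_plan` are made up for illustration. They are not taken from the extension, which may behave differently.

```python
# Illustrative (height, width) bins keyed by height/width ratio for a
# 512px-class model. Sample values for this sketch only, NOT the
# extension's actual table.
EXAMPLE_BINS = {
    0.5: (352, 704),
    1.0: (512, 512),
    2.0: (704, 352),
}


def pick_bin(height, width, bins=EXAMPLE_BINS):
    """Return the (height, width) bin whose aspect ratio is closest to the request."""
    requested_ratio = height / width
    closest = min(bins, key=lambda ratio: abs(ratio - requested_ratio))
    return bins[closest]


def binned_generation_plan(height, width, bins=EXAMPLE_BINS):
    """Describe the two steps: generate at the bin size, then resize to the request."""
    gen_h, gen_w = pick_bin(height, width, bins)
    return {"generate_at": (gen_h, gen_w), "resize_to": (height, width)}


# A 1024x1024 request against 512px-class bins:
print(binned_generation_plan(1024, 1024))
# {'generate_at': (512, 512), 'resize_to': (1024, 1024)}
```

Under these assumptions, a 1024px request to a 512px model would be generated at 512px and then resized, which is one possible reason a 512px model can still output 1024px images.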

It's also great that PAG and other features can be used. This PixArt extension is amazing, with a wider range of features than I expected.

Moreover, it is very valuable that the implementation includes models that require special inference pipelines, such as the experimental pixart-900m-1024-ft-v0.7-stage1 and stage2. Various other models are listed as well, so you may get to enjoy results that differ from the usual.

https://huggingface.co/terminusresearch/pixart-900m-1024-ft-v0.7-stage1

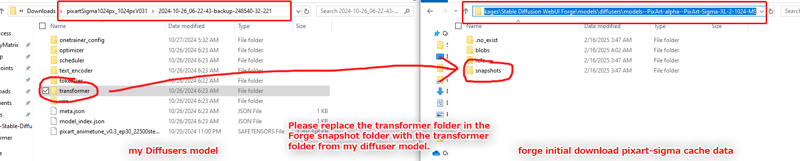

Once an image is displayed and you have confirmed that everything works, replace the model with the fine-tuned one. The data to replace is the transformer folder, which corresponds to the DiT (U-Net) part. The automatically downloaded PixArt Diffusers model can be found in the Diffusers folder. Please refer to the provided image to locate it. Inside the snapshots folder you will find the transformer folder. Replace it with the transformer folder from the fine-tuned model's Diffusers model. The image may be compressed and hard to read...

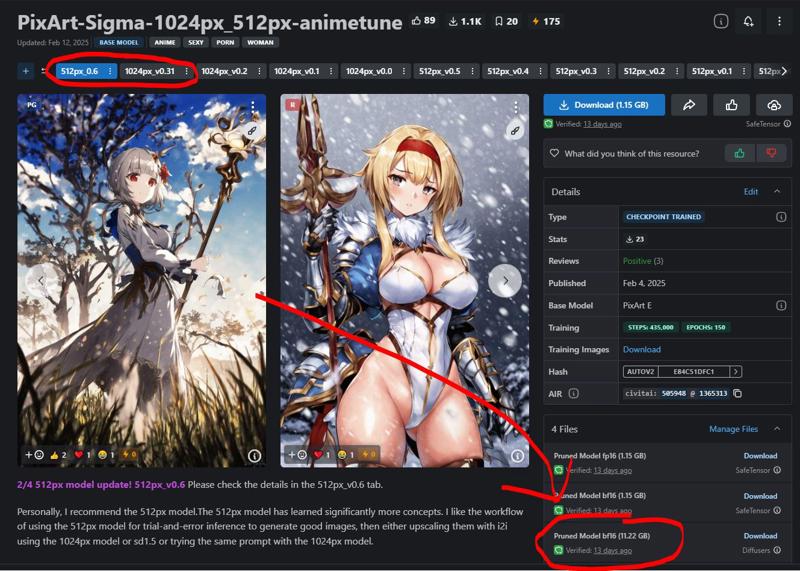
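
If you prefer to script the swap instead of doing it by hand, the steps above can be sketched roughly like this. It is only a sketch under a few assumptions: the cached model sits somewhere below a Diffusers folder in a `snapshots/<hash>/transformer` layout, and the fine-tuned transformer folder contains a `config.json`. The function names and the example paths in the comments are hypothetical, so adjust them to your own install.

```python
import shutil
from pathlib import Path


def find_transformer_dirs(diffusers_root):
    """Return every snapshots/<hash>/transformer folder below diffusers_root."""
    root = Path(diffusers_root)
    return sorted(p for p in root.glob("**/snapshots/*/transformer") if p.is_dir())


def swap_transformer(target_dir, finetuned_transformer_dir):
    """Back up target_dir next to itself, then copy the fine-tuned folder in its place."""
    target = Path(target_dir)
    source = Path(finetuned_transformer_dir)
    # Assumption: a Diffusers transformer folder always ships a config.json.
    if not (source / "config.json").is_file():
        raise FileNotFoundError(f"{source} does not look like a Diffusers transformer folder")
    backup = target.with_name(target.name + "_backup")
    if backup.exists():
        raise FileExistsError(f"backup already exists: {backup}")
    target.rename(backup)            # keep the original so the swap can be undone
    shutil.copytree(source, target)  # put the fine-tuned weights in its place
    return backup


# Example usage (hypothetical paths, adjust to your own folders):
# candidates = find_transformer_dirs("path/to/forge/models/diffusers")
# print(candidates)  # check which one belongs to the PixArt model first
# swap_transformer(candidates[0], "path/to/finetuned_model/transformer")
```

Keeping a backup folder means you can go back to the original model by deleting the new transformer folder and renaming the backup.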

By the way, my Diffusers model is over 10GB of data, as shown in the attached image. Only the roughly 1GB transformer is actually necessary, but the download is large because it includes everything. You can discard everything other than the transformer. Both 512px and 1024px models are available, so use whichever you prefer.
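
If you want to see for yourself which parts of a Diffusers model take up the space, a small helper like the one below can list the size of each top-level folder. This is just a convenience sketch. The function names are my own, and the path in the usage comment is hypothetical.

```python
from pathlib import Path


def component_sizes(model_dir):
    """Return {top-level entry name: total bytes} for a Diffusers model folder."""
    sizes = {}
    for entry in Path(model_dir).iterdir():
        if entry.is_dir():
            # Sum every file below the component folder (transformer, vae, ...).
            sizes[entry.name] = sum(
                f.stat().st_size for f in entry.rglob("*") if f.is_file()
            )
        else:
            sizes[entry.name] = entry.stat().st_size
    return sizes


def print_report(model_dir):
    """Print the components from largest to smallest, in GiB."""
    ordered = sorted(component_sizes(model_dir).items(), key=lambda kv: -kv[1])
    for name, size in ordered:
        print(f"{size / 1024**3:8.2f} GiB  {name}")


# Example usage (hypothetical path):
# print_report("path/to/finetuned_model")
```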

My PixArt model: https://civitai.com/models/505948?modelVersionId=1365313

After replacing the model, restart Forge to clear the cache. Then try inference again. If the results have changed, it’s a success. In some cases the difference may be hard to notice, but my model has a strong novel game style, so the change should be easier to see.

●By the way, I feel that the results generated by Forge look cleaner than those from Comfy, which is strange. It is also odd that 1024px images can be generated even with the 512px model. This is likely the effect of resolution binning.

●Also, my model has lower quality, so it may be difficult to get good results without the long prompts or quality-improving tags mentioned in my model's description.

Using SD1.5 or SDXL for image-to-image generation is also a good option. Even bad results can sometimes turn into quite good images. Hands in particular are something PixArt struggles with, and SD1.5 and SDXL improve on that. You can pick the model according to your preferences and create any style you like.

●There are also other easy ways to try PixArt.

I think the "Comfy Sigma Portable" can be used even by those who have never used ComfyUI before. There's no need for a difficult installation. Just download and try it out!

https://civitai.com/models/523149?modelVersionId=581233

It is still undecided, but the developer of TinyBreaker mentioned Forge briefly, so it might be implemented in the future. This is yet another great example of exploring the potential of PixArt!

https://civitai.com/models/1213728/tinybreaker?modelVersionId=1367156