Select "Image-to-image variations (img2img)" from the workflow dropdown at the top, where "Text-to-image" usually is. This lets you upload your own images. I don't think you can use Flux for this (it would probably be a waste of Buzz anyway), but SDXL models work. The "Denoise" slider sets how much the image will change, from not at all at 0 to a more or less unrelated new image at 1 (in theory; in practice the original still tends to bleed through a little). For most applications the useful range starts at around 0.5 (below that it doesn't do much) and goes up to 0.95.
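
Civitai doesn't say how its Denoise slider is implemented, so treat this as an assumption: in the open-source Hugging Face diffusers img2img pipelines, the equivalent `strength` parameter noises your image partway and then runs only the last `strength` fraction of the sampling steps. The sketch below reproduces that step arithmetic (`img2img_steps` is a hypothetical helper name, not a Civitai or diffusers function). It helps explain why low values barely change anything: at 30 steps, a denoise of 0.4 runs only 12 steps, starting from a lightly noised copy of your image.

```python
def img2img_steps(num_inference_steps: int, denoise: float) -> int:
    """Return how many sampling steps actually run for a given denoise value.

    Assumption: mirrors the step selection in diffusers' img2img pipelines,
    where the schedule is entered partway through and only the final
    `denoise` fraction of the steps is executed.
    """
    if not 0.0 <= denoise <= 1.0:
        raise ValueError("denoise must be between 0 and 1")
    # Number of steps "covered" by the noise added to the input image.
    init_timestep = min(int(num_inference_steps * denoise), num_inference_steps)
    # Index in the schedule where sampling starts; earlier steps are skipped.
    t_start = max(num_inference_steps - init_timestep, 0)
    return num_inference_steps - t_start


for d in (0.0, 0.4, 0.75, 0.95, 1.0):
    print(f"denoise {d}: {img2img_steps(30, d)} of 30 steps")
```

At 0 no steps run (the image comes back unchanged), and at 1 the full schedule runs from pure noise, which matches the "unrelated new image" end of the slider.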

The classic application is style transfer (the old "cartoonify a photo" trick and so on). You can also use it for poor man's inpainting: do simple, basic Photoshop fixes on your generations, then run them through img2img to homogenize the look again. One underrated use, though, is to draw a rough sketch as best you can and then run a full, detailed prompt over it with a high denoise (>0.7). See the examples below: the prompt and settings are visible in this post, except for the denoise values and the base image, which are listed here.

Hints: pure line art will not work; you need solid blobs of pixels for the model to grab onto. They don't need to be detailed, though. A little shading can help but isn't necessary, since the rough composition is what you want here. At really high denoise levels it will also fix proportions to some degree.

Also keep in mind that img2img currently won't change the resolution at all, so scale your image to 1024x1024 or another standard generator resolution beforehand, unless you want bad results and/or huge Buzz costs.
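
If your sketch or photo has an odd size, a small helper can pick a target resolution before you resize it. This is a sketch under an assumption: that SDXL-style models work best at roughly one megapixel with both sides a multiple of 64 (e.g. 1024x1024, 1152x896, 1344x768). Civitai's own list of allowed sizes may differ, and `fit_to_sdxl` is a hypothetical name.

```python
import math


def fit_to_sdxl(width: int, height: int,
                target_pixels: int = 1024 * 1024,
                multiple: int = 64) -> tuple[int, int]:
    """Suggest a size of about `target_pixels` total with the same aspect ratio.

    Assumption: both sides are rounded to a multiple of `multiple` (64 here),
    which is a common convention for SDXL resolutions.
    """
    if width <= 0 or height <= 0:
        raise ValueError("dimensions must be positive")
    # Uniform scale factor that brings the pixel count to roughly the target.
    scale = math.sqrt(target_pixels / (width * height))
    # Round each side to the nearest multiple, never going below one multiple.
    new_w = max(multiple, round(width * scale / multiple) * multiple)
    new_h = max(multiple, round(height * scale / multiple) * multiple)
    return new_w, new_h


print(fit_to_sdxl(4000, 3000))  # a 4:3 photo
print(fit_to_sdxl(1920, 1080))  # a 16:9 screenshot
```

Rounding can shift the aspect ratio slightly, so you may want to crop a few pixels rather than stretch when you do the actual resize in your image editor (or with something like Pillow's `Image.resize`).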

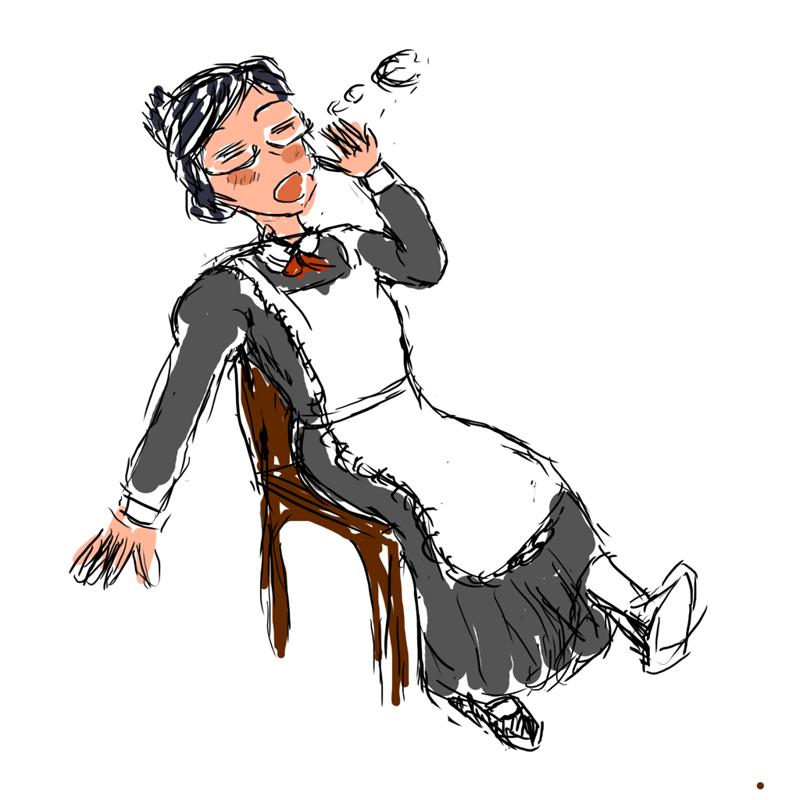

Base image

Denoise: 0.0

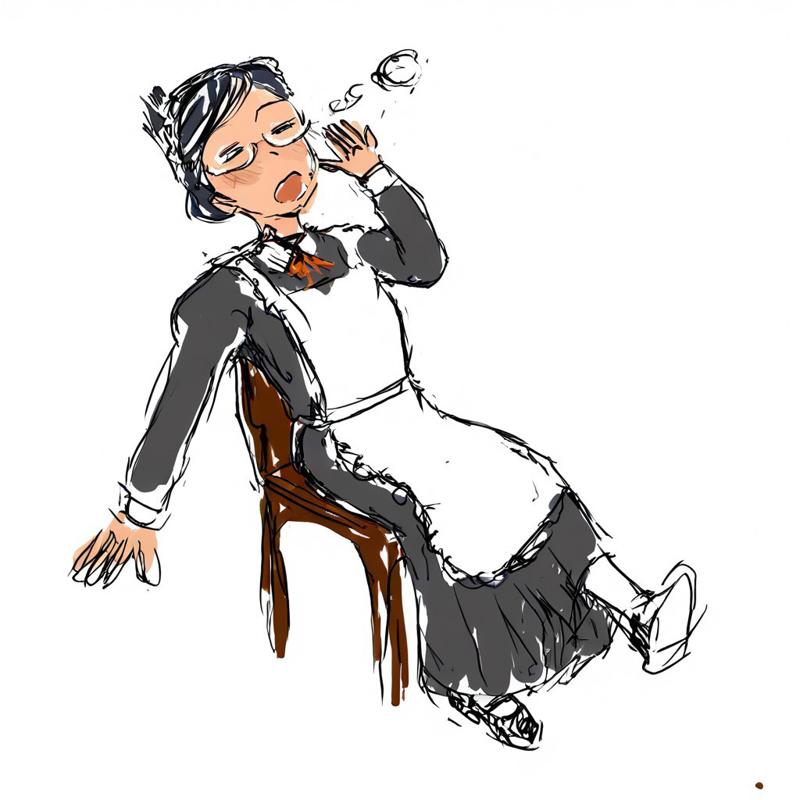

Denoise: 0.4

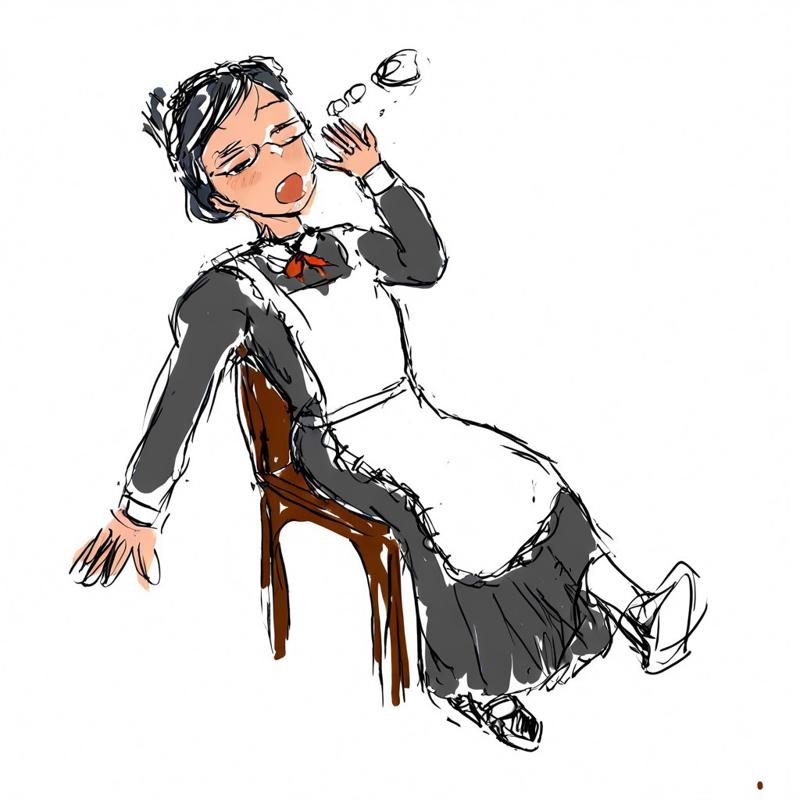

Denoise: 0.75

Denoise: 0.95