Chapter 4: The 768px chapter ... kinda

( Prev chapter at https://civitai.com/articles/10292 )

This is the continuation of my efforts to retrain "SD1.5" base, with the SDXL VAE.

For various reasons, I was experimenting with 512x768 sized training images, and output.

The results were interesting, in a fairly positive way.

Then I decided, "what the heck, let's see what happens if I go all the way to 768x768?"

Not only were the results not horrible.... but the model seems to take to larger-resolution training more EASILY than 512x512. ???

[Edit: turns out, this is not the case. I was doing "something wrong" with my prior training]

How did it come to this?

This came about because I wanted to augment my limited 2:3 ratio (hand-picked) dataset with some square handpicked images, since the model supposedly favours square images.

So I spent a lot of time culling from 51 million total LAION aesthetic images, down to 80k or so vaguely appropriately sized images, and then pulled out JUST the "woman, solo" type images....

and then kept only the really clean, sharp ones.

I was left with 1900 images.

I decided to see what I could make of those anyway. So I did the standard run.

Then I generated WD "tag" style captions, and ran with only those.

Then I decided to run with both (one pass for each caption style), and it was better than either of them alone.
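One way to picture the "both caption styles" setup, as a minimal sketch: every image shows up twice per epoch, once with each caption. The `Sample` class, the helper name and the example captions are all made up for illustration; this is not how OneTrainer stores things internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    image: str
    caption: str


def build_dual_caption_epoch(images, nl_captions, tag_captions):
    """Return one epoch in which every image appears twice:
    once with its natural-language caption, once with its tag caption."""
    samples = []
    for img in images:
        samples.append(Sample(img, nl_captions[img]))
        samples.append(Sample(img, tag_captions[img]))
    return samples


# Hypothetical file names and captions, purely for illustration.
images = ["a.jpg", "b.jpg"]
nl = {"a.jpg": "A woman standing in a field", "b.jpg": "A woman reading a book"}
tags = {"a.jpg": "1girl, solo, outdoors, field", "b.jpg": "1girl, solo, book, indoors"}

epoch = build_dual_caption_epoch(images, nl, tags)
print(len(epoch))
```

The dataset is unchanged, but the number of training samples per epoch doubles.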

But it was still very few images, so I decided, what the heck, 512x768 was good... so what happens if I mix in 768x768?

Since I would now not only be throwing things off by changing the VAE, but also changing the fundamental model image size.... I thought it would be horrible.

Similarly, there was another reason it "shouldn't" have worked:

I left "training resolution" at 512

.... and yet, I got better results than I was getting previously, so long as I ONLY trained with 768x768, without mixing training sizes.

A sample output, phase 2, only 12 epochs using this method across 1900 images.

So... now you can see why I am inclined to change the direction of XLSD from a plain 512x512 re-imagining, to a full on 768x768 model. Kinda like what "SD2.x" might have been, but wasn't.

Back to realigning and re-tagging datasets, and then crazy retraining. This time, I think I'll have to go back all the way to ground 0, and create a new "Phase 1" base to train on!

... which was really, really slow to train the first time I did it, since I used an extended "throw more junk at it" approach for the base. (1 million images from CC12M)

See you in a few weeks, I guess....

New plan summary

1. Select "new" square images set to train on ( https://huggingface.co/datasets/opendiffusionai/laion2b-squareish-1536px )

2. Generate Natural Language style captions, AND tag style captions (since tag style is more information dense)

3. Train a new base, starting at "phase0", rather than reusing my previously trained base

It turns out that step 1 there is easy: I already had the images downloaded from the LAION-aesthetic set, so it's a matter of choosing sizes, and then redefining my training "concept" definitions in OneTrainer to resize to the new, larger resolution instead of 512.

Similarly, I already had natural-language captions, so I just needed to generate the "tagged" style with the WD model.

(Side quest: there are now 8+? versions of the WD model, so I did some evaluation of the options)

I chose the latest "eva02-large-v3" model.

This was a really good thing, as it identified 1000 images with watermarks that needed to be thrown out!

This left 79,000 images that are roughly square, and over 1535 pixels in height.

Out of 51 million in that LAION set :-/

Note also, that they aren't validated as good quality images by me personally.. they just exist in the LAION subset. And, only 15k out of the 79k even have humans in them!

That being said.... they still appear to improve the quality of the model, so.... let's see what happens!
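The size filter described above ("roughly square, and over 1535 pixels in height") could be sketched like this. The function name and the 10% tolerance are my assumptions for illustration; the exact cutoff used for the real dataset isn't stated here.

```python
def is_squareish(width: int, height: int,
                 min_height: int = 1536, tolerance: float = 0.1) -> bool:
    """True if the image is tall enough and roughly square.

    `tolerance` is a hypothetical bound on |width/height - 1|;
    the real dataset may have used a different cutoff.
    """
    if height < min_height:
        return False
    return abs(width / height - 1.0) <= tolerance


print(is_squareish(1600, 1600))  # big and square
print(is_squareish(1024, 1024))  # square, but too small
print(is_squareish(2400, 1600))  # big, but 3:2 rather than square
```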

I'm then going to use batchsize 32, accum 4, for effective batchsize of 128. That would be technically a little large for 80k images, but just right for (80k * 2), which is basically what I'm using. (Note that makes for around 600 effective-batch-size steps per epoch)
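A quick sanity check of the batch arithmetic above, as a minimal sketch. The helper name is mine, not OneTrainer's, and it assumes a leftover partial batch at the end of an epoch is simply dropped.

```python
def steps_per_epoch(num_samples: int, batch_size: int, grad_accum: int) -> tuple[int, int]:
    """Return (effective batch size, optimizer steps per epoch).

    Assumption: a leftover partial batch at the end of the epoch is dropped.
    """
    effective = batch_size * grad_accum
    return effective, num_samples // effective


# 79k images, each seen once per epoch
single = steps_per_epoch(79_000, batch_size=32, grad_accum=4)
# the same images counted twice (one pass per caption style)
double = steps_per_epoch(2 * 79_000, batch_size=32, grad_accum=4)
print(single, double)
```

With these numbers a single pass over 79k images comes out at 617 steps per epoch, in line with the "around 600" figure. Counting every image twice gives 1234.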

Early results

Huhhh.... I expected to have to wait for 100,000 steps or something to see decent results.

That was incorrect.

In some ways, not as good as the result above, but also in some ways better, after only epoch 3, and this is starting from scratch, rather than a "phase 2" training like above.

I'm hoping for good things after 50 epochs.

Schizo after 5 epochs

Sadly, using the large-size images with the 512px training res seems to give schizophrenic results.

One might recall that SD1.5 has problems rendering "large" images, often breaking them up into multiple overlapping concepts.

My initial samples suggested that something about this configuration somehow overcame that.

While epoch0 sample had the typical duality mess, epoch1 was actually fixed, as was epoch 2, and 3!

But, it was only random luck, I think.

While the 768x768 output looks normal at 3 epochs, the next one (and the one following) degrades as per usual:

Therefore, it seems I must EITHER restart with this nice newer dataset, and commit to 512 resolution 100%...

or fully commit to actual 768 resolution.

Moving forward with 768px

I'm going to update the training resolution to match, like I "should have done" in the first place, to see how fast it cleans up.

Potential problems with 768x768

I think perhaps because of the architecture size, I may be limited to 512x768, or 768x512, but NOT be able to reliably use 768x768

I recall this is actually somewhat of a known problem with SD15, but was hoping the new VAE might help somewhat.

This does not seem to be the case, after 8 epochs. However, on advice from a friend, I shall run it out for longer to see if it clears up.

This README claims it shouldn't take more than 40 epochs across 30k images.

https://huggingface.co/panopstor/SD15-768

and they were only using LR=1e-06, effective batchsize=32.

Since I'm using either 80k images, or 2x80k images, I should then expect to see positive results before 20 epochs?

Although they did intermediate training at 640x640 res, so not 100% sure about how long it should take really.
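The rough reasoning behind that "before 20 epochs" guess can be written out as a back-of-the-envelope sketch. It only compares the total number of training samples seen, and deliberately ignores the differences in learning rate, batch size and their intermediate 640x640 stage, so treat it as a ballpark at best. The helper names are made up.

```python
def samples_seen(epochs: float, images: int) -> float:
    """Total training samples processed over a run."""
    return epochs * images


def epochs_to_match(ref_epochs: float, ref_images: int, my_images: int) -> float:
    """Epochs needed on my dataset to see as many samples as the reference run."""
    return samples_seen(ref_epochs, ref_images) / my_images


ref_total = samples_seen(40, 30_000)      # reference run: 40 epochs over 30k images
ref_steps = ref_total / 32                # optimizer steps at effective batch size 32
epochs_80k = epochs_to_match(40, 30_000, 80_000)
epochs_160k = epochs_to_match(40, 30_000, 160_000)
print(ref_total, ref_steps, epochs_80k, epochs_160k)
```

By sample count alone, 80k images per epoch matches the reference run at 15 epochs, and 2x80k matches it at 7.5.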

Win some, lose some: 15 epoch results

On the plus side, I started seeing some actually nice results, for the 768x768 output, after 15 epochs.

This looks better than a lot of my "phase 2" tests!

On the downside though.... it still suffers badly from "resolution too large" syndrome. Duping of the supposed single subject, in overlapping, tiled style.

So seems like 768x768 is probably out, and I need to focus on 512x768 for high-detail.

For the record, the training samples of 512x768 for this run show improvement, but not as good as the above.

512x512 samples were worst of all. Which shouldn't be too surprising.

So, I think I shall have to revert to dual-res, lower res training:

512x512, and 512x768

The differences between this time, and prior ones, are:

I have a better quality, but not tiny, dataset

I have a better tagging approach: (use both NL and tagging)

I'm not going to reuse my flawed "phase1" model. I'm going to be restarting from pure SD1.5+SDXL vae

Just kidding, back to fp32

Why this again?

I was noticing that doing things at increased resolution (768x768) did drastically improve quality... but the SD1.5 unet can't handle that very well.

So... how can I increase resolution, without ACTUALLY increasing resolution?

Training with bf16 literally throws away half the bits of the fp32 original. So I figured I needed to try fp32 again, but this time without messing it up.

I had to start with an actual fp32 SD1.5 base, and then make sure everything is 32bit clean.

Which I did.

And suddenly I'm getting better results after ONE epoch of training, than I did with 10 at bf16.

Mind you I'm also using a super large dataset again: 500k images, rather than 100k at a time. But just going by step count, I'm getting more with 32k steps at batch16, in fp32, than I did for 100k steps at batch16, in bf16.

The following is what I get at only 54,000 steps from baseline

( that is, starting from https://huggingface.co/opendiffusionai/XLSD-V0.0/blob/main/XLsd32-base-v0.safetensors )

It's unfortunate that steps are 4x as slow as bf16 though :(
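To make the "half the bits" point concrete: bf16 keeps the same sign and exponent bits as fp32, but only 7 of the 23 mantissa bits. Here is a minimal pure-Python sketch that emulates rounding an fp32 value to bf16. The function name is mine, and this is only an illustration of the number format, not what any trainer actually runs.

```python
import struct


def to_bf16(x: float) -> float:
    """Round x to bf16 precision (round-to-nearest-even), returned as a Python float.

    Works by taking the fp32 bit pattern and clearing the low 16 bits,
    which is exactly the part bf16 does not store.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bias = 0x7FFF + ((bits >> 16) & 1)   # round-to-nearest-even on the dropped bits
    bits = (bits + bias) & 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


# Near 1.0, the smallest step bf16 can represent is 2**-7 (about 0.0078).
small_nudge = to_bf16(1.0 + 2**-9)   # too small a change: rounds back to 1.0
grid_step = to_bf16(1.0 + 2**-7)     # exactly one bf16 step: survives
print(small_nudge, grid_step)
```

fp32 can resolve changes of roughly 1e-7 around 1.0, so a lot of fine detail in the weights has nowhere to live once they are stored as bf16.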

Datasets used

On the above, I am currently using:

https://huggingface.co/datasets/opendiffusionai/laion2b-45ish-1120px with both WD captions AND moondream captions

https://huggingface.co/datasets/opendiffusionai/laion2b-squareish-1536px with just WD captions

And a new "squareish" laion set,

https://huggingface.co/datasets/opendiffusionai/laion2b-squareish-1024px

This last one I actually used by accident. I meant to use the 1536px one with moondream, but... oh well. Letting it run and seeing what happens with the extra 150k images in the training!

It really seems to like 2:3, 448x640, even though I'm training on square and 4:5.

Latest samples: 2025/03/03

Halfway through epoch 3, NOT cherrypicked:

Later samples: 2025/03/07

Top row: epoch 9

Bottom row: epoch 11 (I didn't like epoch 10)

Alpha1 version of fp32 model

If you are super-curious, you can play with the current state of the model yourself.

See https://huggingface.co/opendiffusionai/xlsd32-alpha1

Update 2025/3/15

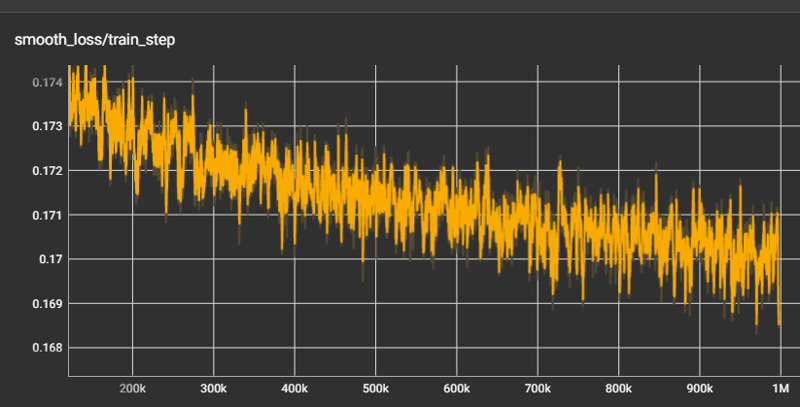

Very boring times... letting it run. Seeing if smooth loss average for latest epoch will drop to under 0.170 eventually.

Will most likely take another week at least.

Update 2025/3/20

I'd really like to redo this with an improved dataset, but... I also want to let this one run, and I don't have a second 4090. Sigh....

Pitfall discovered: OneTrainer and LION bf16

I think I discovered why my bf16 results were not all they could be.

OneTrainer has "stochastic rounding" to smooth out rounding errors when using bf16 precision.

It has that option for ADAMW and CAME, for example...

But not LION or D-LION :(

So, LION/DLION is fine if you run it with fp32.. but less so for bf16
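For anyone wondering why stochastic rounding matters so much, here is a minimal pure-Python sketch of the general idea. This is NOT OneTrainer's code: the function names, the made-up update size of 1e-4 and the toy "training loop" are all assumptions for illustration. It emulates bf16 by clearing the low 16 bits of an fp32 bit pattern.

```python
import random
import struct


def f32_to_bits(x: float) -> int:
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_f32(bits: int) -> float:
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def bf16_nearest(x: float) -> float:
    """Plain round-to-nearest-even down to bf16 precision."""
    bits = f32_to_bits(x)
    bias = 0x7FFF + ((bits >> 16) & 1)
    return bits_to_f32((bits + bias) & 0xFFFF0000)


def bf16_stochastic(x: float, rng: random.Random) -> float:
    """Stochastic rounding: add random noise to the 16 bits that get dropped,
    then truncate. The chance of rounding up equals the dropped fraction."""
    bits = f32_to_bits(x)
    return bits_to_f32((bits + rng.getrandbits(16)) & 0xFFFF0000)


rng = random.Random(0)
update = 1e-4                      # made-up per-step weight update
w_nearest = w_stochastic = 1.0
for _ in range(1000):              # true total change would be +0.1
    w_nearest = bf16_nearest(w_nearest + update)
    w_stochastic = bf16_stochastic(w_stochastic + update, rng)
print(w_nearest, w_stochastic)
```

With plain rounding, every tiny update gets rounded straight back and the weight never moves from 1.0. With stochastic rounding the weight occasionally jumps a full bf16 step, so on average it drifts toward the right total (noisily). An optimizer without that option is effectively running the first version whenever its updates are small compared to the weights.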

1 Million Steps! (2025/03/20)

(This is an "in-training" sample so looks very different from the samples above)

(aw, civit broke the image. oh well, sorry)

Part 5 ...

Continued in