This is the continuation of my efforts to retrain the "SD1.5" base with the SDXL VAE.

(Part 4 at https://civitai.com/articles/11818 )

Why? Because there are some AMAZING-quality, detailed SD1.5 models... but they were made in the dark, and they are somewhat abandoned. Let's recreate that quality, openly document how to do it, then go even further with the better VAE, and see what is really possible with small hardware.

I am also trying to maximize the quality of the model by focusing on realism only.

Any time you train on non-realistic images, you touch Every Single Value In the Model. You basically corrupt it a little in the non-real direction. So, fully train up realism first; more avant-garde stuff can then be done as derivative models after that.

Our story so far...

I reran a "phase 1" finetune from scratch (fp32, D-LION) for over a million steps. (UNet only, frozen Text Encoder.) (Edit: this was 1 million "micro-batch" steps, which at b16a16 is a mere 62,500 effective-batch steps.)

The run then crashed, which gave me time to reflect.

The model had reached approximate parity with original base SD1.5. How to go further?

I tried a few different training options, but nothing was really crossing that bridge.

So I finally decided to take the plunge into the untapped land of Text Encoder training.

Text Encoder Training

Originally, I tried the same strategy as before:

Throw a large-ish dataset at D-LION, see what happens.

I disabled UNet training, enabled Text Encoder training in OneTrainer, and......

It went off the rails. D-LION kept increasing the LR. It just wasn't converging.

I asked ChatGPT for a strategy. It suggested:

Dataset: 10,000–30,000 pairs

Epochs: 3

Batch size: 32

Learning rate (TE): ~5e-6 to 1e-5

I switched to straight LION, and tried this out. And... after viciously hacking down my dataset to be simpler, it seems to show progress! But with an odd wrinkle or two...

Best results are after 200 steps???

This, I don't get. I've never done text encoder training before, and this is kind of a special situation. But... not even a full epoch? Just 200 steps at batch size 32??
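To put "not even a full epoch" in numbers, here is a quick back-of-envelope sketch. It assumes a 30k-image dataset (the size used for the final TE run) and batch size 32 with no gradient accumulation; the helper names are just for illustration.

```python
# Back-of-envelope: how much data do N optimizer steps actually cover?
# Assumes a 30,000-image dataset, batch size 32, no gradient accumulation.

def images_seen(steps: int, batch_size: int, accum: int = 1) -> int:
    """Images consumed after `steps` optimizer steps."""
    return steps * batch_size * accum


def epoch_fraction(steps: int, dataset_size: int, batch_size: int, accum: int = 1) -> float:
    """Fraction of one epoch covered after `steps` optimizer steps."""
    return images_seen(steps, batch_size, accum) / dataset_size


print(images_seen(200, 32))                        # 6400
print(round(epoch_fraction(200, 30_000, 32), 3))   # 0.213
# The suggested "3 epochs of 30k at batch 32" plan, for comparison:
print(3 * 30_000 / 32)                             # 2812.5
```

Under those assumptions, the 200-step checkpoint had seen only about a fifth of the dataset, once.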

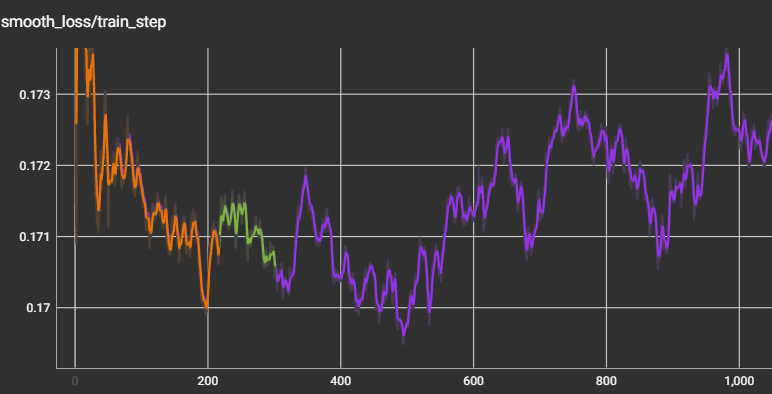

Image/sample quality doesn't always line up with the loss curve, but this time it does, at least for the first part. Shown here is the smoothed loss for multiple runs:

Interestingly, however, the sample at step 450 is NOT better than the one at step 200.
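The "smoothed" curves are a running average of the noisy per-step loss. As a rough illustration of what that smoothing does (not necessarily the exact formula OneTrainer or TensorBoard uses), here is a bias-corrected exponential moving average; the input values are made up.

```python
def ema_smooth(values, weight=0.9):
    """Bias-corrected exponential moving average of a loss series.

    weight = 0.0 returns the raw values; values closer to 1.0 smooth harder.
    Dividing by (1 - weight**i) removes the pull toward the 0.0 starting value.
    """
    if not 0.0 <= weight < 1.0:
        raise ValueError("weight must be in [0, 1)")
    smoothed = []
    running = 0.0
    for i, value in enumerate(values, start=1):
        running = weight * running + (1.0 - weight) * value
        smoothed.append(running / (1.0 - weight ** i))
    return smoothed


raw = [0.20, 0.12, 0.18, 0.10, 0.15]   # made-up per-step losses
print(ema_smooth(raw, weight=0.6))
```

Heavier smoothing makes trends easier to see, but it also lags behind the raw values, which is worth remembering when reading a "best step" off a smoothed graph.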

Also, the best LR seems to be just UNDER ChatGPT's suggested range: 3e-6

I determined this by trying out runs at: 5e-6, 4e-6, 3e-6, 2e-6

Final TE training config

The config I ended up using for final phase1 TE training is:

LR: 3e-6

Epoch count: 3 (I didn't finish, but this is relevant to the warmup value)

Warmup: 10%

Batch size: 32, accum: 1

Scheduler: constant

This was run on a 30k dataset, with tag-style-only captions.

... and as mentioned earlier, I ended up stopping it at only 250 steps!
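Since the epoch count only matters here through the warmup, it's worth working out what "10% warmup" means in steps. This sketch rests on assumptions that have not been checked against OneTrainer's scheduler code: warmup is 10% of the total optimizer steps for the full 3 epochs, it ramps linearly from 0, the dataset is exactly 30,000 images, and a final partial batch counts as a step.

```python
import math

# ASSUMPTIONS (not verified against OneTrainer): linear warmup over 10% of
# total optimizer steps, exactly 30,000 images, partial last batch kept.
DATASET_SIZE = 30_000
BATCH_SIZE = 32
EPOCHS = 3
TARGET_LR = 3e-6
WARMUP_FRACTION = 0.10

steps_per_epoch = math.ceil(DATASET_SIZE / BATCH_SIZE)   # 938
total_steps = steps_per_epoch * EPOCHS                   # 2814
warmup_steps = int(total_steps * WARMUP_FRACTION)        # 281


def lr_at(step: int) -> float:
    """LR at a given optimizer step: linear warmup, then constant."""
    if step >= warmup_steps:
        return TARGET_LR
    return TARGET_LR * step / warmup_steps


print(steps_per_epoch, total_steps, warmup_steps)
print(f"{lr_at(250):.3e}")
```

If those assumptions hold, stopping at step 250 means the run was still inside the warmup, at roughly 89% of the 3e-6 target LR.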

Retroactive thoughts on TE learning rate

I went back later and asked ChatGPT for its opinion on CLIP training. It said that the text encoder is typically more sensitive than the UNet, so it should usually get a lower LR: anywhere from 1/2 to 1/10 of the UNet value.

That certainly explains my observations.

What did you do to the datasets?

Tag limits

ChatGPT gave me further advice for TE training:

Lower Optimal Range: (5–10 tags)

Benefits most from clear, concise, and well-defined embedding associations.

Excessive tags introduce semantic noise, degrading embedding clarity and consistency.

(In comparison, for SD1.5 Unet training, it suggests a maximum tag count of 15 per image)

So for the TE training, I switched to pure tag-style captions and limited them to 10 tags per image.
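The exact way the 10-tag limit was applied isn't described here. A minimal sketch, assuming comma-separated tag captions ordered most-important-first (so truncation drops the least important tags); `limit_tags` is a hypothetical helper name:

```python
def limit_tags(caption: str, max_tags: int = 10) -> str:
    """Keep at most `max_tags` tags from a comma-separated tag caption.

    Assumes tags are ordered most-important-first. Empty entries and
    exact duplicates are dropped before truncating.
    """
    tags = []
    for raw in caption.split(","):
        tag = raw.strip()
        if tag and tag not in tags:
            tags.append(tag)
    return ", ".join(tags[:max_tags])


print(limit_tags("woman, solo, smiling, woman, , outdoors", max_tags=3))
# woman, solo, smiling
```

If captions aren't already ordered by importance, simple truncation can drop the tags that matter most, so ordering has to happen first.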

Image contents

Given that the goals were to "avoid semantic noise", I also simplified the dataset for this task.

I trimmed out just a "woman, solo" subset, and then went in by hand to remove extra people, etc. from the images.

(I plan to put the specific datasets up on HuggingFace. I'm using two at the moment. One of them is more or less https://huggingface.co/datasets/opendiffusionai/pexels-woman-solo , and the other is a subset of the CC8M images there, focused on "woman".)
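The automatic part of that step (picking the "woman, solo" subset) could look something like the sketch below. It assumes one comma-separated .txt caption per image in a single folder, which is a hypothetical layout; the hand-cleanup pass still has to be done by eye.

```python
from pathlib import Path


def has_all_tags(caption: str, required) -> bool:
    """True if every tag in `required` appears in the comma-separated caption."""
    tags = {t.strip().lower() for t in caption.split(",")}
    return set(required) <= tags


def select_subset(caption_dir, required=("woman", "solo")):
    """Return caption files (hypothetical *.txt layout) containing all required tags."""
    return [
        txt
        for txt in sorted(Path(caption_dir).glob("*.txt"))
        if has_all_tags(txt.read_text(encoding="utf-8"), required)
    ]
```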

Selecting a release candidate TE

Given that the best results were around 200 steps, I dropped my sampling interval down to 50 steps.

Then, feeling paranoid, I dropped it down to every 10 steps (and verified that the new run's samples at the 50-step marks were identical to the prior run's).

The samples at 230, 240, and 250 actually seem to look better than the one at 200.

With vs without TE Training

Left is the original. Right is a sample at step 230. Facial details are much nicer.

The only thing changed here was training the TE.

I ended up taking the 250 step result.

Current results (2025/04/01)

Here is a comparison of base SD1.5 vs. the phase 1 model (32 epochs, 1 million micro-batch steps) vs. the current phase 2 at 2 epochs, after doing the TE training.

No this is not an April Fool's joke :)

Training continues, probably for another 2 weeks at least.

For this phase, I am training on a more general subset of CC12M (compared to the TE training stage):

https://huggingface.co/datasets/opendiffusionai/cc12m-2mp-realistic

Why not keep training the TE alongside the Unet?

Because it takes up more VRAM, which means I would have to decrease batch size.

Plus.... I'm a "change one variable at a time" kinda guy anyway.

Update 2025/04/07 - validation images and crash

On the downside, I was doing a 100-epoch run, and it crashed 34 epochs in.

On the other hand, I tried using "validation image sets" for the first time, and it seems super useful.

The idea is to carve out either 10%, or in my case 150 images, from your training dataset, and tell OneTrainer, "use these as validation images, not training".

OneTrainer will then periodically compute the loss on those held-out images, i.e. how far off the current model is on images it has not been trained on.
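OneTrainer handles the held-out set through its own settings. As a tool-agnostic illustration of the idea, here is a minimal sketch of carving out a fixed, reproducible validation set by hand; the file names and the `split_validation` helper are hypothetical.

```python
import random


def split_validation(items, val_count=None, val_fraction=0.10, seed=42):
    """Split items into (train, validation) lists.

    Holds out either a fixed number of items (`val_count`, e.g. 150) or a
    fraction of the dataset. A fixed seed makes the split reproducible, so
    reruns measure validation loss on the same held-out images.
    """
    items = list(items)
    random.Random(seed).shuffle(items)
    n_val = val_count if val_count is not None else round(len(items) * val_fraction)
    return items[n_val:], items[:n_val]


train, val = split_validation([f"img_{i:05d}.jpg" for i in range(1000)], val_count=150)
print(len(train), len(val))  # 850 150
```

Keeping the split fixed across reruns matters: if the held-out images change between runs, their validation curves are no longer directly comparable.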

Results seem to be very informative. Below are the graphs for validation loss vs training loss.

Training loss keeps trending down, suggesting that the model is improving; however, validation loss says otherwise. And when I do actual comparisons between saved models, they track validation loss.

That is to say, the best saved model so far seems to be at the validation graph's lowest point, around 51k steps (epoch 18).

That being said, I'm not sure whether it would have improved later on. So I'm going to re-run the training, hopefully out to 100 (or perhaps 50) epochs, and see if validation loss ends up going down again.
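Picking "the save closest to the validation minimum" is easy to automate once the validation losses are exported. A minimal sketch, assuming the losses have already been pulled into a plain {step: loss} dict (the export itself is left out); the helper names are hypothetical. `evals_since_best` is the kind of counter an early-stopping "patience" rule would watch, though, as noted above, a later dip is still possible.

```python
def best_checkpoint(val_losses):
    """Return (step, loss) for the lowest validation loss.

    `val_losses` maps step -> validation loss. Ties go to the earliest step.
    """
    if not val_losses:
        raise ValueError("no validation losses recorded")
    return min(val_losses.items(), key=lambda kv: (kv[1], kv[0]))


def evals_since_best(val_losses):
    """How many evaluations have happened since the best one (in step order)."""
    steps = sorted(val_losses)
    best_step, _ = best_checkpoint(val_losses)
    return len(steps) - 1 - steps.index(best_step)
```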

Future Text Encoder

At some point in the not-too-distant future, I would like to actually change out the Text Encoder AGAIN, by switching to "long CLIP-L".

2025/04/10 - The future is now!

I have taken the plunge and transitioned to a retrain with Long-CLIP.

The new model is called XLLsd (article link, not model)

Do note the extra "L" in there!

Cover art note

FYI: yes the cover art at the top was generated by this model. It was done after the TE training, in the middle of "phase 2" training, but I hadn't even completed 1 epoch yet.

2025/09/10 - REEEEdo.....

I should ideally make a new chapter for this. Long story short: now that I intend to eventually (hopefully) use T5 for text, I'm experimenting with a whole new toolset, which I wrote.

https://github.com/ppbrown/ai-training/

It's a pure CLI-based training program. I've added stuff like a caching system I like more than others, and ways to train specific layers. Also better logging to TensorBoard.

Plus, I'm counting steps "correctly" now. Previously with OneTrainer, if you were running batch size 16, accum 16, it would count steps as "micro-batch" steps, whereas the "proper" way is to count a single step after all the accumulation is run. So I'll edit the step count of my earlier model up above to match.
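To make the two counting conventions concrete: with batch size 16 and accumulation 16, the weights are only updated once every 16 micro-batches (an effective batch of 256 images). A minimal sketch in plain Python; the loop body only stands in for real forward/backward and optimizer calls.

```python
def effective_batch(batch_size: int, accum: int) -> int:
    """Images contributing to each optimizer (weight) update."""
    return batch_size * accum


def count_steps(num_microbatches: int, accum: int):
    """Count micro-batch steps vs. optimizer steps in a gradient-accumulation loop."""
    micro_steps = 0
    optimizer_steps = 0
    for _ in range(num_microbatches):
        micro_steps += 1          # forward + backward on one micro-batch; grads accumulate
        if micro_steps % accum == 0:
            optimizer_steps += 1  # optimizer.step() / zero_grad() would happen here
    return micro_steps, optimizer_steps


print(effective_batch(16, 16))     # 256
print(count_steps(1_000_000, 16))  # (1000000, 62500)
```

That second line is the same conversion as the phase 1 correction: 1 million micro-batch steps at b16a16 is 62,500 optimizer steps.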

And to make sure I'm doing things right, I'm attempting to redo (and maybe improve) the XLsd model.

.... and it is turning out to be a pain in the butt.

The good news is, I'm hopefully going to document things better.

More good news: I found the "proper" way to do mixed-precision training.

The bad news: it's fast, but not good enough for this.

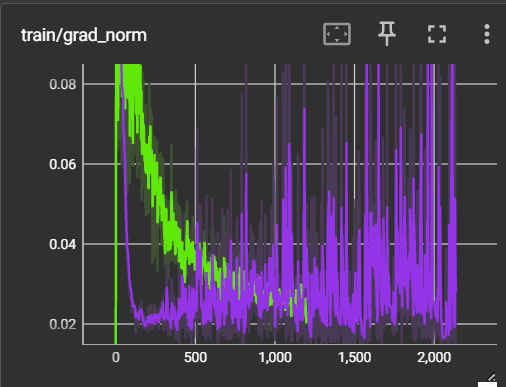

My extended logging shows me the "average gradient change" per step. Using mixed mode, it starts out good... but then the updates start spiking and splintering.
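The exact "average gradient change" metric isn't defined here. One plausible version is logging, every step, the mean absolute gradient value and the global L2 gradient norm across all trainable parameters; spikes in either would show up as the kind of splintering described. A dependency-free sketch, with plain lists standing in for gradient tensors (in PyTorch these would come from each parameter's `.grad`):

```python
import math


def grad_stats(grads):
    """Return (mean |g|, global L2 norm) over all gradient values for one step.

    `grads` is a list of per-parameter gradients, each flattened to a list of
    floats (stand-ins for real gradient tensors).
    """
    abs_sum = 0.0
    sq_sum = 0.0
    count = 0
    for g in grads:
        for x in g:
            abs_sum += abs(x)
            sq_sum += x * x
            count += 1
    if count == 0:
        raise ValueError("no gradient values")
    return abs_sum / count, math.sqrt(sq_sum)


print(grad_stats([[3.0, -4.0], [0.0]]))
```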

But now that I've gone to full FP32, it is behaving more as expected. Here's a graph:

It's unfortunate that the fp32 run (green) is "behind" the other one, but trust me, it smooths out :)

The trouble, though, is that there are multiple changes between these two runs: different optimizer, different LR, ....

But for the record, green is using:

d_lion, b16a16, constant scheduler. The LR adjusts to 3.6e-5 (after about 210 steps of auto-warmup), whereas the other run is fixed at roughly 2e-6, using ADAMW8.

Unlike previously, this is an all-square dataset! (250k images)

So, lots of improvements. More later, when I have results to show from the run. It's been under a day so far, and it hasn't even completed a single epoch yet (1600 steps). Then perhaps I'll move this to a new chapter.