Introduction

In my effort to train a model with a large number of characters (LHC), I realized that a lot of people would be interested in the new character knowledge but already had specific models they really liked in terms of usage or aesthetics, and didn't want to lose that just to have a few more characters to play around with.

Luckily, it is possible to transfer trained knowledge between models (with some caveats).

What exactly are we trying to do?

In short, we are trying to transplant training progress from one model to another.

More specifically:

Suppose we have two models, "A" and "B", and we train a specific "concept_a" onto Model "A", resulting in Model "A+concept_a". We want to transfer "concept_a" onto Model "B" in such a way that the result is, hopefully, similar to the Model "B+concept_a" we would have gotten by training "concept_a" onto Model "B" in the first place.
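Writing θ_X for the weights of model X (notation introduced here for illustration only), the goal can be summarized as extracting what training changed on "A" and reusing that change on "B":

$$
\Delta_{\text{concept\_a}} = \theta_{A+\text{concept\_a}} - \theta_{A},
\qquad
\theta_{B+\text{concept\_a}} \approx \theta_{B} + \Delta_{\text{concept\_a}}
$$

The approximation would only be exact if training moved both bases by the same amount; the Conclusion discusses why that is generally not the case.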

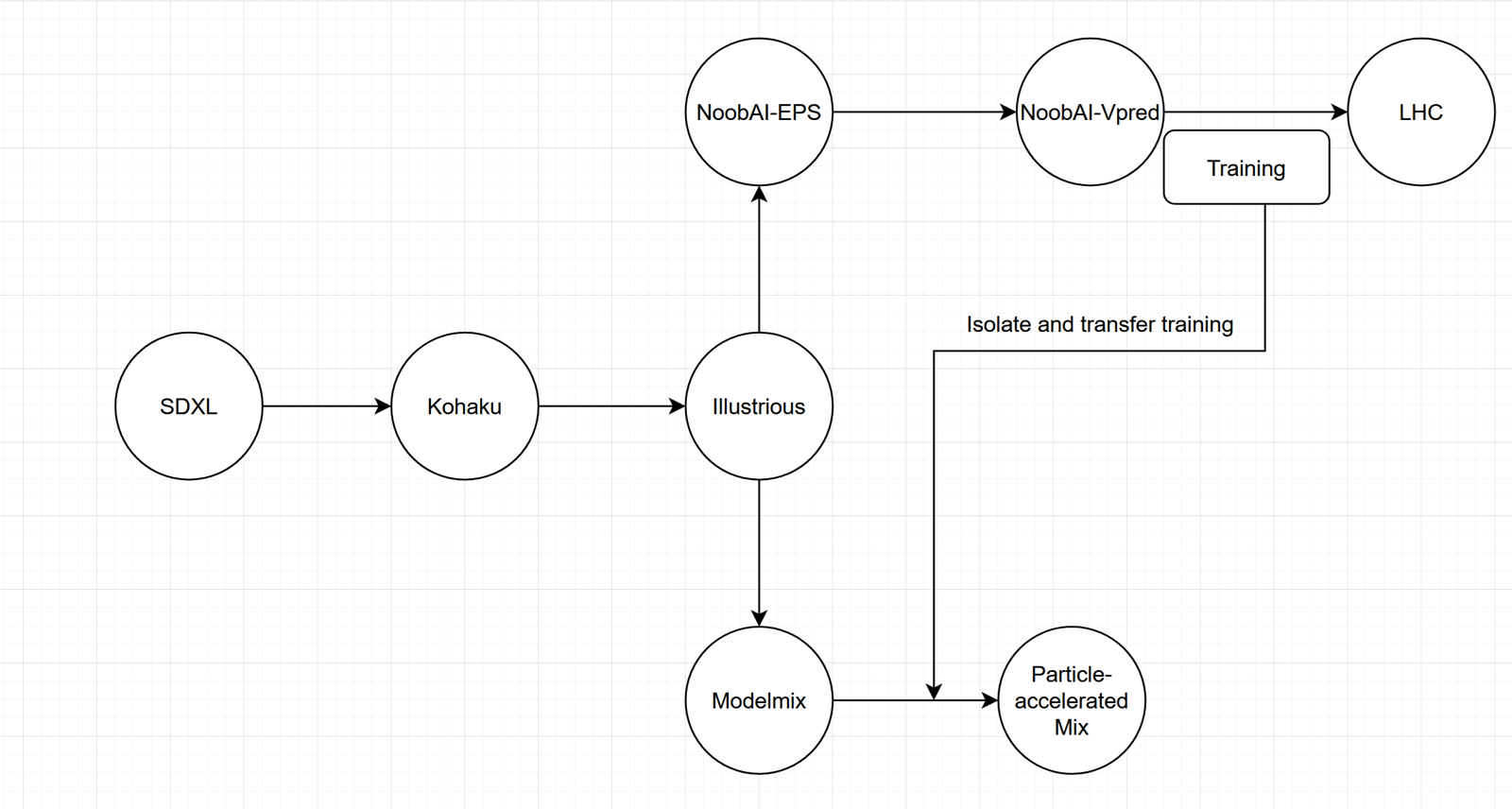

An example of this is https://civitai.com/models/1028575; the cover image of this article shows the approximate "family tree" of its models.

How can you do this?

The process itself is rather simple and no more difficult than creating a regular model mix. However, instead of doing a normal merge, where we take a kind of "average" of the model weights, we take the difference between the model with the concept we want and its base model, and add that difference to a different model.
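A minimal sketch of this "add difference" operation, assuming each checkpoint has already been loaded into a dict mapping tensor names to weights. Plain Python lists of floats stand in for tensors to keep it dependency-free. The function name `add_difference` and the `alpha` strength parameter are hypothetical illustrations, not taken from the ComfyUI workflow that accompanies this article (which relies on the mecha nodes and may use a more elaborate recipe).

```python
from typing import Dict, List

# Hypothetical stand-in for a checkpoint: tensor name -> flattened weights.
StateDict = Dict[str, List[float]]


def add_difference(
    new_base: StateDict,
    old_base: StateDict,
    trained: StateDict,
    alpha: float = 1.0,
) -> StateDict:
    """Return new_base + alpha * (trained - old_base), tensor by tensor.

    Tensors missing from old_base or trained, or whose sizes disagree,
    are copied unchanged from new_base.
    """
    merged: StateDict = {}
    for name, base_w in new_base.items():
        old_w = old_base.get(name)
        trained_w = trained.get(name)
        if (
            old_w is None
            or trained_w is None
            or not len(base_w) == len(old_w) == len(trained_w)
        ):
            merged[name] = list(base_w)  # nothing to transfer for this tensor
            continue
        # The "concept" is what training changed: trained - old_base.
        merged[name] = [
            b + alpha * (t - o) for b, t, o in zip(base_w, trained_w, old_w)
        ]
    return merged


if __name__ == "__main__":
    old_base = {"w": [1.0, 2.0]}
    trained = {"w": [1.5, 2.0]}  # training nudged the first weight by +0.5
    new_base = {"w": [3.0, -1.0], "extra": [0.25]}
    print(add_difference(new_base, old_base, trained))
    # {'w': [3.5, -1.0], 'extra': [0.25]}
```

With `alpha = 1.0` the whole training delta is transplanted; with real checkpoints the same loop would run over tensors (for example, loaded with the `safetensors` library) rather than lists.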

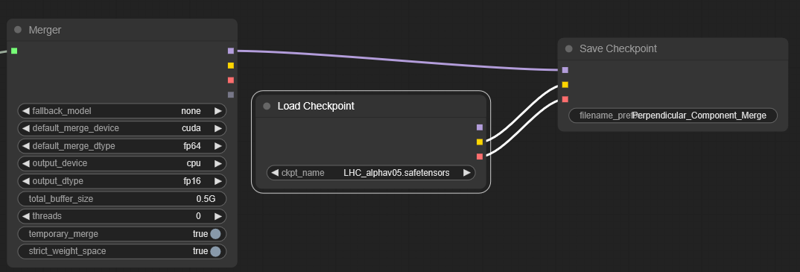

In the attachments of this article, you can find a ComfyUI workflow that does exactly this.

Disclaimer: The workflow requires the custom mecha-nodes bundle.

Quick tutorial on the workflow:

Step 1:

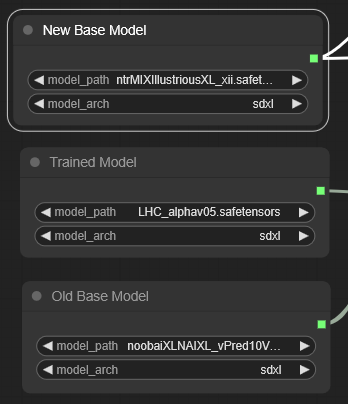

Select your old and new "bases" as well as the model that has been trained on the old base model with the concepts you want to transfer.
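Because the difference is taken and added tensor by tensor, all three checkpoints selected here need to share the same architecture. As a hedged sketch (the helper name is hypothetical and not part of the workflow), a quick pre-flight check over name-to-weights dicts could flag tensors that are missing or differ in size (shape, for real tensors):

```python
from typing import Dict, List

# Hypothetical stand-in for a checkpoint: tensor name -> flattened weights.
StateDict = Dict[str, List[float]]


def find_incompatible_tensors(
    old_base: StateDict, trained: StateDict, new_base: StateDict
) -> List[str]:
    """List tensor names that are missing from, or differ in size across, the three models."""
    problems = []
    all_names = set(old_base) | set(trained) | set(new_base)
    for name in sorted(all_names):
        # None marks a model that lacks this tensor entirely.
        sizes = {
            len(model[name]) if name in model else None
            for model in (old_base, trained, new_base)
        }
        # Compatible only if every model has the tensor and all sizes agree.
        if None in sizes or len(sizes) != 1:
            problems.append(name)
    return problems


if __name__ == "__main__":
    old_base = {"a": [0.0, 0.0], "b": [1.0]}
    trained = {"a": [0.1, 0.0], "b": [1.2]}
    new_base = {"a": [0.5, 0.5], "b": [2.0, 3.0]}
    print(find_incompatible_tensors(old_base, trained, new_base))
    # ['b']
```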

Step 2:

In many cases, you will also want the final model to use a specific CLIP and VAE. To do this, specify their source(s) near the end of the workflow and load them separately.

For example, to use the CLIP and VAE of LHC, load the LHC checkpoint and connect its CLIP and VAE outputs to the corresponding inputs of the Save Checkpoint node.
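Conceptually, this step keeps the merged UNet weights and takes the text encoder (CLIP) and VAE weights from a donor checkpoint. A minimal sketch over name-to-weights dicts, assuming the key prefixes used by SDXL single-file checkpoints (`conditioner.embedders.` for the text encoders, `first_stage_model.` for the VAE); other architectures use different prefixes, and the helper name is hypothetical:

```python
from typing import Dict, List, Tuple

# Hypothetical stand-in for a checkpoint: tensor name -> flattened weights.
StateDict = Dict[str, List[float]]

# Assumed SDXL single-file key prefixes for the text encoders and the VAE.
CLIP_VAE_PREFIXES: Tuple[str, ...] = ("conditioner.embedders.", "first_stage_model.")


def take_clip_and_vae_from(
    merged: StateDict,
    donor: StateDict,
    prefixes: Tuple[str, ...] = CLIP_VAE_PREFIXES,
) -> StateDict:
    """Keep merged's other tensors, but replace CLIP/VAE tensors with donor's."""
    # Drop the merged model's own CLIP and VAE tensors...
    result = {k: v for k, v in merged.items() if not k.startswith(prefixes)}
    # ...and copy in the donor's CLIP and VAE tensors instead.
    result.update({k: v for k, v in donor.items() if k.startswith(prefixes)})
    return result


if __name__ == "__main__":
    merged = {
        "model.diffusion_model.w": [1.0],
        "conditioner.embedders.0.w": [2.0],
        "first_stage_model.w": [3.0],
    }
    donor = {
        "model.diffusion_model.w": [9.0],
        "conditioner.embedders.0.w": [5.0],
        "first_stage_model.w": [6.0],
    }
    print(take_clip_and_vae_from(merged, donor))
    # {'model.diffusion_model.w': [1.0], 'conditioner.embedders.0.w': [5.0], 'first_stage_model.w': [6.0]}
```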

Step 3:

Start the workflow. This will take a while, but eventually you will have a file named something like "perpendicular_component_merge.safetensors" in the output folder of your ComfyUI installation.

Conclusion

So, does this mean that this method can replace finetuning multiple models on the same dataset? Sadly, no. While it transfers knowledge better than regular merging or LoRA extraction, it is still not comparable to finetuning the other model directly. Since the two bases differ from each other, applying the changes incurred during training to the new base will not end up in the same place that training on it would have. In general, the more degrees of separation there are between the bases, the worse the result will be. The two bases can, of course, happen to be coincidentally similar, resulting in a better model, but this usually cannot be predicted. In my experience, though, as long as one model is very close to a direct ancestor of the other, the result will still be useful (see, for example, the IterComp merges, which use the IterComp model trained on top of base SDXL).
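The article gives no formula for "degrees of separation". One rough, assumed way to put a number on how far apart two bases are is the relative L2 distance between their shared weights, ‖θ_new − θ_old‖ / ‖θ_old‖. The helper below is hypothetical and purely heuristic: a smaller value suggests the bases are closer, but it does not predict merge quality, and no threshold is implied.

```python
import math
from typing import Dict, List

# Hypothetical stand-in for a checkpoint: tensor name -> flattened weights.
StateDict = Dict[str, List[float]]


def relative_distance(old_base: StateDict, new_base: StateDict) -> float:
    """Relative L2 distance ||new - old|| / ||old|| over tensors both models share.

    Tensors whose sizes differ are skipped. Returns math.inf if nothing
    comparable was found (or old_base is all zeros).
    """
    diff_sq = 0.0
    norm_sq = 0.0
    for name in old_base.keys() & new_base.keys():
        old_w, new_w = old_base[name], new_base[name]
        if len(old_w) != len(new_w):
            continue  # not comparable, e.g. different architectures
        diff_sq += sum((n - o) ** 2 for n, o in zip(new_w, old_w))
        norm_sq += sum(o * o for o in old_w)
    return math.sqrt(diff_sq / norm_sq) if norm_sq > 0 else math.inf


if __name__ == "__main__":
    old_base = {"w": [3.0, 4.0]}
    print(relative_distance(old_base, {"w": [3.0, 4.0]}))  # 0.0
    print(relative_distance(old_base, {"w": [0.0, 0.0]}))  # 1.0
```

Comparing the distance from the old base to several candidate new bases gives a rough ordering of which one is closest, which, per the reasoning above, is the kind of target where the transfer tends to hold up best.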

Afterword

Hopefully you found this article helpful, or at least interesting. If you have any questions, or suggestions on things I could explain more clearly or should include, I'm always happy to get feedback.