2025-07-29:

Just a heads-up: this tutorial was written early on, right when Wan2.1 came out. If you want to run Wan2.1 and Wan2.2 much faster, you can just download these simple but useful workflows:

Wan2.2:

Text-2-Image&Video, Image-2-Video:

https://civitai.com/models/1820099/wan22i2vt2i14bgguftestedworkswell

Wan2.1:

Image-2-Video:

https://civitai.com/models/1801984/wan21-image-to-videolowvramggufsimple

Text-2-Image&Video:

https://civitai.com/models/1760289/merida-brave-2012

(I didn't release a T2I workflow for Wan2.1, but you can just drag the example image into ComfyUI. This workflow is what I use daily: it's simple, easy to use, and I've even added NAG (Normalized Attention Guidance), so you can use negative prompts even when CFG is set to 1.)

Wan2.1 Vace T2I_Inpainting&out_gguf_8g_LowVram_feet&hand_fix_easy:

https://civitai.com/models/1805031/wan21-vace-t2iinpaintingandoutgguf8glowvramfeetandhandfixeasy

Wan2.1_Vace_T2I_R2V_controlnet_LowVram_StepDistill-8step_easy_workflow:

https://civitai.com/models/1789799/wan21vacet2ir2vcontrolnetlowvramstepdistill-8stepeasyworkflow

Want to try Wan 2.1 but don’t know where to start? Look no further—this is the right place!

ComfyUI:

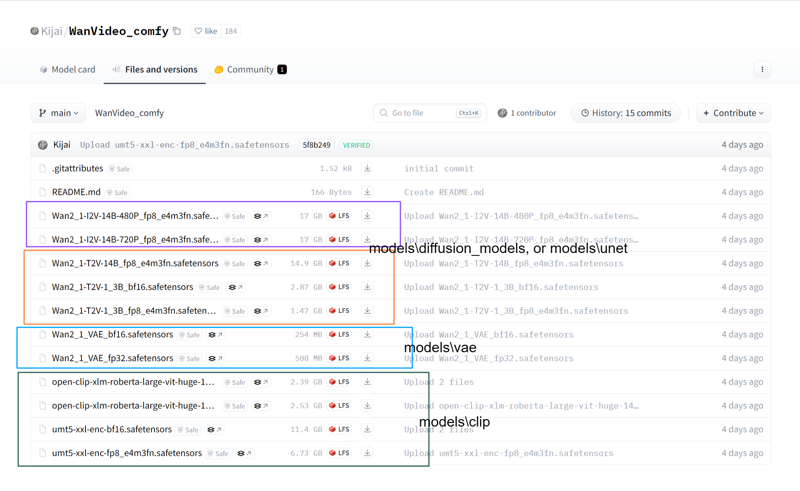

Kijai/WanVideo_comfy

1. Place the downloaded models in the designated location.

2. Install this node: https://github.com/kijai/ComfyUI-WanVideoWrapper (You can install it manually. Make sure Git is installed first, then go to ComfyUI_windows_portable\ComfyUI\custom_nodes, type 'cmd' in the address bar, and run: git clone https://github.com/kijai/ComfyUI-WanVideoWrapper.git)

3. Install the dependencies: go to ComfyUI_windows_portable, type 'cmd' in the address bar, and run:

python_embeded\python.exe -m pip install -r ComfyUI\custom_nodes\ComfyUI-WanVideoWrapper\requirements.txt

4. Drag the workflow into ComfyUI, and you’re ready to start using it.
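
If the workflow loads with missing nodes, it can help to confirm that steps 2 and 3 landed in the right folders. Here is a minimal sketch that only checks the folder layout described above. The function name `check_wrapper_install` is my own (hypothetical), and it does not verify that the dependencies were actually installed.

```python
from pathlib import Path


def check_wrapper_install(portable_root):
    """Return a list of layout problems found under a ComfyUI portable folder."""
    root = Path(portable_root)
    node_dir = root / "ComfyUI" / "custom_nodes" / "ComfyUI-WanVideoWrapper"
    problems = []
    # The embedded interpreter used by the pip command in step 3.
    if not (root / "python_embeded" / "python.exe").is_file():
        problems.append("python_embeded/python.exe not found (wrong root folder?)")
    # The node folder created by the git clone in step 2.
    if not node_dir.is_dir():
        problems.append("ComfyUI-WanVideoWrapper is not in custom_nodes")
    elif not (node_dir / "requirements.txt").is_file():
        problems.append("requirements.txt is missing from the node folder")
    return problems


if __name__ == "__main__":
    # Assumed location: run this from the folder that contains
    # ComfyUI_windows_portable, or pass your own path instead.
    for problem in check_wrapper_install("ComfyUI_windows_portable"):
        print("Problem:", problem)
```

An empty result only means the files are where the steps above put them. You still need to restart ComfyUI after installing the node.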

Model link: https://huggingface.co/Kijai/WanVideo_comfy/tree/main

Model Path:

(T2V, I2V checkpoints, VAE, CLIP, T5. You can mix and match the models based on your configuration needs, but I recommend choosing smaller ones)

kijai is always the first to develop and adapt nodes for open-source video generation models, which is truly remarkable. However, running kijai’s workflows requires some knowledge and isn’t straightforward for beginners. That’s why I’ll offer alternative options.

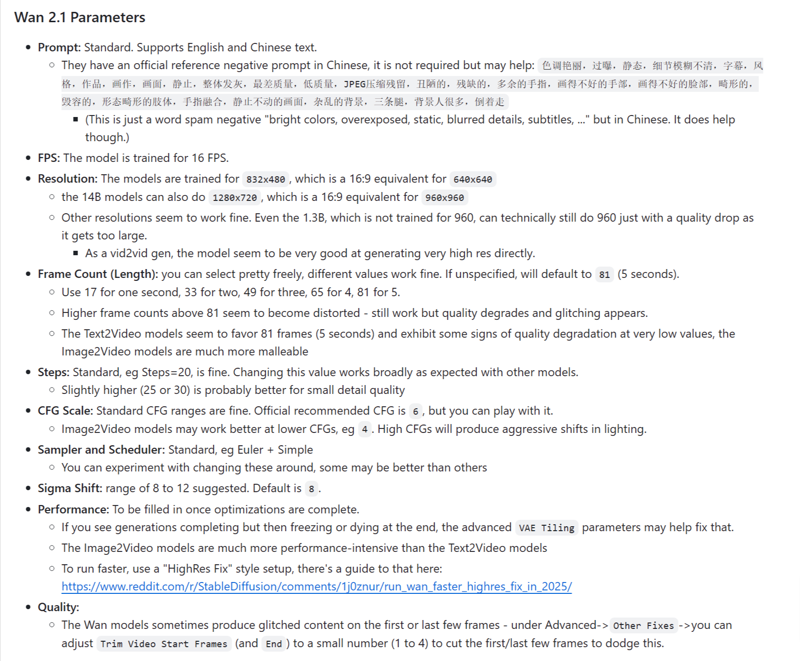

For this workflow, pay attention mainly to these two issues:

1--A dimension error may occur if the resolution is set too small.

2--Make sure the models are the ones shown in the screenshot. Models downloaded from elsewhere might sometimes be incompatible with the workflow and trigger errors.
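
For the first issue, one way to stay out of trouble is to snap the width and height before typing them into the workflow. This is a rough sketch, not something taken from the workflow itself: the multiple of 16 and the floor of 256 are my own assumptions, not documented limits of the nodes, so adjust them if you still get the error.

```python
def snap_resolution(width, height, multiple=16, minimum=256):
    """Round each side up to `multiple` and enforce a floor of `minimum`.

    Both defaults are illustrative assumptions, not documented limits.
    """
    def snap(value):
        value = max(value, minimum)
        # Ceiling division: round up to the next multiple,
        # leaving already-aligned values unchanged.
        return -(-value // multiple) * multiple

    return snap(width), snap(height)


if __name__ == "__main__":
    print(snap_resolution(830, 480))
    print(snap_resolution(100, 100))
```

With these assumed defaults, 830x480 becomes 832x480 and 100x100 is raised to 256x256.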

SwarmUI:

I assume you’ve already installed SwarmUI (it’s really easy to install) and double-clicked 'update-windows.bat' to update to version 'SwarmUI v0.9.5.0 (2025-02-26 22:08:10)'.

Next, just select the models you need and place them in the correct locations.

VAE, CLIP, and T5 models can be downloaded directly from kijai’s model link; they work here too.

You can choose safetensors or GGUF checkpoints, and there are three sources to pick from. Generally, if you’ve already downloaded kijai’s checkpoints, you don’t need to download them again. However, I usually opt for the GGUF format to reduce VRAM usage:

safetensors:

1-Comfy-Org

https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/tree/main/split_files/diffusion_models

2-kijai

https://huggingface.co/Kijai/WanVideo_comfy/tree/main

GGUF:

3-city96

T2V 14B https://huggingface.co/city96/Wan2.1-T2V-14B-gguf/tree/main

I2V 480p https://huggingface.co/city96/Wan2.1-I2V-14B-480P-gguf/tree/main

I2V 720p https://huggingface.co/city96/Wan2.1-I2V-14B-720P-gguf/tree/main
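
If you would rather script the download than click through the pages, the GGUF repositories above can be turned into direct file links. A small sketch: the repository IDs come from the links above, while the dictionary keys and the file name in the example are placeholders I made up, so check each repository page for the real file names.

```python
# Repository IDs taken from the city96 links above.
GGUF_REPOS = {
    "t2v-14b": "city96/Wan2.1-T2V-14B-gguf",
    "i2v-480p": "city96/Wan2.1-I2V-14B-480P-gguf",
    "i2v-720p": "city96/Wan2.1-I2V-14B-720P-gguf",
}


def download_url(variant, filename):
    """Build a direct Hugging Face download link for one file in a repo."""
    repo = GGUF_REPOS[variant]
    return f"https://huggingface.co/{repo}/resolve/main/{filename}"


if __name__ == "__main__":
    # "example.gguf" is a placeholder, not a real file in the repository.
    print(download_url("i2v-480p", "example.gguf"))
```

Smaller quantized files generally need less VRAM, which is the reason for choosing GGUF in the first place.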

I got halfway through writing this and realized I don’t need to continue, because the SwarmUI author has already explained it very clearly in the official documentation. So far I haven’t found any section that needs extra explanation from me. I recommend starting with the 1.3B T2V model at 480P resolution for your first attempt.

https://github.com/mcmonkeyprojects/SwarmUI/blob/master/docs/Video%20Model%20Support.md#wan-21

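Official reference negative prompt: 色调艳丽,过曝,静态,细节模糊不清,字幕,风格,作品,画作,画面,静止,整体发灰,最差质量,低质量,JPEG压缩残留,丑陋的,残缺的,多余的手指,画得不好的手部,画得不好的脸部,畸形的,毁容的,形态畸形的肢体,手指融合,静止不动的画面,杂乱的背景,三条腿,背景人很多,倒着走

(English translation, for reference: garish colors, overexposed, static, blurry details, subtitles, style, artwork, painting, picture, still, overall gray, worst quality, low quality, JPEG compression artifacts, ugly, incomplete, extra fingers, poorly drawn hands, poorly drawn face, deformed, disfigured, malformed limbs, fused fingers, motionless frame, cluttered background, three legs, many people in the background, walking backwards)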
