Just to get a quick feel for what directly finetuning a model is all about, I wanted to do a quick test... but didn't really have a good idea of what to test 😅

Meanwhile, I had a model stashed away for a while. Initially nicknamed "Juicy", it was a test of importing Psychonex into Illustrious (I first mentioned it here; it was the base used to create the CocktailMix JuiceV1 LoRA).

This base model can do nice semi-realistic pictures in the style I like by default:

But if I use FreeU, SAG, Detail Daemon and two LoRAs to boost the result, I get something "More" from it:

The difference is minimal in this demo, but after a few generations, I was wondering if I should merge the LoRAs into the checkpoint... but I would not get the influence of the Forge plugins this way... so, let's try finetuning on generated pictures!

First, I generated a bunch of prompts using DTG, and then I generated the associated pictures. That's 1300 in total after cleaning up a few (around 200) bad ones. What's cool about generating prompts this way is that I could use them as tag captions afterward :D

And then, using BrainBlip, I generated a natural-language caption for each image.
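Before merging anything into metadata, it can help to check that every picture really has both of its text files. Here is a minimal sketch of such a check. The `.prompt` extension is the one used for the tag prompts in the commands of this post; the `.caption` extension for the natural-language captions and the function name `find_incomplete` are assumptions for illustration only.

```python
from pathlib import Path

# Image extensions to look for (an assumption; adjust to your dataset).
IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}


def find_incomplete(data_dir, sidecar_exts=(".prompt", ".caption")):
    """Return {image name: [missing sidecar extensions]} for incomplete images.

    ".prompt" holds the generated tag prompt; ".caption" is an assumed
    extension for the natural-language caption.
    """
    missing = {}
    for image in sorted(Path(data_dir).iterdir()):
        if image.suffix.lower() not in IMAGE_EXTS:
            continue
        # A sidecar file shares the image's stem and differs only by extension.
        absent = [ext for ext in sidecar_exts if not image.with_suffix(ext).exists()]
        if absent:
            missing[image.name] = absent
    return missing
```

Calling `find_incomplete("data")` returns an empty dict when the dataset is complete, so anything it lists is worth fixing or removing before going further.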

It was now time to try out the articles I had found about finetuning:

1) Merge the pictures and caption files to create a metadata JSON file for the dataset

python ~/kohya_ss/sd-scripts/finetune/merge_captions_to_metadata.py $(pwd)/data $(pwd)/meta_cap.json

2) Add the associated prompts, extracted as text files, to the metadata

python ~/kohya_ss/sd-scripts/finetune/merge_dd_tags_to_metadata.py --caption_extension '.prompt' --in_json $(pwd)/meta_cap.json $(pwd)/data $(pwd)/meta_full.json

3) Generate the latents from the images

python ~/kohya_ss/sd-scripts/finetune/prepare_buckets_latents.py $(pwd)/data $(pwd)/meta_full.json $(pwd)/meta_with_latent.json ~/kohya_ss/models/sdxl_vae.safetensors --batch_size 1 --mixed_precision bf16 --min_bucket_reso 256 --max_bucket_reso 2048 --max_resolution 1024,1024

4) Run the finetuning! (after a few mini tests, and following what I tested with LoRA, I ran it with a different set of parameters than usual)

accelerate launch --dynamo_backend no --dynamo_mode default --mixed_precision bf16 --num_processes 1 --num_machines 1 --num_cpu_threads_per_process 2 ~/kohya_ss/sd-scripts/sdxl_train.py --lr_scheduler_type=CosineAnnealingLR --lr_scheduler_args T_max=13000 eta_min=1e-7 --optimizer_type=came_pytorch.CAME --optimizer_args weight_decay=0.01 --vae=~/kohya_ss/models/sdxl_vae.safetensors --pretrained_model_name_or_path=~/webui/models/Stable-diffusion/Juicy.safetensors --in_json=$(pwd)/meta_with_latent.json --train_data_dir=$(pwd)/data --train_batch_size=1 --learning_rate=5e-6 --gradient_checkpointing --gradient_accumulation_steps=1 --max_data_loader_n_workers=1 --persistent_data_loader_workers --mixed_precision=bf16 --full_bf16 --save_model_as=safetensors --save_precision=fp16 --save_every_n_epochs=5 --max_train_epochs=10 --max_grad_norm=0.0 --xformers --output_dir=$(pwd)/result/ --output_name=HoJ --logging_dir=$(pwd)/logs --log_with=tensorboard --max_token_length=225 --cache_latents --sample_sampler="euler_a" --sample_prompts=$(pwd)/prompt.txt --sample_every_n_steps=650

The main idea was to start with a "standard" LR, lower it as training went on to avoid overfitting but without going too low (hence the eta_min for the LR scheduler), and use a more modern optimizer (CAME).
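To make the LR idea concrete, here is a small sketch of the closed-form cosine annealing curve using the values from the command above (learning rate 5e-6, eta_min 1e-7, T_max 13000). It assumes the scheduler is stepped once per training step and that there are no restarts; the function name `cosine_annealing_lr` is just for illustration, not something from the training scripts.

```python
import math


def cosine_annealing_lr(step, base_lr=5e-6, eta_min=1e-7, t_max=13000):
    """Learning rate at `step` for cosine annealing without restarts.

    Starts at base_lr (step 0) and decays smoothly to eta_min (step t_max).
    Assumes one scheduler step per training step.
    """
    cosine = math.cos(math.pi * step / t_max)
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + cosine)


# Print the LR at a few points of the 13000-step run.
for step in (0, 3250, 6500, 9750, 13000):
    print(f"step {step:>5}: lr = {cosine_annealing_lr(step):.3e}")
```

The curve is flat near both ends and steepest in the middle, so the model spends the last stretch of training at a learning rate close to eta_min rather than dropping to zero.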

With 1300 pictures and 10 epochs at batch size 1, that's a 13000-step run and, to be fair, I think it could have gone on for longer. Looking at TensorBoard, the loss was going down but soooooo slowly ^^;
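The step count comes from simple arithmetic on the training settings. The sketch below reproduces it, along with the number of sample rounds implied by sampling every 650 steps. The helper name `training_plan` is hypothetical, and the calculation ignores how bucketing can leave partially filled batches, which makes no difference at batch size 1.

```python
import math


def training_plan(num_images, epochs, batch_size=1, grad_accum=1, sample_every=650):
    """Rough step arithmetic for a finetuning run (ignores bucketing effects)."""
    # One optimizer step consumes batch_size * grad_accum images.
    steps_per_epoch = math.ceil(num_images / (batch_size * grad_accum))
    total_steps = steps_per_epoch * epochs
    return {
        "steps_per_epoch": steps_per_epoch,
        "total_steps": total_steps,
        "sample_rounds": total_steps // sample_every,
    }


print(training_plan(num_images=1300, epochs=10))
# → {'steps_per_epoch': 1300, 'total_steps': 13000, 'sample_rounds': 20}
```

This is also why T_max is set to 13000 in the scheduler arguments: the cosine curve reaches eta_min exactly at the last step of the run.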

But to run this fast enough, I had to rent a good GPU instance (it took 31GB of VRAM as-is; it could probably be configured better). So, let's stop here and see the result:

Nice! But not exactly what I was looking for. Should I trash this? ... NO! These are still new, interesting weights that could be used in another way. Time to start Supermerger.

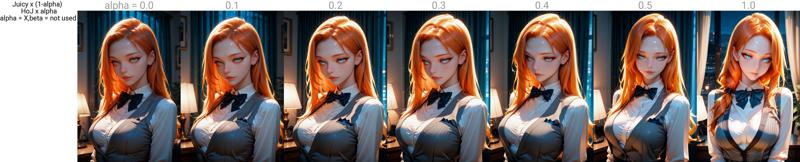

Here are a few XYZ plots of weighted sums between Juicy and the current result:

Normal

CosineA

CosineB

I really feel that something good can come out of this. This one, for example:

But I think now would be a good time to try a few block merge tests to lock in a final result for release :D

EDIT: a quick XYZ plot to check the influence of each IN/OUT pair. The 01 pair is probably the one I want to avoid merging :D

Look forward to the release of a brand new model :D

EDIT2:

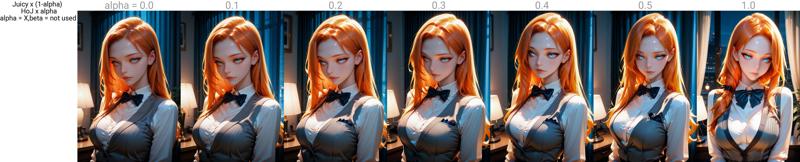

Current test with

Juicy x (1-alpha) + HoJ x alpha (0.5,0.25,0.0,0.15,0.15,0.15,0.15,0.15,0.15,0.15,0.5,0.25,0.0,0.15,0.15,0.15,0.15,0.15,0.15,0.15)

cosineA
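For readers who have not used block merging, here is a rough sketch of what a per-block weighted sum like the one above does. It is not Supermerger's code and it only covers the plain weighted sum, not the cosineA mode. Several things are assumptions: that the 20 values map to BASE, IN00 to IN08, M00 and OUT00 to OUT08 in that order, that keys can be classified by the `input_blocks` / `middle_block` / `output_blocks` part of their name, and that everything else counts as BASE (a simplification). Weights are plain lists of floats here to keep the example self-contained.

```python
import re

# Assumed label order for the 20 block weights (not confirmed by the post).
LABELS = (
    ["BASE"]
    + [f"IN{i:02d}" for i in range(9)]
    + ["M00"]
    + [f"OUT{i:02d}" for i in range(9)]
)

# The 20 alpha values from the post, in the order they are listed.
ALPHAS = [0.5, 0.25, 0.0] + [0.15] * 7 + [0.5, 0.25, 0.0] + [0.15] * 7


def block_label(key):
    """Map a checkpoint key to a block label (simplified, hypothetical rule)."""
    match = re.search(r"(input|output)_blocks\.(\d+)\.", key)
    if match:
        prefix = "IN" if match.group(1) == "input" else "OUT"
        return f"{prefix}{int(match.group(2)):02d}"
    if "middle_block." in key:
        return "M00"
    return "BASE"  # everything else is lumped into BASE in this sketch


def weighted_sum(model_a, model_b, alphas=ALPHAS):
    """Compute A x (1 - alpha) + B x alpha, with alpha chosen per block."""
    alpha_by_label = dict(zip(LABELS, alphas))
    merged = {}
    for key, weights_a in model_a.items():
        alpha = alpha_by_label[block_label(key)]
        weights_b = model_b[key]
        merged[key] = [(1 - alpha) * a + alpha * b for a, b in zip(weights_a, weights_b)]
    return merged
```

Under the assumed label order, the two 0.0 entries land on IN01 and OUT01, so those blocks keep Juicy's weights untouched, which lines up with wanting to avoid merging the 01 pair.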