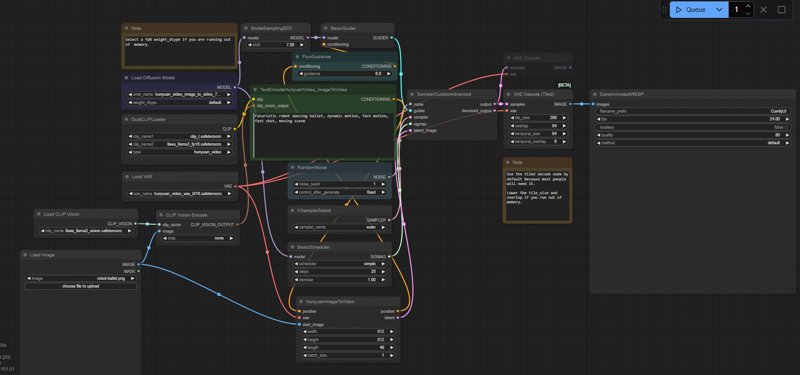

Objective: test the new Hunyuan I2V (image-to-video) model.

See the ComfyUI blog for the official information: https://blog.comfy.org/p/hunyuan-image2video-day-1-support

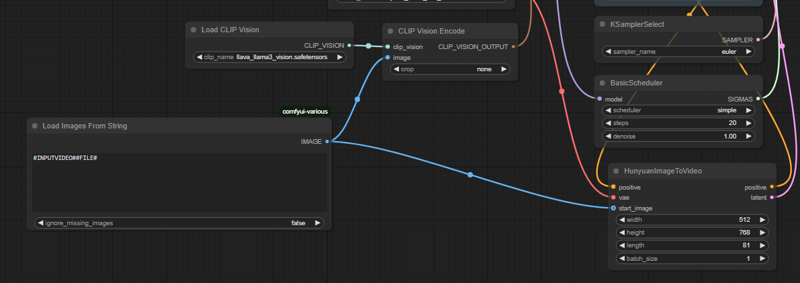

After you update ComfyUI to the latest version, drag and drop the image at https://raw.githubusercontent.com/Comfy-Org/example_workflows/refs/heads/main/hunyuan-video/i2v/robot.webp onto your ComfyUI page. ComfyUI will then show you which files are missing and where to download them.
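If you prefer to save the workflow image locally first and then drag the file into ComfyUI, a minimal sketch using only the Python standard library could look like this. The helper names `local_name` and `download_workflow` are my own, not part of ComfyUI, and the destination folder is an assumption.

```python
from pathlib import Path
from urllib.parse import urlparse
from urllib.request import urlretrieve

# URL of the example workflow image given in the article.
WORKFLOW_URL = (
    "https://raw.githubusercontent.com/Comfy-Org/example_workflows/"
    "refs/heads/main/hunyuan-video/i2v/robot.webp"
)


def local_name(url: str) -> str:
    """Return the file name at the end of the URL path (hypothetical helper)."""
    return Path(urlparse(url).path).name


def download_workflow(url: str = WORKFLOW_URL, dest_dir: str = ".") -> Path:
    """Download the workflow image into dest_dir and return its local path."""
    dest = Path(dest_dir) / local_name(url)
    urlretrieve(url, str(dest))  # saves the file unchanged
    return dest
```

Calling `download_workflow()` should leave `robot.webp` in the current folder. Keep the file as downloaded: converting or re-saving it may strip the information ComfyUI reads when you drop the image onto the page.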

The demo starts from an image and produces a 49-frame video at 512x512.

For my test I uploaded a 512x768 image and changed the size in the workflow.

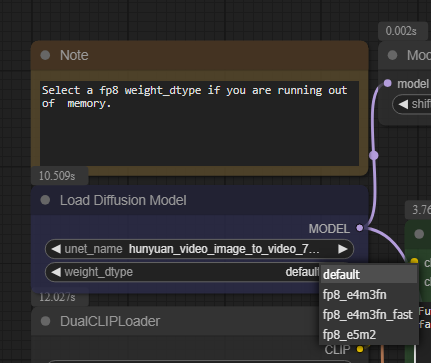

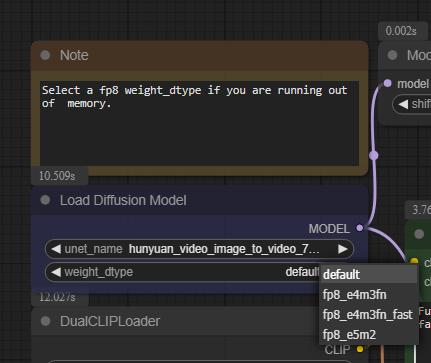

If generation takes too long on your computer, you can change weight_dtype from default to one of the other settings.

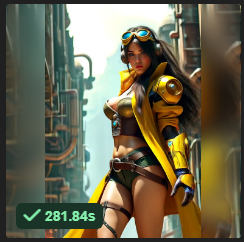

With the default settings, it took 281.84s (more than 4 minutes) on my 4080 16GB GPU.

Here is the output

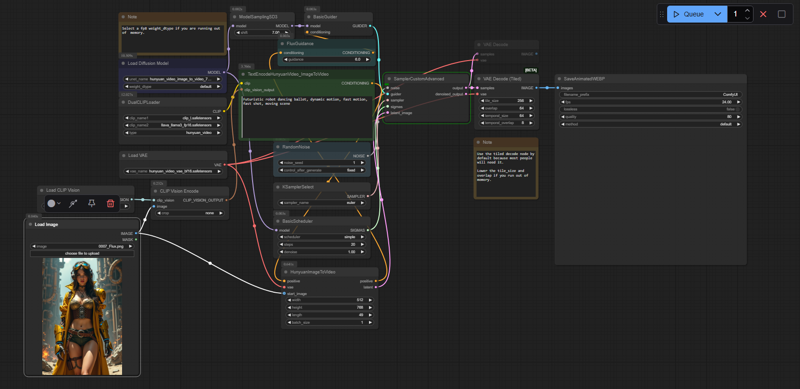

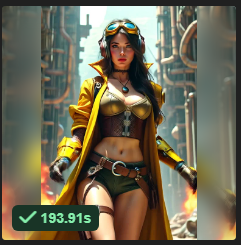

After changing weight_dtype to fp8_e4m3fn_fast, it took 193.91s (a little more than 3 minutes).
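To put the two timings side by side, here is a small sketch of the arithmetic. It uses only the two measurements reported above; the variable names are mine.

```python
# Timings reported in this article, in seconds.
default_s = 281.84   # weight_dtype = default
fp8_fast_s = 193.91  # weight_dtype = fp8_e4m3fn_fast

saved_s = default_s - fp8_fast_s          # seconds saved per run
speedup = default_s / fp8_fast_s          # how many times faster
reduction_pct = 100 * saved_s / default_s  # percent less time

print(f"saved: {saved_s:.2f}s")            # saved: 87.93s
print(f"speedup: {speedup:.2f}x")          # speedup: 1.45x
print(f"reduction: {reduction_pct:.1f}%")  # reduction: 31.2%
```

So on this one run, the fp8 setting cut generation time by roughly a third. This is a single measurement on a single GPU, so treat it as an indication rather than a benchmark.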

Here is the result:

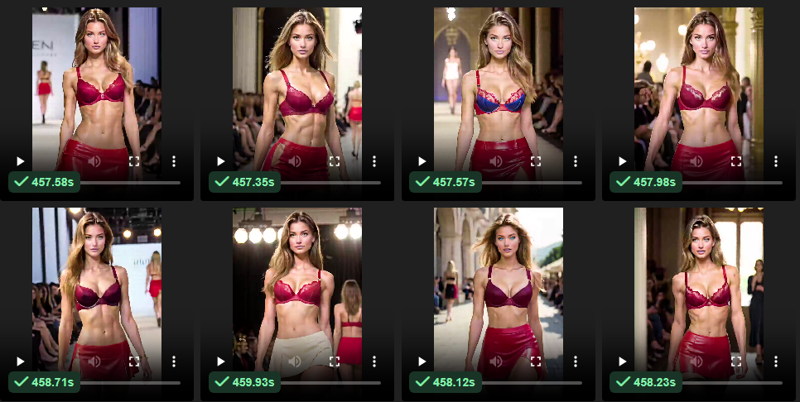

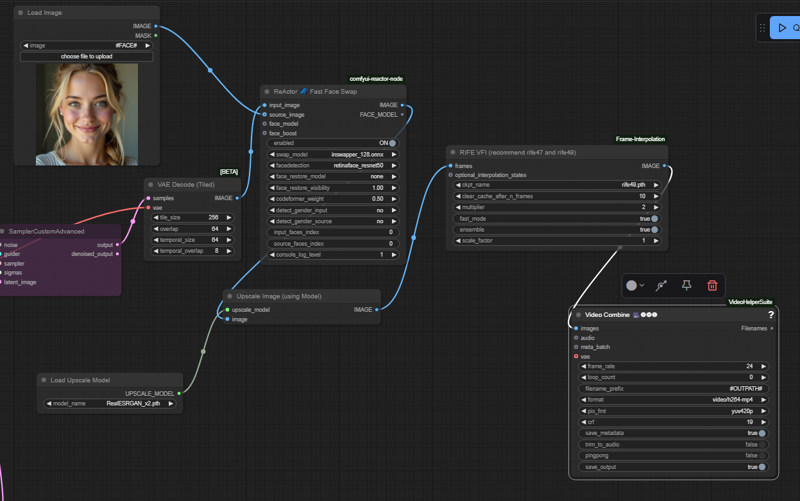

In my case I decided to create a workflow with 2x upscaling, ReActor to change the face, and interpolation to make the video longer.

The time increased to 450s, but the quality is HD.


My workflow is attached.

I added a "Load Image from String" node, which is very useful because it can load an image from any disk by its path. The "Load Image" node works only with the input folder (I hate it).

At the end, after the VAE Decode (Tiled) node, I added ReActor to change the face. This component is no longer available at its previous GitHub location (it was moved because of GitHub policies); it is now at https://github.com/Gourieff/ComfyUI-ReActor

I use RIFE VFI for frame interpolation and save everything as MP4 with Video Combine.

The workflow code is attached to this article.

Conclusion: the output is great and is getting close to the quality of https://hailuoai.video/ and https://klingai.com/ . I recommend it.