Advancements in LoRA Training: Introducing a New Training Method

LoRA training technology is evolving rapidly, but the fundamental training method has remained largely unchanged since its inception. I have developed a new training method and explain it in detail here. It cuts the time needed for difference learning to between one-tenth and one-twentieth of what the copier learning method requires, and it also allows simultaneous training on multiple image sets. Additionally, it has the potential to be applied not only to image models but to diffusion models in general.

The training code is available here:

https://github.com/hako-mikan/sd-webui-traintrain

A sample LoRA trained with this method (Add-detail for XL models):

https://civitai.com/models/1317134/detailnxl-add-detail-for-xl

LoRA Training and Copier Learning Method

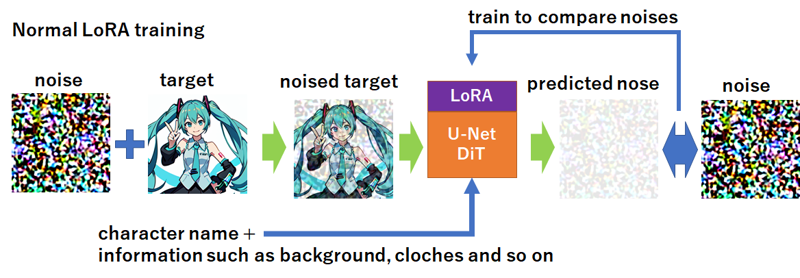

First, let’s review the standard training method.

Traditional Training Method

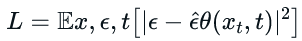

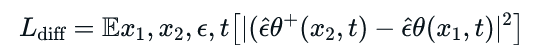

In conventional LoRA (or other diffusion model) training, an image with added noise $x_t$ is passed through a U-Net (or, in recent models, a DiT). The predicted noise $\hat{\epsilon}_\theta(x_t, t)$ is then compared to the original noise $\epsilon$. The loss function $L$ is defined as:

$$L = \mathbb{E}_{x_0, \epsilon, t}\left[\lVert \epsilon - \hat{\epsilon}_\theta(x_t, t) \rVert^2\right]$$

Since this process involves noise, it may seem abstract. However, this is essential in diffusion models, where the goal is to reconstruct an image by gradually reducing noise. The training ensures that the predicted noise $\hat{\epsilon}_\theta(x_t, t)$ aligns with the original noise $\epsilon$. Simply put, the process evaluates how similar the generated image is to the input image.
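To make the noise-prediction objective concrete, here is a minimal NumPy sketch. It assumes a DDPM-style forward process with a single hypothetical schedule value `alpha_bar_t`; the function names and the toy latent shape are mine, not taken from the training code.

```python
import numpy as np

def add_noise(x0, eps, alpha_bar_t):
    """Forward diffusion (assumed DDPM form): blend the clean image with Gaussian noise."""
    return np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * eps

def noise_prediction_loss(eps, eps_pred):
    """Mean squared error between the true noise and the predicted noise."""
    return float(np.mean((eps - eps_pred) ** 2))

rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 8, 8))   # stand-in for a latent image
eps = rng.standard_normal(x0.shape)   # the noise that is actually added
alpha_bar_t = 0.5                     # hypothetical schedule value at timestep t
x_t = add_noise(x0, eps, alpha_bar_t)

# A perfect predictor recovers eps exactly, so its loss is zero.
perfect_loss = noise_prediction_loss(eps, eps)
# A predictor that always outputs zeros is penalized by the mean of eps**2.
zero_loss = noise_prediction_loss(eps, np.zeros_like(eps))
```

In real training the prediction would come from the U-Net or DiT evaluated on `x_t`; the two stand-in predictors here only show how the loss behaves at the extremes.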

However, a key issue arises: the model tends to learn not only the desired character features but also unintended elements within the image. For example, if a character is making a peace sign, the model may associate the character with always making that gesture. Similarly, it may learn the background, the artistic style, or other details unintentionally.

Tagging and training on a large dataset can mitigate this to some extent, but they can also cause the model to miss important features.

Copier Learning Method

To address this issue, the Copier Learning Method was developed by 月須和・那々. This method trains two separate LoRA models:

First, a single image is trained to the extreme, creating a model that generates the same image regardless of initial noise.

Then, a slightly modified version of the image is used to train another LoRA model. Since the first model only generates the same image, the second model learns only the differences between the images.

This method allows the creation of LoRA models that modify only specific aspects of an image—something previously difficult to achieve.
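The two-stage idea can be illustrated with a deliberately tiny toy. Here the "model" is just a fixed output vector and each "LoRA" is an additive delta trained by gradient descent; this is my simplification for illustration, not how the copier method is actually implemented, and all names are hypothetical.

```python
import numpy as np

def train_delta(base_output, target, steps=200, lr=0.1):
    """Fit an additive delta so that base_output + delta matches target."""
    delta = np.zeros_like(target)
    for _ in range(steps):
        grad = 2.0 * (base_output + delta - target)  # gradient of the squared error
        delta -= lr * grad
    return delta

rng = np.random.default_rng(0)
base = rng.standard_normal(16)      # what the base model outputs
image_a = rng.standard_normal(16)   # original image
image_b = image_a.copy()
image_b[:4] += 1.0                  # modified image: only the first four entries differ

# Stage 1: the copier delta makes the model reproduce image A.
copier = train_delta(base, image_a)
# Stage 2: trained on top of the frozen copier, the second delta only has to
# account for what separates image B from image A.
difference = train_delta(base + copier, image_b)
```

In this toy, `difference` ends up equal to `image_b - image_a` and is zero wherever the two images agree, which is the property the copier method relies on.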

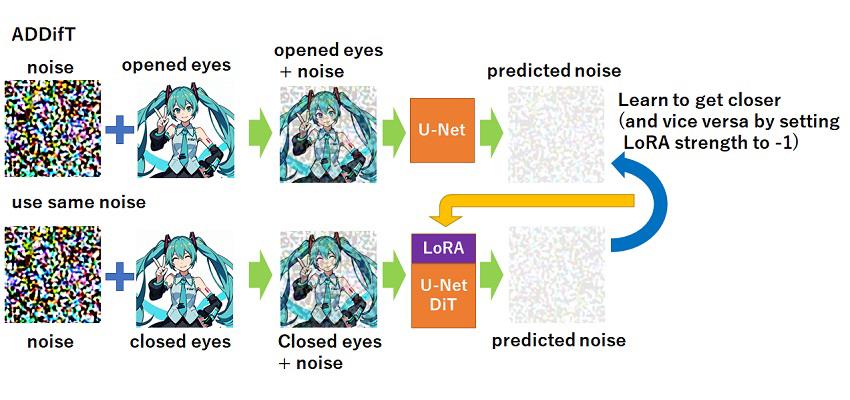

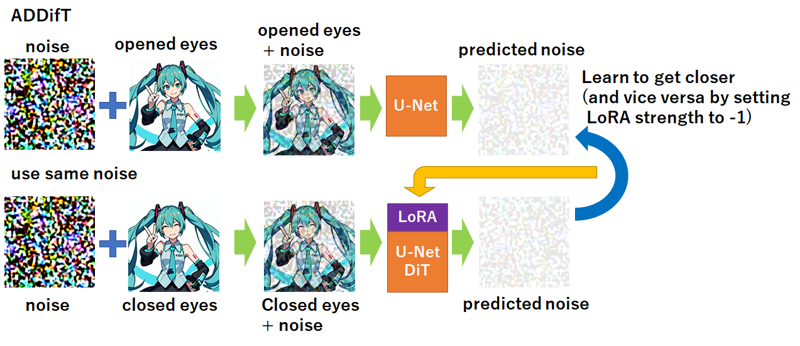

Introducing ADDifT: A New Training Method

While ADDifT shares similarities with the Copier Learning Method in that it learns differences, its approach is distinct.

ADDifT, or Alternating Direct Difference Training, directly learns differences between paired images $(x_1, x_2)$. The “direct” aspect means that the LoRA model explicitly learns only the changes between images.

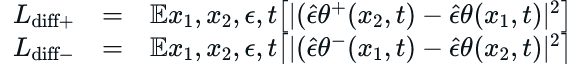

For example, consider learning the difference between an image with open eyes and one with closed eyes. In ADDifT, the LoRA parameters are updated based on the following loss function:

Unlike traditional training, which focuses on generating a specific image, ADDifT is designed to learn only the modifications between images.

This method eliminates the need to first create a copier model, significantly reducing the number of training steps required—typically only 30 to 100 steps. In fact, training beyond 100 steps can lead to overfitting.

Alternating Training

The “Alternating” aspect of ADDifT means that the model alternates between two opposite training directions:

Learning “open eyes → closed eyes”

Learning “closed eyes → open eyes” (with negative LoRA application)

This alternation prevents unwanted features from being learned. By inverting the LoRA application during the reverse process, only the key differences are retained.
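The alternation itself can be sketched as a simple schedule. This assumes strict step-by-step alternation and a multiplier of magnitude 1.0; both are illustrative choices on my part, and the function name is hypothetical rather than part of the training code.

```python
def alternating_schedule(num_steps):
    """Yield (step, source, target, lora_multiplier) for each training step."""
    for step in range(num_steps):
        if step % 2 == 0:
            # Forward direction: the LoRA is applied with a positive sign.
            yield step, "open", "closed", 1.0
        else:
            # Reverse direction: the same LoRA is applied with a negative sign.
            yield step, "closed", "open", -1.0

schedule = list(alternating_schedule(4))
```

Each entry tells the training loop which image of the pair is the source, which is the target, and with what sign the LoRA should be applied for that step.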

The corresponding loss functions are:

Scheduled Random Timesteps

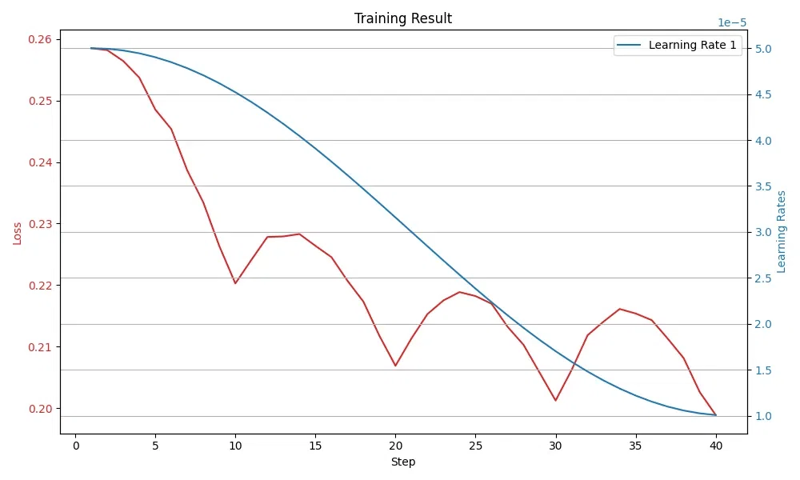

ADDifT introduces a new concept: Scheduled Random Timesteps. Normally, timesteps are randomly selected between 0 and 1000. Over thousands of steps this covers the range evenly, but with only 30 to 100 training steps the sampled timesteps can end up clustered in some regions and sparse in others.

To address this, the method segments the timesteps into five equal groups and selects them sequentially. For example:

Select from 0–200

Then from 201–400

… up to 801–1000

For eye-related LoRA training, the focus is on high timesteps (500–1000), as they lead to more stable training. Conversely, for style LoRA, lower timesteps (200–400) are preferred.
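A minimal sketch of this kind of schedule is shown below. It assumes the groups are visited in a repeating cycle and that each group is a half-open integer range, so the default groups are 0–199, 200–399 and so on, which differs slightly at the boundaries from the ranges listed above. The function name and parameters are hypothetical.

```python
import random

def scheduled_random_timesteps(num_steps, t_min=0, t_max=1000, num_groups=5, seed=None):
    """Return one timestep per training step, cycling through equal-width groups in order."""
    rng = random.Random(seed)
    width = (t_max - t_min) / num_groups
    timesteps = []
    for step in range(num_steps):
        group = step % num_groups                    # visit the groups sequentially
        low = t_min + int(group * width)
        high = t_min + int((group + 1) * width) - 1  # inclusive upper bound of this group
        timesteps.append(rng.randint(low, high))     # random choice inside the group
    return timesteps

# Restrict the range to high timesteps, as suggested for eye-related LoRAs.
eye_timesteps = scheduled_random_timesteps(30, t_min=500, t_max=1000, seed=0)
# Restrict the range to low timesteps, as suggested for style LoRAs.
style_timesteps = scheduled_random_timesteps(30, t_min=200, t_max=400, seed=0)
```

With 30 steps and five groups, every group is sampled exactly six times, so even a very short run covers the chosen range evenly while staying random within each group.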

The actual trend of the loss is as follows: the higher the timestep, the smaller the loss tends to be. Flattening this curve might help, or it might be better left alone. Since training works well without any adjustment, I am leaving it as is for now.

Performance and Speed Comparison

This method reduces the number of training samples processed from:

(500 + 500 steps) × batch size 2 = 2000

to:

30 steps × batch size 1 = 30
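The same arithmetic, spelled out as a quick check. I am reading "500+500" as the two training stages of the copier method; the variable names are mine.

```python
copier_samples = (500 + 500) * 2   # two 500-step stages at batch size 2
addift_samples = 30 * 1            # 30 steps at batch size 1
reduction = copier_samples / addift_samples
print(f"{copier_samples} vs {addift_samples} samples, about {reduction:.1f}x fewer")
```

This counts processed samples only. The wall-clock saving reported at the top of this article is one-tenth to one-twentieth, so the two measures should not be treated as interchangeable.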

For SD1.5, training an "eyes closed" LoRA takes only 30 seconds on an RTX 3060 (12GB).

However, speed alone is meaningless if the performance is poor.

Comparison – Top: without LoRA, Middle: Copier-style training, Bottom: ADDifT.

This is a comparison with a LoRA created using copier-style training, and as you can see, the results are comparable in quality.

Next, let’s try a style LoRA. For the style LoRA, the training timesteps are set between 200 and 400. The training images are the ones before and after applying Hires-Fix. We apply Hires-Fix at 3x on 512x512 images and then downscale them back to 512x512 for comparison. The expectation is that the model can learn the added detail that results from this process.

It’s working well. Creating LoRAs for things like closing eyes is relatively simple, but in the case of style LoRAs, preparing suitable training images is more difficult and requires trial and error. With copier-style training, it used to take 10–20 minutes to generate a LoRA, making experimentation cumbersome. But if it can be done in around a minute, it becomes much easier to iterate.

Now, ADDifT has benefits beyond just speed. One of them is that it allows training on multiple images. With copier-style training, you could only train on a single pair of images. This limits generalization. For example, with a LoRA for opening/closing eyes, training only on front-facing images reduces effectiveness when the subject is looking sideways or tilting their head. Addressing this would normally require training multiple sets and merging them later, which is quite a hassle.

However, ADDifT allows training with varied image sets in a single process, enabling faster and more generalized learning.

Future Developments

One potential application of this method is self-regularization in LoRA training. In DreamBooth training, overfitting is mitigated by mixing general images with the target images. However, this can lead to unwanted artifacts.

Instead, ADDifT could compare noise predictions from LoRA-applied and non-applied generations to filter out unwanted learning effects.

Furthermore, since this method should in principle apply to diffusion models in general, it could be extended to video and other generative tasks.

Conclusion

ADDifT is a novel training technique that rapidly learns image differences and is widely applicable to diffusion models. Given its efficiency and flexibility, it could be a valuable tool for future AI-driven content creation.