Hello everyone! I’d like to share a little about hunyuan-video-training, a small program I created to help train Hunyuan Video models. It started as a personal experiment, but I think it might be helpful for some of you, especially if you’re eager to train Hunyuan Video models but don’t have access to high-end hardware.

What’s This Program All About?

In a nutshell, hunyuan-video-training is a tool that builds on diffusion-pipe, but I’ve spiced it up with some enhancements: integration with Modal’s cloud, a user-friendly Gradio interface, and LoRA testing through ComfyUI workflows.

What Does It Offer?

I aimed to keep things simple and practical. Here’s what you’ll get with the program:

Cloud-Based Training: Run your training on Modal’s cloud. Modal gives accounts $30 of free credit per month, and no credit card is needed.

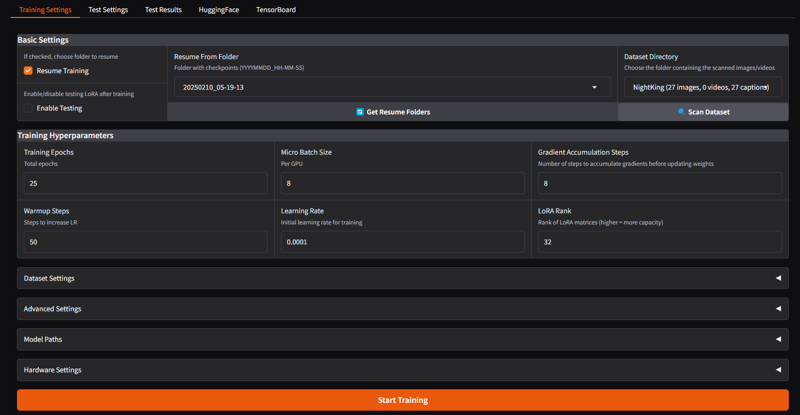

Gradio Interface: A clean, easy-to-use UI that doesn’t overcomplicate things.

ComfyUI Integration: LoRA testing is baked into the workflow, which makes it convenient to generate videos from your trained models.

Model Management: Upload and download models easily with Hugging Face support, and track training progress with TensorBoard.
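If you ever want to push a finished LoRA to the Hub yourself, outside the UI, here is a minimal sketch using the `huggingface_hub` library. It is not how hunyuan-video-training does it internally. It assumes your checkpoints are `.safetensors` files somewhere under an output folder (the folder layout, the helper names `latest_checkpoint` and `upload_to_hub`, and the repo id are all hypothetical), and that you have logged in with `huggingface-cli login` or set `HF_TOKEN`.

```python
from pathlib import Path


def latest_checkpoint(output_dir, pattern="*.safetensors"):
    """Return the most recently modified file matching pattern under output_dir, or None."""
    files = [p for p in Path(output_dir).rglob(pattern) if p.is_file()]
    return max(files, key=lambda p: p.stat().st_mtime, default=None)


def upload_to_hub(file_path, repo_id, path_in_repo=None):
    """Upload one file to a Hugging Face model repo, creating the repo if it is missing."""
    # Imported lazily so latest_checkpoint works without huggingface_hub installed.
    from huggingface_hub import HfApi

    api = HfApi()  # picks up the token from `huggingface-cli login` or HF_TOKEN
    api.create_repo(repo_id=repo_id, repo_type="model", exist_ok=True)
    return api.upload_file(
        path_or_fileobj=str(file_path),
        path_in_repo=path_in_repo or Path(file_path).name,
        repo_id=repo_id,
        repo_type="model",
    )
```

For example, `upload_to_hub(latest_checkpoint("output"), "your-username/my-hunyuan-lora")` would upload the newest checkpoint found under a hypothetical `output` folder. Pass `path_in_repo` if several epochs save files with the same name and you want to keep them apart.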

I’ve got you covered with a YouTube tutorial. It walks you through everything, step by step.

How to Install It

Please refer to the README file.

How to Use It

Once it’s installed and your environment is activated, here’s how to put it to work:

1. Launch the UI with `python ui_setting.py`.
2. Put your dataset folder in the same directory as the script.
3. Adjust your training parameters in the interface.
4. Click the “Start Training” button.
5. Monitor progress in the Modal console or with TensorBoard.
6. Generate videos from your trained models using the ComfyUI API.
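Before uploading a dataset, a quick pre-flight check can catch clips or images with no caption. This sketch assumes the common diffusion-pipe-style layout, where each image or video sits next to a `.txt` caption file with the same name; the helper `find_uncaptioned` and the extension list are my own assumptions, so check the README for what the trainer actually accepts.

```python
from pathlib import Path

# Assumed set of media extensions; check the README for what is really supported.
MEDIA_EXTS = {".jpg", ".jpeg", ".png", ".webp", ".mp4", ".mov", ".webm"}


def find_uncaptioned(dataset_dir):
    """List media files in dataset_dir that have no caption .txt with the same stem."""
    missing = []
    for path in Path(dataset_dir).iterdir():
        if path.is_file() and path.suffix.lower() in MEDIA_EXTS:
            # "clip01.mp4" is expected to have "clip01.txt" beside it.
            if not path.with_suffix(".txt").exists():
                missing.append(path.name)
    return sorted(missing)
```

Running `print(find_uncaptioned("my_dataset"))` on a hypothetical `my_dataset` folder prints the names of any files that still need a caption; an empty list means every media file has one.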
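For the last step, ComfyUI exposes an HTTP endpoint, `POST /prompt`, that queues a workflow exported in its API format. The sketch below uses only the standard library and is not the program’s own client. It assumes a ComfyUI server at the default `127.0.0.1:8188`, and the helper names `set_input`, `build_prompt_request`, and `queue_workflow` are hypothetical.

```python
import json
import urllib.request
import uuid


def set_input(workflow, node_id, name, value):
    """Overwrite one input of one node in an API-format workflow dict."""
    workflow[node_id]["inputs"][name] = value
    return workflow


def build_prompt_request(workflow, server="127.0.0.1:8188", client_id=None):
    """Build the POST /prompt request that asks ComfyUI to queue a workflow."""
    payload = {"prompt": workflow, "client_id": client_id or str(uuid.uuid4())}
    return urllib.request.Request(
        f"http://{server}/prompt",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(workflow, server="127.0.0.1:8188"):
    """Send the workflow to a running ComfyUI server and return its prompt_id."""
    with urllib.request.urlopen(build_prompt_request(workflow, server)) as resp:
        return json.loads(resp.read())["prompt_id"]
```

Typical use: load your exported workflow JSON with `json.load`, call `set_input` on the node that loads your LoRA so it points at your new file (the node id depends on your workflow; the core LoRA loader nodes name that input `lora_name`), then call `queue_workflow`. The call returns as soon as the job is queued, and the finished outputs can be looked up afterwards under `/history/<prompt_id>`.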

And that’s it ~!