Hey peeps,

Any old-school boyz and girlz still using the A1111 WebUI like me ? (v1.10.1 at the time of writing)

Want better images ?

Want to swap your CLIP models 'cause the Comfy peeps are bullying you ?

Here are CLIP models to use for better images with SDXL (and not only SDXL).

Here is a new clip_L trained by zer0int, plus the well-known clip_G named 'BigG' :

clip_L : https://huggingface.co/zer0int/CLIP-Registers-Gated_MLP-ViT-L-14/blob/main/ViT-L-14-REG-GATED-balanced-ckpt12.safetensors

clip_G : https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k/resolve/main/open_clip_model.safetensors?download=true
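The code further down looks for two exact filenames inside models\CLIP, so a quick check before editing anything can save a confusing "Failed loading" message later. Here is a minimal sketch, assuming the default models folder (no --models-dir override); the helper name missing_clip_files and the install path in the example are made up for illustration :

```python
from pathlib import Path

# Filenames copied from the paths used in the sd_models.py patch.
EXPECTED_FILES = (
    "ViT-L-14-REG-GATED-balanced-ckpt12.safetensors",
    "CLIP-ViT-bigG-14-laion2B-39B-b160k.safetensors",
)


def missing_clip_files(webui_root):
    """Return the expected CLIP filenames absent from <webui_root>/models/CLIP."""
    clip_dir = Path(webui_root) / "models" / "CLIP"
    return [name for name in EXPECTED_FILES if not (clip_dir / name).is_file()]


if __name__ == "__main__":
    # Hypothetical install location; point this at your own WebUI folder.
    for name in missing_clip_files("stable-diffusion-webui"):
        print(f"Missing: {name}")
```

If it prints nothing, both files are where the patch expects them.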

_________________________________________________________________________________

How To :

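Download the 2 models above into the folder

stable-diffusion-webui\models\CLIP

The clip_G link should save its file as open_clip_model.safetensors, so rename it to CLIP-ViT-bigG-14-laion2B-39B-b160k.safetensors (the name the code below looks for).

Edit the file

stable-diffusion-webui\modules\sd_models.py: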

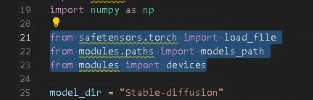

-Code to insert at the top of the file (~line 21 for A1111) :

from safetensors.torch import load_file

from modules.paths import models_path

from modules import devices

Like this :

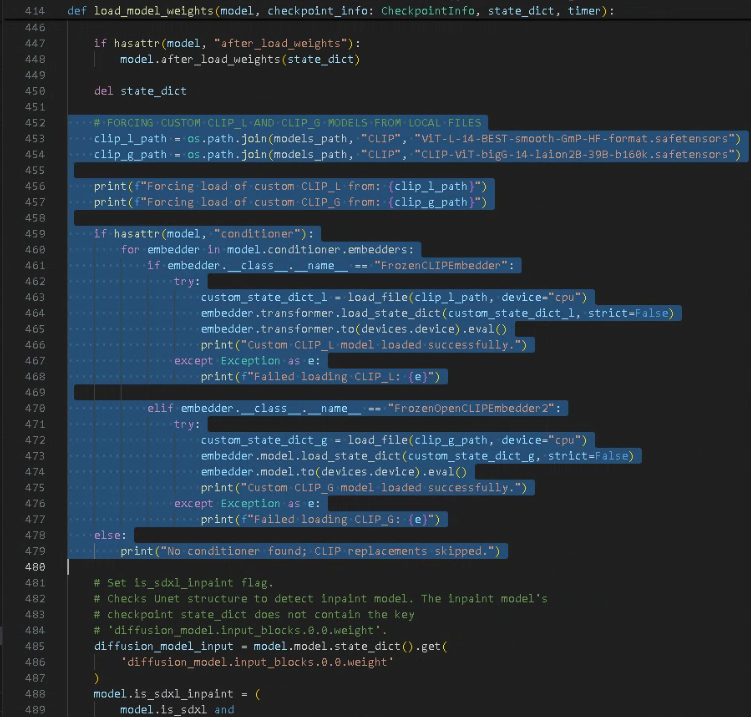

-Code to insert after the line del state_dict (~line 452 for A1111) :

    # FORCING CUSTOM CLIP_L AND CLIP_G MODELS FROM LOCAL FILES
    clip_l_path = os.path.join(models_path, "CLIP", "ViT-L-14-REG-GATED-balanced-ckpt12.safetensors")
    clip_g_path = os.path.join(models_path, "CLIP", "CLIP-ViT-bigG-14-laion2B-39B-b160k.safetensors")

    print(f"Forcing load of custom CLIP_L from: {clip_l_path}")
    print(f"Forcing load of custom CLIP_G from: {clip_g_path}")

    if hasattr(model, "conditioner"):
        for embedder in model.conditioner.embedders:
            if embedder.__class__.__name__ == "FrozenCLIPEmbedder":
                try:
                    custom_state_dict_l = load_file(clip_l_path, device="cpu")
                    embedder.transformer.load_state_dict(custom_state_dict_l, strict=False)
                    embedder.transformer.to(devices.device).eval()
                    print("Custom CLIP_L model loaded successfully.")
                except Exception as e:
                    print(f"Failed loading CLIP_L: {e}")
            elif embedder.__class__.__name__ == "FrozenOpenCLIPEmbedder2":
                try:
                    custom_state_dict_g = load_file(clip_g_path, device="cpu")
                    embedder.model.load_state_dict(custom_state_dict_g, strict=False)
                    embedder.model.to(devices.device).eval()
                    print("Custom CLIP_G model loaded successfully.")
                except Exception as e:
                    print(f"Failed loading CLIP_G: {e}")
    else:
        print("No conditioner found; CLIP replacements skipped.")

Or, for A1111 v1.10.1, just download the attached file here, then extract it and replace "sd_models.py" with it.

NB: Never trust other people's code !
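In that spirit, one thing worth checking yourself : the patch calls load_state_dict with strict=False, which skips any weight whose name does not match instead of raising an error, so "loaded successfully" can print even if few names matched. A .safetensors file starts with an 8-byte little-endian header length followed by a JSON header listing every tensor, so the names can be read without torch. The sketch below does that with the standard library only; read_safetensors_header, tensor_names and compare_keys are names I made up, and the comparison is by name only (it does not check shapes) :

```python
import json
import struct


def read_safetensors_header(path):
    """Return the JSON header of a .safetensors file as a dict (tensor data is not read)."""
    with open(path, "rb") as f:
        # First 8 bytes: unsigned 64-bit little-endian size of the JSON header.
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len))
    header.pop("__metadata__", None)  # optional free-form metadata, not a tensor
    return header


def tensor_names(path):
    """Return the sorted tensor names stored in the file."""
    return sorted(read_safetensors_header(path))


def compare_keys(model_keys, file_keys):
    """Group key names by how a strict=False load would treat them (by name only)."""
    model_keys, file_keys = set(model_keys), set(file_keys)
    return {
        "loaded": sorted(model_keys & file_keys),      # names present on both sides
        "missing": sorted(model_keys - file_keys),     # model keeps its current values
        "unexpected": sorted(file_keys - model_keys),  # present in the file, ignored
    }
```

Inside the patch, something like compare_keys(embedder.transformer.state_dict().keys(), custom_state_dict_l.keys()) would give the same picture. PyTorch also returns the missing_keys and unexpected_keys lists from load_state_dict itself, so printing that return value is another option.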

-Start/Restart the WebUI & Enjoy !

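With this code, the (huge) CLIP files are first read into RAM/CPU (device="cpu"), then their weights are copied over the ones already in the checkpoint's text encoders, which are then moved to the WebUI device. The encoders keep the same size, so there should be no additional GPU/VRAM usage or generation time.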

I started this as an extension, to have decent dropdown menus in the settings tab for choosing the models from the folder, but it needed more thought than I expected.

I may try again at some point and I'll edit this article if so.

Cheers ! 🥂