Alibaba has released a new WAN model that supports a new collection of ControlNets, plus another model for inpainting. This is being named "Control Video".

We will look at using it with ComfyUI. At the time of writing (28/3/2025) it is fresh out of the oven, so expect future updates with FP8 or GGUF quantizations as they become available.

INSTALLATION

1. Git clone the VideoX-Fun repo (previously named CogVideoX-Fun) and ComfyUI-VideoHelperSuite

cd ComfyUI/custom_nodes/

git clone https://github.com/aigc-apps/VideoX-Fun

git clone https://github.com/Kosinkadink/ComfyUI-VideoHelperSuite

cd VideoX-Fun/

python install.py
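For a quick sanity check that both clones landed in the right place, here is a small Python sketch. It only assumes the default folder names that `git clone` creates for the two URLs above; `missing_custom_nodes` is a hypothetical helper, and it does not check that `install.py` succeeded.

```python
from pathlib import Path

# Default folder names created by the two `git clone` commands above
# (cloning https://github.com/aigc-apps/VideoX-Fun creates "VideoX-Fun").
EXPECTED_NODE_DIRS = ("VideoX-Fun", "ComfyUI-VideoHelperSuite")


def missing_custom_nodes(comfy_root: str) -> list[str]:
    """Return the expected custom-node folders that are not present under custom_nodes/."""
    custom_nodes = Path(comfy_root) / "custom_nodes"
    return [name for name in EXPECTED_NODE_DIRS if not (custom_nodes / name).is_dir()]
```

Running `print(missing_custom_nodes("ComfyUI"))` from the folder that contains ComfyUI should print an empty list once both repos are in place.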

2. Download models into ComfyUI/models/Fun_Models/

Wan2.1-Fun-1.3B-InP (19GB) https://huggingface.co/alibaba-pai/Wan2.1-Fun-1.3B-InP

Wan2.1-Fun-1.3B text-to-video weights, trained at multiple resolutions, supporting start and end frame prediction.

Wan2.1-Fun-14B-InP (47GB) https://huggingface.co/alibaba-pai/Wan2.1-Fun-14B-InP

Wan2.1-Fun-14B text-to-video weights, trained at multiple resolutions, supporting start and end frame prediction.

Wan2.1-Fun-1.3B-Control (19GB) https://huggingface.co/alibaba-pai/Wan2.1-Fun-1.3B-Control

Wan2.1-Fun-1.3B video control weights, supporting various control conditions such as Canny, Depth, Pose, MLSD, etc., and trajectory control. Supports multi-resolution (512, 768, 1024) video prediction at 81 frames, trained at 16 frames per second, with multilingual prediction support.
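As a rough worked example, 81 frames at 16 fps comes to about 5 seconds of video. The sketch below also estimates the latent size, assuming the Wan 2.1 VAE compresses time by 4 (keeping the first frame on its own, hence frame counts of the form 4n + 1) and height/width by 8. Those compression factors come from the Wan 2.1 release rather than this guide, and the helper names are hypothetical.

```python
def clip_seconds(num_frames: int, fps: int = 16) -> float:
    """Playback length of a clip at the given frame rate (16 fps is the training rate above)."""
    return num_frames / fps


def latent_shape(num_frames: int, height: int, width: int) -> tuple[int, int, int]:
    """(latent_frames, latent_height, latent_width), assuming 4x temporal and 8x spatial compression."""
    if (num_frames - 1) % 4 != 0:
        raise ValueError("frame count should be of the form 4n + 1, e.g. 49 or 81")
    if height % 8 or width % 8:
        raise ValueError("height and width should be multiples of 8")
    return ((num_frames - 1) // 4 + 1, height // 8, width // 8)


print(clip_seconds(81))            # 5.0625
print(latent_shape(81, 512, 512))  # (21, 64, 64)
```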

Wan2.1-Fun-14B-Control (47GB) https://huggingface.co/alibaba-pai/Wan2.1-Fun-14B-Control

Wan2.1-Fun-14B video control weights, supporting various control conditions such as Canny, Depth, Pose, MLSD, etc., and trajectory control. Supports multi-resolution (512, 768, 1024) video prediction at 81 frames, trained at 16 frames per second, with multilingual prediction support.
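If you would rather script the downloads than click through Hugging Face, something like the sketch below should work. It assumes the `huggingface_hub` package and a one-folder-per-model layout under `Fun_Models/`, with each folder named after its repo; both are assumptions on my part, so check the VideoX-Fun README if the nodes do not pick up your models.

```python
from pathlib import Path

# Repo IDs taken from the links above.
FUN_MODELS = {
    "Wan2.1-Fun-1.3B-InP": "alibaba-pai/Wan2.1-Fun-1.3B-InP",
    "Wan2.1-Fun-14B-InP": "alibaba-pai/Wan2.1-Fun-14B-InP",
    "Wan2.1-Fun-1.3B-Control": "alibaba-pai/Wan2.1-Fun-1.3B-Control",
    "Wan2.1-Fun-14B-Control": "alibaba-pai/Wan2.1-Fun-14B-Control",
}


def model_dir(comfy_root: str, name: str) -> Path:
    """Assumed layout: one sub-folder per model under models/Fun_Models/."""
    if name not in FUN_MODELS:
        raise KeyError(f"unknown model {name!r}")
    return Path(comfy_root) / "models" / "Fun_Models" / name


def download_model(comfy_root: str, name: str) -> Path:
    """Fetch a whole model repo (tens of GB, see sizes above) into its Fun_Models folder."""
    # Imported here so model_dir() works even without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    target = model_dir(comfy_root, name)
    snapshot_download(repo_id=FUN_MODELS[name], local_dir=str(target))
    return target
```

For example, `download_model("ComfyUI", "Wan2.1-Fun-1.3B-Control")` pulls the smaller control model, which is the lighter option to start with.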

3. (Optional) Download LoRA models

Place them inside ComfyUI/models/loras/fun_models/
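A tiny helper for that step, as a sketch: `install_lora` is a hypothetical name, and all it does is create the `fun_models` folder if it is missing and copy the LoRA file into it.

```python
import shutil
from pathlib import Path


def install_lora(lora_file: str, comfy_root: str = "ComfyUI") -> Path:
    """Copy a LoRA file into models/loras/fun_models/, creating the folder if needed."""
    src = Path(lora_file)
    if not src.is_file():
        raise FileNotFoundError(src)
    dest_dir = Path(comfy_root) / "models" / "loras" / "fun_models"
    dest_dir.mkdir(parents=True, exist_ok=True)
    dest = dest_dir / src.name
    shutil.copy2(src, dest)  # copy2 also preserves the file's timestamps
    return dest
```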

WORKFLOWS (official)

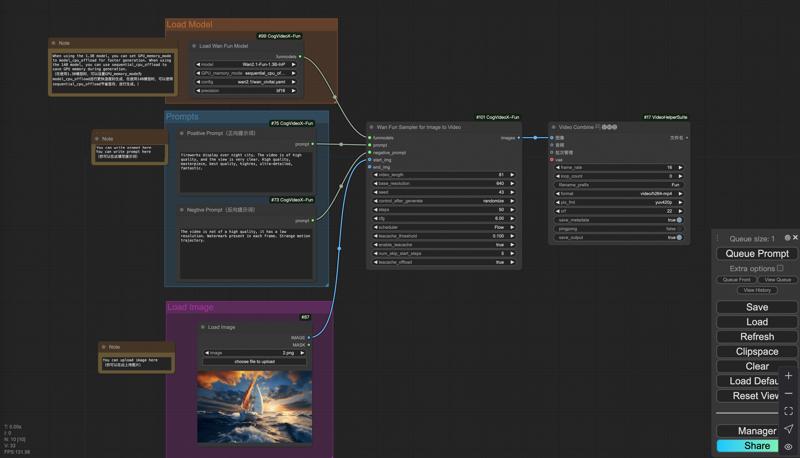

Image to video workflow:

https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/wan_fun/asset/v1.0/wan2.1_fun_workflow_i2v.json

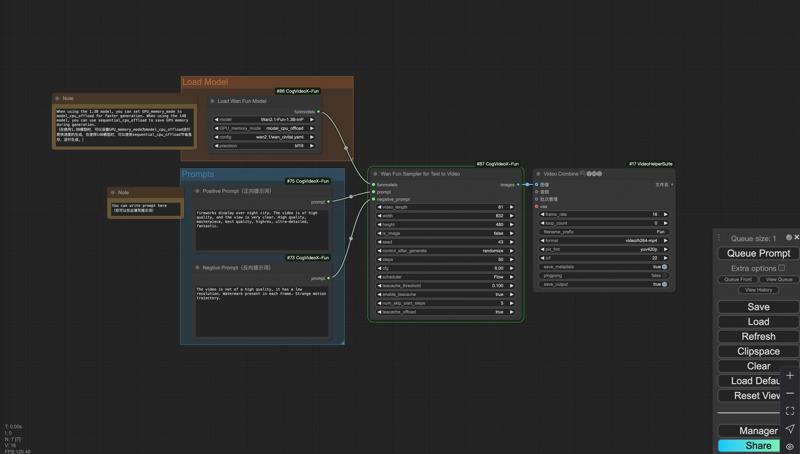

Text to video workflow:

https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/wan_fun/asset/v1.0/wan2.1_fun_workflow_t2v.json

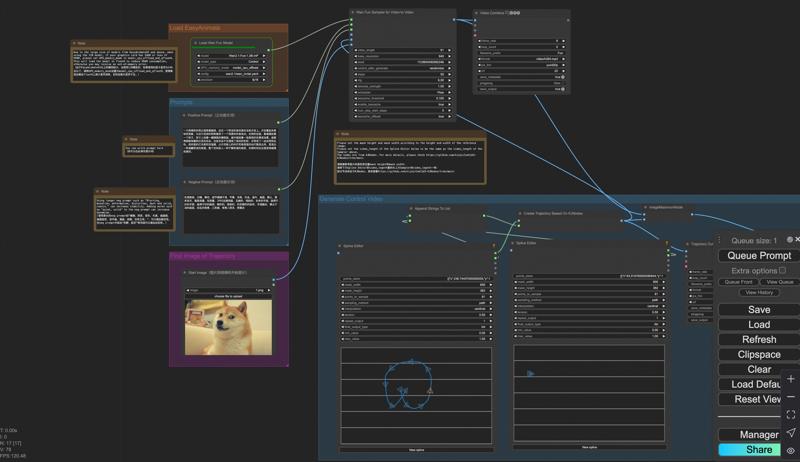

Trajectory control video generation:

https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/wan_fun/asset/v1.0/wan2.1_fun_workflow_control_trajectory.json

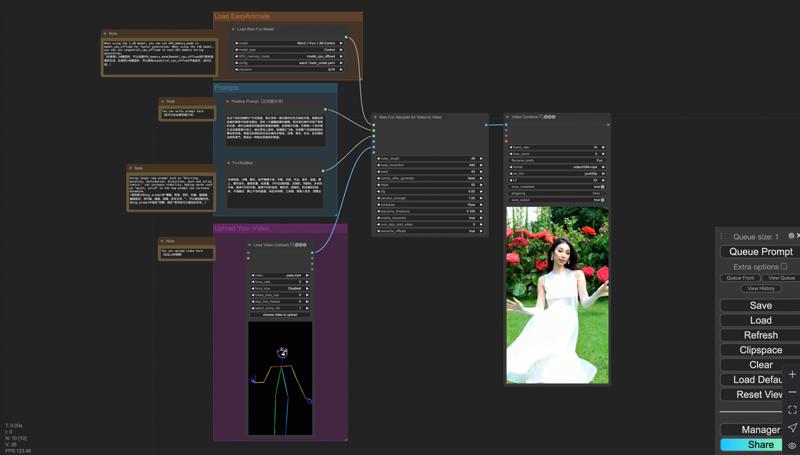

Control Video Generation:

https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/wan_fun/asset/v1.0/wan2.1_fun_workflow_v2v_control.json
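To grab all four JSON files at once and list the node types each one uses (handy for spotting missing custom nodes before loading), here is a Python sketch. `fetch_workflows` and `node_types` are hypothetical helpers; the parser assumes the usual ComfyUI export formats, either the UI format with a top-level `nodes` list or the API format keyed by node ID with a `class_type` on each node.

```python
import json
import urllib.request
from pathlib import Path

# Base URL and file names taken from the links above.
BASE = "https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/wan_fun/asset/v1.0/"
WORKFLOWS = [
    "wan2.1_fun_workflow_i2v.json",
    "wan2.1_fun_workflow_t2v.json",
    "wan2.1_fun_workflow_control_trajectory.json",
    "wan2.1_fun_workflow_v2v_control.json",
]


def fetch_workflows(dest: str = "workflows") -> list[Path]:
    """Download the four official workflow files into dest/ and return their paths."""
    out = Path(dest)
    out.mkdir(parents=True, exist_ok=True)
    saved = []
    for name in WORKFLOWS:
        path = out / name
        urllib.request.urlretrieve(BASE + name, path)
        saved.append(path)
    return saved


def node_types(workflow_json: str) -> list[str]:
    """Sorted, de-duplicated node types used by a ComfyUI workflow JSON string."""
    data = json.loads(workflow_json)
    if "nodes" in data:  # UI export: {"nodes": [{"id": ..., "type": ...}, ...], ...}
        types = {node["type"] for node in data["nodes"]}
    else:  # API export: {"<id>": {"class_type": ..., "inputs": {...}}, ...}
        types = {
            node["class_type"]
            for node in data.values()
            if isinstance(node, dict) and "class_type" in node
        }
    return sorted(types)
```

Usage: `for p in fetch_workflows(): print(p.name, node_types(p.read_text(encoding="utf-8")))`. Any type that ComfyUI then shows in red on load comes from a custom node you have not installed yet.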

GGUF WAN FUN INPAINT

The 14B video inpainting model (Wan2.1-Fun-14B-InP) has been released in GGUF format by City96. You can use it with the existing WAN video pipeline, keeping the same CLIP/VAE weights.

GGUF

Q8_0 https://huggingface.co/city96/Wan2.1-Fun-14B-InP-gguf/resolve/main/wan2.1-fun-14b-inp-Q8_0.gguf

Q4_0 https://huggingface.co/city96/Wan2.1-Fun-14B-InP-gguf/resolve/main/wan2.1-fun-14b-inp-Q4_0.gguf

Place the .gguf file inside ComfyUI/models/unet/
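If you want to script this, here is a sketch using `huggingface_hub` (an assumption; any downloader works). `download_gguf` and `is_gguf` are hypothetical helpers. The check reads the first four bytes, since every GGUF file starts with the ASCII magic `GGUF`; that catches a broken download that left an HTML error page or a Git LFS pointer file under the right name.

```python
from pathlib import Path

# Repo and file names taken from the links above.
GGUF_REPO = "city96/Wan2.1-Fun-14B-InP-gguf"
GGUF_FILES = {
    "Q8_0": "wan2.1-fun-14b-inp-Q8_0.gguf",
    "Q4_0": "wan2.1-fun-14b-inp-Q4_0.gguf",
}


def download_gguf(quant: str = "Q4_0", comfy_root: str = "ComfyUI") -> Path:
    """Download one quantization straight into models/unet/."""
    # Imported here so is_gguf() works even without huggingface_hub installed.
    from huggingface_hub import hf_hub_download

    path = hf_hub_download(
        repo_id=GGUF_REPO,
        filename=GGUF_FILES[quant],
        local_dir=str(Path(comfy_root) / "models" / "unet"),
    )
    return Path(path)


def is_gguf(path: str) -> bool:
    """True if the file begins with the 4-byte GGUF magic."""
    with open(path, "rb") as f:
        return f.read(4) == b"GGUF"
```

Q4_0 is the smaller of the two files, while Q8_0 stays closer to the original weights.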

MORE INFO

https://huggingface.co/collections/alibaba-pai/wan21-fun-67e4fb3b76ca01241eb7e334