Here is a merge trick I think could be useful.

When you do a lot of merging and finetuning on a model, at some point things may go too far: the aesthetic is still there, but composition and prompt following start going out the window. That may simply mean that the CLIP (remember, the component that translates the prompt into concepts for the UNET to generate from) is messed up.

To fix this, I tried a few times a small Python script where I replaced the model's CLIP with the original one from the base checkpoint. It does help, but it is still not perfect. And it means doing things in Python, which may be too complicated for some of us, right?
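For those curious, the Python "CLIP swap" boils down to copying every text-encoder key from the base checkpoint over the merged one. Here is a hypothetical sketch of that idea, not my actual script: checkpoints are modelled as plain dicts (in practice they could come from `safetensors.torch.load_file()`), and the `conditioner.embedders.` key prefix is an assumption about how SDXL-style single-file checkpoints like Illustrious store their text encoders.

```python
# Hypothetical sketch of the "CLIP swap" done by hand in Python.
# Checkpoints are modelled as plain dicts {key: tensor}; in practice they
# could come from safetensors.torch.load_file().
from typing import Any, Dict

# Assumption: SDXL-style single-file checkpoints (such as Illustrious-based
# ones) keep both text encoders under this key prefix.
CLIP_PREFIX = "conditioner.embedders."


def swap_clip(merged: Dict[str, Any], base: Dict[str, Any],
              prefix: str = CLIP_PREFIX) -> Dict[str, Any]:
    """Return a copy of `merged` whose text-encoder keys come from `base`."""
    result = dict(merged)  # shallow copy: the input dicts are left untouched
    for key, value in base.items():
        if key.startswith(prefix):
            result[key] = value  # take the base checkpoint's CLIP weight
    return result


# Toy example with strings standing in for tensors.
merged = {"model.diffusion_model.w": "unet-merged",
          "conditioner.embedders.0.w": "clip-merged"}
base = {"model.diffusion_model.w": "unet-base",
        "conditioner.embedders.0.w": "clip-base"}
fixed = swap_clip(merged, base)
print(fixed)
```

The UNET keys keep the merged values and only the CLIP keys are replaced, which is exactly the "all or nothing" swap that the Supermerger procedure below softens.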

So here is a procedure using the good old Supermerger extension in A1111, with a demo on HoJ.

Here is the example: with this prompt, the flower ends up in the drink, and there is a mess of tables at the end of the street.

1girl, brunette, summer dress, straw hat, sitting at a table, from side, looking away, depth of field, cafe terrace, outside, paris city street, setting sun, golden hour glow, sun rays, flowers, aesthetic composition, subtle shadows

I did mess things up a bit when building HoJ ^^; So, how do we fix this? Here is how, in two merges:

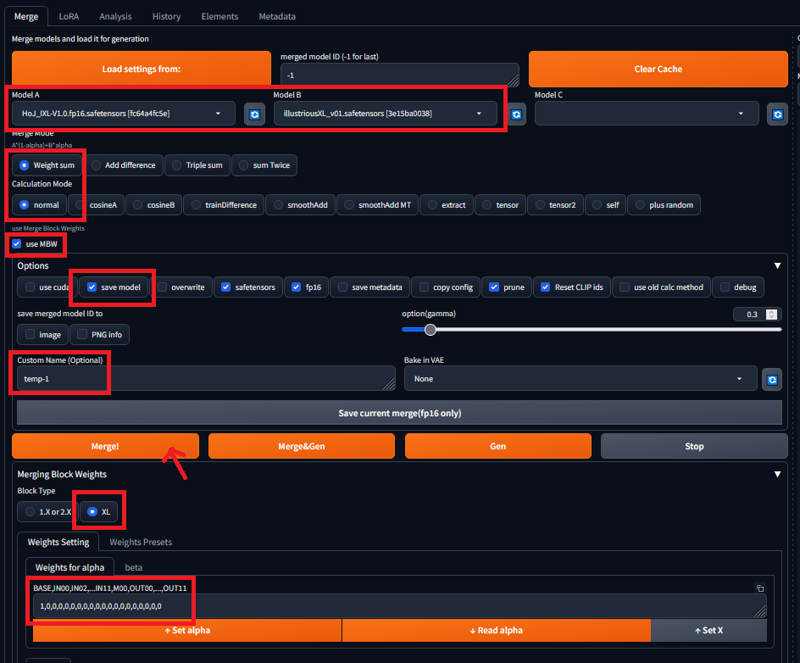

First, let's replace the CLIP with the Illustrious base CLIP using a block merge with only BASE set to 1 (and all the other blocks kept at 0).

For copy-pasting, here is the full list of block weights: `1,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0`
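To see why this list only touches the CLIP, here is a small sketch that labels the 20 weights and applies the plain weighted sum `A * (1 - w) + B * w` with each block's weight. The label order (BASE for the text encoder, then IN00-IN08, M00, OUT00-OUT08) is my assumption about Supermerger's SDXL layout, and `parse_weights` / `merge_key` are hypothetical helpers, not extension code.

```python
# Hypothetical sketch: label Supermerger's 20 SDXL block weights and show
# what a 1 or a 0 does in a plain weighted-sum merge.
from typing import Dict, List

# Assumed SDXL order: BASE (text encoder), IN00-IN08, M00, OUT00-OUT08.
LABELS: List[str] = (["BASE"] + [f"IN{i:02d}" for i in range(9)]
                     + ["M00"] + [f"OUT{i:02d}" for i in range(9)])


def parse_weights(text: str) -> Dict[str, float]:
    """Map a comma-separated weight list onto the block labels."""
    values = [float(v) for v in text.split(",")]
    if len(values) != len(LABELS):
        raise ValueError(f"expected {len(LABELS)} weights, got {len(values)}")
    return dict(zip(LABELS, values))


def merge_key(a: float, b: float, weight: float) -> float:
    """Weighted sum for one value: A * (1 - w) + B * w."""
    return a * (1 - weight) + b * weight


weights = parse_weights("1,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0")
print(merge_key(0.2, 0.8, weights["BASE"]))  # CLIP value: taken from B
print(merge_key(0.2, 0.8, weights["IN04"]))  # UNET value: kept from A
```

With BASE at 1 the text-encoder values come entirely from Model B, while every UNET block at 0 keeps Model A untouched.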

Hit "Merge!" and you'll get your "temp-1" checkpoint.

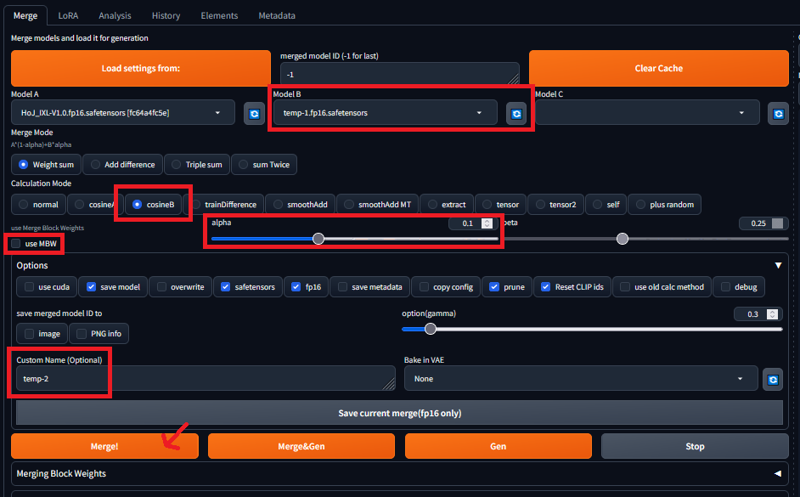

Hit the reload button next to Model B and select "temp-1". Now we are going to merge it back into the original model using CosineB. We can't do everything in a single merge, because CosineB with block merge still changes blocks whose weight is set to 0 (so remember to disable MBW here).

Hit "Merge!" and you'll get "temp-2" (you can throw away "temp-1" now if you want).

I am doing it this way to bring some of the original model's CLIP back, so it doesn't lose its "originality", while keeping most of the Illustrious CLIP.

Now, let's see the result! (While we wait for the generation, let's talk about how the Cosine modes work; you can skip the math if you want 😉)

When using CosineA or CosineB, the Cosine Similarity of the two models is first calculated, key by key (cosine similarity itself is symmetric, so the number is the same from A to B or from B to A). This results in a single number "k" per key showing how similar they are. This number (minus the alpha value) is what is used for the merge instead of just alpha.

Normally, it goes like this: `A * (1 - alpha) + B * alpha`

Now, we have: `A * k + B * (1 - k)`

This means that if the two models are too different at a given key, one model is preferred, and if they are very similar, the other one is (with the formula above, a low similarity gives a small k and pushes the result toward B). This helps smooth things out and, in general, means that:

- With CosineA, it will look like Model A with some details of Model B

- With CosineB, it will look like Model B with some details of Model A
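Here is a simplified sketch of that per-key computation, written directly from the two formulas above. It is not Supermerger's actual code: the real extension may normalize or rescale the similarity differently before subtracting alpha, and `cosine_similarity` / `cosine_merge` are hypothetical names working on plain lists instead of tensors.

```python
# Simplified sketch of a cosine-weighted merge for one key, following the
# formula A * k + B * (1 - k) with k = similarity - alpha.
# Assumption: NOT Supermerger's real code, which may rescale k differently.
import math
from typing import List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two flattened weight tensors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # arbitrary choice for all-zero tensors
    return dot / (norm_a * norm_b)


def cosine_merge(a: List[float], b: List[float], alpha: float) -> List[float]:
    """Merge one key: k = similarity - alpha, clamped to [0, 1]."""
    k = min(max(cosine_similarity(a, b) - alpha, 0.0), 1.0)
    # Similar keys (k near 1) lean toward A, different ones toward B.
    return [x * k + y * (1 - k) for x, y in zip(a, b)]


# Orthogonal tensors: similarity 0, so k = 0 and the result is B.
print(cosine_merge([1.0, 0.0], [0.0, 1.0], alpha=0.0))
# Partly similar tensors: even with alpha = 0 the result is not A.
print(cosine_merge([1.0, 0.0], [1.0, 1.0], alpha=0.0))
```

The second call illustrates why an alpha (or block weight) of 0 is not a "keep A" switch in the Cosine modes: k comes from the similarity, so A is only returned untouched when the two tensors already point the same way.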

Nice! (The flower is still in the drink, but the overall result feels clearer.)

At this point, you can continue your merging journey from a fresh base or just use "temp-2" as-is :D

Or, if you want, you can also try CosineA with a higher alpha. Since the UNETs of the two models are exactly the same ("temp-1" kept the original UNET, as all its UNET blocks were at 0), the UNET should not change, regardless of the computation :D
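The reason the UNET survives is simple arithmetic: when both models hold the same tensor for a key, `A * k + A * (1 - k)` is just `A`, whatever k the merge mode computes. A minimal sketch with a toy tensor (`mix` and `unet_weight` are hypothetical names for illustration):

```python
# Minimal sketch: mixing a tensor with itself returns the same tensor,
# whatever weight k the merge method picks (up to float rounding in general).
from typing import List


def mix(a: List[float], b: List[float], k: float) -> List[float]:
    """A * k + B * (1 - k), element by element."""
    return [x * k + y * (1 - k) for x, y in zip(a, b)]


# Toy stand-in for a UNET tensor that is identical in both models.
unet_weight = [0.5, -1.25, 2.0]
for k in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(k, mix(unet_weight, unet_weight, k))
```

So only the keys that actually differ (here, the CLIP) are affected by a higher alpha.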

Thanks for reading! 😊

NB: Here, I used Illustrious since HoJ is an Illustrious checkpoint, but you can also use an earlier checkpoint from your merge journey, from before you went all-out merging LoRAs and stuff :D