The Mechanism and Concept of Tagging in LoRA Creation [Based on Dataset Tag Editor]

Tagging is an essential part of creating a LoRA.

However, many people may not actually know how the tags they assign are used during training.

This article explains how tags function and how to apply them effectively for different purposes, assuming the use of the Dataset Tag Editor (DTE).

What Does It Mean to “Remove the Tags You Want It to Learn”?

You may have heard on YouTube or in blogs that you should “delete the words you want it to learn.”

But doesn’t that seem contradictory?

Shouldn’t you add tags for what you want the AI to learn?

This apparent contradiction disappears once you understand how the AI learns from tags.

AI Learns the “Relationship Between Tags and Images”

The AI doesn’t treat tags as mere labels; it learns the relationship between tags and the visual elements in the image.

For example, if an image with a red ribbon is tagged “red ribbon” or “red bow,” the AI associates those tags with the visual appearance of the ribbon.

So when you input “red ribbon” in a text-to-image (t2i) prompt, the LoRA uses this learned connection to generate a red ribbon.

Understanding the Mechanism of Trigger Words

A LoRA uses “trigger words” to activate its learned features.

However, these are not magic keywords.

How well they work also depends on the rest of the prompt and on the base model.

Think of them as helpful cues to call on the features the LoRA has learned.

What’s important here is that a trigger word is a type of tag.

When you don’t assign specific tags to visual elements during training, those features get associated with the trigger word instead.

This means the trigger word can serve as an umbrella, encapsulating the entire image content.

Used well, this lets you create a LoRA where “typing one word brings up everything.”
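To make the “untagged features go to the trigger word” idea concrete, here is a deliberately simplified bookkeeping model in Python. It is not how LoRA training actually works (training adjusts weights through gradient-based optimization, not by handing out credit tag by tag); it only mirrors the rule described above. The trigger word `mychar`, the feature names, and the functions `credit_features` and `absorbed_by_trigger` are all hypothetical.

```python
from collections import Counter


def credit_features(features, caption_tags, trigger):
    """Toy rule: a visible feature is credited to the caption tag with the
    same name if one exists; otherwise it falls back to the trigger word."""
    tags = set(caption_tags)
    return {feature: (feature if feature in tags else trigger) for feature in features}


def absorbed_by_trigger(dataset, trigger):
    """Count how often each feature ends up credited to the trigger word."""
    counts = Counter()
    for features, caption_tags in dataset:
        for feature, owner in credit_features(features, caption_tags, trigger).items():
            if owner == trigger:
                counts[feature] += 1
    return counts


# Two hypothetical images of one character. Features use the same names a tag
# would use; hair and shirt are deliberately left untagged in both captions.
dataset = [
    ({"black hair", "orange shirt", "smile", "window"}, ["mychar", "smile", "window"]),
    ({"black hair", "orange shirt", "sitting"}, ["mychar", "sitting"]),
]
print(absorbed_by_trigger(dataset, "mychar"))
```

Running it reports a count of 2 for both “black hair” and “orange shirt”: the features that never got a tag of their own, which in this toy model all end up under the trigger word.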

Comparing 3 Tagging Patterns

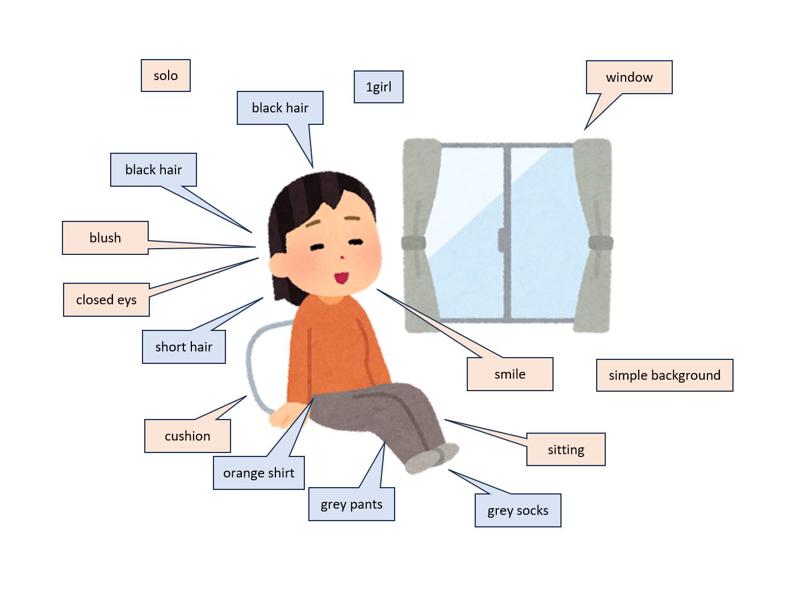

Tagging means choosing which of the tags extracted by an interrogator to keep: tags for appearance, expressions, poses, background, props, and so on.

Choosing what to include or exclude significantly affects LoRA quality.

Let’s compare three patterns, assuming you want to train a LoRA that learns only a character’s appearance and clothing.

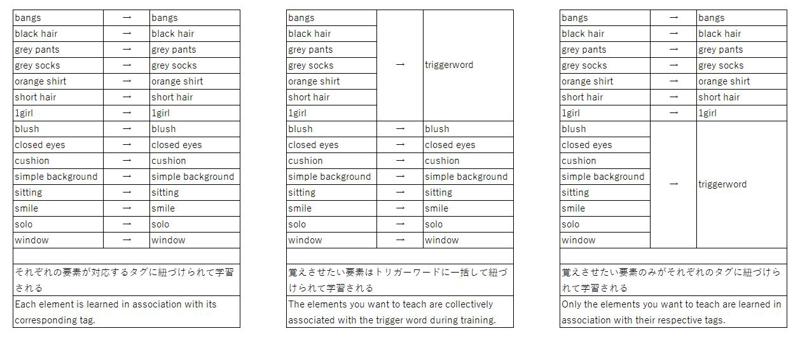

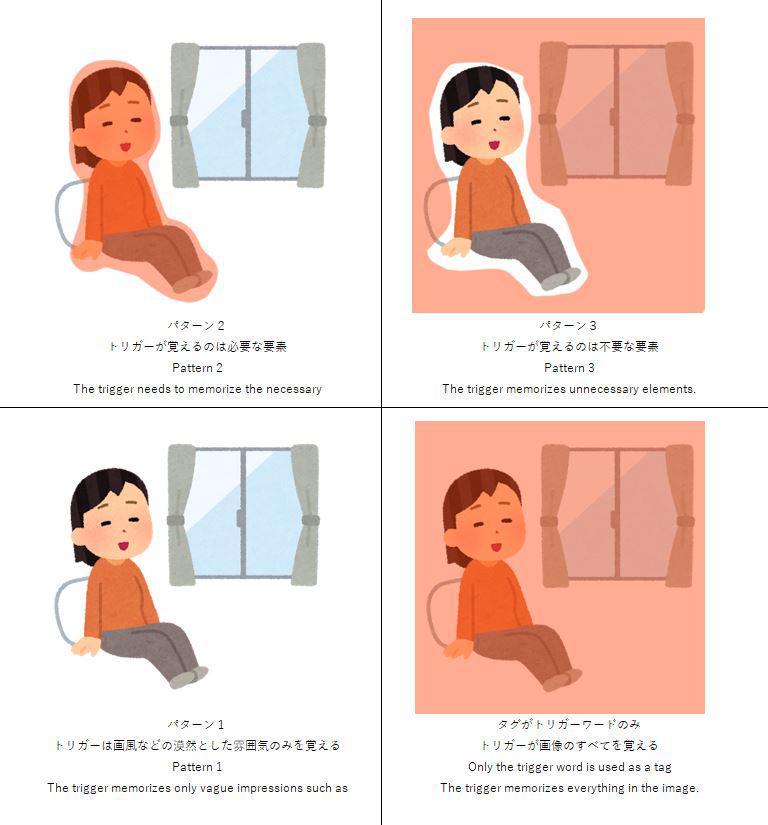

Pattern 1: Apply All Tags

You keep all the tags extracted by the interrogator.

Tags:

1girl, bangs, black hair, blush, closed eyes, cushion, grey pants, grey socks, orange shirt, short hair, simple background, sitting, smile, solo, window

Characteristics & Effects:

Each element is learned separately and weakly.

Outcomes:

Each tag’s learning density is low → reduced reproducibility.

You must explicitly specify tags in prompts.

The trigger word has minimal effect, because nearly every feature is already linked to its own tag rather than to the trigger word.
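For reference, here is a minimal Python sketch of what a Pattern 1 caption looks like on disk. It assumes a common setup: each image has a same-named `.txt` caption file holding comma-separated tags, with the trigger word placed first. Check your own trainer’s caption settings before relying on that. The trigger word `mychar` and the helper names are hypothetical.

```python
from pathlib import Path


def caption_path(image_path):
    """Assumed convention: the caption sits next to the image, same name, .txt extension."""
    return Path(image_path).with_suffix(".txt")


def format_caption(trigger, tags):
    """Put the trigger word first, then the tags, as one comma-separated line.
    Blank entries and duplicates are dropped; the original order is kept."""
    seen = set()
    ordered = []
    for tag in [trigger, *tags]:
        tag = tag.strip()
        if tag and tag not in seen:
            seen.add(tag)
            ordered.append(tag)
    return ", ".join(ordered)


# Pattern 1: every tag the interrogator produced is kept.
interrogated = [
    "1girl", "bangs", "black hair", "blush", "closed eyes", "cushion",
    "grey pants", "grey socks", "orange shirt", "short hair",
    "simple background", "sitting", "smile", "solo", "window",
]
print(caption_path("dataset/img001.png").name)
print(format_caption("mychar", interrogated))
```

To save a caption, the standard-library call `caption_path(img).write_text(caption, encoding="utf-8")` is enough.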

Pattern 2: Remove Tags You Want to Learn (Keep Only Unwanted Tags)

You don’t tag the elements you want to learn.

Instead, you tag only the unwanted elements, and use a trigger word to encapsulate everything else.

Tags:

1girl, blush, closed eyes, cushion, simple background, sitting, smile, solo, window

Characteristics & Effects:

All learning elements are triggered with one word.

Outcomes:

Character appearance & outfit are recalled by the trigger word.

Tagged unwanted elements (e.g., background) won’t affect output unless prompted.

If you add details for those features manually in the prompt, they may conflict with the base model’s own version of them.

Ideal for multi-outfit LoRAs, since giving each outfit its own trigger word keeps them clearly separated.
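Pattern 2 boils down to a set difference: start from the interrogator’s tags, remove the ones describing what you want learned, and put the trigger word in front. A minimal Python sketch using the example tags above; the trigger word `mychar` and the function name are hypothetical.

```python
def pattern2_caption(trigger, interrogated, to_learn):
    """Pattern 2: delete the tags for features the LoRA should learn, so those
    features have no tag of their own; unwanted elements stay tagged."""
    learn = set(to_learn)
    return [trigger] + [tag for tag in interrogated if tag not in learn]


interrogated = [
    "1girl", "bangs", "black hair", "blush", "closed eyes", "cushion",
    "grey pants", "grey socks", "orange shirt", "short hair",
    "simple background", "sitting", "smile", "solo", "window",
]
# Appearance and clothing: the features this LoRA should pick up.
to_learn = ["bangs", "black hair", "grey pants", "grey socks", "orange shirt", "short hair"]

print(", ".join(pattern2_caption("mychar", interrogated, to_learn)))
# → mychar, 1girl, blush, closed eyes, cushion, simple background, sitting, smile, solo, window
```

For a multi-outfit LoRA you would call it once per outfit with a different trigger word (for example, hypothetical `mychar_uniform` and `mychar_casual`), passing that outfit’s own `to_learn` list.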

Pattern 3: Keep Only Tags You Want to Learn (Delete All Unwanted Tags)

You tag only the desired features and delete everything else, such as background and props.

Tags:

bangs, black hair, grey pants, grey socks, orange shirt, short hair

Characteristics & Effects:

High accuracy per tag; fine control via prompts.

Outcomes:

The LoRA may show little effect from the trigger word alone.

You can directly summon each element by using its tag.

In a multi-outfit LoRA, tags shared between outfits may cause them to blend, so you may need more distinctive wording for each (e.g., “blue uniform” vs. “navy school outfit”).
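Pattern 3 is the mirror image: keep only the tags you want learned. Because the multi-outfit risk comes from tags shared between outfits, the sketch below also includes a small check that lists them. Everything here (function names, trigger word, outfit tags) is a hypothetical illustration, not a Dataset Tag Editor feature.

```python
def pattern3_caption(trigger, interrogated, to_learn):
    """Pattern 3: keep only the tags for features the LoRA should learn;
    background, prop, pose and expression tags are deleted."""
    learn = set(to_learn)
    return [trigger] + [tag for tag in interrogated if tag in learn]


def shared_tags(outfits):
    """Map each tag used by more than one outfit to the outfits using it.
    These are the tags most likely to make outfits bleed into each other."""
    users = {}
    for outfit, tags in outfits.items():
        for tag in set(tags):
            users.setdefault(tag, []).append(outfit)
    return {tag: sorted(names) for tag, names in users.items() if len(names) > 1}


interrogated = [
    "1girl", "bangs", "black hair", "blush", "closed eyes", "cushion",
    "grey pants", "grey socks", "orange shirt", "short hair",
    "simple background", "sitting", "smile", "solo", "window",
]
to_learn = ["bangs", "black hair", "grey pants", "grey socks", "orange shirt", "short hair"]
print(", ".join(pattern3_caption("mychar", interrogated, to_learn)))
# → mychar, bangs, black hair, grey pants, grey socks, orange shirt, short hair

# Hypothetical two-outfit dataset: "blue jacket" appears in both.
outfits = {
    "uniform": ["school uniform", "blue jacket", "red ribbon"],
    "casual": ["blue jacket", "jeans"],
}
print(shared_tags(outfits))
# → {'blue jacket': ['casual', 'uniform']}
```

Any tag the check reports is a candidate for renaming per outfit, in the spirit of the “blue uniform” vs. “navy school outfit” example.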

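The three patterns compare as follows:

Pattern 1 (all tags kept): each feature is learned weakly under its own tag; the trigger word does little.

Pattern 2 (learned features untagged): the trigger word recalls the character and outfit in one go; well suited to multi-outfit LoRAs.

Pattern 3 (only learned features tagged): each feature is called up by its own tag; the trigger word alone does little.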

Summary: Tagging Depends on “What You Want to Teach”

LoRA behavior changes significantly based on how tags are used.

The key is to clearly define your goal upfront:

“Do I want it to learn everything?” or

“Do I want to teach it something specific?”

Advanced: Using Tags to Control Blurry Results

If your dataset contains blurry or low-quality images, your LoRA output may look “fuzzy.”

Tags can help isolate and control this.

Example:

Tag blurry images with “blurry.”

→ The LoRA learns that “blurry” means low sharpness.

Then avoid using “blurry” in your prompts.

→ This helps suppress the blurry trait and achieve clearer output.

This is an effective technique to “isolate unwanted traits with tags.”
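Deciding which images count as blurry is usually done by eye, and interrogators trained on Danbooru-style tags may already output “blurry” for some images. If you want an automatic first pass, one common heuristic (swapped in here; the article does not prescribe it) is the variance of the Laplacian: sharp images have strong edges and a high variance, blurry ones a low variance. The pure-Python sketch below takes a grayscale image as a list of pixel rows. The threshold of 100 and the function names are hypothetical and would need tuning for your own images.

```python
# Hypothetical cut-off; it depends on resolution and art style, so tune it on your own data.
BLUR_THRESHOLD = 100.0


def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian of a grayscale image (rows of 0-255
    values, at least 3x3). Sharp edges give large responses; blur gives small ones."""
    h, w = len(gray), len(gray[0])
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1] - 4 * gray[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def tag_if_blurry(tags, gray, threshold=BLUR_THRESHOLD):
    """Return a copy of the tags with 'blurry' appended when the image scores low."""
    if laplacian_variance(gray) < threshold and "blurry" not in tags:
        return [*tags, "blurry"]
    return list(tags)


flat = [[128] * 5 for _ in range(5)]                                   # no edges at all
checker = [[255 * ((x + y) % 2) for x in range(5)] for y in range(5)]  # maximal edges
print(tag_if_blurry(["1girl"], flat))     # → ['1girl', 'blurry']
print(tag_if_blurry(["1girl"], checker))  # → ['1girl']
```

In practice you would load pixels with an image library such as Pillow and still review the flagged images yourself, since illustrations with large flat-colored areas can score low without being blurry.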

A Fun Real-World Example

Here’s an interesting case that might seem strange unless you understand tagging:

Someone trained a bunny-eared character using Pattern 2 (untagged learning + trigger word).

When they added “rabbit ears” to the prompt during generation, the output showed four ears.

Why? Because:

The trigger word already included the bunny ears from training.

Adding “rabbit ears” in the prompt brought in another pair from the base model.

So, both were generated, resulting in four ears!

This may seem like a bug, but with tagging knowledge, it makes perfect sense.
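The four-ear problem can be caught before generating. With a Pattern 2 LoRA, any prompt tag that names a feature you removed from the captions is one the trigger word already covers. Here is a small Python check, assuming you keep a list of those removed tags. The function name `redundant_prompt_tags`, the trigger word `bunchar`, and the extra example features are hypothetical.

```python
def redundant_prompt_tags(prompt, absorbed_tags):
    """Return the prompt tags that name a feature the trigger word already carries,
    i.e. tags that were deliberately removed from the Pattern 2 captions.
    Only plain comma-separated tags are checked: weighted forms such as
    "(rabbit ears:1.2)" and synonyms such as "bunny ears" are not detected."""
    absorbed = {tag.strip().lower() for tag in absorbed_tags}
    prompt_tags = [tag.strip() for tag in prompt.split(",")]
    return [tag for tag in prompt_tags if tag.lower() in absorbed]


# Tags removed from the training captions so the trigger word would absorb them.
absorbed = ["rabbit ears", "white hair", "red eyes"]
print(redundant_prompt_tags("bunchar, 1girl, rabbit ears, smile", absorbed))
# → ['rabbit ears']
```

Dropping a flagged tag from the prompt should leave only the ears the trigger word already brings.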

※ This article is based on self-study and personal interpretation. Please feel free to point out any inaccuracies.