Level Up Your Local Prompts: Big Update to the AI Prompt Enhancer!

Update: changes have been made to the app thanks to a couple of suggestions in the comments:

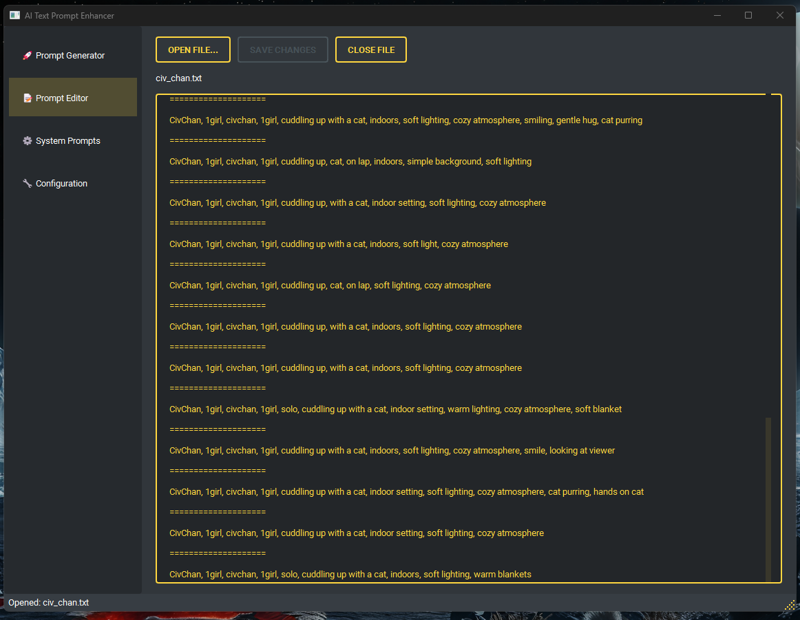

Prompt Editor tab added (a simple text editor for manually checking and editing generated prompts).

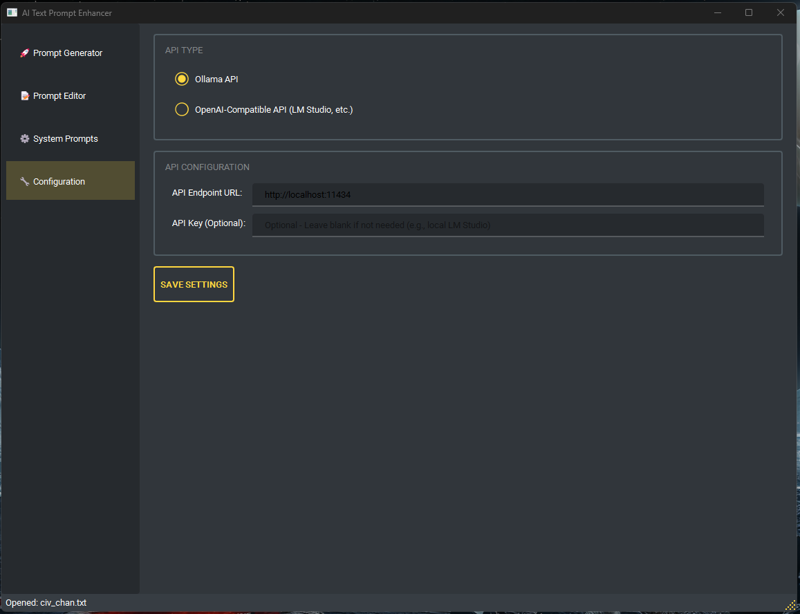

Configuration tab added (set the API and API keys within the app; settings are remembered between sessions, and you can still edit the config file manually).

Support for OpenAI-compatible APIs such as LM Studio. In theory it should also work with ChatGPT, Jan, and other APIs that mimic the OpenAI API. I haven't tested them all because there are so many, so let me know if you run into issues.
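If you're wondering what "OpenAI-compatible" means in practice, these servers all accept the same chat-completions request shape. Here's a minimal sketch using only the standard library. I'm not claiming this is how the app's `api_client.py` works (the app lists `requests` as a dependency). `build_chat_request` and `send_chat_request` are hypothetical helpers. The base URL `http://localhost:1234/v1` (LM Studio's usual default) and the model name `local-model` are placeholder assumptions, so swap in whatever your server reports.

```python
import json
import urllib.request
from typing import Optional

def build_chat_request(base_url: str, model: str, system_prompt: str,
                       user_prompt: str, api_key: Optional[str] = None):
    """Assemble URL, headers and JSON body for an OpenAI-style chat completion."""
    url = base_url.rstrip("/") + "/chat/completions"
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Hosted APIs expect a bearer token; many local servers don't check it.
        headers["Authorization"] = f"Bearer {api_key}"
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }
    return url, headers, body

def send_chat_request(url: str, headers: dict, body: dict, timeout: float = 120) -> str:
    """POST the request and return the text of the first choice."""
    req = urllib.request.Request(url, data=json.dumps(body).encode("utf-8"),
                                 headers=headers, method="POST")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]

# Example (placeholder URL and model name, needs a running server):
# url, headers, body = build_chat_request("http://localhost:1234/v1", "local-model",
#                                         "You write image prompts.", "A foggy harbour at dawn")
# print(send_chat_request(url, headers, body))
```

The `Authorization` header is only added when a key is set. Hosted services need one, while local servers usually don't, so the same code path can serve both.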

Thank you to everyone who suggested changes and additions in the comments and through DMs. Feel free to keep the feedback coming: this is my first project I've wanted to keep improving and updating, so all feedback is welcome :)

The project is on GitHub: https://github.com/LamEmil/ai-prompt-enhancer

Some of you might remember the simple local prompt enhancer script I shared a couple of days ago – a basic Python script to interact with local AI tools like Ollama and generate better prompts based on examples. Well, I have to admit, I got hooked! I've been using it non-stop across different AI generators, and while it worked, using it that much made me realize it could be so much more.

So, I decided to give it a major overhaul! It's evolved from that initial script into a proper desktop application with a focus on a much-improved user experience, cleaner code under the hood, and features designed for long-term, practical use.

If you found the original useful, I think you'll love this new version! I've uploaded the complete project to GitHub so you can grab it easily: https://github.com/LamEmil/ai-prompt-enhancer

What's New and Improved?

This wasn't just a minor tweak; it was a significant rewrite aimed at making the tool more powerful and pleasant to use.

Modern, User-Friendly Interface:

Gone is the basic Tkinter UI! The app now uses PySide6 (Qt for Python) to create a clean, modern dark UI.

The layout now features a navigation sidebar to switch between the main "Generator" and the "System Prompts" management areas, keeping things organized.

Controls are grouped logically, making it more intuitive to find what you need.

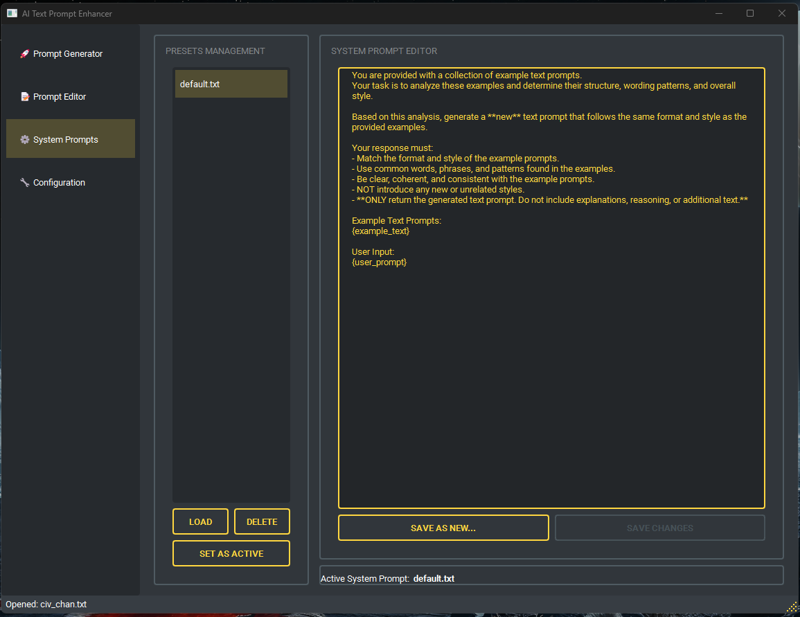

Robust System Prompt Management: This was a key area for improvement!

Editable System Prompts: You're no longer stuck with the instructions I initially wrote. You can now directly edit the core system prompt (the instructions given to the AI model) within the app.

Save/Load Presets: Experiment with different instruction styles! You can save multiple system prompt variations as named presets (e.g., "Creative Style", "Technical Explainer", "Concise Prompts", "Suno prompts", "wan prompts").

Set Active Prompt: Easily select which saved preset is currently active for generation, allowing you to quickly switch the AI's behavior without constant editing. The app remembers your active prompt between sessions.

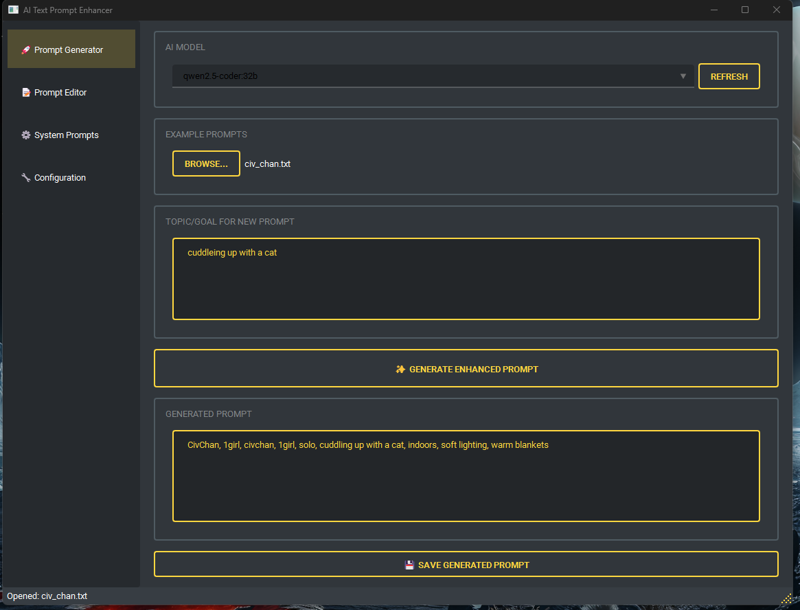
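Here's a rough sketch of how named presets and an "active" selection can survive between sessions by keeping everything in one JSON file. The `PresetStore` class, the file layout, and the `presets`/`active` keys are all hypothetical. They aren't necessarily what the app stores on disk.

```python
import json
from pathlib import Path
from typing import Dict, Optional

class PresetStore:
    """Hypothetical JSON-backed store for named system prompts and the active one."""

    def __init__(self, path):
        self.path = Path(path)
        self.presets: Dict[str, str] = {}
        self.active: Optional[str] = None
        if self.path.exists():
            data = json.loads(self.path.read_text(encoding="utf-8"))
            self.presets = data.get("presets", {})
            self.active = data.get("active")

    def _write(self) -> None:
        # Persist after every change so nothing is lost when the app closes.
        payload = {"presets": self.presets, "active": self.active}
        self.path.write_text(json.dumps(payload, indent=2), encoding="utf-8")

    def save_preset(self, name: str, prompt_text: str) -> None:
        self.presets[name] = prompt_text
        self._write()

    def set_active(self, name: str) -> None:
        if name not in self.presets:
            raise KeyError(f"Unknown preset: {name}")
        self.active = name
        self._write()

    def active_prompt(self) -> Optional[str]:
        # Returns None if no preset has been marked active yet.
        return self.presets.get(self.active) if self.active else None
```

Because the active name is saved with the presets, a fresh `PresetStore` pointed at the same file picks up where the last session left off.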

Streamlined Output Saving: This is a huge convenience boost!

Instead of just printing the output, there's now a "Save Generated Prompt" button.

The first time you click it, you choose a text file to save to.

Every subsequent time you click save, the newly generated prompt is automatically appended to the end of that same file, neatly separated.

Why is this great? I found this incredibly useful for generating batches of prompts. You can just sit there, generate variations, hit save, and end up with a single `.txt` file ready to be loaded directly into tools like ComfyUI (using file inputs for nodes like Load String) or other batch-processing workflows, completely eliminating manual copy-pasting!
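Under the hood, the save-then-append behaviour boils down to remembering the chosen path and opening it in append mode. Below is a small sketch with a hypothetical `PromptSaver` class. In the app the first path comes from a file dialog, but here it's just passed in. The blank-line separator is also an assumption: if your downstream loader expects exactly one prompt per line, you'd want the separator to match that instead.

```python
from pathlib import Path
from typing import Optional

class PromptSaver:
    """Hypothetical helper: pick a file once, then append every later save to it."""

    def __init__(self, separator: str = "\n\n"):
        self.separator = separator  # assumed separator between saved prompts
        self.target: Optional[Path] = None

    def save(self, prompt: str, choose_path=None) -> Path:
        if self.target is None:
            # First save: the caller (e.g. a file dialog) must supply a path.
            if choose_path is None:
                raise ValueError("No target file chosen yet")
            self.target = Path(choose_path)
        text = prompt.strip()
        # Only add a separator when the file already has content.
        needs_sep = self.target.exists() and self.target.stat().st_size > 0
        with self.target.open("a", encoding="utf-8") as f:
            if needs_sep:
                f.write(self.separator)
            f.write(text)
        return self.target
```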

Under-the-Hood Cleanup:

The code is now structured into multiple files (`main_window.py`, `api_client.py`, etc.), making it cleaner, easier to understand, and potentially easier for others (or future me!) to modify or contribute to.

API calls run in separate threads, keeping the UI responsive even during generation.
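The general pattern for keeping a UI responsive is to run the blocking HTTP call on a worker thread and hand the result back through a callback. This sketch uses plain `threading` from the standard library to show the idea, and `run_in_background` is a hypothetical helper. A Qt app like this one would more typically use `QThread` or a worker object with signals so that results arrive back on the GUI thread.

```python
import threading
from typing import Callable

def run_in_background(task: Callable[[], str],
                      on_done: Callable[[str], None],
                      on_error: Callable[[Exception], None]) -> threading.Thread:
    """Run a blocking task (e.g. an API call) off the main thread."""
    def worker():
        try:
            result = task()
        except Exception as exc:  # report failures instead of killing the thread silently
            on_error(exc)
        else:
            on_done(result)

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread  # the main thread stays free while the task runs
```

One caveat if you adapt this: Qt widgets should only be touched from the GUI thread. In a PySide6 app, the callbacks would emit a signal rather than update widgets directly.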

Configuration (like the API endpoint) is managed in a simple `config.json` file.
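Loading a JSON config with sensible fallbacks only takes a few lines. The keys below (`api_type`, `api_url`, `api_key`) are made up for illustration and may not match the real `config.json`. The idea is that defaults fill in anything missing, and a hand-edited file with a typo doesn't crash the app.

```python
import json
from pathlib import Path

# Hypothetical defaults; the real config.json keys may differ.
DEFAULT_CONFIG = {
    "api_type": "ollama",
    "api_url": "http://localhost:11434",
    "api_key": "",
}

def load_config(path) -> dict:
    """Return the defaults overlaid with whatever valid settings the file contains."""
    path = Path(path)
    config = dict(DEFAULT_CONFIG)
    if path.exists():
        try:
            data = json.loads(path.read_text(encoding="utf-8"))
        except json.JSONDecodeError:
            data = None  # broken hand edit: fall back to defaults
        if isinstance(data, dict):
            config.update(data)
    return config

def save_config(path, config: dict) -> None:
    """Write settings back so they persist between sessions."""
    Path(path).write_text(json.dumps(config, indent=2), encoding="utf-8")
```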

Core Features (Still Included!)

Of course, the core functionality remains:

Connects to a local AI API (defaults to `http://localhost:11434` for Ollama, but configurable in app).

Loads example prompts from a `.txt` file to learn the desired style/format.

Generates new prompts based on those examples and the topic/goal you provide.
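For the curious, the core loop roughly looks like this. It reads the examples, folds them into a request, and sends that to Ollama's `/api/generate` endpoint with streaming turned off so a single JSON object comes back. This is a simplified sketch, not the app's actual code. It assumes examples are separated by blank lines, and `load_examples`, `build_user_prompt` and `generate` are hypothetical helper names.

```python
import json
import urllib.request
from pathlib import Path
from typing import List

def load_examples(path) -> List[str]:
    """Read example prompts, assuming blank lines separate them."""
    text = Path(path).read_text(encoding="utf-8")
    return [block.strip() for block in text.split("\n\n") if block.strip()]

def build_user_prompt(examples: List[str], topic: str) -> str:
    """Number the examples so the model sees the target style, then state the topic."""
    numbered = "\n".join(f"{i}. {ex}" for i, ex in enumerate(examples, start=1))
    return (f"Here are example prompts:\n{numbered}\n\n"
            f"Write one new prompt in the same style about: {topic}")

def generate(prompt: str, system: str, model: str,
             base_url: str = "http://localhost:11434", timeout: float = 300) -> str:
    """Call Ollama's /api/generate without streaming and return the generated text."""
    body = {"model": model, "prompt": prompt, "system": system, "stream": False}
    req = urllib.request.Request(base_url.rstrip("/") + "/api/generate",
                                 data=json.dumps(body).encode("utf-8"),
                                 headers={"Content-Type": "application/json"},
                                 method="POST")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["response"]
```

As an example, assuming Ollama is running and you've pulled a model named `llama3`, you could call `generate(build_user_prompt(load_examples("examples.txt"), "a lighthouse in a storm"), system="You write image prompts.", model="llama3")`.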

Getting Started

You can get the code from: https://github.com/LamEmil/ai-prompt-enhancer

Check the README: The `README.md` file on GitHub has the latest requirements and setup instructions.

Install Libraries: You'll primarily need Python 3, `PySide6`, `qt-material`, and `requests`. Using the `requirements.txt` file from the repo is the easiest way: `pip install -r requirements.txt`

Run: Launch the app with `python run_app.pyw` or double-click the file.

Let me know what you think if you give it a try!