Recently I got tired of people on Reddit telling me how good ComfyUI is while refusing to share a working workflow or anything substantial.

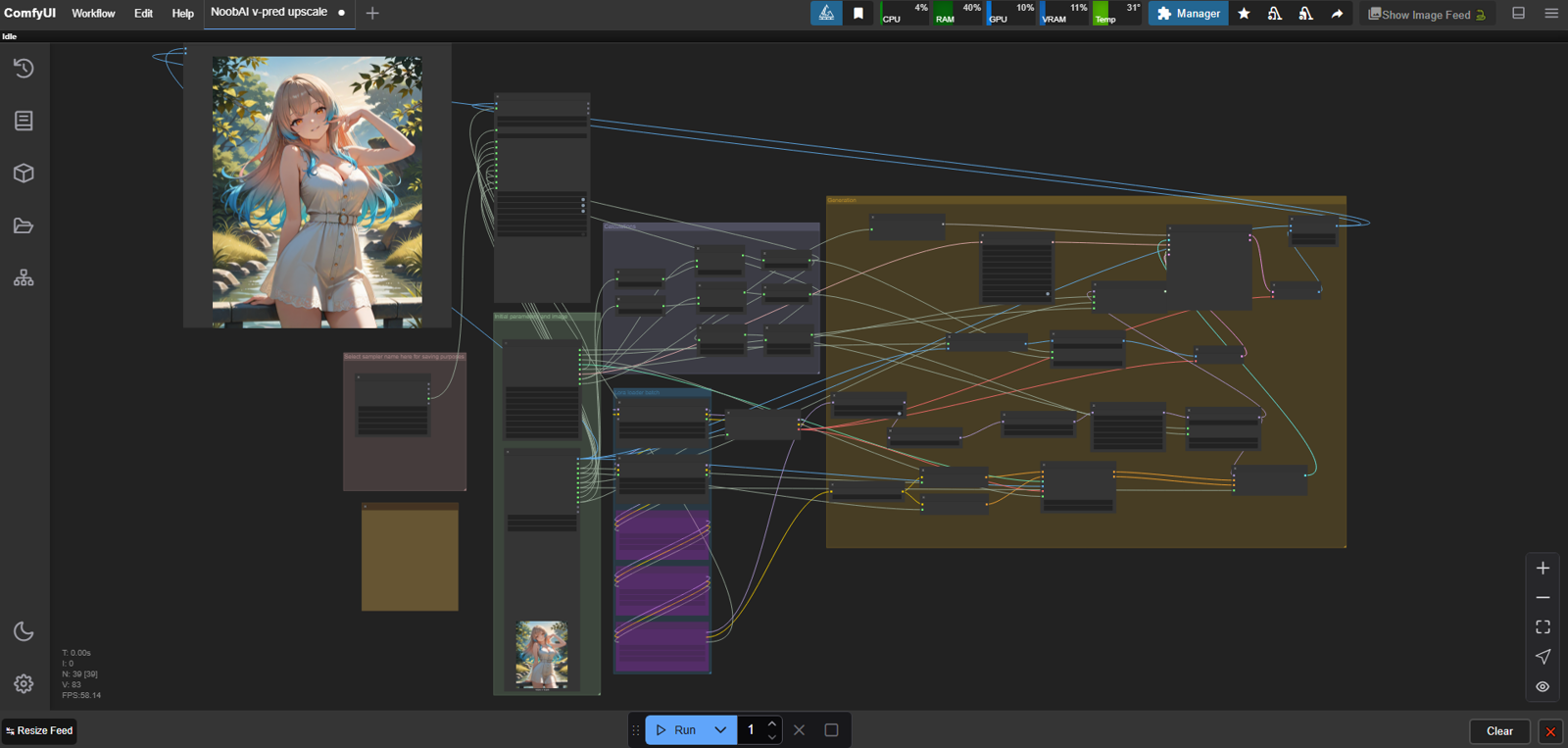

Previously I made a set of guides on making NoobAI v-pred work in ForgeUI. While we are all waiting for the full release of the RouWei model (and if you are not, shame on you), I wanted to recreate my Forge experience in Comfy. This is basically my first attempt at making a workflow that I could use myself in Comfy. Before that I tried a couple of workflows from other authors and searched for more, but all the v-pred ones I found were missing even the ModelSamplingDiscrete node. Of course, they did not work well.

So I set out on a quest not just to make it work, but also to produce results at least comparable to what I get in Forge. Take it with a grain of salt: I cannot call myself "a top tier AI artist", but I have my preferences, and I have sunk enough time into this to know the goods and bads. I hopped on this train back when SD1.5 was already mature but SDXL was not out yet.

TLDR: Comfy is not there yet in terms of usability. Custom nodes can fix some things, but not really. I had fun building workflows, but using them was not that fun. I'm back to Forge.

What did I try to recreate? My process is to work on the prompt at normal resolution until I get something satisfactory. Then I inpaint the image to add details and fix problems. Finally, I upscale it using MoD (Mixture of Diffusers) and fix the details and inconsistencies introduced by the upscale.

First of all, you cannot simply send an image from one workflow to another in Comfy. By that I mean not just moving the image, but moving all its associated parameters with it, like prompt, sampler, etc. In general, Comfy is usually 3-4 clicks, sometimes whole extra actions, behind every other UI, which is infuriating. Also, the workflows I built are slower than Forge, which is kinda lol for SDXL. Granted, I could use TeaCache, convert to TensorRT on the fly, etc. to speed things up, but really, that was unexpected.

I found 4 ways to save metadata in an image, but only one of them can actually load anything back from it: SD Prompt Reader. And what it reads from an image saved with the SD Prompt Saver node is not enough to save it again. Really? Guess the tech is not there yet.
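For context on what "all associated parameters" means: A1111 and Forge store them in the PNG as a single plain-text "parameters" block (the prompt, a "Negative prompt:" line, then one comma-separated settings line). Below is a minimal parsing sketch, assuming that layout. It is loosely modeled on how A1111 splits the settings line; it is not SD Prompt Reader's code, and it ignores edge cases like a prompt that itself contains "Negative prompt:".

```python
import re

# One "Key: value" pair from the settings line. A value is either a quoted
# string (which may contain commas, e.g. Lora hashes) or runs up to the next comma.
PAIR_RE = re.compile(r'\s*(\w[\w \-/]+):\s*("(?:\\.|[^\\"])+"|[^,]*)(?:,|$)')


def parse_parameters(text: str) -> dict:
    """Split an A1111-style 'parameters' block into prompt, negative prompt and settings."""
    lines = text.strip().split("\n")
    settings_line = ""
    # Only treat the last line as settings if it has several Key: value pairs
    if len(PAIR_RE.findall(lines[-1])) >= 3:
        settings_line = lines.pop()

    prompt_lines, negative_lines = [], []
    target = prompt_lines
    for line in lines:
        if line.startswith("Negative prompt:"):
            target = negative_lines
            line = line[len("Negative prompt:"):].strip()
        target.append(line)

    result = {
        "Prompt": "\n".join(prompt_lines).strip(),
        "Negative prompt": "\n".join(negative_lines).strip(),
    }
    for key, value in PAIR_RE.findall(settings_line):
        value = value.strip()
        if len(value) >= 2 and value.startswith('"') and value.endswith('"'):
            value = value[1:-1]  # drop the surrounding quotes
        result[key.strip()] = value
    return result


# Made-up example block in the A1111 layout
SAMPLE = (
    "1girl, solo, masterpiece\n"
    "Negative prompt: lowres, bad anatomy\n"
    "Steps: 28, Sampler: Euler a, CFG scale: 5, Seed: 42, Size: 832x1216, "
    'Lora hashes: "styleA: abc123, styleB: def456"'
)

if __name__ == "__main__":
    print(parse_parameters(SAMPLE))
```

Everything comes back as strings. Round-tripping to another workflow would still need to map keys like "Sampler" onto whatever the target nodes expect, which is exactly the part Comfy makes painful.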

Back to workflows.

I organised them just enough for you to be able to link them up or incorporate parts into your own workflows.

Basic workflow

It is, well, basic: it generates images and loads LoRAs, barebones. But it features:

Incorporating v-pred-specific nodes (RescaleCFG and ModelSamplingDiscrete)

Incorporating all the goodies I consider valuable for generating waifus: Detail Daemon, SAG and FreeU (I had to use a custom node for FreeU because the official implementation has no start at/stop at settings, which makes it unusable for me)

Saving ALL metadata with Civitai-compatible hashes. All the LoRAs you used will now be properly linked, thanks to the SD Prompt Reader extension.
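Of the v-pred nodes above, ModelSamplingDiscrete mostly just tells Comfy to treat the model as v_prediction. RescaleCFG is the one with actual math. With v-pred (especially zero-terminal-SNR) models, plain CFG tends to overexpose the image, so the guided output gets rescaled back toward the standard deviation of the conditional output (Lin et al., "Common Diffusion Noise Schedules and Sample Steps are Flawed"). Here is a minimal sketch of just that formula on flat Python lists. ComfyUI's node works on tensors per image and, as far as I know, converts the prediction into v-space first, so treat this as the math only. The 0.7 default follows the value suggested in the paper.

```python
from statistics import pstdev


def rescale_cfg(cond, uncond, cfg_scale, multiplier=0.7):
    """Rescaled classifier-free guidance on flat lists of model outputs (sketch)."""
    # Plain CFG: push the prediction away from the unconditional one
    x_cfg = [u + cfg_scale * (c - u) for c, u in zip(cond, uncond)]
    # Rescale so the guided output has the same std as the conditional output
    # (assumes x_cfg is not constant, otherwise pstdev is 0)
    ratio = pstdev(cond) / pstdev(x_cfg)
    x_rescaled = [x * ratio for x in x_cfg]
    # Blend: multiplier=0 gives plain CFG back, multiplier=1 is fully rescaled
    return [multiplier * r + (1 - multiplier) * x for r, x in zip(x_rescaled, x_cfg)]
```

The multiplier is the knob on the RescaleCFG node. At 0 you get plain CFG back, and higher values pull the contrast down toward the conditional prediction.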

Inpaint workflow

Basically a copy of the official workflow for the (unofficial, lol) Inpaint Crop and Stitch node, with v-pred-specific inference added. It is the best I found, but nowhere near old A1111. To inpaint the full image here, you have to add a second mask and adjust the target resolution. It also does not support upscaling the cropped area with an upscale model, because that is a different pipeline in Comfy. Still, it is the best I found: it does not degrade the image outside the mask, it basically implements Soft Inpainting for seamless stitching back into the image, and so on.
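Roughly, this is how crop-and-stitch keeps the rest of the image intact. Only a padded box around the mask gets inpainted, and the result is pasted back through a soft (blurred) mask, so pixels where the mask is 0 come straight from the original. The sketch below is simplified grayscale code with hypothetical helpers (`mask_bbox`, `expand_bbox`, `stitch`), not the node's code. The real node also resizes the crop to the target resolution and back, which is left out here.

```python
def mask_bbox(mask):
    """Bounding box (x0, y0, x1, y1), end-exclusive, of nonzero pixels in a 2D mask."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not ys:
        return None  # empty mask, nothing to inpaint
    return min(xs), min(ys), max(xs) + 1, max(ys) + 1


def expand_bbox(bbox, pad, width, height):
    """Grow the box by `pad` pixels of context on each side, clamped to the image."""
    x0, y0, x1, y1 = bbox
    return max(0, x0 - pad), max(0, y0 - pad), min(width, x1 + pad), min(height, y1 + pad)


def stitch(original, inpainted_crop, soft_mask, bbox):
    """Paste the inpainted crop back, blending by the soft mask (values 0..1)."""
    x0, y0, x1, y1 = bbox
    out = [row[:] for row in original]  # pixels outside the box are never touched
    for y in range(y0, y1):
        for x in range(x0, x1):
            m = soft_mask[y][x]
            out[y][x] = (1 - m) * original[y][x] + m * inpainted_crop[y - y0][x - x0]
    return out
```

The context padding is what gives the model enough surroundings to match the style. The soft blend is what hides the seam.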

Upscale workflow

This is where I got the most elitism and sheer hatred in Reddit "discussions". In case you are unaware: A1111 has Hires. fix. It upscales, and it is really good. But someone made a node in Comfy that does a simple upscale via img2img and called it hi-res fix. That is easy to check: launch Forge, do a generation with Hires. fix enabled, then paste the original into img2img and try the same upscale there. Nowhere near. I spent months trying to figure out a better way, and I was not the only one.

Now I'm sharing my take on it in Comfy, feel free to roast it xD

The first eye-opener was that when upscaling with an upscale model, the result always comes out at the model's native scale, so x4 with the usual 4x models. You have to scale it down afterwards. Sounds inefficient, but OK. By the way, Comfy does not show the tiling progress for GAN upscalers in the console, so Comfy users think they are not tiled like DAT, but whatever.
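This is the arithmetic the workflow saves you from. A 4x model always returns 4x, so to land on an arbitrary factor you resize its output by factor / 4. Below is a sketch with a hypothetical `plan_upscale` helper. Snapping the target to multiples of 8 is my assumption, chosen because SDXL's VAE works on latents at 1/8 of the pixel size.

```python
def plan_upscale(width, height, factor, model_scale=4, multiple=8):
    """Target size for an upscale by `factor`, plus the resize to apply after the model."""
    # Snap to a multiple of 8 so the image maps cleanly onto SDXL's 1/8-size latent
    target_w = round(width * factor / multiple) * multiple
    target_h = round(height * factor / multiple) * multiple
    # The model outputs model_scale times the input, so shrink its output by this ratio
    downscale = factor / model_scale
    return target_w, target_h, downscale


print(plan_upscale(832, 1216, 1.5))
```

For an 832x1216 image at 1.5x, this gives a 1248x1824 target and a 0.375 resize of the model output. In practice you can resize the model output straight to the snapped target size. The ratio is just what you would type into a "scale by" style node.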

I also tried to pull the generation data from the image, which already has everything (you don't really need to change anything except the seed), but with Comfy being Comfy, I ended up overcomplicating the workflow. There is also a cool feature that converts a bunch of nodes into a single one. I tried it on the generation parameters, but it broke a node inside, so here comes even more clutter.

But back to the workflow. It:

Upscales by a specified factor, with no need to calculate and change values in a bunch of nodes. By the way, this is one part where it is better than Forge.

First uses an upscale model, then does an img2img pass using Mixture of Diffusers for seamless tiling.

Incorporates a tile ControlNet. With MoD and the ControlNet together you can go as high as 0.65 denoise and the image will keep consistent details, with only rare occasions of leaking. Leaking usually happens on flat surfaces; inpaint them later or lower the denoise a bit.

Uses an awesome node to compare results. But it breaks on images with a mask, so the workflow has to load an additional image.
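For anyone wondering why the MoD tiles in the list above don't show seams, here is the idea. Each tile's prediction is weighted by a Gaussian that peaks at the tile centre, and overlapping tiles are averaged with those weights, so tile edges contribute almost nothing. The sketch below shows that blending in 1D. The real thing does it in 2D on the latent noise predictions at every denoising step, and the variance value here is an assumption.

```python
import math


def tile_weights(size, var=0.01):
    """Gaussian weights peaking at the tile centre (coordinates normalised by tile size)."""
    mid = (size - 1) / 2
    return [math.exp(-((i - mid) / size) ** 2 / (2 * var)) for i in range(size)]


def blend_tiles(length, tiles, size):
    """Weighted average of overlapping 1D tiles given as (start, values) pairs.

    Every position in range(length) must be covered by at least one tile.
    """
    acc = [0.0] * length
    norm = [0.0] * length
    w = tile_weights(size)
    for start, values in tiles:
        for i, v in enumerate(values):
            acc[start + i] += w[i] * v
            norm[start + i] += w[i]
    return [a / n for a, n in zip(acc, norm)]


# Two overlapping tiles that "disagree": the overlap transitions smoothly between them
print(blend_tiles(6, [(0, [1.0] * 4), (2, [3.0] * 4)], 4))
```

Because each tile's edges carry almost no weight, a disagreement in the overlap gets resolved by whichever tile has that spot near its centre, instead of producing a hard seam.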

All workflows are linked in a zip file. There are also links to the images for direct drag and drop: