Step-by-Step Guide Series:

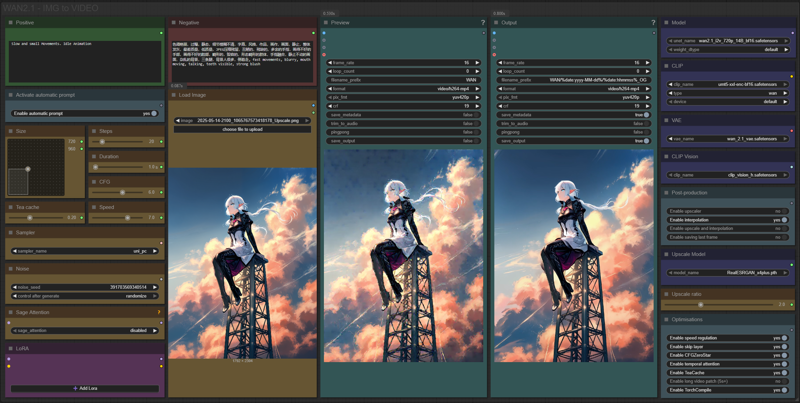

ComfyUI - IMG to VIDEO Workflow 2.X

This article accompanies this workflow: link

Foreword :

English is not my mother tongue, so I apologize for any errors. Do not hesitate to send me messages if you find any.

This guide is intended to be as simple as possible, and certain terms will be simplified.

Workflow description :

The aim of this workflow is to generate a video from an existing image, all from a single simple window.

Prerequisites :

If you are on Windows, you can use my script to download and install all the prerequisites : link

ComfyUI,

Microsoft Visual Studio Build Tools :

winget install --id Microsoft.VisualStudio.2022.BuildTools -e --source winget --override "--quiet --wait --norestart --add Microsoft.VisualStudio.Component.VC.Tools.x86.x64 --add Microsoft.VisualStudio.Component.Windows10SDK.20348"

📂Files :

For the base version

I2V Model : wan2.1_i2v_480p_14B_fp8_e4m3fn.safetensors or wan2.1_i2v_720p_14B_fp8_e4m3fn.safetensors

In models/diffusion_models

CLIP: umt5_xxl_fp8_e4m3fn_scaled.safetensors

in models/clip

For the GGUF version

>24 GB VRAM: Q8_0

16 GB VRAM: Q5_K_M

<12 GB VRAM: Q3_K_S

I2V Quant Model : wan2.1-i2v-14b-480p-QX.gguf or wan2.1-i2v-14b-720p-QX.gguf (replace QX with the quantization chosen above)

In models/diffusion_models

Quant CLIP: umt5-xxl-encoder-QX.gguf

in models/clip

CLIP-VISION: clip_vision_h.safetensors

in models/clip_vision

VAE: wan_2.1_vae.safetensors

in models/vae

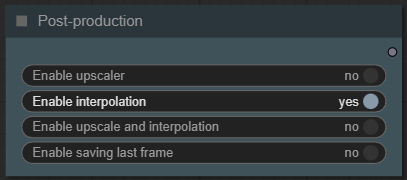

Any upscale model (deprecated) :

Realistic : RealESRGAN_x4plus.pth

Anime : RealESRGAN_x4plus_anime_6B.pth

in models/upscale_models
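To double-check the list above before launching ComfyUI, here is a minimal Python sketch that picks a GGUF quantization from your VRAM and reports which of the expected files are missing. It assumes the `models/...` folders are relative to your ComfyUI installation folder, that the text encoder uses the same quantization as the video model, and that VRAM amounts between the listed values fall back to the smaller quantization. The helper names are hypothetical and not part of the workflow.

```python
from pathlib import Path

# (minimum VRAM in GB, quantization) from the guide's table, largest first.
# Assumption: amounts between the listed values fall back to the smaller quant.
QUANT_BY_MIN_VRAM = [(24, "Q8_0"), (16, "Q5_K_M"), (0, "Q3_K_S")]


def pick_quant(vram_gb: float) -> str:
    """Return the GGUF quantization suggested for a given amount of VRAM."""
    for min_gb, quant in QUANT_BY_MIN_VRAM:
        if vram_gb >= min_gb:
            return quant
    raise ValueError("VRAM must be a non-negative number of GB")


def expected_files(variant: str, resolution: str = "480p", quant: str = "Q8_0") -> list[Path]:
    """List the model files the workflow needs, relative to the ComfyUI folder."""
    if variant == "base":
        files = [
            Path("models/diffusion_models") / f"wan2.1_i2v_{resolution}_14B_fp8_e4m3fn.safetensors",
            Path("models/clip/umt5_xxl_fp8_e4m3fn_scaled.safetensors"),
        ]
    elif variant == "gguf":
        files = [
            Path("models/diffusion_models") / f"wan2.1-i2v-14b-{resolution}-{quant}.gguf",
            # Assumption: the text encoder uses the same quantization as the model.
            Path("models/clip") / f"umt5-xxl-encoder-{quant}.gguf",
        ]
    else:
        raise ValueError("variant must be 'base' or 'gguf'")
    # The CLIP-Vision and VAE files are needed by both versions.
    files += [
        Path("models/clip_vision/clip_vision_h.safetensors"),
        Path("models/vae/wan_2.1_vae.safetensors"),
    ]
    return files


def missing_files(comfy_root: str, files: list[Path]) -> list[Path]:
    """Return the expected files that are not present under comfy_root."""
    root = Path(comfy_root)
    return [f for f in files if not (root / f).is_file()]


if __name__ == "__main__":
    quant = pick_quant(16)  # e.g. a 16 GB card
    needed = expected_files("gguf", "480p", quant)
    for path in missing_files("ComfyUI", needed):
        print("missing:", path)
```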

📦Custom Nodes :

Don't forget to close the workflow and open it again once the nodes have been installed.

Usage :

In this new version of the workflow, everything is organized by color:

Green is what you want to create, also called the prompt,

Red is what you don't want,

Yellow holds all the parameters for adjusting the video,

Pale blue marks the feature activation nodes,

Blue marks the model files used by the workflow,

Purple is for LoRA.

We will now see how to use each node:

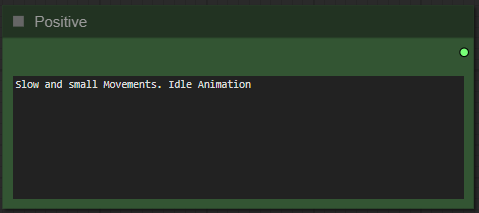

Write what you want in the “Positive” node :

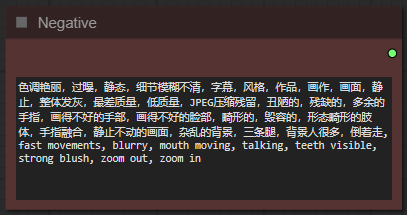

Write what you don't want in the "Negative" node :

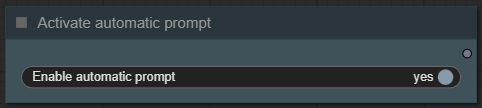

Choose whether you want automatic prompt addition :

If enabled, the workflow will analyze your image and automatically add a description of it to your prompt.

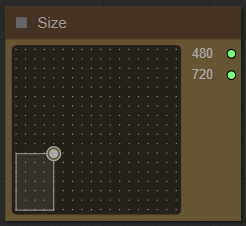

Select the image format :

The larger it is, the better the quality, but the longer the generation time and the greater the VRAM required.

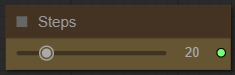

Choose a number of steps :

I recommend a value between 15 and 30. The higher the number, the better the quality, but the longer it takes to generate the video.

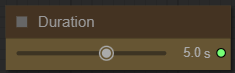

Choose the duration of the video :

The longer the video, the more time and VRAM it requires.
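To see why the image format and the duration weigh so heavily on time and VRAM, here is a rough Python estimate of how many latent tokens the Wan 2.1 model processes. It assumes the usual Wan 2.1 settings: 16 frames per second, a frame count of the form 4k + 1 (81 frames is about 5 seconds), a VAE that compresses 4× in time and 8× in each spatial direction, and 2×2 spatial patches. Whether this workflow's duration setting converts seconds to frames exactly this way is an assumption, and both helpers are hypothetical.

```python
def frames_for_seconds(seconds: float, fps: int = 16) -> int:
    """Nearest valid Wan 2.1 frame count (4k + 1) for a duration in seconds."""
    k = max(1, round(seconds * fps / 4))
    return 4 * k + 1


def latent_tokens(width: int, height: int, frames: int) -> int:
    """Tokens the transformer works on, assuming 4x temporal / 8x spatial VAE
    compression and 2x2 patches (16 pixels per token along each side)."""
    latent_frames = (frames - 1) // 4 + 1
    return latent_frames * (height // 16) * (width // 16)


if __name__ == "__main__":
    frames = frames_for_seconds(5)  # 81
    small = latent_tokens(832, 480, frames)
    large = latent_tokens(1280, 720, frames)
    print(frames, small, large, f"{large / small:.2f}x")
```

Because attention compares every token with every other token, the cost grows faster than the token count itself, so a longer or larger video costs proportionally even more time than the ratio printed above suggests.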

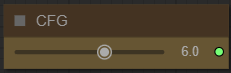

Choose the guidance level :

I recommend starting at 6. The lower the number, the more freedom you leave the model. The higher the number, the more strictly the video follows what you asked for.
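The guidance value is the classifier-free guidance (CFG) scale. At each step the model makes two predictions, one with your positive prompt and one with the negative prompt, and the scale sets how far the result is pushed from the second toward (and beyond) the first. A minimal Python sketch of that standard formula, with plain lists standing in for the model's tensors:

```python
def cfg_combine(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Classifier-free guidance: uncond + scale * (cond - uncond), element-wise."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# scale = 1.0 returns the positive-prompt prediction unchanged (the negative
# prompt has no effect); larger scales exaggerate the difference between them.
print(cfg_combine([0.0, 1.0], [1.0, 1.0], 1.0))  # [1.0, 1.0]
print(cfg_combine([0.0, 1.0], [1.0, 1.0], 6.0))  # [6.0, 1.0]
```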

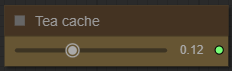

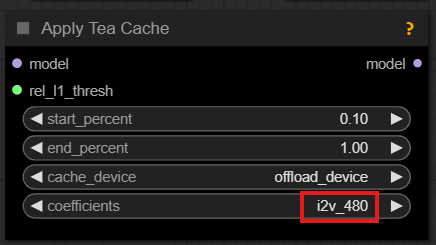

Choose a TeaCache coefficient :

This saves a lot of generation time. The higher the coefficient, the faster the generation, but the greater the risk of quality loss.

Recommended settings (from most conservative to fastest) :

For 480p : 0.13 | 0.19 | 0.26

For 720p : 0.18 | 0.20 | 0.30
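TeaCache works across the sampling steps: it estimates how much the model's input changes from one step to the next, adds those changes up, and as long as the total stays below the coefficient it reuses the previous output instead of running the model again. The Python sketch below is a simplified illustration of that decision only. The change values are made up, and the real implementation also rescales them with a fitted polynomial and always computes certain steps.

```python
def teacache_schedule(step_changes: list[float], threshold: float) -> list[bool]:
    """For each sampling step, return True if the model is run and False if the
    cached output is reused. step_changes[i] is the estimated relative change of
    the model input between step i-1 and step i; step_changes[0] is ignored."""
    schedule = []
    accumulated = 0.0
    for i, change in enumerate(step_changes):
        if i == 0:
            run = True  # nothing is cached yet
        else:
            accumulated += change
            run = accumulated >= threshold
        if run:
            accumulated = 0.0  # fresh output: start accumulating again
        schedule.append(run)
    return schedule


changes = [0.0, 0.05, 0.05, 0.05, 0.2]  # illustrative values, not measured
print(teacache_schedule(changes, 0.13))  # [True, False, False, True, True]
print(teacache_schedule(changes, 0.26))  # [True, False, False, False, True]
```

A higher coefficient skips more model calls, which is where both the speed-up and the risk of quality loss come from.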

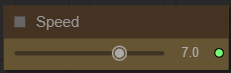

Choose video speed :

This allows you to slow down or speed up the overall animation. The default speed is 8.

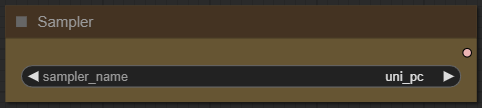

Choose a sampler and a scheduler :

If you don't know what these are, leave them at their defaults.

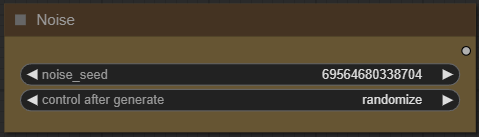

Define a seed or let ComfyUI generate one:

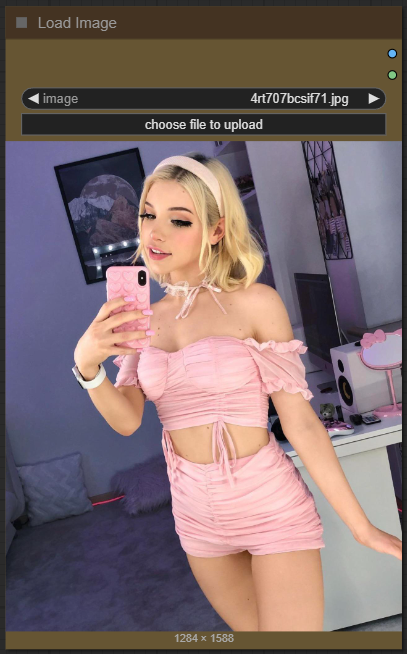

Import your base image :

Keep in mind that it will be scaled down or up to the format you have chosen. An image whose resolution is too different from that format can lead to poor results.
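If you want to check an image before importing it, here is a hypothetical Python helper (not part of the workflow) that compares its size with the chosen format. The warning thresholds are arbitrary assumptions, and whether a mismatched aspect ratio ends up stretched or cropped depends on how the workflow's resize node is configured.

```python
def check_input_image(src_w: int, src_h: int, dst_w: int, dst_h: int,
                      max_aspect_change: float = 0.10,
                      max_upscale: float = 2.0) -> list[str]:
    """Return warnings about resizing a src_w x src_h image to dst_w x dst_h.
    The default thresholds are arbitrary, illustrative values."""
    warnings = []
    # Relative difference between the two aspect ratios (0.0 = identical).
    aspect_change = abs((src_w / src_h) / (dst_w / dst_h) - 1.0)
    if aspect_change > max_aspect_change:
        warnings.append(f"aspect ratio differs by {aspect_change:.0%}: "
                        "the image will be stretched or cropped")
    # Largest enlargement factor along either axis.
    upscale = max(dst_w / src_w, dst_h / src_h)
    if upscale > max_upscale:
        warnings.append(f"image will be enlarged {upscale:.1f}x: expect soft details")
    return warnings


print(check_input_image(1024, 1024, 832, 480))  # square image into 832x480
print(check_input_image(320, 180, 1280, 720))   # small 16:9 image into 720p
```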

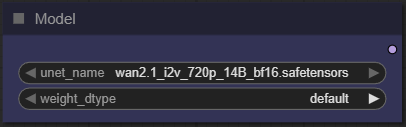

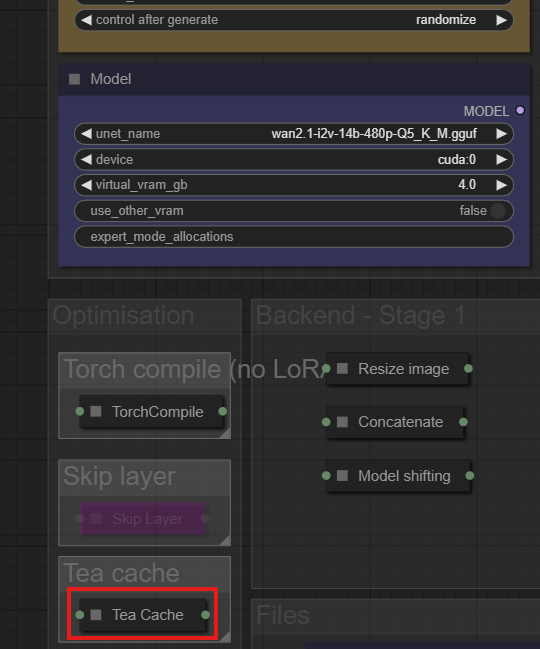

Select your model :

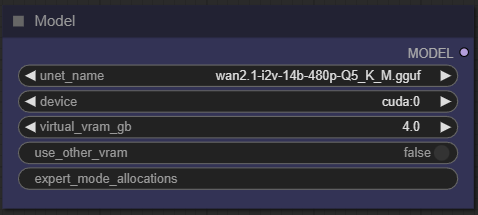

Select your model and set the virtual VRAM (low-VRAM version only) :

Here, you can switch between Q8 and Q4 depending on how much VRAM you have. Higher values give better quality but are slower.

The virtual VRAM setting allows you to offload part of the model to your system RAM instead of keeping it in VRAM. This lets you load larger models or improve stability at the cost of a very slight performance penalty.

The right amount depends a lot on your available VRAM. The easiest way is to increase this setting gradually until your VRAM is no longer completely consumed during video generation (if 100% of it is in use, you are probably in an overflow situation).
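If you prefer a starting value over pure trial and error, the rough Python estimate below offloads whatever part of the model file does not fit next to a reserve for everything else the generation keeps in VRAM. The 4 GB reserve and the helpers themselves are hypothetical assumptions, not values from the workflow, so treat the result only as a first guess to refine with the method described above.

```python
import os


def suggest_virtual_vram_gb(model_size_gb: float, vram_gb: float,
                            reserve_gb: float = 4.0) -> float:
    """Rough first guess for the virtual VRAM setting, in GB.
    reserve_gb is an assumed allowance for activations, the VAE, etc."""
    overflow = model_size_gb + reserve_gb - vram_gb
    return round(max(0.0, overflow), 1)


def file_size_gb(path: str) -> float:
    """Size of a model file in GB (1 GB = 1024**3 bytes)."""
    return os.path.getsize(path) / 1024**3
```

For example, a hypothetical 15 GB model file on a 12 GB card gives `suggest_virtual_vram_gb(15, 12)`, that is 7.0 GB, as a first value to try.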

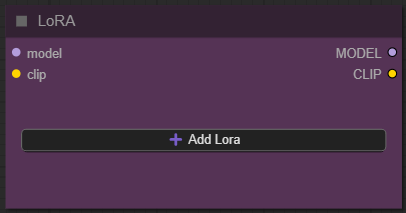

Set how many LoRAs you want to use, and configure them :

If you don't know what a LoRA is, just don't activate any.

Now you're ready to create your video.

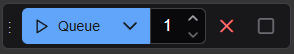

Just click on the “Queue” button to start:

A preview will be displayed here, then the final video :

But aren't there still plenty of menus left? Yes indeed, so here is an explanation of the additional options menu:

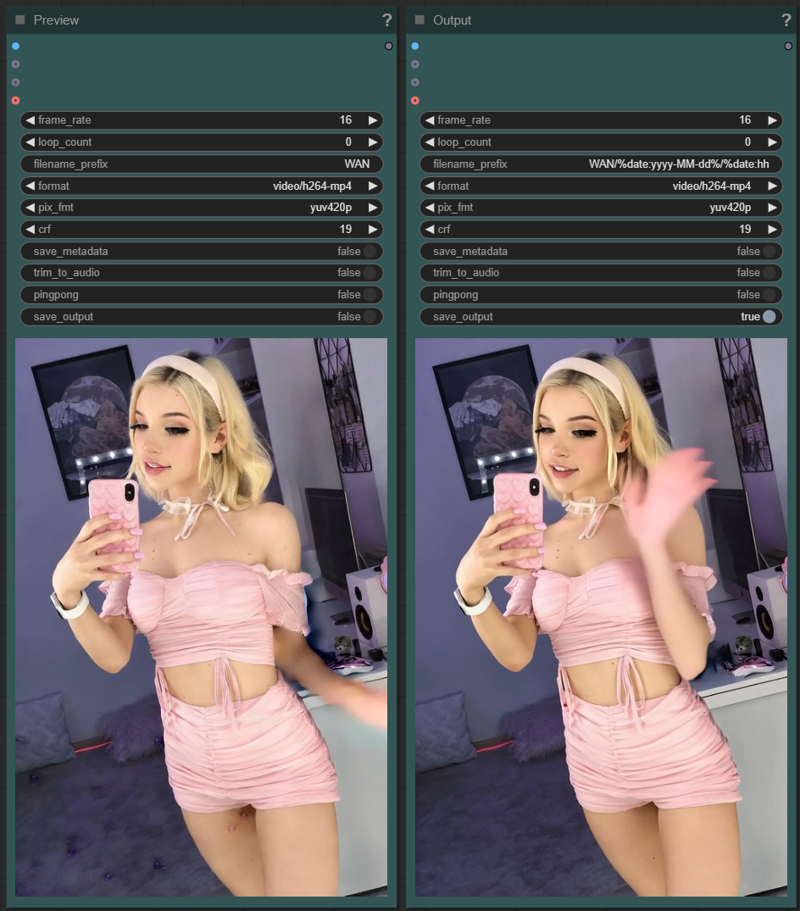

In this menu, you can enable processing of your video once it is finished:

an upscaler to increase the resolution,

interpolation to increase fluidity,

both of the above at the same time,

saving the last frame (useful for creating a sequence, for example).

Here you can select your upscale model and then the resolution increase ratio.
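As a rough guide to what these options produce, the Python sketch below computes the output size for a given upscale ratio, and the frame count and frame rate after interpolation. It assumes the final size is the original size multiplied by the ratio (whatever the upscale model's own factor, ×4 for the RealESRGAN models listed earlier), that an interpolation multiplier m inserts m − 1 new frames between each pair of original frames, and that the frame rate is multiplied by m so the duration stays the same. Check these assumptions against your own output.

```python
def upscaled_size(width: int, height: int, ratio: float) -> tuple[int, int]:
    """Final resolution after upscaling by `ratio` (assumed behaviour)."""
    return round(width * ratio), round(height * ratio)


def interpolated(frames: int, fps: float, multiplier: int) -> tuple[int, float]:
    """Frame count and frame rate after interpolation, assuming multiplier - 1
    new frames between every pair of original frames."""
    new_frames = (frames - 1) * multiplier + 1
    return new_frames, fps * multiplier


print(upscaled_size(832, 480, 1.5))  # (1248, 720)
print(interpolated(81, 16, 2))       # (161, 32)
```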

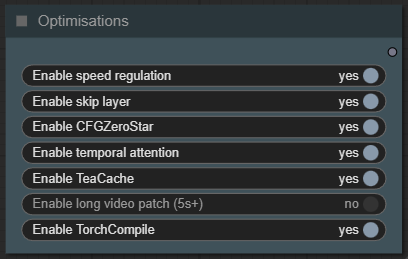

This last node allows you to activate different optimizations:

Speed regulation: makes the workflow take the "speed" slider into account to modulate the overall speed of the video.

Skip Layer: Skips certain layers during guidance, improving structure and motion consistency in animations.

- Can reduce jitter and overprocessing of fine details

NOTE: Can degrade quality if used with low guidance or complex prompts

CFGZeroStar: Improves the overall quality of low-CFG generations by blending outputs to reduce artifacts.

- Helps reduce flickering and hallucinations.

NOTE: May reduce contrast/detail if overused

Temporal Attention: Lets the model attend to multiple frames at once, rather than treating each frame independently.

- Improves consistency in moving objects (hair, limbs, etc.)

NOTE: Uses more VRAM; may slow down generation

TeaCache: Smart caching that skips model evaluations on sampling steps that change little from the previous one, reusing the cached result to save compute.

- Speeds up generation significantly

NOTE: High threshold may cause “frozen” frames

Long video patch: This option uses RifleXRoPE to reduce glitches, such as repeated motion, in videos longer than 5 seconds. The developer recommends videos of up to 8 seconds with WAN and this module.

Torch Compile: Optimizes your model into a faster, more efficient version.

- Significantly speeds up processing

- Reduces memory usage and improves performance

NOTE: The first run will be slower due to compilation/optimization
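For the curious, CFGZeroStar is based on the CFG-Zero* method. In that method's formulation, before applying the usual guidance, the negative-prompt prediction is rescaled by the factor that best matches it to the positive one (its projection coefficient), which tempers the guidance. A minimal Python sketch with plain lists standing in for tensors; the method also describes zeroing the first step(s), shown here as an optional parameter that is off by default, since whether this workflow's node does so is an assumption.

```python
def cfg_zero_star(uncond: list[float], cond: list[float], scale: float,
                  step: int = 0, zero_init_steps: int = 0) -> list[float]:
    """CFG-Zero* guidance on flattened predictions (sketch).
    alpha is the projection coefficient of cond onto uncond."""
    if step < zero_init_steps:
        return [0.0] * len(cond)  # optional "zero-init" of the first steps
    dot = sum(c * u for c, u in zip(cond, uncond))
    norm_sq = sum(u * u for u in uncond) + 1e-8  # avoid division by zero
    alpha = dot / norm_sq
    # Same as standard CFG, but with the negative prediction scaled by alpha.
    return [alpha * u + scale * (c - alpha * u) for u, c in zip(uncond, cond)]


uncond, cond = [1.0, 0.0], [2.0, 0.0]
print(cfg_zero_star(uncond, cond, 6.0))  # close to [2.0, 0.0]
# Standard CFG would give 1.0 + 6.0 * (2.0 - 1.0) = 7.0 for the first value.
```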

Some additional information:

Organization of output files:

All generated files are stored in comfyui/output/WAN/YYYY-MM-DD.

Depending on the options chosen, you will find:

"hhmmss_OG_XXXXX" the original video,

"hhmmss_IN_XXXXX" the interpolated video,

"hhmmss_UP_XXXXX" the upscaled video,

"hhmmss_LF_XXXXX" the last frame.

Warning: if you are using a 720p model, you will also need to change the settings of the "Apply Tea Cache" node.
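To find or sort the generated files with a script, the output naming scheme described above can be rebuilt or parsed. A minimal Python sketch, assuming XXXXX is a five-digit counter and using an `.mp4` extension purely for illustration; the actual extensions depend on the save nodes in the workflow, and the helper names are hypothetical.

```python
from datetime import datetime
from pathlib import Path

# File-name codes used by the workflow, as listed in this guide.
KIND_BY_CODE = {"OG": "original", "IN": "interpolated", "UP": "upscaled", "LF": "last frame"}
CODE_BY_KIND = {kind: code for code, kind in KIND_BY_CODE.items()}


def output_path(comfy_root: str, kind: str, counter: int, when: datetime,
                ext: str = ".mp4") -> Path:
    """Build output/WAN/YYYY-MM-DD/hhmmss_<CODE>_<counter><ext> (assumed layout)."""
    folder = Path(comfy_root) / "output" / "WAN" / when.strftime("%Y-%m-%d")
    name = f"{when.strftime('%H%M%S')}_{CODE_BY_KIND[kind]}_{counter:05d}{ext}"
    return folder / name


def kind_of(filename: str) -> str:
    """Return the kind of output from a file name such as 140509_UP_00003.mp4."""
    return KIND_BY_CODE[filename.split("_")[1]]


print(output_path("ComfyUI", "upscaled", 3, datetime(2025, 4, 2, 14, 5, 9)))
print(kind_of("140509_IN_00001.mp4"))  # interpolated
```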

This guide is now complete. If you have any questions or suggestions, don't hesitate to post a comment.