🌙 HiDream LoRA Training Guide

"Don't let your dreams be dreams."

— Shia LaBeouf, modern philosopher

Let’s make those dreams real. At least in HiDream.

This guide assumes you're on Windows 10 or higher, with a 24GB VRAM GPU and 64GB of RAM. That’s my personal setup.

Everything should work with less VRAM and on Linux too, but you’ll need to tweak some parameters accordingly. I’ll drop hints where I can.

This is a follow-along in which we will create a LoRA together. All the files you need (training data, helper scripts) are in the attachments of this article.

🧰 Requirements

VSCode

The editor for people who would get brain bleeds from Vim.

Get it here and install it like any other app:

👉 https://code.visualstudio.com/

Git

Git lets you download (and upload) code from GitHub.

I'm not sure if Git comes bundled with VSCode nowadays, so just to be safe:

👉 https://git-scm.com/

WSL

AI researchers aren't paid to make beautiful code that runs everywhere, they're paid to produce results fast. That’s why many cool projects and libraries are Linux-only and look like mom’s spaghetti.

WSL is Microsoft's way to run Linux inside Windows.

Follow this guide to install it:

👉 Install WSL – Microsoft Docs

Quick version:

Open a terminal with admin rights and run:

wsl --install

You can also use the standalone installer:

👉 WSL GitHub Release Page

Remember the username and password you set up!

Whenever we need your WSL username, I'll write it as <wsl_username>.

CUDA 12.8

CUDA lets you use your GPU for more than just gaming.

Download it from:

👉 https://developer.nvidia.com/cuda-downloads

Also make sure you have the latest GPU drivers installed, so that your WSL system can access your GPU as well.

Hugging Face Account

We’ll need the LLM llama-3.1 for training HiDream. Unfortunately, downloading it requires a Hugging Face account.

Create an account.

Visit this page and click "Accept" to unlock access:

👉 LLaMA 3.1 8B Instruct

If you made it this far: nice work.

If not, let ChatGPT (or any other LLM) guide you through the steps.

Getting diffusion-pipe and the models to train

diffusion-pipe

We are using diffusion-pipe for training because it's currently (April 2025) the only training framework that lets you train HiDream LoRAs locally.

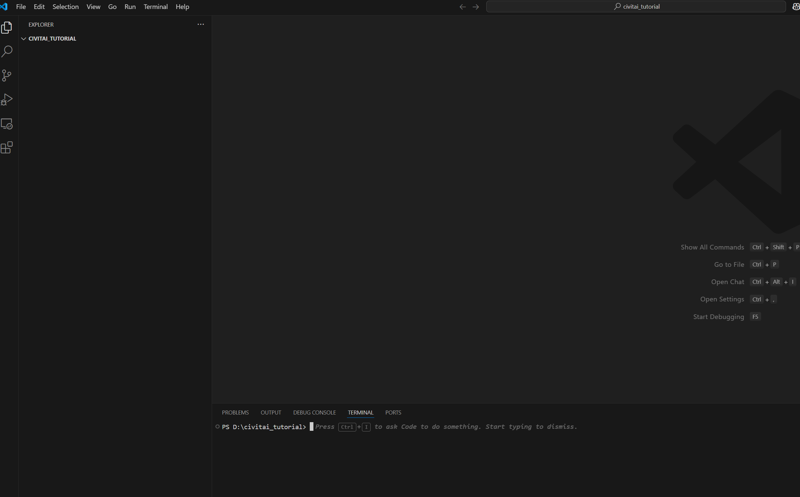

Create an empty folder anywhere on your system and open it inside VSCode

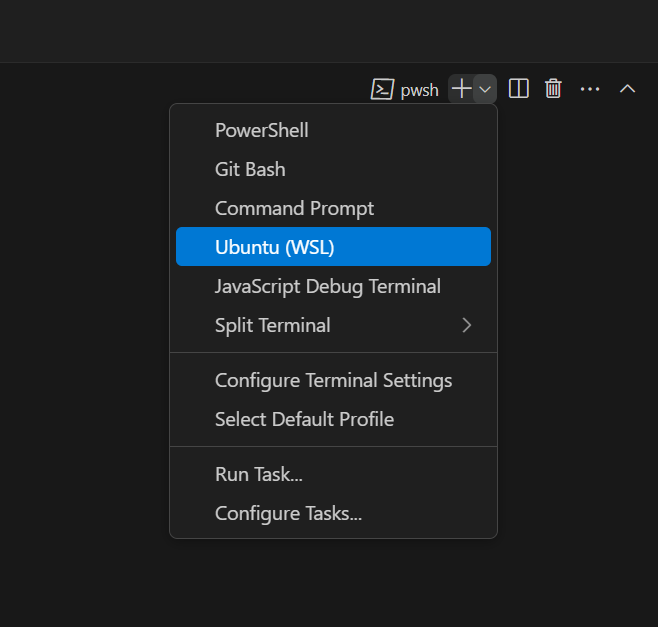

In VSCode, open up a WSL terminal so we can start with some serious terminal shenanigans. Be sure to be INSIDE WSL!

# Copy each line that doesn't start with '#' separately into the WSL terminal

# Update your wsl environment and install some needed tools

sudo apt update

sudo apt full-upgrade -y

sudo apt-get install -y git-lfs wget python3-dev python3-venv build-essential unzip

# Copy the whole line into the VSCode terminal to clone diffusion-pipe into the current folder (note the trailing '.')

git clone --recurse-submodules https://github.com/tdrussell/diffusion-pipe .

# Create a Python virtual environment and activate it (Ubuntu only ships "python3", not "python")

python3 -m venv venv

source venv/bin/activate

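# Install PyTorch (the cu126 wheels bundle their own CUDA runtime, so they run fine on a driver that supports CUDA 12.8)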

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu126

# Install huggingface downloader

pip install -U "huggingface_hub[cli]"

# Install diffusion-pipe requirements

pip install -r requirements.txt

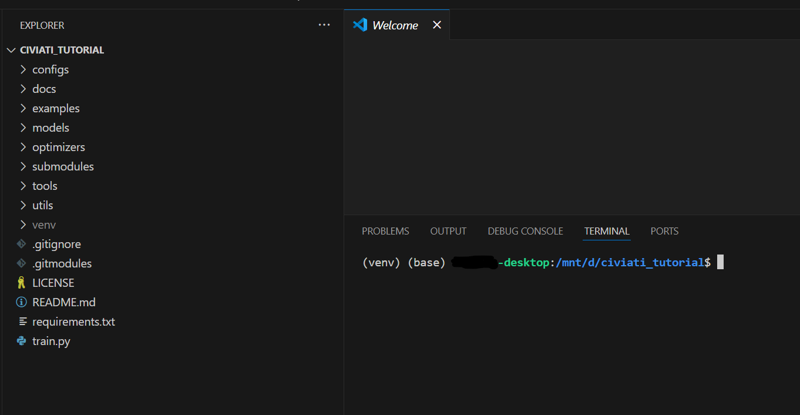

If everything went OK, it should look like this: the contents of diffusion-pipe plus a venv folder, and the terminal prompt starts with (venv).
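To double-check that PyTorch inside the venv can actually see your GPU through WSL, you can run a small Python check like the sketch below with the venv active. The file name check_gpu.py is hypothetical. The script only uses standard torch.cuda calls and reports cuda_available=False instead of crashing if torch is missing.

```python
# check_gpu.py (hypothetical helper name): verify that PyTorch in the venv sees the GPU.
def gpu_report() -> dict:
    """Collect basic CUDA info; reports cuda_available=False if torch is not installed."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False, "cuda_available": False}

    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # CUDA runtime version the wheel was built with (e.g. "12.6" for cu126 wheels)
        "cuda_build": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
    }
    if report["cuda_available"]:
        props = torch.cuda.get_device_properties(0)
        report["device_name"] = props.name
        # total_memory is reported in bytes; convert to GiB
        report["vram_gib"] = round(props.total_memory / 1024**3, 1)
    return report


if __name__ == "__main__":
    for key, value in gpu_report().items():
        print(f"{key}: {value}")
```

If cuda_available comes back False, revisit the GPU driver step before going further. Training will not use the GPU otherwise.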

---------------------------------------------

The models

Let's continue by downloading the models! They'll need 74GB of disk space.

Open Windows Explorer and type the following into the address bar:

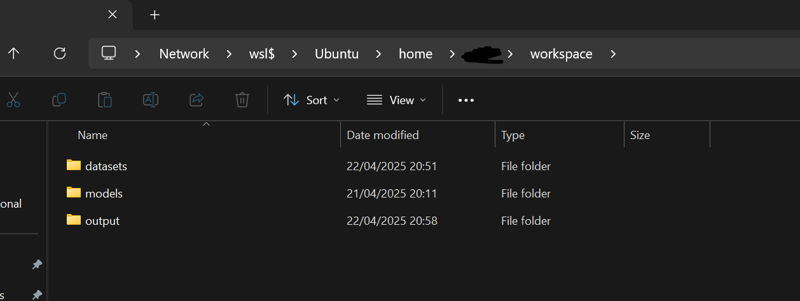

\\wsl$\Ubuntu\home\<wsl_username>

You are now in the home directory of your WSL Linux distro (replace Ubuntu with your distro's name if it differs). Create a workspace folder, and inside it a datasets, an output and a models folder.
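If you prefer doing this from the WSL terminal, here is a minimal sketch that creates the same workspace layout. It also warns if the drive doesn't have the 74GB the models need. The script name make_workspace.py is hypothetical, and the script assumes it runs inside WSL, where Path.home() is /home/<wsl_username>.

```python
# make_workspace.py (hypothetical helper): create the workspace layout used in this guide
# and warn if there isn't enough free disk space for the model download.
import shutil
from pathlib import Path

REQUIRED_GB = 74  # download size stated in this guide for HiDream-I1-Full + Llama 3.1


def make_workspace(home: Path) -> Path:
    """Create <home>/workspace/{datasets,output,models} and return the workspace path."""
    workspace = home / "workspace"
    for name in ("datasets", "output", "models"):
        # parents=True also creates "workspace"; exist_ok makes reruns harmless
        (workspace / name).mkdir(parents=True, exist_ok=True)
    return workspace


def free_gb(path: Path) -> float:
    """Free space (in GB, 10^9 bytes) on the filesystem that contains `path`."""
    return shutil.disk_usage(path).free / 1e9


if __name__ == "__main__":
    ws = make_workspace(Path.home())  # inside WSL: /home/<wsl_username>
    available = free_gb(ws)
    print(f"Created {ws}, {available:.1f} GB free")
    if available < REQUIRED_GB:
        print(f"Warning: the models need about {REQUIRED_GB}GB")
```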

Back in VSCode, type the following into the terminal:

# Log in to Hugging Face (follow the instructions after executing the command)

huggingface-cli login

# Download hidream

huggingface-cli download HiDream-ai/HiDream-I1-Full --local-dir /home/<wsl_username>/workspace/models/hidream-full

# Download llama 3.1

huggingface-cli download meta-llama/Llama-3.1-8B-Instruct --local-dir /home/<wsl_username>/workspace/models/llama-3.1

Once both downloads are done, the models directory should contain all the models needed for training.
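An interrupted download can leave a half-empty folder behind, so a quick sanity check can save you a confusing training crash later. The sketch below is hypothetical. It assumes the folder names used above, plus the usual diffusers layout (model_index.json at the root) and transformers layout (config.json, tokenizer.json). It checks for those marker files and for at least one .safetensors weight file.

```python
# check_models.py (hypothetical helper): sanity-check that both downloads finished.
from pathlib import Path

# Marker files are an assumption based on the usual diffusers / transformers repo layout.
EXPECTED = {
    "hidream-full": ["model_index.json"],
    "llama-3.1": ["config.json", "tokenizer.json"],
}


def missing_files(models_dir: Path) -> list[str]:
    """Return the folders, marker files or weights that are missing under models_dir."""
    missing = []
    for folder, markers in EXPECTED.items():
        root = models_dir / folder
        if not root.is_dir():
            missing.append(f"{folder}/")
            continue
        for marker in markers:
            if not (root / marker).is_file():
                missing.append(f"{folder}/{marker}")
        # an interrupted download often leaves the folder without any weights
        if not any(root.rglob("*.safetensors")):
            missing.append(f"{folder}/*.safetensors")
    return missing


if __name__ == "__main__":
    problems = missing_files(Path.home() / "workspace" / "models")
    print("All models present" if not problems else f"Missing: {problems}")
```

If something is reported missing, rerunning the same huggingface-cli download command is usually enough.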

Setting up the data

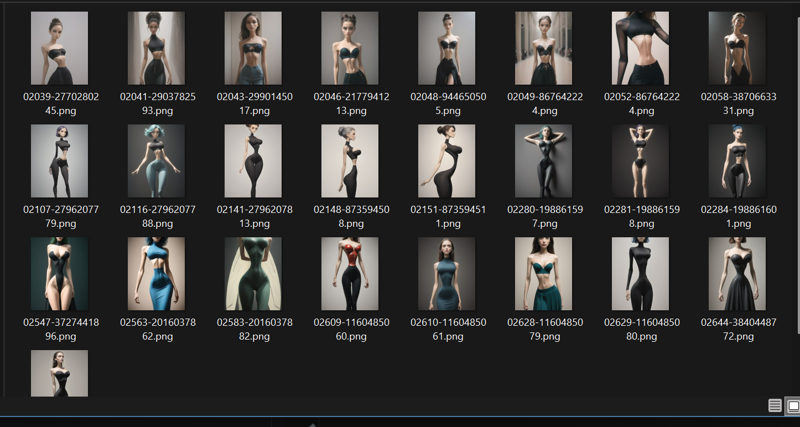

Get your images ready! Or, if you want to follow along, download microwaist_small.zip attached to this article.

It's the worst dataset in existence, but it will prove my point that you can train HiDream on shit and still get something usable out of it.

It's a bunch of girls generated with "small waist" in the prompt using some Pony models.

And as you can probably imagine we will train a "small waist" LoRA.

Unzip it and copy the folder microwaist_small into the datasets folder of your workspace.

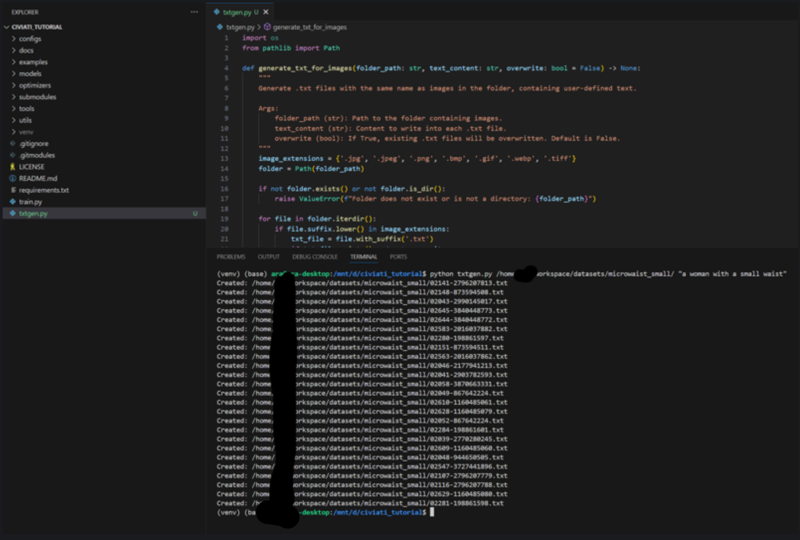

As you probably know, you need to caption your images. For that, download txtgen.py from the attachments and put it into your diffusion-pipe directory.

Then you can batch-create a text file for every image with:

python txtgen.py /home/<wsl_username>/workspace/datasets/microwaist_small/ "a woman with a small waist"
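If you'd rather see (or tweak) what such a caption script does, here is a hypothetical sketch in the same spirit. It is not the attached txtgen.py. It assumes the trainer reads each image's caption from a .txt file with the same base name next to the image, and that a single caption is used for every image, as in the command above. By default it leaves existing .txt files alone so hand-written captions survive.

```python
# Hypothetical caption writer in the spirit of txtgen.py (not the attached script itself).
# Writes <image_name>.txt containing the same caption next to every image in a folder.
import sys
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def write_captions(folder: Path, caption: str, overwrite: bool = False) -> int:
    """Create one caption file per image; returns how many files were written."""
    written = 0
    for image in sorted(folder.iterdir()):
        if image.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # skip non-image files (including existing .txt captions)
        txt = image.with_suffix(".txt")
        if txt.exists() and not overwrite:
            continue  # keep hand-written captions unless overwrite=True
        txt.write_text(caption, encoding="utf-8")
        written += 1
    return written


if __name__ == "__main__":
    if len(sys.argv) != 3:
        sys.exit("usage: python caption_sketch.py <image_folder> <caption>")
    count = write_captions(Path(sys.argv[1]), sys.argv[2])
    print(f"wrote {count} caption files")
```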

Of course, feel free to use whatever images you want.

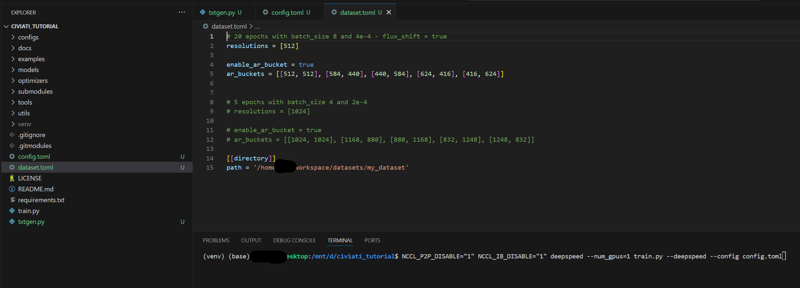

Setting up the training configuration

For reasons nobody knows, Civitai's attachment upload checks whether a file is a text file in the worst way possible, which is why it blocks *.toml files.

So you need to do some copy-pasting.

Create a config.toml and a dataset.toml inside your diffusion-pipe folder and copy the following content into them:

config.toml

# Output path for training runs. Each training run makes a new directory in here.

output_dir = '/home/<wsl_username>/workspace/output/'

dataset = 'dataset.toml'

epochs = 1000

micro_batch_size_per_gpu = 4

pipeline_stages = 1

gradient_accumulation_steps = 1

gradient_clipping = 1.0

warmup_steps = 25

blocks_to_swap = 20

eval_every_n_epochs = 1

eval_before_first_step = true

eval_micro_batch_size_per_gpu = 1

eval_gradient_accumulation_steps = 1

save_every_n_epochs = 10

#checkpoint_every_n_epochs = 1

checkpoint_every_n_minutes = 15

activation_checkpointing = true

partition_method = 'parameters'

save_dtype = 'bfloat16'

caching_batch_size = 1

steps_per_print = 1

video_clip_mode = 'single_beginning'

[model]

type = 'hidream'

diffusers_path = '/home/<wsl_username>/workspace/models/hidream-full'

llama3_path = '/home/<wsl_username>/workspace/models/llama-3.1'

llama3_4bit = true

dtype = 'bfloat16'

transformer_dtype = 'nf4'

max_llama3_sequence_length = 128

flux_shift = true

[adapter]

type = 'lora'

rank = 32

dtype = 'bfloat16'

#init_from_existing = '/mnt/d/Diffusion/output/20250418_19-01-50/epoch28'

[optimizer]

type = 'adamw_optimi'

lr = 4e-4

betas = [0.9, 0.99]

weight_decay = 0.01

eps = 1e-8

dataset.toml

# 20 epochs with batch_size 8 and 4e-4 - flux_shift = true

resolutions = [512]

enable_ar_bucket = true

ar_buckets = [[512, 512], [584, 440], [440, 584], [624, 416], [416, 624]]

# 5 epochs with batch_size 4 and 2e-4

# resolutions = [1024]

# enable_ar_bucket = true

# ar_buckets = [[1024, 1024], [1168, 880], [880, 1168], [832, 1248], [1248, 832]]

[[directory]]

path = '/home/<wsl_username>/workspace/datasets/microwaist_small'

If you are using other paths, images or anything else, change these properties accordingly.
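The ar_buckets list lets images with different shapes train at a matching aspect ratio instead of all being squashed to 512x512. The sketch below is a simplified model of that idea and not diffusion-pipe's actual bucketing code. It assumes each image goes to the bucket whose width/height ratio is closest on a log scale, so that 2:1 and 1:2 count as equally far from square.

```python
# Simplified model of aspect-ratio bucketing (an assumption, not diffusion-pipe's code):
# each image goes to the bucket whose width/height ratio is closest on a log scale.
import math

# Buckets copied from the 512 setup in dataset.toml above
AR_BUCKETS = [(512, 512), (584, 440), (440, 584), (624, 416), (416, 624)]


def nearest_bucket(width: int, height: int,
                   buckets: list[tuple[int, int]] = AR_BUCKETS) -> tuple[int, int]:
    """Return the (width, height) bucket whose aspect ratio best matches the image."""
    image_ar = math.log(width / height)
    return min(buckets, key=lambda b: abs(math.log(b[0] / b[1]) - image_ar))


if __name__ == "__main__":
    for size in [(1024, 1024), (832, 1216), (1216, 832), (1000, 750)]:
        print(size, "->", nearest_bucket(*size))
```

Note that all five buckets hold roughly the same number of pixels (about 0.26 megapixels each), so VRAM use stays similar no matter which bucket an image lands in.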

⚠️ If you have less than 24GB of VRAM, set micro_batch_size_per_gpu to 1 and gradient_accumulation_steps to 4 instead. This keeps the effective batch size at 4 while processing only one image at a time. Also try increasing blocks_to_swap.
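Here is the arithmetic behind that tip as a small sketch. The effective batch size is micro_batch_size_per_gpu × gradient_accumulation_steps × number of GPUs. The steps-per-epoch helper is only an approximation, because aspect-ratio buckets are batched separately and can leave partial batches. The 40-image dataset size is hypothetical, not the real size of microwaist_small.

```python
# Back-of-the-envelope batch math for config.toml (illustrative only).
import math


def effective_batch(micro_batch_size_per_gpu: int,
                    gradient_accumulation_steps: int,
                    num_gpus: int = 1) -> int:
    """Number of images that contribute to one optimizer step."""
    return micro_batch_size_per_gpu * gradient_accumulation_steps * num_gpus


def approx_steps_per_epoch(num_images: int, batch: int) -> int:
    """Rough optimizer steps per epoch; real counts can differ because
    aspect-ratio buckets are batched separately."""
    return math.ceil(num_images / batch)


if __name__ == "__main__":
    num_images = 40  # hypothetical dataset size
    for name, micro, accum in [("24GB", 4, 1), ("<24GB", 1, 4)]:
        batch = effective_batch(micro, accum)
        print(f"{name}: effective batch {batch}, "
              f"~{approx_steps_per_epoch(num_images, batch)} steps per epoch")
```

Both settings give the same effective batch of 4, so the learning rate can stay the same. The low-VRAM variant just runs four forward/backward passes per optimizer step, which makes it slower.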

Start the training!

All that's left to do is start the training. Run the following:

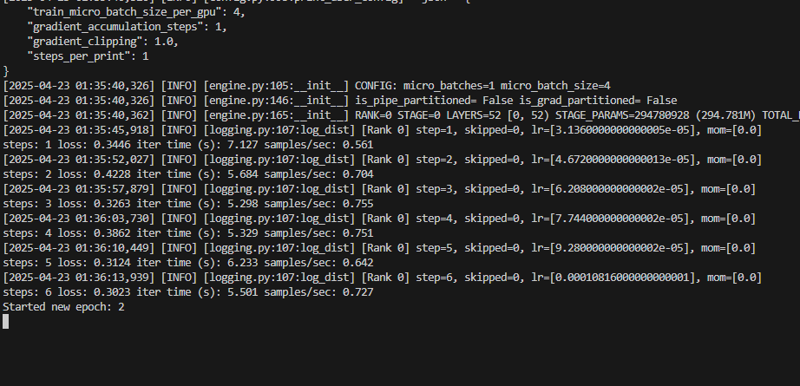

NCCL_P2P_DISABLE="1" NCCL_IB_DISABLE="1" deepspeed --num_gpus=1 train.py --deepspeed --config config.toml

That's it! The training should boot up. Every 10 epochs (1 epoch = one pass through all training images) a LoRA gets saved, and every 15 minutes a backup is written.

Let the training run for 30 minutes.

Trying your LoRA out

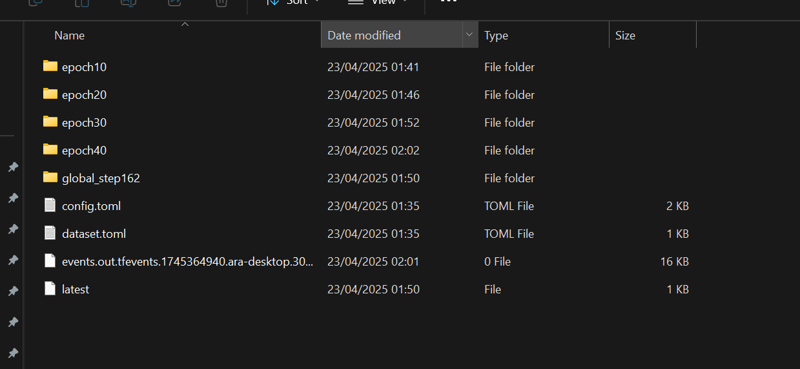

After a while, the output directory should look like this.

The global_step folders are backup folders: they contain no LoRA, only the state information needed to resume training from that point.

Your LoRAs are in the epoch folders.

Go into the epoch40 folder and copy adapter_model.safetensors into your ComfyUI models/loras directory.
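If you end up copying LoRAs a lot, a small script can do it for you. The sketch below is hypothetical. It assumes each training run gets its own timestamp-named folder (like 20250418_19-01-50 in the commented init_from_existing path), so the last one in sorted order is the newest. It also assumes the Windows ComfyUI folder is reachable from WSL under /mnt/c, which is a placeholder path you must adjust. Keep in mind that the newest epoch isn't necessarily the best one.

```python
# Hypothetical helper: find the newest epochN folder of the latest run and copy its LoRA
# to ComfyUI under a descriptive name. The ComfyUI path below is a placeholder; adjust it.
import re
import shutil
from pathlib import Path


def latest_epoch_dir(output_dir: Path) -> Path:
    """Return the highest-numbered epoch folder of the most recent training run."""
    # Timestamp-named run folders sort chronologically as plain strings
    runs = sorted(p for p in output_dir.iterdir() if p.is_dir())
    if not runs:
        raise FileNotFoundError(f"no training runs in {output_dir}")
    # Only epochN folders hold a LoRA; global_step folders are skipped
    epochs = [p for p in runs[-1].iterdir()
              if p.is_dir() and re.fullmatch(r"epoch\d+", p.name)]
    if not epochs:
        raise FileNotFoundError(f"no epoch folders in {runs[-1]}")
    # Compare numerically: as strings, "epoch100" would sort before "epoch20"
    return max(epochs, key=lambda p: int(p.name[len("epoch"):]))


def copy_lora(epoch_dir: Path, lora_dir: Path, name: str) -> Path:
    """Copy adapter_model.safetensors to lora_dir as <name>_<epochN>.safetensors."""
    target = lora_dir / f"{name}_{epoch_dir.name}.safetensors"
    shutil.copy2(epoch_dir / "adapter_model.safetensors", target)
    return target


if __name__ == "__main__":
    epoch = latest_epoch_dir(Path.home() / "workspace" / "output")
    comfy_loras = Path("/mnt/c/ComfyUI/models/loras")  # placeholder Windows path seen from WSL
    print("copied to", copy_lora(epoch, comfy_loras, "microwaist"))
```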

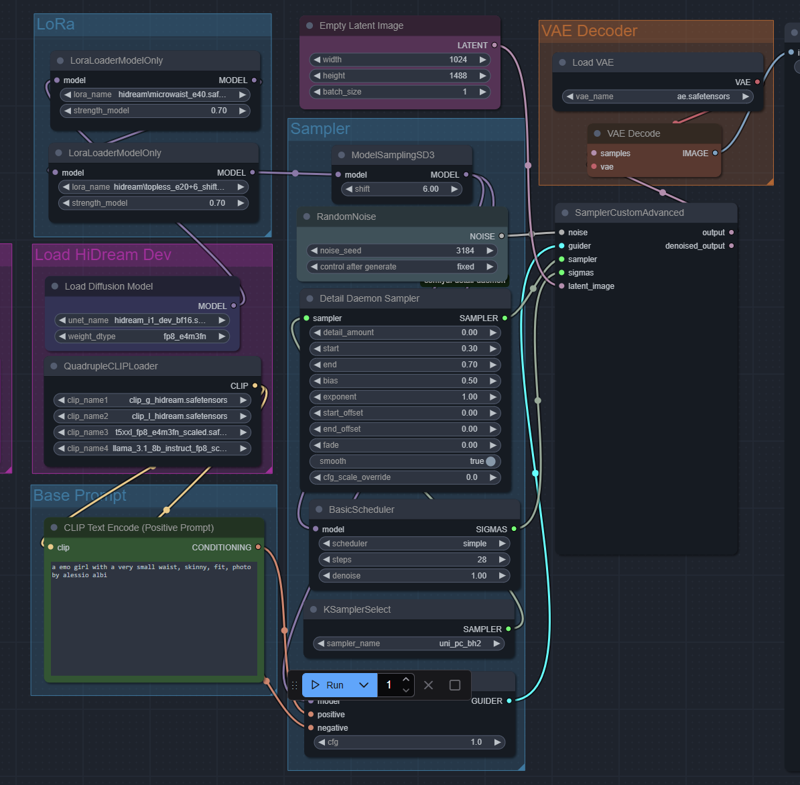

If you need a quick workflow to test it out:

https://civitai.com/articles/13868/pyros-hidream-dev-workflow

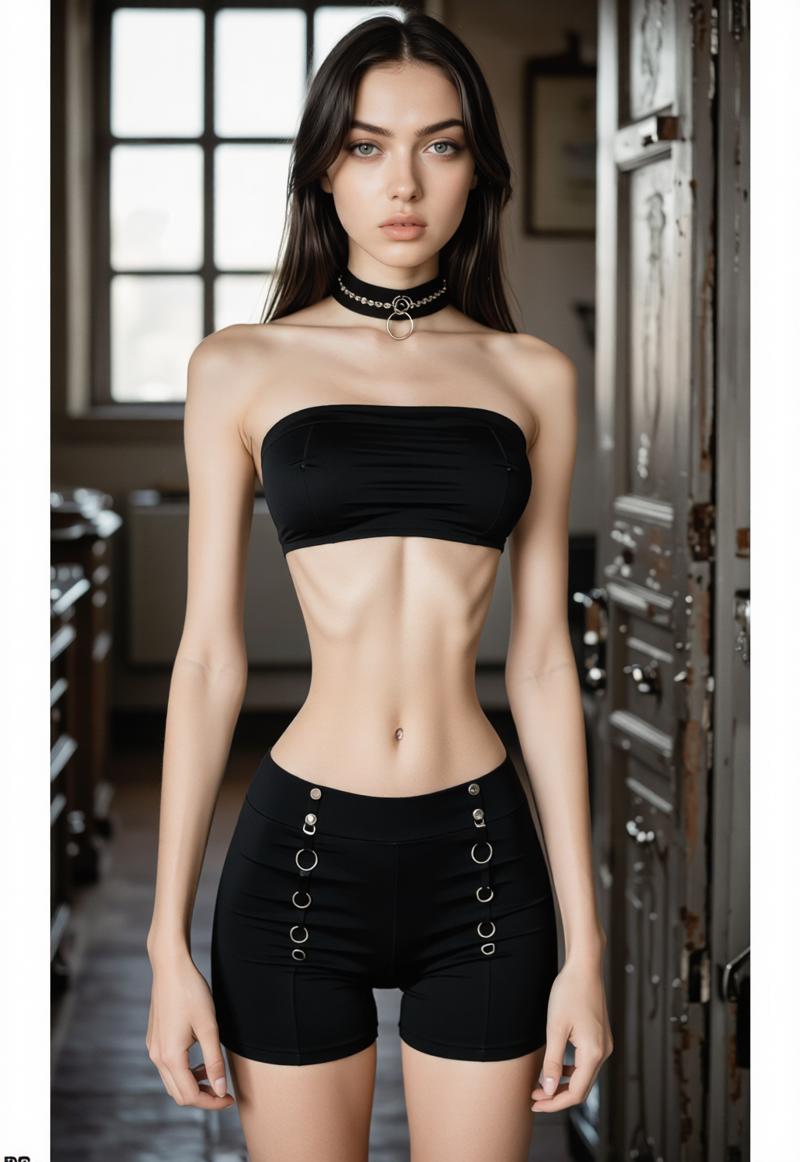

And it should produce some small waist girls:

Good job, you did your first HiDream LoRA!

FAQ:

Q: I want to join your cult!

A: https://discord.gg/PSa9EMEMCe

Q: My wsl environment crashes all the time!

A: Create a .wslconfig file inside your C:\Users\<windows_username> folder with the following content:

[wsl2]

# Limit RAM to avoid host thrashing and WSL crashing silently. Uses 40 out of 64GB, keeping room for Windows

memory=40GB

# Cap CPU usage; you likely don’t need all cores. Adjust depending on how many cores you have (e.g. 16/24 total)

processors=12

# Create swap for stability under load (especially with mixed precision)

swap=40GB

# Optional: custom swap file path on a fast SSD

swapFile=C:\\Users\\<windows_username>\\wsl-swap.vhdx

# Optional: make ports opened inside WSL reachable via localhost on Windows (already the default)

localhostForwarding=true

# For debugging purposes (only enable if everything crashes so people can help you)

# debugConsole=true

In a normal Windows terminal, run wsl --shutdown to restart your WSL instance and activate this config.

If the crashes continue, increase swap and decrease memory.
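To confirm the new limits actually took effect after wsl --shutdown, you can read /proc/meminfo inside WSL. The sketch below is a hypothetical helper. It parses MemTotal and SwapTotal, which the kernel reports in kB (really KiB), and converts them to GiB. Expect MemTotal to come out slightly below the configured memory value, because the kernel reserves some RAM for itself.

```python
# Hypothetical check, run inside WSL after `wsl --shutdown`: confirm the .wslconfig limits.
from pathlib import Path


def meminfo_gib(text: str) -> dict:
    """Parse MemTotal and SwapTotal (reported in kB = KiB) from /proc/meminfo text into GiB."""
    values = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        if key in ("MemTotal", "SwapTotal"):
            kib = int(rest.split()[0])
            values[key] = round(kib / 1024**2, 1)
    return values


if __name__ == "__main__":
    print(meminfo_gib(Path("/proc/meminfo").read_text()))
```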

Version History:

v1.0 - 23.04.2025 - first release