When you train a LoRA of a person with kohya_ss, you probably have a single dataset folder named like this:

2_Person Name

This folder contains all your training images, which should be a healthy mix of closeups/head shots, mid shots and full body shots. The number before the underscore is the repeat count, and everything after it is the class token(s). In this case every image is repeated twice, and all those repeats together make up the training set of one epoch (the training order is shuffled every epoch and depends on the seed).
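To make the naming convention concrete, here is a minimal Python sketch that splits a folder name into its repeat count and tokens. `parse_folder_name` is a hypothetical helper, not kohya_ss's own code. It only follows the rule described above: a number, an underscore, then the tokens.

```python
def parse_folder_name(name: str) -> tuple[int, str]:
    """Split a kohya-style folder name like '2_Person Name' into (repeats, tokens).

    Hypothetical helper that mirrors the naming convention, not kohya_ss's own code.
    """
    # Split on the first underscore only, so tokens may contain further underscores.
    prefix, sep, tokens = name.partition("_")
    if not sep or not prefix.isdigit():
        raise ValueError(f"expected '<repeats>_<tokens>', got {name!r}")
    return int(prefix), tokens.strip()


print(parse_folder_name("2_Person Name"))  # (2, 'Person Name')
```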

A problem I ran into is that I sometimes don't have enough good full body shots, for example. This leads to undertraining of the body type and of faces seen from far away, which is the root cause of poor likeness in generated full body shots. What I need in these cases is to increase the repeats of certain images only, so I simply make another folder:

2_Person Name

4_Person Name

Moving the body shots into the folder with 4 repeats has the desired effect. DataLoader shuffling works freely across multiple folders (I confirmed this just in case), so the randomized training order is not affected; some files are simply trained twice as often per epoch. In trainer terminology, separate folders are intended for training different "concepts" in the same run. But it really is that simple and there is nothing more to it (it's all just convention!).

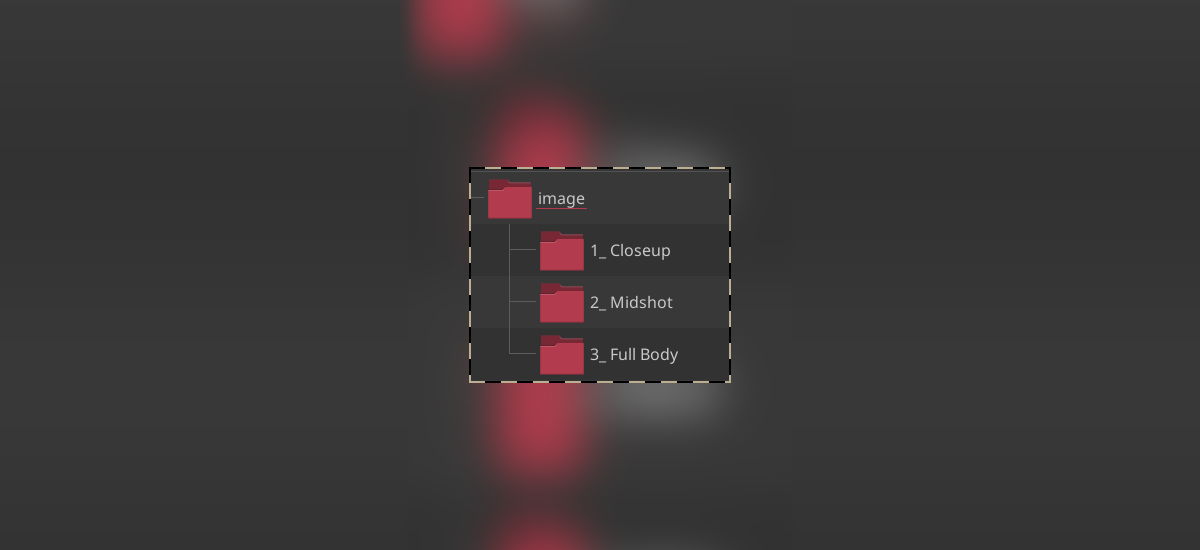
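Here is a simplified model of that behaviour: every image is expanded by its folder's repeat count, and the combined list is shuffled once with the seed. `build_epoch` and the file names are hypothetical. The real trainer does more; for example, it also groups images into resolution buckets when aspect ratio bucketing is enabled. Treat this as an illustration, not as kohya_ss internals.

```python
import random
from collections import Counter


def build_epoch(folders: dict[str, list[str]], seed: int) -> list[str]:
    """Expand each image by its folder's repeat count, then shuffle across all folders.

    `folders` maps a folder name such as '4_Person Name' to its image filenames.
    Simplified, hypothetical model; not the trainer's actual data pipeline.
    """
    epoch: list[str] = []
    for folder_name, images in folders.items():
        repeats = int(folder_name.split("_", 1)[0])  # number before the first underscore
        for image in images:
            epoch.extend([image] * repeats)
    rng = random.Random(seed)  # same seed, same order
    rng.shuffle(epoch)  # one shuffle over the whole list, so folders get interleaved
    return epoch


folders = {
    "2_Person Name": ["close_01.png", "mid_01.png"],
    "4_Person Name": ["body_01.png"],
}
epoch = build_epoch(folders, seed=42)
print(len(epoch))  # 8
print(Counter(epoch)["body_01.png"])  # 4
```

The body shot appears twice as often as each image in the 2-repeat folder, but its positions are spread through the shuffled epoch rather than grouped by folder.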

We can make this more user-friendly if every image has a .txt caption file (which you should always have anyway). In that case the trainer ignores the folder name (the class tokens), apart from the repeat count. Class tokens are only used when a caption is missing, and then they are inserted verbatim as the caption itself. It's just another convention: instance/class tokens are nothing more than fallback captions! So training isn't affected at all when we pick more descriptive folder names like these:

2_Near

4_Far
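The fallback rule can be sketched like this. `caption_for` is a hypothetical helper, and the `.txt` extension is an assumption (the caption extension is configurable in the trainer). This is a simplified illustration, not the trainer's actual code: a caption file next to the image wins, and only an uncaptioned image gets the folder tokens as its caption.

```python
import tempfile
from pathlib import Path


def caption_for(image_path: Path, folder_tokens: str, caption_ext: str = ".txt") -> str:
    """Return the caption an image would get under the fallback rule.

    If a caption file with the same stem exists, its text is used and the folder
    tokens are ignored; otherwise the folder tokens become the caption verbatim.
    """
    caption_file = image_path.with_suffix(caption_ext)
    if caption_file.is_file():
        return caption_file.read_text(encoding="utf-8").strip()
    return folder_tokens


# Demo in a throwaway directory: one captioned image, one without a caption.
with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp) / "4_Far"
    folder.mkdir()
    (folder / "a.png").touch()
    (folder / "a.txt").write_text("Person Name, full body, standing on a beach", encoding="utf-8")
    (folder / "b.png").touch()
    print(caption_for(folder / "a.png", "Far"))  # Person Name, full body, standing on a beach
    print(caption_for(folder / "b.png", "Far"))  # Far
```

The folder name "Far" only shows up as a caption for `b.png`, which has no caption file.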

This has the added benefit that we can split a set even if the repeat counts are equal:

2_Closeup

2_Midshot

4_Full Body

This gives you a much better overview of the balance between the three zoom levels. For example, you will immediately see which kind of image you need more of, which is hard to tell when all the images are only sorted by filename.
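For a quick numeric overview, a small script can count the images in each folder and weight them by the repeat count. This is a minimal sketch with hypothetical helper names. The set of image extensions is an assumption, and it expects every subfolder to follow the `<repeats>_<name>` pattern.

```python
from pathlib import Path

IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}  # assumption: extend if you use other formats


def count_images(dataset_dir: Path) -> dict[str, int]:
    """Count image files in each '<repeats>_<name>' subfolder of a dataset directory."""
    return {
        sub.name: sum(1 for f in sub.iterdir() if f.suffix.lower() in IMAGE_EXTS)
        for sub in sorted(dataset_dir.iterdir())
        if sub.is_dir()
    }


def balance_report(folders: dict[str, int]) -> dict[str, float]:
    """Share of one epoch per folder, weighting each image by its folder's repeat count."""
    weighted = {name: int(name.split("_", 1)[0]) * n for name, n in folders.items()}
    total = sum(weighted.values())
    return {name: w / total for name, w in weighted.items()}


# Example counts instead of a real folder: 10 closeups, 20 mid shots, 15 body shots.
# Prints shares of 16.7%, 33.3% and 50.0%.
report = balance_report({"2_Closeup": 10, "2_Midshot": 20, "4_Full Body": 15})
for name, share in report.items():
    print(f"{name:<12} {share:.1%}")
```

To check a real dataset, pass the folder that contains `2_Closeup` and the others to `count_images`, then give the result to `balance_report`.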

I categorize my images by the size of the face relative to the whole image, so whether the entire body is visible doesn't actually matter for the last category. In closeups, the face fills roughly half of the image or more. Mid shots usually show the upper body. Once the knees come into frame, the face is small enough that the image goes into the "full body" folder.

When you add or remove images, you may of course have to recalculate the repeats. kohya_ss can automate this: under Training | LoRA | Dataset Preparation there is a small tab, "Dreambooth/LoRA Dataset balancing", that does exactly this. I have never used it, though.

What I observed is that a perfectly balanced set of closeups, mid shots and body shots doesn't lead to good training results at all. This happens even when the balance comes from unique images alone, with all repeat counts equal. If you tune your training so that far away shots have good facial likeness, closeups come out with exaggerated features (looking weird, older or like a caricature). Eyes and teeth might also start deforming, at which point we are clearly in overcooked/fried territory.

You would then find that closeups generate perfectly fine at a lower LoRA weight/strength, but the correct fix is to adjust the training instead. After some direct testing on this issue, I settled on the "1/2/3 rule" for now: the number of mid shots times their repeat count should be twice that of the closeups (times their repeat count), and full body shots should be trained roughly three times as often as closeups (equal to closeups + mid shots, so 50% of the total). These are just my findings and only valid for my training sets and settings; you might find that completely different numbers work for you. It's all in the context of training with a specific SDXL checkpoint, and it might even be a model-specific quirk.
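Since the 1/2/3 rule is just arithmetic on images × repeats, you can compute starting values for the repeats. This sketch keeps the closeup repeat count fixed and rounds the other repeats to the nearest integer, so the achieved ratio is only approximate. The function name and category keys are hypothetical. Treat the result as a starting point to check with test generations, not a guaranteed recipe.

```python
def ratio_repeats(counts: dict[str, int], weights: dict[str, int], closeup_repeats: int) -> dict[str, int]:
    """Choose integer repeats so that images x repeats roughly follows `weights`.

    counts:  number of images per category (must include "closeup")
    weights: target ratio per category, e.g. {"closeup": 1, "mid": 2, "body": 3}
    The closeup repeat count is fixed; the other repeats are rounded to the
    nearest integer (at least 1), so the achieved ratio is only approximate.
    """
    # images x repeats that one unit of weight corresponds to
    unit = counts["closeup"] * closeup_repeats / weights["closeup"]
    return {cat: max(1, round(unit * weights[cat] / n)) for cat, n in counts.items()}


counts = {"closeup": 12, "mid": 15, "body": 20}
repeats = ratio_repeats(counts, {"closeup": 1, "mid": 2, "body": 3}, closeup_repeats=2)
print(repeats)  # {'closeup': 2, 'mid': 3, 'body': 4}
print({cat: counts[cat] * r for cat, r in repeats.items()})  # {'closeup': 24, 'mid': 45, 'body': 80}
```

With these counts the effective images × repeats come out as 24, 45 and 80. That is roughly a 16/30/54 percent split rather than an exact 1/6, 2/6, 3/6. Adjusting the closeup repeat count or adding images brings it closer.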

The reason for this imbalance is probably that the different zoom levels train at different speeds. In full body shots, the face is only a very small part of the image, and therefore only a small part of the loss that guides the training. Of course, when we generate images we run a face detailer. That detailing pass is just img2img at closeup or mid shot distance, but we usually run it at a fairly low denoising strength to keep everything consistent. If the far away face is badly off, in head shape for instance, the detailer can't turn it into a face with high likeness.
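Here is a rough number for this. Assume the usual unmasked MSE loss, averaged over every latent pixel. Then a face's share of the per-pixel loss terms is roughly its share of the image area. Take a hypothetical full body shot where the face spans 10% of the image height and 15% of its width:

```latex
\text{face share of loss terms} \approx \frac{h_{\text{face}}}{H} \cdot \frac{w_{\text{face}}}{W} = 0.10 \times 0.15 = 0.015
```

That is about 1.5%, compared with roughly 50% for a closeup where the face fills about half of the image.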

Closeups seem to train very quickly and accurately. This is not surprising, since the face covers almost the entire image. Knowledge about the face also carries over from the mid shots. That's why we can get away with training a closeup only every 6th image on average (2 mid shots and 3 body shots per closeup).

Note that according to the 1/2/3 ratio, 50% of all trained images come from the full body folder. If you find you need a very high repeat count for the body shots, you should really add more (and more varied) images. This helps prevent overfitting on poses and clothes. Also note that if you merge mid shots and closeups (or only have one of the two), it becomes a simple 50/50 rule, and you could let kohya calculate the balance for you every time.

To figure out whether your training run has any of these issues and needs rebalancing, it helps to have a fixed set of test prompts covering closeups, mid shots and full body shots. Simple prompts with facial expressions that appear in the training set work best for this.
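You can build such a prompt set from a few framings and expressions, so every zoom level is checked with the same expressions. The trigger, framing phrases and expressions below are placeholders; substitute wording that matches your own captions. `build_test_prompts` is a hypothetical helper.

```python
from itertools import product

# Placeholders: replace with your own trigger wording and expressions from your captions.
TRIGGER = "Person Name"
FRAMINGS = ["closeup portrait", "upper body shot", "full body shot"]
EXPRESSIONS = ["smiling", "neutral expression", "laughing"]


def build_test_prompts(trigger: str, framings: list[str], expressions: list[str]) -> list[str]:
    """Return every framing/expression combination, grouped by framing."""
    return [f"{trigger}, {framing}, {expr}" for framing, expr in product(framings, expressions)]


for prompt in build_test_prompts(TRIGGER, FRAMINGS, EXPRESSIONS):
    print(prompt)
```

Generating the same nine prompts after each training run makes it easier to spot which zoom level has drifted.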

There are other uses for this separate-repeat-folder method. You can increase the repeats of everything and then add a folder with a repeat count of 1 containing any low quality images you still want to include. They will pass some knowledge on to the model, but hopefully won't infect everything with their grain, noise or general quality level.

Maybe you have a set of high quality images, but they are all from the same event or photo shoot. You want the model to benefit from the variety of camera angles and poses, but you don't want the same piece of clothing to bleed in everywhere. Such a set is also a candidate for inclusion at a lower repeat count.

Now that you know that instance/class tokens are not really a thing (just fallback captions) and concept folders are just made up, maybe you can think of other uses.

Summary:

- Half of all training images (times repeats) should probably be body shots (face zoomed out)
- Of the half that is closer than full body, it's probably enough for one third to be closeups
- The contents of kohya_ss concept folders are mixed freely during training
- Instance/class tokens from folder names are ignored if all images have captions
- We can reuse the concept folder mechanism to freely adjust repeats for groups of images
- Take control of repeats and adjust as needed (maybe don't equalize everything)