To improve training accuracy, I had been running multiple taggers and merging their results with a separate script. When I shared my data prep and captioning pipeline, an anon replied that he was doing the same thing in an automated way. I took inspiration from his post and managed to put together an inference script that automates the whole process.

This is just a fork of tag_images_by_wd14_tagger.py from the kohya_ss training scripts repository, modified to run multiple taggers at the same time and, in theory, reduce tagging errors (false positives and false negatives). In this version I run swinv2, conv2 and moat2 together and average their per-tag classification scores. It's useful if you don't plan to spend much time revising the captions.
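To make the averaging idea concrete, here is a minimal sketch, not the script's actual code. It assumes every tagger outputs a probability for each tag in the same tag list and order; the function name `merge_predictions`, the 0.35 threshold and the toy scores are illustrative only.

```python
from typing import Sequence


def merge_predictions(
    probs_per_model: Sequence[Sequence[float]],
    tags: Sequence[str],
    threshold: float = 0.35,
) -> str:
    """Average per-tag probabilities from several taggers into one caption.

    probs_per_model holds one probability vector per tagger, each aligned
    with `tags` (same length, same tag order). This alignment is an assumption.
    """
    if not probs_per_model:
        raise ValueError("need predictions from at least one tagger")
    if any(len(probs) != len(tags) for probs in probs_per_model):
        raise ValueError("every prediction vector must match the tag list")

    n_models = len(probs_per_model)
    # zip(*...) walks the tags column by column: one score per tagger for each tag
    mean_probs = [sum(scores) / n_models for scores in zip(*probs_per_model)]

    # Keep tags whose averaged score clears the threshold, most confident first
    kept = [(tag, p) for tag, p in zip(tags, mean_probs) if p >= threshold]
    kept.sort(key=lambda item: item[1], reverse=True)
    return ", ".join(tag for tag, _ in kept)


if __name__ == "__main__":
    # Suppose the image shows a hat but no smile
    tags = ["1girl", "smile", "hat"]
    predictions = [
        [0.98, 0.60, 0.30],  # tagger A: false positive on "smile", misses "hat"
        [0.95, 0.20, 0.45],  # tagger B
        [0.97, 0.10, 0.50],  # tagger C
    ]
    print(merge_predictions(predictions, tags))  # prints "1girl, hat"
```

In this toy case tagger A on its own would caption "smile" and drop "hat"; averaging with the other two taggers removes the false positive and recovers the false negative, which is the effect the fork is after.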

Downloads

Setup

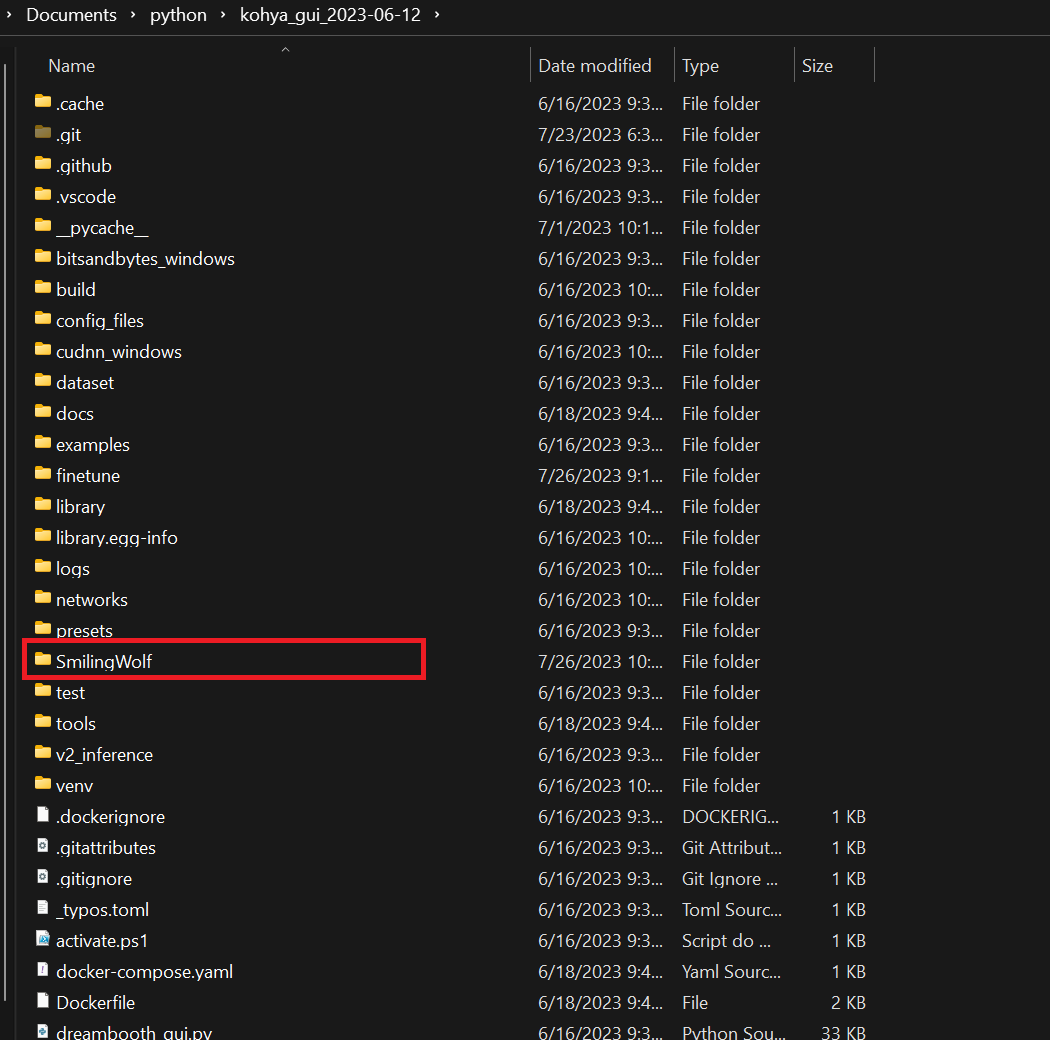

1 - Create a new folder named "SmilingWolf" in the root folder of your training scripts of choice (in my case, bmaltais' GUI)

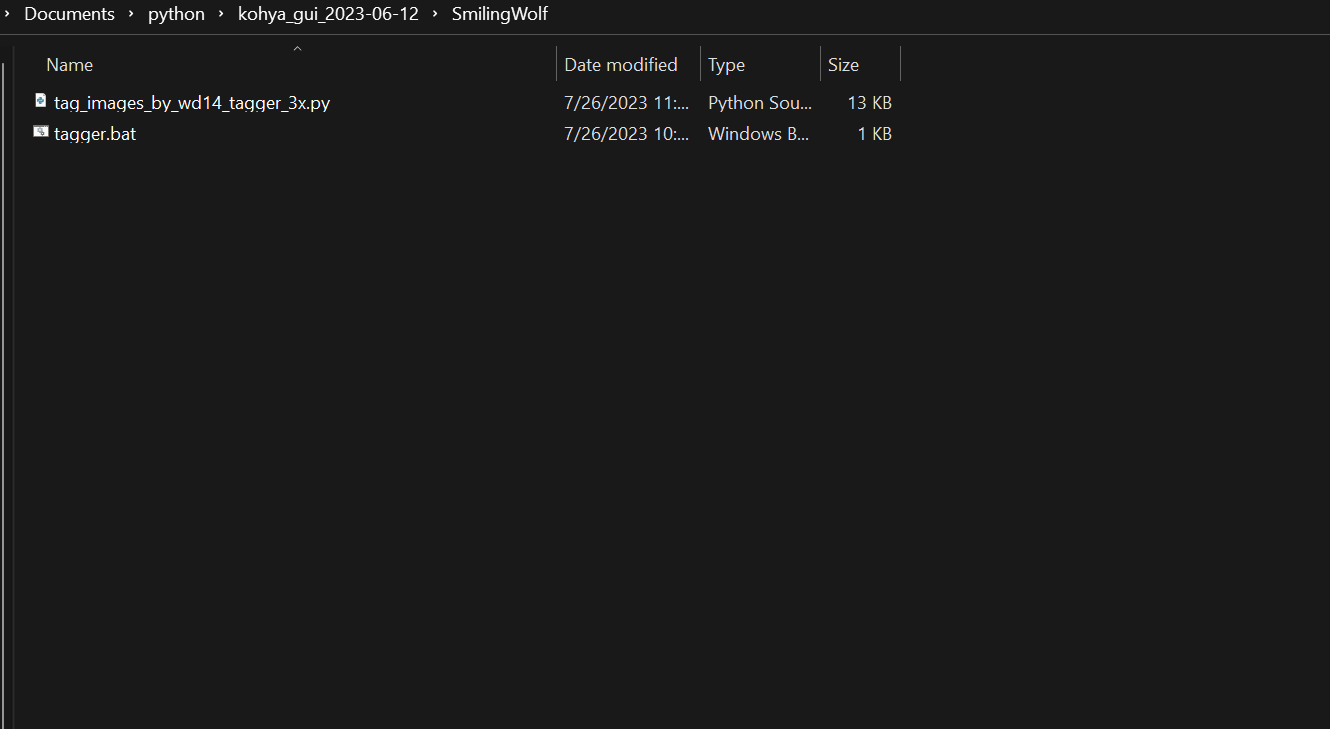

2 - Download the modified tagger inference script and the batch file and put them inside the "SmilingWolf" folder

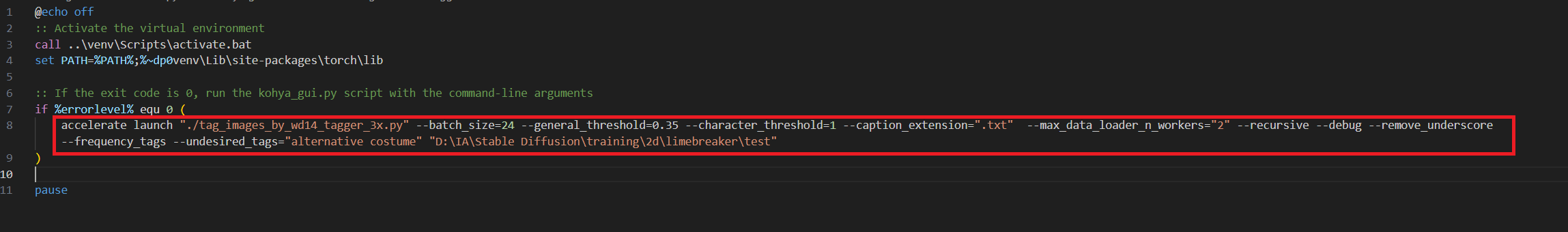

3 - Open the "tag_images_by_wd14_tagger_3x.py" script in any text editor, go to the "TAGGERS" variable on line 23 and add or remove the models you want to use

You only need to repeat the steps above when you want to download and use a new tagger that you haven't already installed and referenced in the script.
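As a rough illustration of step 3, the "TAGGERS" variable might look like the list below. The exact format the modified script expects is an assumption here: the entries are SmilingWolf's Hugging Face model names, treated as sub-folders of the "SmilingWolf" folder, and the post doesn't say which ConvNeXt model "conv2" refers to, so that entry is a guess. `missing_taggers` is a hypothetical helper for checking which listed models haven't been downloaded yet.

```python
from pathlib import Path

# Hypothetical TAGGERS list (check line 23 of your copy for the real format).
# Entries are assumed to be model folder names inside the "SmilingWolf" folder.
TAGGERS = [
    "wd-v1-4-swinv2-tagger-v2",
    "wd-v1-4-convnextv2-tagger-v2",  # guess for "conv2"
    "wd-v1-4-moat-tagger-v2",
]


def missing_taggers(base_dir, taggers=TAGGERS):
    """Return the tagger names that have no model folder under base_dir."""
    base = Path(base_dir)
    return [name for name in taggers if not (base / name).is_dir()]


if __name__ == "__main__":
    missing = missing_taggers("SmilingWolf")
    if missing:
        print("Not downloaded yet:", ", ".join(missing))
    else:
        print("All taggers found.")
```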

Captioning

4 - Open the "tagger.bat" file in any text editor and edit the arguments as you would when running the tagger normally, then run the batch file to call the tagging script and start the captioning process

5 - Go take a walk and come back when it's done!
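For reference, the arguments edited in step 4 are passed straight to the tagging script. The sketch below builds an equivalent command line from Python instead of a batch file, purely as an illustration. It assumes the fork keeps the original kohya_ss options `--batch_size`, `--thresh`, `--caption_extension` and `--remove_underscore` (confirm with `--help` on your copy); `build_tagger_command` and the image folder path are hypothetical.

```python
import shlex
import sys
from pathlib import Path


def build_tagger_command(image_dir, batch_size=4, thresh=0.35,
                         caption_extension=".txt", remove_underscore=True):
    """Build the argv list a tagger.bat-style launcher might run.

    Option names are assumed from kohya_ss's tag_images_by_wd14_tagger.py.
    """
    cmd = [
        sys.executable,  # the Python interpreter running this sketch (ideally the venv one)
        str(Path("SmilingWolf") / "tag_images_by_wd14_tagger_3x.py"),
        str(image_dir),  # folder with the training images (positional argument)
        "--batch_size", str(batch_size),
        "--thresh", str(thresh),
        "--caption_extension", caption_extension,
    ]
    if remove_underscore:
        cmd.append("--remove_underscore")  # assumed: write "long hair" instead of "long_hair"
    return cmd


if __name__ == "__main__":
    cmd = build_tagger_command(Path("train") / "img")
    print(shlex.join(cmd))
    # To actually run it: subprocess.run(cmd, check=True)
```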

Credits:

Anon from /h/ who shared the insight for this

SmilingWolf for his amazing work on training the WD1.4 taggers

Kohya-ss for the original tagging and trainer scripts

bmaltais for the training GUI and venv batch files