Introduction

Hello, everybody. I am bweh and I make videos using ComfyUI and Wan 2.1. More specifically, I make NSFW videos with anime-style women either having sex or looking sexy. At the time of writing this, I have been on Civitai for a little over two months, I have published over 60 videos, and I have just over 450 followers. Honestly, I haven’t been around for very long at all, and I don't think I do very much around here.

I am writing this article for a rather unfortunate reason. After this article is published to Civitai, I will be halting all my AI video generation work. I will no longer be creating or uploading any AI-generated content anywhere on the internet, and I will likely leave the site soon after. I will explain my reasons for leaving this hobby behind at the end of the article. This article will mark the conclusion of my time with this hobby.

Because of this, I wanted to leave behind this guide for how I’ve used ComfyUI and Wan to create my videos. This guide is equal parts how-to and a look behind the scenes at my process. It's intended both for other creators to learn from, and for others curious about my process. I’ve been asked a few times over DMs how I make my videos, and I think an article explaining it would be helpful.

This article is also just me pretending that my very brief time here mattered, and reflecting on what I’ve done and what I think it meant to me.

With all of this in mind, I would like to caution readers on what to expect. For starters, I am not an expert on all things AI and video generation. While I have said this is a guide, this is not me trying to tell people I have the “right” way of doing things, because I honestly don’t know what the best practices are. For a lot of what’s described in this article, the reason I did it is just “I don’t know” or “I’m not sure”. I am seriously not an expert in this.

All of my knowledge is self-taught. There are many things I just don’t know enough about, and I haven’t been able to deep dive into as many parts as I would have wanted while I was still active. The knowledge I am sharing is my own, and I suspect there may be parts where I’m wrong or working suboptimally. I hope others can understand, and I hope what little knowledge I can share may be helpful in some way.

Initial setup

Hardware

I want to start with my hardware. You can likely tell I don’t use Civitai’s generator. All my image and video generation is done on my PC with its NVIDIA GeForce RTX 5080 and 32GB of RAM. It’s no 4090/5090, but it is quite powerful for running AI workflows. As a result, I had far fewer performance issues and did very little optimization. I occasionally ran into OOM errors, but nothing that couldn't be solved by restarting ComfyUI or my PC. I haven't tried doing any video generation with less VRAM, so I don't know how to optimize for less powerful hardware.

Unfortunately, this means if you are running on less VRAM, I don’t have any solutions for you. In a way, it feels more like I’m brute forcing myself through my problems with sheer power from the GPU.

My content style

As you can tell by checking out my profile, my content is focused entirely on making videos of female original characters, often having sex, other times dancing or moving erotically. I consider my tastes pretty tame and I definitely avoid more extreme fetishes. And while I do prefer larger breasts and they feature prominently in my videos, I do so out of convenience for animation and because I don’t want people or platforms to freak out over smaller or flat chests for looking underaged.

I prefer anime-style depictions over realistic or semi-realistic styles. My mental baseline for what my videos should resemble is inspired by Live2D animations. The goal for my videos is to create the impression of an animated image in motion, albeit more smoothly and with a high frame rate. I also don’t want my videos to appear too 3D, or like the characters are CG models. Not that I dislike that look, but the video has to match the anime-style image I create first.

My steps for creating videos consist of:

Creating the base image or starting frame of the video

Running a Wan 2.1 image-to-video generation workflow to generate the video

Refining or iterating on the video

I will describe the first two steps in their own sections. The last step has far less content than the others, unfortunately, so I combined it with the second. The short explanation is that refining is hard and time-consuming and there’s no structured way to properly explain it, so you’re mostly on your own there. That being said, the first two steps ought to show you how to get started.

Creating the start frame

This first step is just typical image generation, and there isn’t that much I need to explain if you already know how to generate images.

To achieve the style for my videos, I use Hassaku XL for my checkpoint. There isn't a specific reason why I use Hassaku XL over anything else. I just tried it initially, thought the style was nice, and I stuck with it. Why I haven't tried, say, Pony, is more due to laziness than anything else.

My workflows

I have my super basic general workflow attached ("basic-char-generator.json") if people are interested. It’s essentially just the default ComfyUI image generation workflow but with a LoRA stack loader stuck in the middle. I debated whether it was even necessary to include because everybody should know how to create something like this by themselves.

For LoRAs, I often use one for better quality (https://civitai.com/models/929497) and another for better hands (https://civitai.com/models/200255). I’ll be honest that I don’t know how much having either of these two actually contributes to the quality of my images and I feel like I’ve just set them once on my work template and have left them in since then.

For the KSampler, I use 20 steps, CFG 8.0, Euler sampler, Karras scheduler, and a denoise of 1.0. How much these settings matter isn't entirely clear to me, and I haven't experimented enough to determine which settings work best. I remember reading somewhere online that Karras was good for anime-style images, so that's what I stuck with. Honestly, most of it was set just once and I've kept using the same settings since then.

I have the seed set to "randomize" when I'm generating new images, but when I load an image I generated with this workflow, I switch it to "fixed". Often I'll need to load an older image and make a small change to it while keeping as much of the original image as possible. Setting the seed control to fixed ensures the image doesn't change drastically.

My latent image size is 832px width and 1216px height. This was one of the recommended resolutions for Hassaku XL, so I stuck with it.
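For reference, the core of a general workflow like this can be written out in ComfyUI's API (JSON) format. This is a sketch under assumptions, not the attached "basic-char-generator.json": it uses only core ComfyUI nodes and chains two core `LoraLoader` nodes in place of the LoRA stack loader custom node. The checkpoint and LoRA filenames and the LoRA strengths are hypothetical placeholders. The sampler settings and latent size are the ones described above.

```python
import json

# Hypothetical filenames: substitute whatever is in your models folders.
CHECKPOINT = "hassaku_xl.safetensors"
LORAS = [("quality_lora.safetensors", 1.0), ("hands_lora.safetensors", 1.0)]


def build_image_workflow(positive: str, negative: str, seed: int) -> dict:
    """Return a ComfyUI API-format graph: node id -> {class_type, inputs}.

    Links between nodes are written as [source_node_id, output_index].
    """
    graph = {
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": CHECKPOINT}},
    }
    model_ref, clip_ref = ["1", 0], ["1", 1]  # checkpoint outputs: MODEL, CLIP, VAE
    # One core LoraLoader per LoRA, chained (stands in for a LoRA stack node).
    for i, (name, strength) in enumerate(LORAS):
        node_id = str(10 + i)
        graph[node_id] = {"class_type": "LoraLoader",
                          "inputs": {"model": model_ref, "clip": clip_ref,
                                     "lora_name": name,
                                     "strength_model": strength,
                                     "strength_clip": strength}}
        model_ref, clip_ref = [node_id, 0], [node_id, 1]
    graph["2"] = {"class_type": "CLIPTextEncode",
                  "inputs": {"text": positive, "clip": clip_ref}}
    graph["3"] = {"class_type": "CLIPTextEncode",
                  "inputs": {"text": negative, "clip": clip_ref}}
    graph["4"] = {"class_type": "EmptyLatentImage",
                  "inputs": {"width": 832, "height": 1216, "batch_size": 1}}
    graph["5"] = {"class_type": "KSampler",
                  "inputs": {"model": model_ref, "positive": ["2", 0],
                             "negative": ["3", 0], "latent_image": ["4", 0],
                             "seed": seed, "steps": 20, "cfg": 8.0,
                             "sampler_name": "euler", "scheduler": "karras",
                             "denoise": 1.0}}
    graph["6"] = {"class_type": "VAEDecode",
                  "inputs": {"samples": ["5", 0], "vae": ["1", 2]}}
    graph["7"] = {"class_type": "SaveImage",
                  "inputs": {"images": ["6", 0], "filename_prefix": "char"}}
    return graph


if __name__ == "__main__":
    print(json.dumps(build_image_workflow("1girl, absurdres", "lowres", 42), indent=2))
```

A graph in this shape is what ComfyUI's server expects when you POST `{"prompt": graph}` to its `/prompt` endpoint. Building it in a script is mainly useful if you ever want to queue many variations without clicking through the UI.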

For my positive and negative prompts, I always start from these:

Positive:

1girl,

nsfw,perfect hands,

absurdres,best quality,

score_9, score_8_up, score_7_up, score_6_up

Negative:

lowres, bad anatomy, bad eyes, ((bad hands)), text, error, missing fingers, ((extra fingers)),extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, bad quality, sketch

I do almost all my work with this general workflow. Because I’ve saved a template of my workflow with my prompts, LoRAs, and all my settings prefilled, it’s easy to load and start working on something. It’s simple and easy to change the tags, and I can also reload an image and work from it later on.
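Keeping the base prompts fixed and only appending per-image tags is easy to script. Here is a minimal sketch, assuming plain comma-separated tags. `merge_tags` is a hypothetical helper: it appends new tags after the base ones and skips case-insensitive duplicates, so repeated tags don't pile up when an image is reworked. Weighted tags such as `((bad hands))` are compared as written, so `bad hands` would count as a different tag.

```python
BASE_POSITIVE = ("1girl, nsfw, perfect hands, absurdres, best quality, "
                 "score_9, score_8_up, score_7_up, score_6_up")
BASE_NEGATIVE = ("lowres, bad anatomy, bad eyes, ((bad hands)), text, error, "
                 "missing fingers, ((extra fingers)), extra digit, fewer digits, "
                 "cropped, worst quality, low quality, normal quality, jpeg artifacts, "
                 "signature, watermark, username, blurry, bad quality, sketch")


def split_tags(prompt: str) -> list[str]:
    """Split a comma-separated prompt into trimmed, non-empty tags."""
    return [t.strip() for t in prompt.split(",") if t.strip()]


def merge_tags(base: str, extra: str) -> str:
    """Append `extra` tags after `base`, skipping case-insensitive duplicates."""
    seen, merged = set(), []
    for tag in split_tags(base) + split_tags(extra):
        key = tag.lower()
        if key not in seen:
            seen.add(key)
            merged.append(tag)
    return ", ".join(merged)


# Hypothetical per-image tags layered on top of the saved template.
positive = merge_tags(BASE_POSITIVE, "long hair, smile, 1girl")
negative = merge_tags(BASE_NEGATIVE, "motion lines, trembling")
print(positive)
print(negative)
```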

Aside from the general workflow, there are a few others I made myself that I also occasionally use. Two of these workflows involve using an image tagger to get tags from uploaded images.

The “get-img-tags.json” workflow just takes an uploaded image and uses WD 1.4 to return tags. As with my general workflow, I was also debating whether to include something this basic.

I use it to examine tags. For example, if I want to add something to an image but don’t know how to tag it, I run a reference image through this workflow and look at the tags it returns. Other times, I will upload an image of a person in a pose and then copy all or some of the tags into my general workflow to create a very rough approximation of that original image. It basically just gives me a bunch of tags to start with that I can keep changing or adding to as I see fit.
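Picking which tagger tags to carry over is mostly a matter of dropping low-confidence tags and the ones you don't want. The sketch below assumes the tagger's result is available as a tag-to-confidence mapping. The example scores, the 0.35 threshold, and the exclusion list are illustrative assumptions, not real WD 1.4 output. `select_tags` is a hypothetical helper.

```python
def select_tags(scores: dict[str, float], threshold: float = 0.35,
                exclude: frozenset[str] = frozenset()) -> str:
    """Keep tags scoring at or above `threshold`, highest confidence first.

    Underscores are replaced with spaces to match typical prompt style;
    `exclude` is checked against the space-separated form.
    """
    kept = []
    for tag, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
        name = tag.replace("_", " ")
        if score >= threshold and name not in exclude:
            kept.append(name)
    return ", ".join(kept)


# Hypothetical tagger scores for a reference pose image.
example = {"1girl": 0.99, "standing": 0.81, "arms_up": 0.62,
           "looking_at_viewer": 0.55, "simple_background": 0.48, "outdoors": 0.12}
print(select_tags(example, exclude=frozenset({"simple background"})))
```

The printed string can be pasted straight into the general workflow's positive prompt. Sorting by confidence puts the tags the tagger is surest about first.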

I also experimented with copying the image seed so the general workflow could use both the tags and the seed to create a rough approximation of the original image. This doesn’t quite work, but it was interesting to experiment with.

I took this idea and tried expanding it with my second workflow, “i2i-convert.json”, mostly to avoid manually copying, pasting, and replacing tags between workflows. I’ll be honest that this workflow needs a lot more work and I don’t recommend using it in its current form. It’s incomplete and inefficient, and it’s also not possible to open a generated image and make incremental changes to it.

This workflow is basically trying to do what ControlNet does, but without ControlNet, and with somewhat mixed results. However, I have used it for some of my videos with some surprising success. It’s not intended to make a 1:1 styled copy of the image. It just gives me a varied image and a set of tags I can keep working off of, and it has helped me come up with ideas when I had none.

The final workflow I wanted to share uses Comfy Couple (https://github.com/Danand/ComfyUI-ComfyCouple). I didn't use it all that much due to one major limitation, but I found it helpful for creating basic 2girl yuri images. The problem with generating 2girls images is that you don't have a way of specifying which tags should apply to which specific character in the image. There are supposed to be solutions involving ControlNet, but I wasn't able to get any of them working and this turned out to be the easiest and simplest solution. It's not proper regional prompting, but it lets you ensure some tags apply only to a specific character and not get mixed up.

But be warned that there is a major limitation: this custom node has a prompt size limit that's really easy to hit if you have too many tags. For example, in my above screencap, adding just one more tag to Character 1 causes the workflow to fail. There seems to be a limit on the number of tokens each character's prompt can have, and there isn’t a fix at the moment.

That being said, this workflow and the custom node are fine if the scene and characters aren’t too complicated.

Start frame considerations

The key consideration is that the base image generated in this step isn’t just an image for Wan to animate. The image is actually the first frame of the video. Everything in this image will be interpreted and used by Wan to generate the rest of the frames. Because of this, it’s important to set up this image so the rest of the video can be built from it.

For making a good image, there are a few things I think about:

Use the negative prompt to get rid of undesirable features.

In particular, I put motion lines, trembling, breath, and occasionally orgasm in the negative prompt.

The body trembling lines often seen in anime-style images look particularly bad when animated. These exist in static images to convey motion and sensation, but there’s no need to show them at all in a video because the video is obviously animated. These lines also occasionally show up when a character is climaxing, so orgasm is another tag to add if trembling doesn’t already remove them.

The breath tag isn’t always needed in the negative prompt, but I usually prefer to leave breath out because it can show up as overly thick clouds of smoke when animated.

I sometimes add sweat to the negative prompt. I don’t like how it looks on characters when animated because it just sticks in place and stays unnaturally still while the rest of the body is moving. Sweat drops on the bottom of a character’s chin, for example, often look like a blob stuck to them when animated and can be really distracting.

Anticipate what is going to be moving in the video and anything that might be hidden.

I try to watch out for anything in the image that might look good only in a static image but not when animated. For example, very long and flowing hair always looks great, but remember the hair might just end up floating in place while animated. The same goes for loose single strands of hair. When animated, that single strand might be mistaken for a background detail and get stuck in place instead.

As well, if you are going to have a character undressing in the video, keep in mind that Wan doesn’t actually know what’s under the character’s clothes. While it can anticipate some anatomy, its interpolated results might not be very good. Basically, whatever is in the image is all that Wan has to work off of.

Yuri and group sex scenes are really hard to work with.

Creating yuri scenes, threesomes, or group sex images is shockingly difficult. Hassaku XL seems unable to consistently create good images of these scenes by itself. Just making sure certain tags apply only to a specific character in 2girls images already required a special workflow. I highly recommend finding LoRAs to help with creating these images.

Check for bad hands, questionable anatomy, etc.

Self-explanatory and obligatory. I don’t do any inpainting, but inpainting would help a lot here.

Remember you can always (and likely will) go back to update the image.

It’s hard to anticipate how an image is going to look when it gets animated. Fortunately, you can just drag it back into ComfyUI and load the workflow. When doing this, just remember to change the seed “control after generate” setting to “fixed”. If not, the workflow will create a whole new image from a new randomized seed and it will not resemble the last image you had.
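A fixed seed keeps a reloaded image close to the original because the seed determines the starting noise the sampler works from. With the same seed and settings, the sampler starts from the same noise. The sketch below only illustrates that idea with Python's `random` module. ComfyUI generates its noise with PyTorch, so these values will not match ComfyUI's. The size assumes an SDXL-style latent: 4 channels at 1/8 of the 832x1216 pixel resolution, which is 4 x 152 x 104 values. `initial_noise` is a hypothetical helper.

```python
import random


def initial_noise(seed: int, width: int = 832, height: int = 1216,
                  channels: int = 4) -> list[float]:
    """Flat list of Gaussian noise for one latent of shape (channels, height/8, width/8)."""
    rng = random.Random(seed)  # independent generator, seeded explicitly
    count = channels * (height // 8) * (width // 8)
    return [rng.gauss(0.0, 1.0) for _ in range(count)]


a = initial_noise(123456)
b = initial_noise(123456)  # same seed ("fixed"): identical starting noise
c = initial_noise(123457)  # new seed ("randomize"): unrelated starting noise
print(len(a), a == b, a == c)
```

Any change you make while the seed is fixed therefore starts from the same noise. That is why small prompt edits produce small changes instead of a whole new image.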

Running the Wan 2.1 workflow

I use UmeAiRT's workflow: https://civitai.com/models/1309369. Read the documentation, follow the steps, and maybe even run the setup scripts to install a prepackaged ComfyUI that supports the workflow.

The workflow is literally the only reason I have been able to generate videos. The fact that I was able to make it work, and the way it automatically includes all the required parts, is a miracle. If this workflow did not exist, I would not be here. That's how great this workflow is. And if you can get it working and you have the hardware to run it, you will be able to generate videos exactly the way I do if you use the same settings.

My settings

The settings in the workflow are intimidating at first glance, but not all of them need to be changed. In fact, most can and should just be left alone. Generally, if you don’t know what they do, don’t touch them.

These are all the settings I use or have often touched in some way. However, be aware that I wrote this article while using the v2.2 GGUF workflow. At the time of writing, there is now a v2.3 nightly workflow that is slightly different. Because I have stopped my video generation work, I have not updated to the latest version. The workflow layout doesn’t look too different, so I imagine most of my settings would still work there.

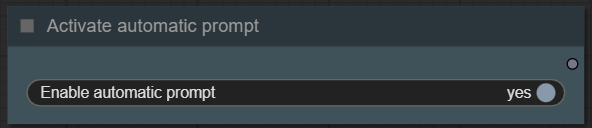

Automatic prompt

This is turned on by default. I don't have any preference for it being on or off. I don't think it matters much because I end up writing quite a bit in the prompt anyway.

Frames

This represents how long the video will be. Since Wan generates videos at 16fps, 80 frames means generating a 5-second video (80 frames / 16 frames per second). This was replaced with a more conventional "Duration" slider in 2.3.

I chose 5 seconds as a balance between video length and generation time. I found 5 seconds to be a decent length that allows just enough animation in the video. Generation time for videos longer than 5 seconds is also excessively long, so 5 seconds seemed alright.
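The frames-to-duration conversion is simple arithmetic at Wan's 16fps. Here is a small sketch with hypothetical helper names, in case you want to think in seconds while the v2.2 workflow asks for frames.

```python
WAN_FPS = 16  # Wan 2.1 generates video at 16 frames per second


def seconds_from_frames(frames: int, fps: int = WAN_FPS) -> float:
    """Video length in seconds for a given frame count."""
    return frames / fps


def frames_from_seconds(seconds: float, fps: int = WAN_FPS) -> int:
    """Frame count for a target length, rounded to the nearest whole frame."""
    return round(seconds * fps)


print(seconds_from_frames(80))  # 5.0
print(frames_from_seconds(5))   # 80
```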

CFG

I normally just leave it at 6, but if I want the model to follow my prompt a bit more closely, I set it to 7 or 7.5. Don’t set it very high expecting it to follow your prompt exactly, however.

Shift

This was changed to “Speed” in the latest version. Honestly, I just left this alone most of the time.

Noise

Just like the seed settings in image generation, I leave it as “randomize” while generating videos and I set it to “fixed” when I load an existing file.

Preview and output

While there's no harm in leaving this completely unchanged, I disable save metadata and save output for both the preview and the output. When enabled, these options generate additional video and thumbnail files, but I don't need them because the collapsed "save interpolated" and "save upscaled" steps already create them.

Interpolation

Interpolation renders extra frames between the original 16fps frames based on the interpolation ratio. I’ve gotten very kind messages from others asking how my videos are so smooth, and I honestly think it’s entirely this setting doing it. The extra frames make a massive difference in smoothness for the video.

I just leave the ratio at its default 2, which would make the final video 32fps. This is essential for a smoother video and I highly recommend turning it on.

Upscale

Also essential for a higher quality video. I just use the default RealESRGAN_x4 upscaler. I leave the upscale ratio at 2.
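Taken together, the interpolation ratio sets the final frame rate and the upscale ratio sets the final resolution, while the video's length stays the same. This sketch rests on two assumptions. First, the 480x832 base size is a hypothetical example for the 480p model, since the real size depends on the start frame. Second, the upscale ratio determines the final size regardless of the upscaler model's native factor. `VideoSpec` and `final_spec` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VideoSpec:
    width: int
    height: int
    fps: int


def final_spec(base: VideoSpec, interpolation_ratio: int = 2,
               upscale_ratio: float = 2.0) -> VideoSpec:
    """Frame rate scales with the interpolation ratio; resolution with the upscale ratio.

    Duration is unchanged: interpolation adds in-between frames, it does not
    lengthen the clip.
    """
    return VideoSpec(width=round(base.width * upscale_ratio),
                     height=round(base.height * upscale_ratio),
                     fps=base.fps * interpolation_ratio)


# Hypothetical 480p-model output size.
print(final_spec(VideoSpec(width=480, height=832, fps=16)))
```

With both ratios at 2, a 16fps clip comes out at 32fps and twice the width and height.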

Sage attention

This one is critical. I highly recommend getting Sage attention working if you want to do anything with Wan 2.1 video generation, period. It's complicated to set up and requires extra work, but it's worth it: with it on, my generations finished at least a minute faster.

Optimizations

2.3 has a bunch of new options that I can't comment on. From 2.2, the only settings I enabled were Skip layer and Tea cache. Truth be told, I haven't done any benchmarking to determine what difference enabling either or both of them makes, but I enable them and trust that they improve my generation times.

I couldn't get Torch compile working, and I'd probably leave it off anyway because I use LoRAs. I also turn off CFGZero most of the time. I was getting some really strange results when using it: either warped or discoloured video, or some really bizarre and outright cursed animation. I'm unsure why, but I seemed to be doing okay without it.

Models

The model depends on your GPU. For my 5080, I use the 480p Q5_K_S GGUF model.

I also enable the virtual VRAM, and I typically have it set to 6-8GB. I’ve noticed I get significantly slower generation times if I use the Q5 model with 0 virtual VRAM, so I’ve kept it enabled ever since.

LoRAs

My videos depend quite heavily on LoRAs and I have a list of the ones I've used the most frequently. Note this is not intended to be an endorsement of these LoRAs over any others, or me trying to sell you on using them. These just turned out to be the ones I use most because in my experience, I could just add them and I either know exactly what strength to set them to or leave them on 1.0 strength for acceptable results.

https://civitai.com/models/1307155/wan-general-nsfw-model-fixed

Honestly, just a pretty good one to have for any occasion. At the very least, I feel like it makes sex movements and animations a lot smoother when combined with other more specific LoRAs and even does sex positions pretty well by itself. For these reasons, I always include it in my videos at the recommended strength of 0.9.

https://civitai.com/models/1343431/bouncing-boobs-wan-i2v-14b

I don't think the choice of exactly which boob bouncing LoRA matters that much, but I always keep going back to this one for convenience. I usually leave it at 1.0 if the character has large tits and I really want them to shake, but then I gradually turn it down if they shake too much or if the character has smaller tits. This one does add quite a bit of jiggle, if that's what you're into (I am).

https://civitai.com/models/1362624/lets-dancewan21-i2v-lora

This is one of my favourite LoRAs for a general sexy dance and body movement. I didn't publish nearly as many videos using this, but I often like creating a base image of a girl and using this LoRA just to animate her moving. Combine it with others and it makes for some really interesting movements.

https://civitai.com/models/1376409/sexy-enhancer-wan-i2v-14b

This one is a bit unpredictable to use, but I sometimes like the results. It just makes the character do some extra sexy movements, although it does slow down their motion quite a bit. I include it, particularly with the dance LoRA, at a very low strength of 0.4 or even lower. I find it adds some really interesting movement variation that's worth experimenting with, even just for a normal sex scene.

https://civitai.com/models/1331682/wan-pov-missionary

A lot of my videos seem to involve pretty generic male POV missionary sex and this one generally works really well. No complaints.

https://civitai.com/models/1472688/female-orgasm-lora-for-wan21-i2v-and-hunyuan-video

Quickly became one of my favourite recent LoRAs. I always need the video to have some kind of extra movement or reaction to avoid being too static. This also has the added benefit of not warping the face too much.

I also use various other LoRAs depending on the variety of sex positions I'm showing. I don't have any to specifically mention because they vary quite a bit.

Picking LoRAs requires a lot of experimentation to understand how the specific LoRA works, what strengths they should be set to, and remembering what keywords to trigger them with. I'll admit that I just keep a text file open on the side that mentions the LoRA by filename, what trigger phrases it needs, and a link to their page on Civitai so I could check them again if needed. I'm positive there has to be a better way, but I never bothered to find out.

I often use three or four LoRAs: usually the general NSFW LoRA, some kind of bouncing breasts LoRA, and a LoRA that specializes in whichever activity is most prominent in the video. And then, I sometimes add an optional fourth like the orgasm or sexy dance enhancer depending on whether I want some extra movement variation.
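The side text file of LoRA notes could also be a small structured record that a script can read from. Here is a sketch of that idea. The URLs, trigger texts, and strengths come from this article. The filenames are hypothetical placeholders, and `stack_for` is an illustrative helper, not part of any ComfyUI node. The sexy enhancer's trigger isn't given in this article, so it is left empty.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoraNote:
    filename: str   # hypothetical placeholder filename
    trigger: str    # text the prompt must contain ("" if none noted)
    strength: float  # usual starting strength
    url: str


NOTES = {
    "general": LoraNote("wan_general_nsfw.safetensors", "nsfwsks", 0.9,
                        "https://civitai.com/models/1307155/wan-general-nsfw-model-fixed"),
    "bounce": LoraNote("bouncing_breasts.safetensors", "Her breasts are bouncing", 1.0,
                       "https://civitai.com/models/1343431/bouncing-boobs-wan-i2v-14b"),
    "enhancer": LoraNote("sexy_enhancer.safetensors", "", 0.4,
                         "https://civitai.com/models/1376409/sexy-enhancer-wan-i2v-14b"),
}


def stack_for(keys: list[str]) -> tuple[list[tuple[str, float]], list[str]]:
    """Return (filename, strength) pairs for the chosen LoRAs and their trigger texts."""
    chosen = [NOTES[k] for k in keys]
    stack = [(n.filename, n.strength) for n in chosen]
    triggers = [n.trigger for n in chosen if n.trigger]
    return stack, triggers


stack, triggers = stack_for(["general", "bounce", "enhancer"])
print(stack)
print(triggers)
```

The same records could be saved as a JSON file. The point is that the filename, trigger, usual strength, and link all live in one place instead of in memory.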

For LoRA strengths, I typically set them in values between these ranges:

0.85 - 1.0: high strength that should influence the model the most

0.70 - 0.75: slightly less strength than the above so it isn’t as overpowering

0.50 - 0.65: “medium” strength

0.30 - 0.45: very low strength where the LoRA is just barely there

It takes a lot of trial-and-error to know exactly what certain LoRAs need to be set to. Part of the reason I like the ones I listed is that I know most of them can be set at high strength without negatively affecting the video. Otherwise, I often add LoRAs at high strength and then gradually bring them down into the next lower range if the LoRA is causing problems. I’m also not afraid to drop a LoRA strength all the way down to 0.3, and there have been a few that I’ve done that with.
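The strength ranges above, and the habit of stepping a problematic LoRA down one range at a time, can be written as a small lookup. In this sketch, `BANDS` mirrors the list above. Two choices are assumptions about how the step-down works, not rules from the article: values in the gaps between ranges are labelled "between ranges", and the new strength is the top of the next lower range.

```python
from typing import Optional

# (name, low, high) ranges from the list above, strongest first.
BANDS = [
    ("high", 0.85, 1.0),
    ("slightly lower", 0.70, 0.75),
    ("medium", 0.50, 0.65),
    ("very low", 0.30, 0.45),
]


def band_of(strength: float) -> str:
    """Name of the range containing `strength`, or 'between ranges' for the gaps."""
    for name, low, high in BANDS:
        if low <= strength <= high:
            return name
    return "between ranges"


def step_down(strength: float) -> Optional[float]:
    """Top of the first range lying entirely below `strength`.

    Returns None when already in the lowest range; from there, only small
    manual nudges toward 0.3 remain.
    """
    for _name, _low, high in BANDS:
        if high < strength:
            return high
    return None


print(band_of(0.9), step_down(0.9))  # high 0.75
```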

Prompts

I treat the prompts as equal parts LoRA keyword delivery and a loose, general guide for what actions or bigger movements Wan should make in the video. It’s important to note that Wan is never going to follow the prompt 100%. Even if you set the CFG to some high value like 8.0-9.0, the model is not going to magically follow exactly what the prompt says. For example, I would use the prompt to tell Wan the video will have a sex scene in a certain position, but I can’t tell it to move a hand to a specific body part, so I avoid trying to specify finely detailed actions like these.

It’s also important to note that the prompt should not contain any excessive body motions or movements that aren’t being guided by a LoRA. If you ask Wan to move the body too much, then Wan needs to guess and interpolate what the body is supposed to look like while doing that movement and the effects often look really awkward.

The prompts I write tend to look really bizarre. Aside from having to just shove in whatever trigger text the LoRAs need, my prompts tend to use short, awkward sentences with inconsistent punctuation, spacing, and capitalization, and they directly describe what is happening in the scene.

I write my prompts so they start by describing or summarizing what the model or viewer should be seeing in the scene. After that, I describe the specific actions going on that should be visible in the video. I also try to write each descriptive sentence so it gets more specific and detailed than the last.

And to finish things off, I optionally also try to add one very small additional movement or action to the video. Something extra, often done by the woman, that breaks up the monotony of just penis back-and-forth motion and bouncing breasts. I want to at least have a varied facial expression, an orgasmic reaction, or maybe a body movement of some kind.

For example:

nsfwsks.a woman is having missionary sex with the viewer. The viewer is quickly thrusting his penis back and forth in the woman's vagina. The viewer's penis goes in and out of her vagina. Her breasts are bouncing. The woman is panting and moaning, she experiences an orgasm

To break this down:

nsfwsks - this is the trigger for the general nsfw model LoRA I like to use.

a woman is having missionary sex with the viewer - a general description of what the scene is about. I often try to explicitly mention the woman in the scene and the man or viewer doing the thrusting.

The viewer is quickly thrusting his penis back and forth in the woman's vagina - for a generic vaginal sex scene, it was always helpful to explicitly mention how the penis-holder is thrusting their penis back and forth. This is either a trigger phrase from the LoRAs I commonly use, or something Wan 2.1 can be told to specifically animate. I also include this to try and dictate the speed of the thrusting by saying they are "quickly" thrusting. These statements are necessary or else the video just doesn't have enough thrusting motion.

The viewer's penis goes in and out of her vagina - same as above, but now describing that the penis is actually going in and out of the vagina. It often feels necessary to add this to specifically tell the model to make the penis go back and forth.

Her breasts are bouncing - the trigger text for the bouncing breasts LoRA I like to use. The text is very short and easy to remember, so this is probably why I use this LoRA.

The woman is panting and moaning, she experiences an orgasm - I often try to make the woman show some kind of reaction and movement while the scene is happening to keep it from being too static. Making a mention of them panting or moaning often causes them to change their facial expression. "she experiences an orgasm" is the trigger text for the orgasm LoRA and it guarantees a changing facial expression, which is why I like the LoRA.
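The prompt structure described above is regular enough to template. It runs in this order:

1. A leading trigger word.
2. A scene summary.
3. Progressively more specific action sentences, which is also where sentence-style trigger phrases go.
4. An optional extra reaction.

Here is a minimal sketch. `build_wan_prompt` is a hypothetical helper, and the example sentences are neutral placeholders rather than a recommended prompt.

```python
from typing import Optional


def build_wan_prompt(lead_triggers: list[str], summary: str, actions: list[str],
                     extra: Optional[str] = None) -> str:
    """Join prompt parts in order: triggers, summary, specific actions, optional extra.

    Each part becomes its own short sentence; stray trailing periods are
    removed so the parts join cleanly with ". ".
    """
    parts = [*lead_triggers, summary, *actions]
    if extra:
        parts.append(extra)
    sentences = [p.strip().rstrip(".") for p in parts if p.strip()]
    return ". ".join(sentences)


print(build_wan_prompt(
    lead_triggers=["TRIGGER_WORD"],  # placeholder for a LoRA trigger word
    summary="a woman is dancing in a bedroom",
    actions=["She sways her hips from side to side", "Her hair moves with her"],
    extra="She smiles at the viewer",
))
```

Ordering the action sentences from general to specific stays a manual job. The template only guarantees the parts land in the same order every time.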

Sometimes, I add these extra parts to the prompt:

the woman looks directly at the viewer, or anything similar if the base image has a POV view, or if I need to just change where the subject is looking.

no talking, mute and speaking in the negative prompt to try and prevent the character's mouth from moving if it is flapping too much. It sometimes works.

Generating the video

Based on my hardware and my choice of settings, model, and virtual VRAM, with sage attention and tea cache enabled, my videos take roughly 7-8 minutes in total to finish generating. The first video generation after I start up ComfyUI always takes the longest because it has to load the Wan model, but not by much. Overall, 7-8 minutes is the time I expect a video to take.

However, UmeAiRT's workflow also generates the preview before it moves onto its interpolation and upscaling steps. My preview generates around 3 minutes into the total 7-8 minute complete generation. What this often means is I can wait 3 minutes, see a preview for the video, and then decide if I want to let it proceed to interpolation and upscaling, or cancel the run early to adjust my prompt or LoRA strengths.

Because I disabled the save output from above, the workflow generates two sets of files: the interpolated video and its metadata file (marked by the “_IN_” in their file names) and the upscaled video and its metadata (“_UP_”).

The upscaled files marked with UP in their filenames are the final output files that should be kept.
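Since only the `_UP_` files are worth keeping, sorting the output folder can be scripted. This sketch uses only the standard library and assumes the `_IN_`/`_UP_` markers appear in the filenames exactly as described above. The folder paths are hypothetical. It moves the upscaled video and its metadata into a separate folder and leaves everything else in place rather than deleting anything.

```python
import shutil
from pathlib import Path


def collect_upscaled(output_dir: Path, keep_dir: Path) -> list[Path]:
    """Move every file whose name contains '_UP_' from output_dir into keep_dir.

    Returns the new paths. '_IN_' files (and anything else) are left untouched.
    """
    keep_dir.mkdir(parents=True, exist_ok=True)
    moved = []
    for path in sorted(output_dir.iterdir()):
        if path.is_file() and "_UP_" in path.name:
            target = keep_dir / path.name
            shutil.move(str(path), str(target))
            moved.append(target)
    return moved


# Example call with hypothetical folders:
# collect_upscaled(Path("ComfyUI/output"), Path("keepers"))
```

Moving instead of deleting means a mistaken marker check costs nothing. The `_IN_` files can be cleared out by hand once you're sure.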

Refining/fixing the video

This really ought to be its own section and be a lot more detailed, but I found myself unable to write it due to tone and a lack of good or structured information to share.

This is the step where, after your video is generated, you look at it and realize it has problems. There are countless possible problems your video can have, ranging from bad to outright cursed animation, character mouths uncontrollably flapping open and closed, sex animations without enough movement or with the wrong movement, or the video not doing something your prompt said it should. Or it just doesn’t look good for some minuscule reason.

Whatever the reason, this is the step where you need to try and fix your video by figuring out what combination of prompt, LoRAs and LoRA strengths, and noise seed will make it acceptable.

I’ll be honest: this part is awful. It is the longest and most grueling part of the video generation process because there is no reliable or systematic way to fix specific problems in the output video. Most of the time will be spent making a small change and running the workflow again to see what happens. Whether that change is to the prompt, the LoRAs, or the randomized noise seed just depends on how the video is going and what you feel like. If it works, great. If it doesn’t, you’re just doing it again. And again. And again…

To give some perspective on how much time I spend refining a video, remember that it takes 3 minutes out of the total 7-8 for the preview to render, so I can stop the run if it’s already not looking good, tweak the values, and run it again. But doing this means the new total time is now around 6 minutes. And it’s likely the second round of tweaks won’t fix the video either and will need another run, which suddenly makes it 9 minutes, or 10 if we round up.

Ten minutes to tweak the video twice and see the results. Worse, this is ten minutes of interrupted time because I would need to catch the preview and stop the run part way. And I can’t just walk away and do something else because three minutes isn’t long to switch to doing other tasks, so I’d end up just waiting around during that time. But I would almost certainly have to do a few rounds of value tweaking and follow-on fixes, so the time just keeps adding on in these 10 minute chunks. It easily turns into 30 minutes, and 30 minutes turns into a whole hour, and so on. All the while, it’s time where I’m mostly waiting around to see early results and then running the generation again after updating some values.
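The time arithmetic above generalizes to a rough estimate. Every attempt cancelled at the preview costs about 3 minutes, and every run allowed to finish costs the full 7-8 minutes. The sketch below uses those figures, with 7.5 minutes as an assumed midpoint of that range. `refinement_minutes` is a hypothetical helper.

```python
PREVIEW_MIN = 3.0   # minutes until the preview appears
FULL_RUN_MIN = 7.5  # minutes for a complete run (assumed midpoint of 7-8)


def refinement_minutes(cancelled: int, completed: int = 0) -> float:
    """Rough wall-clock minutes for a refinement session."""
    return cancelled * PREVIEW_MIN + completed * FULL_RUN_MIN


print(refinement_minutes(3))               # 9.0
print(refinement_minutes(6, completed=2))  # 33.0
```

Even a modest session of six cancelled previews and two finished runs lands past the half-hour mark, which matches the experience described above.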

For these reasons, I don't have anything concrete to mention in this part. Be prepared to just keep generating videos until you somehow end up with a seed that works, and expect the process to take a lot of time.

The most basic advice I can provide for this step:

Set the seed to “fixed” and make small, single changes.

It’s the same deal with images. The noise seed can be very unpredictable and could result in significantly different animations. Controlling this value will let you see the results of changing prompts and LoRAs more easily and possibly help to finetune a video.

Save copies of the better looking videos.

If you chance upon a good video, keep it because it might actually be the best one you could generate. Otherwise, you also have the option of loading it to work off of. Think of each generated video as a checkpoint or save point you can go back to.

It helps to treat refining a video less like cleaning up a draft step by step into an increasingly polished result, and more like generating several copies and gradually picking the least bad-looking of the batch.

Be ready to go back and change your starting frame.

Sometimes the start frame you have just isn’t working out for whatever reason. In that case, it’s perfectly acceptable to go back and update that image. For example, there might be a feature in the image that doesn’t look good when animated, so you need to get rid of it. Or you might need to change something about the character to make it easier for Wan or your LoRAs to detect and animate.

It isn’t just about making small changes, either. Sometimes your idea might simply not be doable, and you need to cut your losses. Other times, your idea might not be as interesting as you thought once animated, but you might have accidentally stumbled on something more interesting. Be ready to embrace some spur-of-the-moment randomness or sudden inspiration.

Know when to stop.

This ties in with the previous two points. At some point, you may hit a wall where your videos just aren’t getting any better no matter how much you tweak your prompt or LoRAs. Either you just haven’t been able to generate something better than your existing best attempt, or the current starting frame just isn’t working out no matter what you prompt.

Stubbornness can be good and admirable, but don’t let yourself get stuck for hours with no results to show and no progress. Know when to cut your losses and change your approach to something else entirely.

Final remarks

So there you have it: a somewhat lengthy guide to, or explanation of, how I generate my videos. To be honest, most of the steps outlined aren’t complicated at all. I’d go as far as to say it’s way easier than most people may expect. If somebody reading this is shocked by how easy it sounds, then I will consider my task complete.

With all this said and done, we can now move on to the conclusion.

Why am I quitting?

Simply put, I just stopped enjoying it.

My lack of enjoyment comes from feeling like I have no ownership or control over the creative process. Every essential part of generating content with AI felt like it belonged to somebody else. The workflows, models, LoRAs, etc. were all made by other people, and all I was contributing were the ideas and a finger to press the Run button. I used to think being able to contribute ideas still meant a lot, but it meant shockingly little to me the more I did it.

There was novelty at the beginning in seeing some of the ideas in my head come to life so easily. But over time, it started feeling really empty. It felt like I wasn’t doing anything at all and was just letting the models do their thing. Especially when the output didn’t quite match what I had in mind, I was often unable to corral the model into making exactly that. Any input I could provide felt minimal at best. It felt less like I was part of an iterative process and more like I was just receiving whatever somebody else handed me.

The process feels more like rolling a gacha and being at the mercy of RNG than real creative involvement. And it only gets worse when I consider the amount of time I’d spend trying to fix or refine a video. I touched on it in the previous section, but the constant back-and-forth of rendering a preview, tweaking values, and rendering another preview takes time, and that time adds up in chunks of 10 minutes or more, easily reaching an hour before I know it.

I’m essentially burning my hours waiting for a model to decide when it wants to give me a good result, with nothing to do on my part because my input just doesn’t matter. It makes me question why I’m spending this much time not having any direct control over the direction of my work.

After having done this for about two months, I’ve realized this just isn’t the kind of creativity I want to pursue. I feel too disengaged and uninvolved to feel any sense of fulfilment. I’m sure there are ways to make myself more involved and to lessen the role of RNG, such as using more advanced tools like ControlNet, building better workflows, or even training my own models and LoRAs, but this just isn’t the kind of creativity I personally want.

So for the sake of my creative needs and my time, I’ve decided the only viable way forward is to stop and leave this hobby behind.

The immediate future

I won’t be uploading any new content going forward. Because Civitai broke playback on some of my older videos, I will gradually reupload them to see if that fixes them, and I hope they keep working for long enough. I will also make their prompts public. While I’m doing this, I might also go through each post and write a short description of the video and my thoughts on it. I have a surprising amount to say about them that I want to get off my chest, mostly good reflections and fun thoughts, because I’m an overly sentimental sucker like that.

I should also stress that I will not be migrating to any other platforms, like tensor.art or PixAI. Neither is suitable for me: they only allow adding video content generated on their own platforms, and I’d rather run on my own hardware than pay for a subscription. I also don’t have any intention of posting on Twitter. Regardless, switching platforms does nothing to address my reasons for stopping.

Actual final remarks

I want to thank you if you have made it all the way to the end. I also want to thank you even if you jumped straight to my reasons for quitting out of curiosity. I appreciate you for taking the time to read my many words.

I want to express my gratitude to all the talented creators of the workflows, models, and LoRAs I used these last two months. None of my creations would have been possible if not for your hard work.

And I also want to thank all my followers. At the time of writing this, there are over 450 of you, and I have been genuinely surprised that there are this many in just two months. I have no idea who all of you are or how you ended up finding my content. But I’m glad you were here, and it means a lot that there are people out there who enjoyed the things I created.

Take care. Create and do what makes you happy.